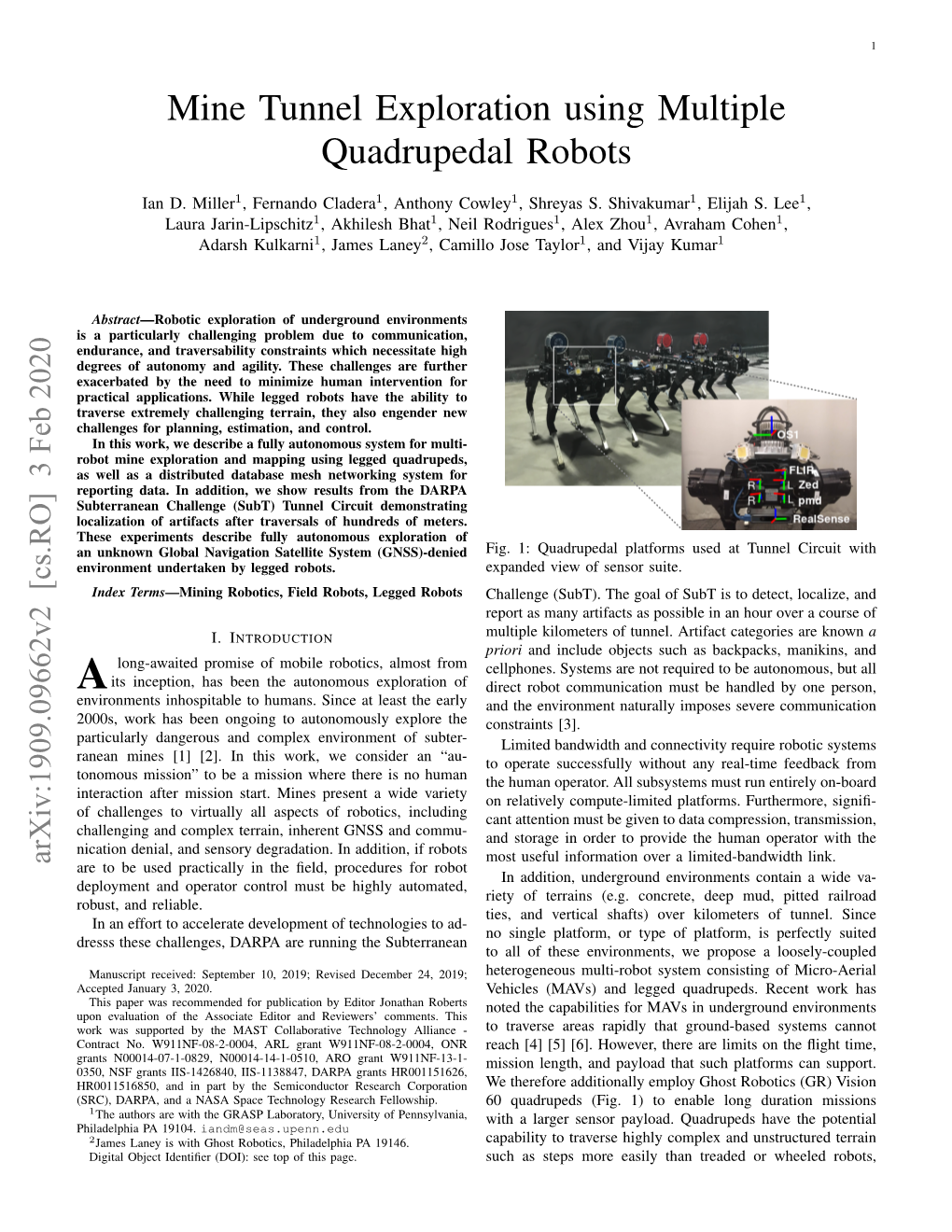

Mine Tunnel Exploration Using Multiple Quadrupedal Robots

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Autonomous Exploration and Mapping of Abandoned Mines

Autonomous Exploration and Mapping of Abandoned Mines Sebastian Thrun2, Scott Thayer1, William Whittaker1, Christopher Baker1, Wolfram Burgard3, David Ferguson1, Dirk Hähnel3, Michael Montemerlo2, Aaron Morris1, Zachary Omohundro1, Charlie Reverte1, Warren Whittaker1 1 Robotics Institute, Carnegie Mellon University, Pittsburgh, PA 2 Computer Science Department, Stanford University, Stanford, CA 3 Computer Science Department, University of Freiburg, Freiburg, Germany Abstract Abandoned mines pose significant threats to society, yet a large fraction of them lack accurate maps. This article discusses the software architecture of an autonomous robotic system designed to explore and map abandoned mines. We have built a robot capable of autonomously exploring abandoned mines. A new set of software tools is presented, enabling robots to acquire maps of unprecedented size and accuracy. On May 30, 2003, our robot “Groundhog” successfully explored and mapped a main corridor of the abandoned Mathies mine near Courtney, PA. The article also discusses some of the challenges that arise in subterranean environments, and some of the difficulties of building truly autonomous robots. 1 Introduction In recent years, the quest to find and explore new, unexplored terrain has led to the deployment of more and more sophisticated robotic systems, designed to traverse increasingly remote locations. Robotic systems have successfully explored volcanoes [5], searched for meteorites in Antarctica [1, 44], traversed deserts [3], explored and mapped the sea bed [12], and even explored other planets [26]. This article presents a robot system designed to explore spaces much closer to us: abandoned underground mines. According to a recent survey [6], “tens of thousands, perhaps even hundreds of thousands, of abandoned mines exist today in the United States. -

Treatment of Disused Lead Mine Shafts

Cover: The many surviving lead mining shafts and sites of Derbyshire and the Peak District feature a wide variety of conservation interests. As shown here (top left – David Webb) shafts provide access for cave exploration, though a safe method of access may need to be provided as shown here (bottom right – Ian Gates). On the surface there is archaeological interest in the waste hillocks and other structures (top right – John Humble) and specialist plant communities survive that include metal tolerant species such as this spring sandwort (bottom left – John Humble). Shafts were often lined with a dry stone walling known as ginging (centre right – David Webb) which can be vulnerable to damage when they are capped. Report prepared by Entec UK Ltd for Derbyshire County Council, Peak District National Park Authority, Natural England, English Heritage and Derbyshire Caving Association. Funded by the above organisations and East Midlands Development Agency. Copyright ©2007 Derbyshire County Council, Peak District National Park Authority, Natural England, English Heritage and Derbyshire Caving Association. Images ©2007 Derbyshire County Council, Peak District National Park Authority, Natural England, English Heritage and Derbyshire Caving Association unless otherwise stated. First published 2007. All rights reserved. No part of this report may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, or any information storage or retrieval system, without permission in writing from the copyright holders. Designed by Entec Ltd. I Foreword The lead mining remains of Derbyshire and the Peak District are of national importance for their ecological, archaeological, historical, geological and landscape value, as well as providing opportunities for recreation and enjoyment which are valued by many people. -

Northern Paiute and Western Shoshone Land Use in Northern Nevada: a Class I Ethnographic/Ethnohistoric Overview

U.S. DEPARTMENT OF THE INTERIOR Bureau of Land Management NEVADA NORTHERN PAIUTE AND WESTERN SHOSHONE LAND USE IN NORTHERN NEVADA: A CLASS I ETHNOGRAPHIC/ETHNOHISTORIC OVERVIEW Ginny Bengston CULTURAL RESOURCE SERIES NO. 12 2003 SWCA ENVIRONMENTAL CONSULTANTS NORTHERN PAIUTE AND WESTERN SHOSHONE LAND USE IN NORTHERN NEVADA: A CLASS I ETHNOGRAPHIC/ETHNOHISTORIC OVERVIEW Submitted to BUREAU OF LAND MANAGEMENT Nevada State Office 1340 Financial Boulevard Reno, Nevada 89520-0008 Submitted by SWCA, INC. Environmental Consultants 5370 Kietzke Lane, Suite 205 Reno, Nevada 89511 (775) 826-1700 Prepared by Ginny Bengston SWCA Cultural Resources Report No. 02-551 December 16, 2002 TABLE OF CONTENTS: List of Figures; List of Tables; List of Appendixes; CHAPTER 1. INTRODUCTION; CHAPTER 2. ETHNOGRAPHIC OVERVIEW; Northern Paiute; Habitation Patterns; Subsistence; Burial Practices -

Derbyshire Caver No

Derbyshire Caver No. 113 The newsletter of the DERBYSHIRE CAVING ASSOCIATION THE DERBYSHIRE CAVER SPRING 2002 No.113 EDITOR: Alan Keen, 130, Whitehill Road, Ellistown Coalville, Leicester, LE67 1EP TEL: 01530-264199, Mobile: 07967979081, E-MAIL: [email protected] COPY DATE FOR THE NEXT ISSUE IS 25th MAY 2002 Material for inclusion can be sent hand-written, phoned in, sent on disk (Word format if possible & Jpeg pictures) or e-mailed. Subscription to this publication is £7 for four issues, see back page for details. The views expressed in this publication are not necessarily those of the Editor or of the DCA. The DCA website is at www.theDCA.org.uk Cover Picture: Admiring stemples in Odin Mine, Castleton. Photo by Anthony Botham CONTENTS PAGE PAGE 1 Jug Holes shaft restoration project 7 Clean ups/Eco-Hangers & Ropes 3 Mandale Mine 9 DCRO callout reports and information Peak Cavern round-up 10 Going Quackers! 4 DCA policy on CroW 2000 11 Late News: Peak Season extended 6 Jug Holes/Hillocks/F&M news Dowsing in the Hamps & Manifold DAVE EDWARDS & ASSOCIATES OUTDOOR ADVENTURE ACTIVITY PROVIDER & CONSULTANT 1 Sycamore Barn, Off Main Road, Taddington, Buxton, DERBYSHIRE, SK17 9TR Tel/Fax. 01298 85375 Mobile 07808 181E0r E-mail. [email protected] Website. www.dave.edwardsandassociates.net CAVING TECHNICAL SKILLS TRAINING TO THE HIGHEST PROFESSIONAL STANDARD FOR INDIVIDUALS, GROUPS AND CLUBS. SRT, RIGGING & SELF RESCUE LADDER AND LIFELINE CLASSIC CAVING COURSES (in the UK & France) TRAINING AND ASSESSMENT FOR THE CIC AND LCMLA SCHEMES Just give me a call to discuss your training requirements. -

TITLE PAGE.Wpd

Proceedings of BAT GATE DESIGN: A TECHNICAL INTERACTIVE FORUM Held at Red Lion Hotel Austin, Texas March 4-6, 2002 BAT CONSERVATION INTERNATIONAL Edited by: Kimery C. Vories Dianne Throgmorton Proceedings of Bat Gate Design: A Technical Interactive Forum Proceedings of Bat Gate Design: A Technical Interactive Forum held March 4-6, 2002 at the Red Lion Hotel, Austin, Texas Edited by: Kimery C. Vories Dianne Throgmorton Published by U.S. Department of Interior, Office of Surface Mining, Alton, Illinois and Coal Research Center, Southern Illinois University, Carbondale, Illinois U.S. Department of Interior, Office of Surface Mining, Alton, Illinois Coal Research Center, Southern Illinois University, Carbondale, Illinois Copyright 2002 by the Office of Surface Mining. All rights reserved. Printed in the United States of America 8 7 6 5 4 3 2 1 Library of Congress Cataloging-in-Publication Data Bat Gate Design: A Technical Interactive Forum (2002: Austin, Texas) Proceedings of Bat Gate Design: Red Lion Hotel, Austin, Texas, March 4-6, 2002/ edited by Kimery C. Vories, Dianne Throgmorton; sponsored by U.S. Dept. of the Interior, Office of Surface Mining and Fish and Wildlife Service, Bat Conservation International, the National Cave and Karst Management Symposium, USDA Natural Resources Conservation Service, the National Speleological Society, Texas Parks and Wildlife, the Lower Colorado River Authority, the Indiana Karst Conservancy, and Coal Research Center, Southern Illinois University at Carbondale. p. cm. Includes bibliographical references. ISBN 1-885189-05-2 1. Bat Conservation--United States Congresses. 2. Bat Gate Design--United States Congresses. 3. Cave Management--United States Congresses. 4. Strip mining--Environmental aspects--United States Congresses. -

Idaho Mining & Exploration 2004

Idaho Mining & Exploration 2004 Virginia S. Gillerman Idaho Geological Survey Idaho Non-fuel Mineral Production (USGS Data) 2003: $ 268.76 million Industrials = 70.5% [Chart: production in million $ by year, 1998 to 2003; series: Con. S&G, Silver, Total] HEADLINE NEWS: December 2, 2004 SILVER NEAR $ 8 an ounce !! Gold hits new 16-year highs Peaks near $ 460 an ounce !! Plus Mo, Co, Cu, building stone prices also on the rise !! Over 1.1 billion ounces of silver since 1880’s Hecla - Lucky Friday Mine 2003: 2.25 million oz. Ag, + Pb, Zn 2004: $ 8.5 million development drift from 5900 level Silver Shaft to Gold Hunter ore body Added 50+ employees this year Mullan, Idaho Lucky Friday: 2004 5900 Drift - 4000’ of 4800’ completed 8 DDH to test ore on 5600 and 5900 levels Extended known strike of Gold Hunter veins Expand by end 2005 Lucky Friday mine Hecla – Mining sets of narrower veins in Gold Hunter system as 30” slusher stopes Miner Walt Lambott – typical of Hecla’s very experienced and hard working employees Coeur d’Alene Mines – Galena Mine 2003: 3.7 million ounces Ag; 2004 about 3.5 million Mining 72 vein from 5200 to 5700 levels, Upper Silver vein and 117 vein Major Development Project Ongoing Galena mine – replacing reserves Mucking Waste, 2400 level 72 vein in Polaris Fault, 5500 level Galena Mine: Development & Exploration Drifting to west on 3400 level towards 117 and 215 veins Polaris Fault and 72 vein below 5500 Drilled 40,000 feet of core this year Sterling Mining – Sunshine Mine Closed in 2001 after -

What's the Deal with Tintina Resources and Its Proposed Black

What’s the Deal With Tintina Resources and its Proposed Black Butte Mine? Montana Trout Unlimited July 2014 Who is Tintina Resources? Tintina is a little-known, fairly new Vancouver, Canada, mine exploration company. It has never operated a mine. Four of its six board members are Canadians. The other two board members are Americans who represent large hedge funds that together own more than half the shares in the company. The company’s two main front people are Meagher County natives -- its vice president of exploration, and its public relations staffer. Though the company touts its relationship with both as proof it cares about the local environment, neither individual is important enough to be on the board of directors. Tintina’s cash and assets, to this point, are fairly limited. It will probably need a large partner to operate a mine. Or, it will sell its interests to an established mining company once it has added value by further characterizing its potential ore reserves, or by obtaining mining permits. In June, an Australian copper mining company was in Montana investigating potential purchase of the Black Butte Mine project. What is Tintina Proposing? Tintina proposes to construct a large underground mine primarily for copper on private property near main Sheep Creek and its South Fork, in the upper Smith River drainage. Though it has not submitted specific mine plans to date, the conceptual plans the company has been using to sell the project indicate that its footprint – tailings (waste material), waste rock, crushing facilities, discharge areas, potential water treatment plant, roads, etc. – could take up a considerable amount of the 7,500 private acres it is leasing. -

Imperial Metals Corporation Annual Information Form for the Year Ended December 31, 2020

Imperial Metals Corporation Annual Information Form For the Year Ended December 31, 2020 March 29, 2021 Imperial Metals Corporation | 2020 Annual Information Form Table of Contents: Content Information; Company Business & Corporate Structure; General Development & Outlook; Mineral Properties | Red Chris Mine; Mineral Properties | Mount Polley Mine; Mineral Properties | Huckleberry Mine; Other Properties; Dividends and Distributions; Capital Structure; Market for Securities; Directors & Executive Officers; Corporate Cease Trade Orders or Bankruptcies; Conflicts of Interest; Interest of Management & Others in Material Transactions; Material Contracts; Legal Proceedings; Transfer Agent & Registrar; Interests of Experts; Additional Information. Content Information All references in this Annual Information Form (“AIF”) to “Imperial”, “Company”, “we” and “our” apply collectively to Imperial Metals Corporation and its subsidiaries. Cautionary Note Regarding Forward-Looking Information This AIF provides material information about Imperial Metals Corporation and its business, operations and developments for the year ended December 31, 2020, and plans for the future based on facts and circumstances as at March 29, 2021. Except for statements of historical fact relating to -

St Ives Gold Mine Technical Short Form Report 31 December 2012 1

St Ives Gold Mine Technical Short Form Report 31 December 2012 1. Overview The St Ives Gold Mining Company (Proprietary) Limited (St Ives) is wholly owned by Gold Fields Limited and is situated some 80 kilometres south of Kalgoorlie. The St Ives operations are conducted within mining tenements comprising 277 mining titles (54,749 hectares), three mineral titles (364 hectares), 13 exploration licences (27,192 hectares), 22 prospecting licences (2,700 hectares) and 19 miscellaneous licences (14,500 hectares) for a total area of approximately 99,594 hectares. St Ives has security of tenure for all current mining titles and exploration licences that contribute to the Mineral Reserves. St Ives represents a solid base for growth in Australia and is an important contributor to the Gold Fields’ vision of being “the global leader in sustainable gold mining” with a target contribution from the Australasia Region of approximately 0.7 Moz per annum by 2015. St Ives currently operates four underground mines accessed via declines and several open pits, a centralised administrative office, an engineering workshop and CIP processing plant. Declared Mineral Resources at St Ives decreased by 490 koz primarily due to depletion and closure of the Leviathan, Formidable, Dianna, Minotaur and Britannia Footwall pits during 2012. Salient features: Mineral Resources at 4.7 Moz; Mineral Reserves at 2.2 Moz; high-cost heap leach operation closed; highly prospective tenement delivered new exploration camp and Mineral Resources at Invincible; Neptune Mineral Resources and Mineral Reserves continue to grow, detailed technical planning initiated; Hamlet well on track to achieve full production in 2013; Life of Mine extends to 2018 (6 years). -

Guidance and Navigation Software for Autonomous Underwater Explorer of Flooded Mines Zorana Milošević, Ramon A

Guidance and Navigation Software for Autonomous Underwater Explorer of Flooded Mines Zorana Milošević, Ramon A. Suarez Fernandez, Sergio Dominguez, Claudio Rossi Centre for Automation and Robotics UPM-CSIC, Universidad Politécnica de Madrid, Calle de José Gutiérrez Abascal, 2, 28006, Madrid, Spain, [email protected] Abstract There are a vast number of abandoned old flooded mines in Europe, with inadequate information on their current status. Exploring and surveying such mines is both unfeasible and hazardous for human divers. UX-1 is an autonomous robotic platform for survey and exploration of such mines. In this work, we present the design, implementation and testing of the guidance and navigation system of UX-1. The system has been tested using software-in-the-loop and hardware-in-the-loop techniques, and in a real mine environment. Keywords: underwater robots, flooded mines, mine exploration Introduction The total number of flooded mines in Europe is unknown. The estimations range from 5000 up to 30000. Many of such mines may still have considerable amounts of raw materials and minerals that were disregarded during the operational lifetime of the mines due to low commercial value that made their further exploitation economically unfeasible. An example of these materials is fluorite, which until recently was considered a waste mineral in many lead-zinc mines but now is on the EU list of critical raw materials (European … Additionally, the depth of those mines poses severe limitations on the possibilities of their exploration, since human cave diving can be dangerous or even lethal in harsh deep mine conditions. For the above mentioned reasons, robotics imposes itself as a good solution for a non-invasive flooded mine exploration. By using an autonomous robotic system for gathering valuable geoscientific and spatial data, the strategic decisions on re-opening abandoned mines can be supported by real data which cannot be gathered in any other way. -

Operations and Projects

2 Operations and projects: 2.1 Americas region (Cerro Corona and projects – Salares Norte and Woodjam); 2.2 Australia region (Agnew, Darlot, Granny Smith, St Ives plus FSE Project); 2.3 South Africa region (South Deep); 2.4 West Africa region (Damang and Tarkwa); 2.5 Corporate Development project (Arctic Platinum (APP)). 2.1 Americas region The Americas region is anchored by the standout Cerro Corona mine delivering excellent free cash flow margins and the Salares Norte exploration project continues to show favourable results. Cerro Corona (Peru) The Americas region currently accounts for 3% of the gold Mineral Resource and 3% of the gold Mineral Reserve base, excluding growth projects. Operations (Cerro Corona): Mineral Resources 3.0Moz gold and 1,006Mlb copper; Mineral Reserves 1.8Moz gold and 623Mlb copper. The Gold Fields Mineral Resource and Mineral Reserve Supplement to the Integrated Annual Report 31 December 2014 REGIONS, OPERATIONS AND PROJECTS 17 Strong anchor point for cementing cash flow and growth within the region; two new jaw crushers installed to facilitate SAG mill throughput rates as rock hardness increases; prefeasibility work in progress to assess the potential to add additional reserves based on TSF expansion and WSF capacity opportunities; options to process the oxide stockpiles are being investigated; 2,572m of infill-drilling and 66km of re-logging were completed in 2014, increasing knowledge of the ore body at depth (hypogene alteration, clay, density, alteration, hardness and silicification), which will enhance the 2015 Mineral Resource model; Life-of-Mine extends to 2023 (nine years); 99.5% attributable to Gold Fields. Projects (Mineral Resources only): WOODJAM (CANADA) 0.6Moz gold and 1,705Mlb copper, 51% attributable to Gold Fields; SALARES NORTE (CHILE) 3.1Moz gold and 33.6Moz silver, 100% attributable to Gold Fields. Operational profile (Cerro Corona) Mining method Open-pit Infrastructure One open-pit. -

Journal of Cave and Karst Studies

September 2017 Volume 79, Number 2 ISSN 1090-6924 JOURNAL OF CAVE AND KARST STUDIES A Publication of the National Speleological Society DEDICATED TO THE ADVANCEMENT OF SCIENCE, EDUCATION, EXPLORATION, AND CONSERVATION Published By The National Speleological Society http://caves.org/pub/journal Office 6001 Pulaski Pike NW Huntsville, AL 35810 USA Editorial EDITORIAL: CHANGES TO THE JOURNAL OF CAVE AND KARST STUDIES Scott A. Engel Production Editor In 2011, the Journal of Cave and Karst Studies began transitioning to an electronic distribution process. While not all readership agreed with this change, it was done, in part, to address budgetary limitations within the National Speleological Society. But more importantly, it was done to conform to similar changes being made by peer publications and across the publication industry. As predicted, in the six years since we made this transition, the movement away from print distribution and toward electronic-only distribution has continued to expand and evolve across the scientific community and the globe. In that respect, our experiment has been a success, and it is increasingly apparent that a return to full print distribution is unlikely and unnecessary. With that in mind, this issue represents the next evolution of the Journal as we continue to modernize our production process. As you look through this issue, you will find that we have made two major changes to improve the Journal. A brief description of the changes and the reasons behind them is presented below. Publication Schedule By transitioning to a predominantly electronic distribution, we have been able to control and reduce costs incurred by the National Speleological Society to produce and distribute individual issues of the Journal.