5.5 The Smart Search Engine

Recommended publications

Why We Need an Independent Index of the Web, Dirk Lewandowski

Politics of Search Engines. René König and Miriam Rasch (eds), Society of the Query Reader: Reflections on Web Search, Amsterdam: Institute of Network Cultures, 2014. ISBN: 978-90-818575-8-1. Why We Need an Independent Index of the Web, Dirk Lewandowski. Search engine indexes function as a ‘local copy of the web’ [1], forming the foundation of every search engine. Search engines need to look for new documents constantly, detect changes made to existing documents, and remove documents from the index when they are no longer available on the web. When one considers that the web comprises many billions of documents that are constantly changing, the challenge search engines face becomes clear. It is impossible to maintain a perfectly complete and current index [2]. The pool of data changes thousands of times each second. No search engine can keep up with this rapid pace of change [3]. The ‘local copy of the web’ can thus be viewed as the Holy Grail of web indexing at best; in practice, different search engines will always attain a varied degree of success in pursuing this goal [4]. Search engines do not merely capture the text of the documents they find (as is often falsely assumed). They also generate complex replicas of the documents. These representations include, for instance, information on the popularity of the document (measured by the number of times it is accessed or how many links to the document exist on the web), information extracted from the documents (for example the name of the author or the date the document was created), and an alternative text-based ...
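
The three maintenance tasks named in the excerpt above (finding new documents, detecting changes, removing documents that are no longer available) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `refresh_index`, the dictionary layout, and the use of a SHA-256 content hash for change detection are hypothetical choices, not something the excerpt describes.

```python
import hashlib


def refresh_index(index, crawl_snapshot):
    """Update a toy index in place from one crawl pass.

    index:          dict mapping url -> {"hash": str, "text": str}
    crawl_snapshot: dict mapping url -> page text, or None when the
                    page could not be fetched (assumed to mean "gone")
    Returns three lists of URLs: (added, changed, removed).
    """
    added, changed, removed = [], [], []
    for url, text in crawl_snapshot.items():
        if text is None:
            # Document is no longer available: drop it from the index.
            if url in index:
                del index[url]
                removed.append(url)
            continue
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        entry = index.get(url)
        if entry is None:
            added.append(url)        # new document
        elif entry["hash"] != digest:
            changed.append(url)      # existing document was modified
        else:
            continue                 # unchanged, nothing to store
        index[url] = {"hash": digest, "text": text}
    return added, changed, removed
```

A real crawler never sees the whole web in one snapshot, which is why the excerpt argues that a complete and current index is out of reach; the sketch only shows the bookkeeping for the pages that were revisited.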

Distributed Indexing/Searching Workshop Agenda, Attendee List, and Position Papers

Distributed Indexing/Searching Workshop Agenda, Attendee List, and Position Papers. Held May 28-29, 1996 in Cambridge, Massachusetts. Sponsored by the World Wide Web Consortium. Workshop co-chairs: Michael Schwartz, @Home Network; Mic Bowman, Transarc Corp. This workshop brings together a cross-section of people concerned with distributed indexing and searching, to explore areas of common concern where standards might be defined. The Call For Participation (http://www.w3.org/pub/WWW/Search/960528/cfp.html) suggested particular focus on repository interfaces that support efficient and powerful distributed indexing and searching. There was a great deal of interest in this workshop. Because of our desire to limit attendance to a workable size group while maximizing breadth of attendee backgrounds, we limited attendance to one person per position paper, and furthermore we limited attendance to one person per institution. In some cases, attendees submitted multiple position papers, with the intention of discussing each of their projects or ideas that were relevant to the workshop. We had not anticipated this interpretation of the Call For Participation; our intention was to use the position papers to select participants, not to provide a forum for enumerating ideas and projects. As a compromise, we decided to choose among the submitted papers and allow multiple position papers per person and per institution, but to restrict attendance as noted above. Hence, in the paper list below there are some cases where one author or institution has multiple position papers. Agenda: The Distributed Indexing/Searching Workshop will span two days. The first day's goal is to identify areas for potential standardization through several directed discussion sessions.

Natural Language Processing Technique for Information Extraction and Analysis

International Journal of Research Studies in Computer Science and Engineering (IJRSCSE) Volume 2, Issue 8, August 2015, PP 32-40 ISSN 2349-4840 (Print) & ISSN 2349-4859 (Online) www.arcjournals.org Natural Language Processing Technique for Information Extraction and Analysis T. Sri Sravya1, T. Sudha2, M. Soumya Harika3 1 M.Tech (C.S.E) Sri Padmavati Mahila Visvavidyalayam (Women’s University), School of Engineering and Technology, Tirupati. [email protected] 2 Head (I/C) of C.S.E & IT Sri Padmavati Mahila Visvavidyalayam (Women’s University), School of Engineering and Technology, Tirupati. [email protected] 3 M. Tech C.S.E, Assistant Professor, Sri Padmavati Mahila Visvavidyalayam (Women’s University), School of Engineering and Technology, Tirupati. [email protected] Abstract: In the current internet era, there are a large number of systems and sensors which generate data continuously and inform users about their status and the status of devices and surroundings they monitor. Examples include web cameras at traffic intersections, key government installations etc., seismic activity measurement sensors, tsunami early warning systems and many others. A Natural Language Processing based activity, the current project is aimed at extracting entities from data collected from various sources such as social media, internet news articles and other websites and integrating this data into contextual information, providing visualization of this data on a map and further performing co-reference analysis to establish linkage amongst the entities. Keywords: Apache Nutch, Solr, crawling, indexing 1. INTRODUCTION In today’s harsh global business arena, the pace of events has increased rapidly, with technological innovations occurring at ever-increasing speed and considerably shorter life cycles. -

United States Patent 6,094,649, Bowen et al.

US006094649A. United States Patent [19]. Patent Number: 6,094,649. Bowen et al. Date of Patent: Jul. 25, 2000. [54] KEYWORD SEARCHES OF STRUCTURED DATABASES. [75] Inventors: Stephen J Bowen, Sandy; Don R Brown, Salt Lake City, both of Utah. [73] Assignee: PartNet, Inc., Salt Lake City, Utah. [21] Appl. No.: 08/995,700. [22] Filed: Dec. 22, 1997. [51] Int. Cl.: G06F 17/30. [52] U.S. Cl.: 707/3; 707/5; 707/4. [58] Field of Search: 707/1, 2, 3, 4, 5, 531, 532, 500. [56] References Cited, U.S. PATENT DOCUMENTS: 5,375,235 12/1994 Berry et al.; 5,469,354 11/1995 Hatakeyama et al. 707/3; 5,546,578 8/1996 Takada 707/5; 5,685,003 11/1997 Peltonen et al. 707/531; 5,787,295 7/1998 Nakao 707/500; 5,787,421 7/1998 Nomiyama ... Other publications: “Charles Schwab Broadens Deployment of Fulcrum-Based Corporate Knowledge Library Application”, Unknown, Fulcrum Technologies Inc., Mar. 3, 1997, pp. 1-3. (List continued on next page.) Primary Examiner: Hosain T. Alam. Assistant Examiner: Thuy Pardo. Attorney, Agent, or Firm: Computer Law---- [57] ABSTRACT: Methods and systems are provided for supporting keyword searches of data items in a structured database, such as a relational database. Selected data items are retrieved using an SQL query or other mechanism. The retrieved data values are documented using a markup language such as HTML. The documents are indexed using a web crawler or other indexing agent. Data items may be selected for indexing by identifying them in a data dictionary. The indexing agent ...
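
The pipeline outlined in the abstract above (retrieve data items with an SQL query, document them in HTML, then index the documents) might look roughly like the following Python sketch. It is not the patented method: the use of `sqlite3`, the `row-N` document identifiers, the `<dl>` layout, and both function names are assumptions made purely for illustration.

```python
import html
import re
import sqlite3
from collections import defaultdict


def rows_to_html(conn, query):
    """Run an SQL query and wrap each result row in a small HTML document.

    Returns a dict mapping a hypothetical document id ("row-0", ...) to HTML.
    """
    cur = conn.execute(query)
    columns = [d[0] for d in cur.description]
    docs = {}
    for i, row in enumerate(cur.fetchall()):
        cells = "".join(
            f"<dt>{html.escape(col)}</dt><dd>{html.escape(str(val))}</dd>"
            for col, val in zip(columns, row)
        )
        docs[f"row-{i}"] = f"<html><body><dl>{cells}</dl></body></html>"
    return docs


def build_keyword_index(docs):
    """Index the HTML documents by keyword (stand-in for the indexing agent)."""
    index = defaultdict(set)
    for doc_id, markup in docs.items():
        # Strip tags, undo HTML escaping, then split into lowercase words.
        text = html.unescape(re.sub(r"<[^>]+>", " ", markup))
        for word in re.findall(r"[a-z0-9]+", text.lower()):
            index[word].add(doc_id)
    return dict(index)
```

With an in-memory SQLite table of parts, `build_keyword_index(rows_to_html(conn, "SELECT ..."))` yields a mapping from each keyword to the rows that mention it, so a keyword lookup no longer requires the user to know the table schema.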

1.3.4 Web Technologies Concise Notes

OCR Computer Science A Level 1.3.4 Web Technologies Concise Notes www.pmt.education

Specification
1.3.4 a) ● HTML ● CSS ● JavaScript
1.3.4 b) ● Search engine indexing
1.3.4 c) ● PageRank algorithm
1.3.4 d) ● Server and Client side processing

Web Development

HTML
● HTML is the language that web pages are written in.
● It allows a browser to interpret and render a webpage for the viewer by describing the structure and order of the webpage.
● The language uses tags written in angle brackets (<tag>, </tag>). A webpage has two sections, a head and a body.

HTML Tags
● <html>: All code written within these tags is interpreted as HTML.
● <body>: Defines the content in the main browser content area.
● <link>: Used to link to a CSS stylesheet (explained later in the notes).
● <head>: Defines the browser tab or window heading area.
● <title>: Defines the text that appears with the tab or window heading area.
● <h1>, <h2>, <h3>: Heading styles in decreasing sizes.
● <p>: A paragraph separated with a line space above and below.
● <img>: Self-closing image with parameters (img src=location, height=x, width=y).
● <a>: Anchor tag defining a hyperlink with location parameters (<a href=location> link text </a>).
● <ol>: Defines an ordered list.
● <ul>: Defines an unordered list.
● <li>: Defines an individual list item.
● <div>: Creates a division of a page into separate areas, each of which can be referred to uniquely by name (<div id="page">).

Classes and Identifiers
● Class and identifier selectors are the names that you style; this means groups of items can be styled. The selectors for HTML are usually the div tags.

Towards the Ontology Web Search Engine

TOWARDS THE ONTOLOGY WEB SEARCH ENGINE Olegs Verhodubs [email protected] Abstract. The project of the Ontology Web Search Engine is presented in this paper. The main purpose of this paper is to develop such a project that can be easily implemented. Ontology Web Search Engine is software to look for and index ontologies in the Web. OWL (Web Ontology Languages) ontologies are meant, and they are necessary for the functioning of the SWES (Semantic Web Expert System). SWES is an expert system that will use found ontologies from the Web, generating rules from them, and will supplement its knowledge base with these generated rules. It is expected that the SWES will serve as a universal expert system for the average user. Keywords: Ontology Web Search Engine, Search Engine, Crawler, Indexer, Semantic Web I. INTRODUCTION The technological development of the Web during the last few decades has provided us with more information than we can comprehend or manage effectively [1]. Typical uses of the Web involve seeking and making use of information, searching for and getting in touch with other people, reviewing catalogs of online stores and ordering products by filling out forms, and viewing adult material. Keyword-based search engines such as YAHOO, GOOGLE and others are the main tools for using the Web, and they provide with links to relevant pages in the Web. Despite improvements in search engine technology, the difficulties remain essentially the same [2]. Firstly, relevant pages, retrieved by search engines, are useless, if they are distributed among a large number of mildly relevant or irrelevant pages. -

Web-Page Indexing Based on the Prioritize Ontology Terms

Web-page Indexing based on the Prioritize Ontology Terms. Sukanta Sinha (1, 4), Rana Dattagupta (2), Debajyoti Mukhopadhyay (3, 4). (1) Tata Consultancy Services Ltd., Victoria Park Building, Salt Lake, Kolkata 700091, India [email protected] (2) Computer Science Dept., Jadavpur University, Kolkata 700032, India [email protected] (3) Information Technology Dept., Maharashtra Institute of Technology, Pune 411038, India [email protected] (4) WIDiCoReL Research Lab, Green Tower, C-9/1, Golf Green, Kolkata 700095, India. Abstract. In this world, globalization has become a basic and most popular human trend. To globalize information, people are going to publish the documents in the internet. As a result, information volume of internet has become huge. To handle that huge volume of information, Web searcher uses search engines. The Web-page indexing mechanism of a search engine plays a big role to retrieve Web search results in a faster way from the huge volume of Web resources. Web researchers have introduced various types of Web-page indexing mechanism to retrieve Web-pages from Web-page repository. In this paper, we have illustrated a new approach of design and development of Web-page indexing. The proposed Web-page indexing mechanism has applied on domain specific Web-pages and we have identified the Web-page domain based on an Ontology. In our approach, first we prioritize the Ontology terms that exist in the Web-page content then apply our own indexing mechanism to index that Web-page. The main advantage of storing an index is to optimize the speed and performance while finding relevant documents from the domain specific search engine storage area for a user given search query.
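
The abstract above does not say how the ontology terms are prioritized or how the index is laid out, so the following Python sketch fills both gaps with explicit assumptions: each single-word ontology term carries a hypothetical weight, a term's priority on a page is its occurrence count times that weight, and a page is filed under its top-ranked terms. None of these choices is claimed to be the authors' mechanism.

```python
import re
from collections import Counter, defaultdict


def prioritize_terms(text, ontology_weights):
    """Rank the ontology terms that occur in a page.

    ontology_weights: dict mapping a single-word term -> weight (assumed).
    Returns [(term, score), ...] sorted by descending score, then by term.
    """
    counts = Counter(re.findall(r"[a-z]+", text.lower()))
    scored = [
        (term, counts[term] * weight)
        for term, weight in ontology_weights.items()
        if counts[term] > 0
    ]
    scored.sort(key=lambda pair: (-pair[1], pair[0]))
    return scored


def index_page(index, url, text, ontology_weights, top_k=2):
    """File the page under its top_k highest-priority ontology terms."""
    for term, score in prioritize_terms(text, ontology_weights)[:top_k]:
        index[term].append((score, url))
        index[term].sort(reverse=True)  # best-scoring pages first
```

Here `index` is expected to be a `defaultdict(list)`. Because pages are stored only under a few dominant domain terms, a query that maps to one of those terms can be answered by reading a single short, pre-sorted list, which is the speed advantage the abstract points to.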

Exploring Search Engine Optimization (SEO) Techniques for Dynamic Websites

Master Thesis Computer Science. Thesis no: MCS-2011-10. March, 2011. Exploring Search Engine Optimization (SEO) Techniques for Dynamic Websites. Wasfa Kanwal. School of Computing, Blekinge Institute of Technology, SE – 371 39 Karlskrona, Sweden. This thesis is submitted to the School of Computing at Blekinge Institute of Technology in partial fulfillment of the requirements for the degree of Master of Science in Computer Science. The thesis is equivalent to 20 weeks of full time studies. Contact Information: Author: Wasfa Kanwal, E-mail: [email protected] University advisor: Martin Boldt, PhD., School of Computing. School of Computing, Blekinge Institute of Technology, SE – 371 39 Karlskrona, Sweden. Internet: www.bth.se/com Phone: +46 455 38 50 00 Fax: +46 455 38 50 57. ABSTRACT. Context: With growing number of online businesses, Search Engine Optimization (SEO) has become vital to capitalize a business because SEO is key factor for marketing an online business. SEO is the process to optimize a website so that it ranks well on Search Engine Result Pages (SERPs). Dynamic websites are commonly used for e-commerce because they are easier to update and expand; however they are subjected to indexing related problems. Objectives: This research aims to examine and address dynamic websites indexing related issues. To achieve aims and objectives of this research I intend to explore dynamic websites indexing considerations, investigate SEO tools to carry SEO campaign in three major search engines (Google, Yahoo and Bing), experiment SEO techniques, and determine to what extent dynamic websites can be made search engine friendly on these major search engines.

Check out Our Initial Report Example Here

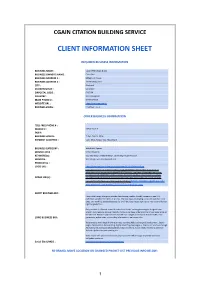

CGAIN CITATION BUILDING SERVICE CLIENT INFORMATION SHEET

REQUIRED BUSINESS INFORMATION
BUSINESS NAME : CGain Web Design & SEO
BUSINESS OWNER'S NAME : Chris Giles
BUSINESS ADDRESS 1 : Millennium House
BUSINESS ADDRESS 2 : 161 Mowbray Drive
CITY : Blackpool
STATE/PROVINCE : Lancashire
ZIP/POSTAL CODE : FY3 7UN
COUNTRY : United Kingdom
MAIN PHONE # : 01253 675593
WEBSITE URL : https://www.cgain.co.uk
BUSINESS EMAIL : [email protected]

OTHER BUSINESS INFORMATION
TOLL FREE PHONE # :
MOBILE # : 07518 931616
FAX # :
BUSINESS HOURS : 7 days, 9am to 10pm
PAYMENT ACCEPTED : Cash, BACS, Paypal, Visa, Mastercard
BUSINESS CATEGORY : Web & SEO Agency
SERVICE AREA : United Kingdom
KEYWORD(s) : seo, web design, website design, search engine optimisation
SERVICES : Web design, web development, seo
PRODUCT(s) :
LOGO URL : https://www.cgain.co.uk/wp-content/uploads/2019/10/650x520.jpg
IMAGE URL(s) : https://www.cgain.co.uk/wp-content/uploads/2020/06/owllegal-website.jpg, https://www.cgain.co.uk/wp-content/uploads/2020/06/weelz_.jpg, https://www.cgain.co.uk/wp-content/uploads/2020/06/mobility-scooters-blackpool.jpg, https://www.cgain.co.uk/wp-content/uploads/2020/06/quickcrepesburnley.jpg, https://www.cgain.co.uk/wp-content/uploads/2019/03/fleet-equestrian-website-2018.jpg, https://www.cgain.co.uk/wp-content/uploads/2019/03/magellan.jpg
SHORT BUSINESS BIO : CGain Web Design Blackpool provide class-leading, mobile-friendly, responsive and fully optimised websites for clients of all sizes. We have been developing successful websites since 1995. Our work has covered websites for small local businesses right up to international human rights organisations. Every website is different, honed to reflect our clients’ existing branding or designed from scratch.

Google Bing Facebook Findopen Foursquare

The Network. Google. Active Monthly Users: 1B+. Today's world of smartphones, mobile moments, and self-driving cars demands accurate location data more than ever. And no single search, maps, and apps provider is more important to your location marketing strategy than Google. With the Yext Location Manager, you can manage your location data on Google My Business, the tool through which businesses can supply data to Google Search, Google Maps, and Google+. Bing. Active Monthly Users: 150M+. With more than 20% of search market share in the US and rapidly increasing traction worldwide, Bing is an essential piece of the local ecosystem. More than 150 million users search for local businesses and services on Bing every month. Beginning in 2016, Bing will also power search results across the AOL portfolio of sites, including Huffington Post, Engadget, and TechCrunch. Facebook. Active Monthly Users: 1.35B. Facebook is the world’s biggest social network with more than 2 billion users. Yext Sync for Facebook makes it easy to manage accurate and up-to-date contact information, photos, messages and more. FindOpen. FindOpen is one of the world’s leading online business directories and helps consumers find the critical information they need to know about the businesses they’d like to visit, like hours of operation, holiday hours, special hours, menus, product and service lists, and more. FindOpen (also known as FindeOffen, TrovaAperto, TrouverOuvert, VindOpen, nyitva.hu, EncuentreAbierto, AussieHours, FindAaben, TeraZOtwarte, HittaÖppna, and deschis.ro in the many countries it operates) provides a fully responsive experience, no matter where its global users are searching from.

Awareness Watch™ Newsletter by Marcus P

Awareness Watch™ Newsletter By Marcus P. Zillman, M.S., A.M.H.A. http://www.AwarenessWatch.com/ V9N4 April 2011 Welcome to the V9N4 April 2011 issue of the Awareness Watch™ Newsletter. This newsletter is available as a complimentary subscription and will be issued monthly. Each newsletter will feature the following: Awareness Watch™ Featured Report Awareness Watch™ Spotters Awareness Watch™ Book/Paper/Article Review Subject Tracer™ Information Blogs I am always open to feedback from readers so please feel free to email with all suggestions, reviews and new resources that you feel would be appropriate for inclusion in an upcoming issue of Awareness Watch™. This is an ongoing work of creativity and you will be observing constant changes, constant updates knowing that “change” is the only thing that will remain constant!! Awareness Watch™ Featured Report This month’s featured report covers Deep Web Research. This is a comprehensive miniguide of reference resources covering deep web research currently available on the Internet. The below list of sources is taken from my Subject Tracer™ Information Blog titled Deep Web Research and is constantly updated with Subject Tracer™ bots at the following URL: http://www.DeepWeb.us/ These resources and sources will help you to discover the many pathways available to you through the Internet to find the latest reference deep web resources and sites. 1 Awareness Watch V9N4 April 2011 Newsletter http://www.AwarenessWatch.com/ [email protected] eVoice: 800-858-1462 © 2011 Marcus P. Zillman, M.S., A.M.H.A. Deep Web Research Bots, Blogs and News Aggregators (http://www.BotsBlogs.com/) is a keynote presentation that I have been delivering over the last several years, and much of my information comes from the extensive research that I have completed over the years into the “invisible” or what I like to call the “deep” web. -

How Does Google Work?

With Genealogy Links: http://www.ci.oswego.or.us/library/genealogy-presentation How does Google work? Google’s Computers: Google utilizes many computers known as “servers” to administer (serve) Google services. Google likely has around one million servers for different functions including analyzing search queries and delivering search results. One of their largest “server farms” is located in The Dalles, Oregon. Google is very secretive about how many servers it actually has. When you run a search with Google, you’ll notice that Google brags that it brings back your results in a fraction of a second, for example: About 11,700,000 results (0.27 seconds). This is the result of many of Google’s servers working together on your search. Indexing the Web: Google browses the visible web and creates and re-creates an index of the websites it browses. When you run a Google search you aren’t searching the Web itself, but instead Google’s index of sites, with links to live pages, that it created when it last indexed the web. Google has the largest index of web pages of any search engine, indexing over 55 billion web pages! When Google indexes the web it makes a copy of the site for its “cache”. When you search with Google and come across a website which is currently down, you can mouse over the site in the search results, then the double arrows, and then click on “cached” to see what the site looked like when it was last indexed or before it went down. Useful for finding information that “was just there yesterday!”.
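
The point that a search runs against an index and a cached copy rather than the live web can be made concrete with a toy inverted index in Python. This is a minimal sketch under stated assumptions, not a description of Google's systems: the class name `TinyIndex`, its methods, and the whitespace-and-punctuation tokenizer are all hypothetical.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Split text into lowercase word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


class TinyIndex:
    """Toy inverted index that also keeps a cached copy of each page."""

    def __init__(self):
        self.postings = defaultdict(set)  # word -> set of URLs
        self.cache = {}                   # url -> text as last indexed

    def add(self, url, text):
        """Index (or re-index) a page and refresh its cached copy."""
        for word in tokenize(self.cache.get(url, "")):
            self.postings[word].discard(url)  # forget the old version
        self.cache[url] = text
        for word in tokenize(text):
            self.postings[word].add(url)

    def search(self, query):
        """Return URLs containing every query word, in sorted order."""
        words = tokenize(query)
        if not words:
            return []
        hits = set.intersection(*(self.postings.get(w, set()) for w in words))
        return sorted(hits)

    def cached(self, url):
        """Return the page as it looked when last indexed, if known."""
        return self.cache.get(url)
```

Neither `search` nor `cached` touches the network, so both keep working when the live site is down, and both return whatever the page contained at the last indexing pass, which is why results can lag behind the live page.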