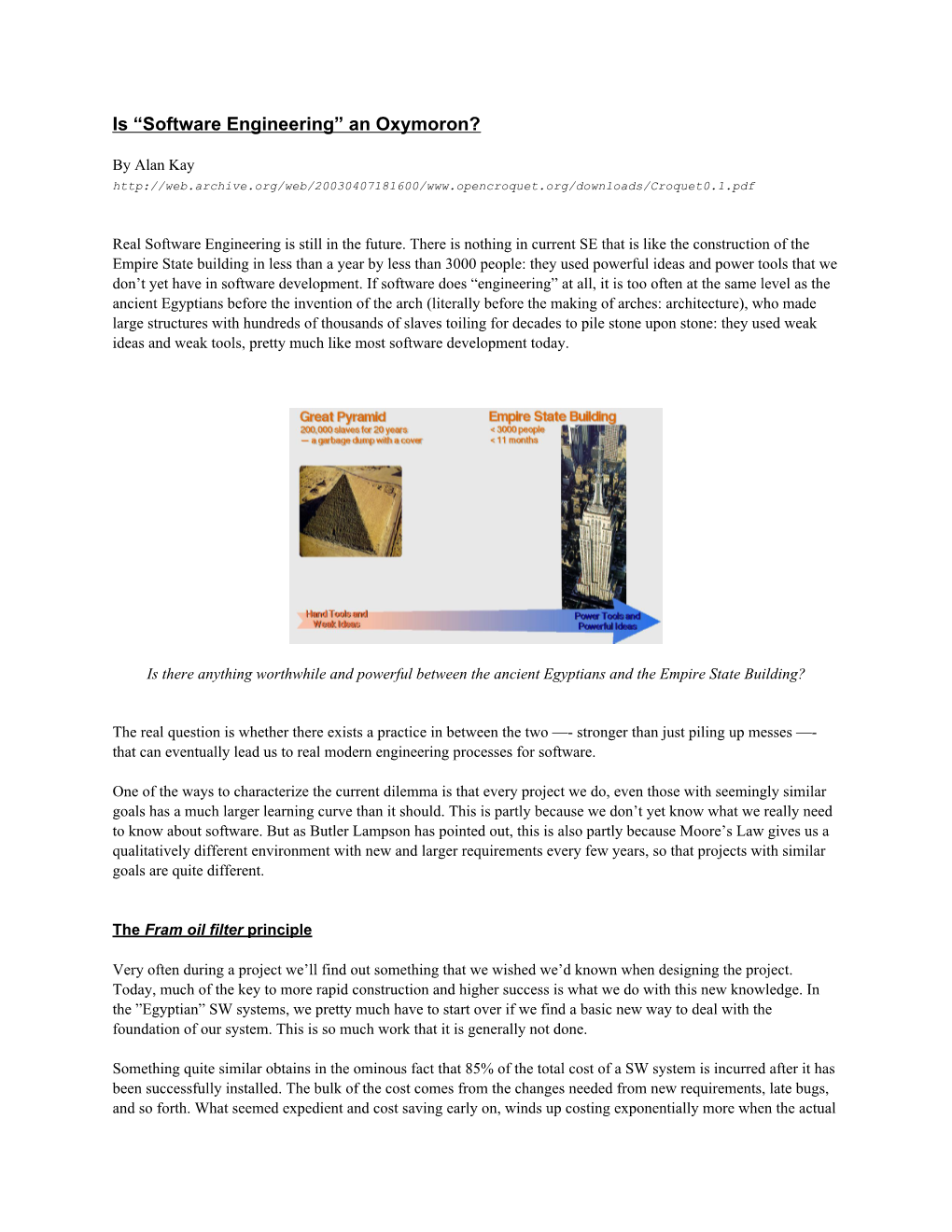

Is “Software Engineering” an Oxymoron?

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

QATAR ASW ONLINE SCRIPT V.8.0 Pages

“DEXIGN THE FUTURE: Innovation for Exponential Times” ARNOLD WASSERMAN COMMENCEMENT ADDRESS VIRGINIA COMMONWEALTH UNIVERSITY IN QATAR 2 MAY 2016 مساء الخير .Good afternoon It is an honor to be invited to address you on this important day. Today you artists and designers graduate into the future. Do you think about the future? Of course you do. But how do you think about the future? Is the future tangible - or is it intangible ? Is it a subject for rational deliberation? Or is it an imagined fantasy that forever recedes before us? Is the future simply whatever happens to us next? Or is it something we deliberately create? Can we Dexign the Future? What I would like us to think about for the next few moments is how creative professionals like yourselves might think about the future. What I have to say applies as much to art as it does to design. Both are technologies of creative innovation. I will take the liberty here of calling it all design. My speech is also available online. There you can follow a number of links to what I will talk about here. The future you are graduating into is an exponential future. What that means is that everything is happening faster and at an accelerating rate. For example, human knowledge is doubling every 12 months. And that curve is accelerating. An IBM researcher foresees that within a few years knowledge will be doubling every 12 hours as we build out the internet of things globally. This acceleration of human knowledge implies that the primary skill of a knowledge worker like yourself is research, analysis and synthesis of the not-yet-known. -

Simula Mother Tongue for a Generation of Nordic Programmers

Simula! Mother Tongue! for a Generation of! Nordic Programmers! Yngve Sundblad HCI, CSC, KTH! ! KTH - CSC (School of Computer Science and Communication) Yngve Sundblad – Simula OctoberYngve 2010Sundblad! Inspired by Ole-Johan Dahl, 1931-2002, and Kristen Nygaard, 1926-2002" “From the cold waters of Norway comes Object-Oriented Programming” " (first line in Bertrand Meyer#s widely used text book Object Oriented Software Construction) ! ! KTH - CSC (School of Computer Science and Communication) Yngve Sundblad – Simula OctoberYngve 2010Sundblad! Simula concepts 1967" •# Class of similar Objects (in Simula declaration of CLASS with data and actions)! •# Objects created as Instances of a Class (in Simula NEW object of class)! •# Data attributes of a class (in Simula type declared as parameters or internal)! •# Method attributes are patterns of action (PROCEDURE)! •# Message passing, calls of methods (in Simula dot-notation)! •# Subclasses that inherit from superclasses! •# Polymorphism with several subclasses to a superclass! •# Co-routines (in Simula Detach – Resume)! •# Encapsulation of data supporting abstractions! ! KTH - CSC (School of Computer Science and Communication) Yngve Sundblad – Simula OctoberYngve 2010Sundblad! Simula example BEGIN! REF(taxi) t;" CLASS taxi(n); INTEGER n;! BEGIN ! INTEGER pax;" PROCEDURE book;" IF pax<n THEN pax:=pax+1;! pax:=n;" END of taxi;! t:-NEW taxi(5);" t.book; t.book;" print(t.pax)" END! Output: 7 ! ! KTH - CSC (School of Computer Science and Communication) Yngve Sundblad – Simula OctoberYngve 2010Sundblad! -

The Development of Smalltalk Topic Paper #7

The Development of Smalltalk Topic Paper #7 Alex Laird Max Earn Peer CS-3210-01 Reviewer On Time/Format 1 2/4/09 Survey of Programming Languages Correct 5 Spring 2009 Clear 2 Computer Science, (319) 360-8771 Concise 2 [email protected] Grading Rubric Grading Total 10 pts ABSTRACT Dynamically typed languages are easier on the compiler because This paper describes the development of the Smalltalk it has to make fewer passes and the brunt of checking is done on programming language. the syntax of the code. Compilation of Smalltalk is just-in-time compilation, also known Keywords as dynamic translation. It means that upon compilation, Smalltalk code is translated into byte code that is interpreted upon usage and Development, Smalltalk, Programming, Language at that point the interpreter converts the code to machine language and the code is executed. Dynamic translations work very well 1. INTRODUCTION with dynamic typing. Smalltalk, yet another programming language originally developed for educational purposes and now having a much 5. IMPLEMENTATIONS broader horizon, first appeared publically in the computing Smalltalk has been implemented in numerous ways and has been industry in 1980 as a dynamically-typed object-oriented language the influence of many future languages such as Java, Python, based largely on message-passing in Simula and Lisp. Object-C, and Ruby, just to name a few. Athena is a Smalltalk scripting engine for Java. Little Smalltalk 2. EARLY HISTORY was the first interpreter of the programming language to be used Development for Smalltalk started in 1969, but the language outside of the Xerox PARC. -

Design and Implementation of an Optionally-Typed Functional Programming Language

Design and Implementation of an Optionally-Typed Functional Programming Language Shaobai Li Electrical Engineering and Computer Sciences University of California at Berkeley Technical Report No. UCB/EECS-2017-215 http://www2.eecs.berkeley.edu/Pubs/TechRpts/2017/EECS-2017-215.html December 14, 2017 Copyright © 2017, by the author(s). All rights reserved. Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, to republish, to post on servers or to redistribute to lists, requires prior specific permission. Design and Implementation of an Optionally-Typed Functional Programming Language by Patrick S. Li A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Engineering { Electrical Engineering and Computer Sciences in the Graduate Division of the University of California, Berkeley Committee in charge: Professor Koushik Sen, Chair Adjunct Professor Jonathan Bachrach Professor George Necula Professor Sara McMains Fall 2017 Design and Implementation of an Optionally-Typed Functional Programming Language Copyright 2017 by Patrick S. Li 1 Abstract Design and Implementation of an Optionally-Typed Functional Programming Language by Patrick S. Li Doctor of Philosophy in Engineering { Electrical Engineering and Computer Sciences University of California, Berkeley Professor Koushik Sen, Chair This thesis describes the motivation, design, and implementation of L.B. Stanza, an optionally- typed functional programming language aimed at helping programmers tackle the complexity of architecting large programs and increasing their productivity across the entire software development life cycle. -

A Model of Inheritance for Declarative Visual Programming Languages

An Abstract Of The Dissertation Of Rebecca Djang for the degree of Doctor of Philosophy in Computer Science presented on December 17, 1998. Title: Similarity Inheritance: A Model of Inheritance for Declarative Visual Programming Languages. Abstract approved: Margaret M. Burnett Declarative visual programming languages (VPLs), including spreadsheets, make up a large portion of both research and commercial VPLs. Spreadsheets in particular enjoy a wide audience, including end users. Unfortunately, spreadsheets and most other declarative VPLs still suffer from some of the problems that have been solved in other languages, such as ad-hoc (cut-and-paste) reuse of code which has been remedied in object-oriented languages, for example, through the code-reuse mechanism of inheritance. We believe spreadsheets and other declarative VPLs can benefit from the addition of an inheritance-like mechanism for fine-grained code reuse. This dissertation first examines the opportunities for supporting reuse inherent in declarative VPLs, and then introduces similarity inheritance and describes a prototype of this model in the research spreadsheet language Forms/3. Similarity inheritance is very flexible, allowing multiple granularities of code sharing and even mutual inheritance; it includes explicit representations of inherited code and all sharing relationships, and it subsumes the current spreadsheet mechanisms for formula propagation, providing a gradual migration from simple formula reuse to more sophisticated uses of inheritance among objects. Since the inheritance model separates inheritance from types, we investigate what notion of types is appropriate to support reuse of functions on different types (operation polymorphism). Because it is important to us that immediate feedback, which is characteristic of many VPLs, be preserved, including feedback with respect to type errors, we introduce a model of types suitable for static type inference in the presence of operation polymorphism with similarity inheritance. -

Reading List

EECS 101 Introduction to Computer Science Dinda, Spring, 2009 An Introduction to Computer Science For Everyone Reading List Note: You do not need to read or buy all of these. The syllabus and/or class web page describes the required readings and what books to buy. For readings that are not in the required books, I will either provide pointers to web documents or hand out copies in class. Books David Harel, Computers Ltd: What They Really Can’t Do, Oxford University Press, 2003. Fred Brooks, The Mythical Man-month: Essays on Software Engineering, 20th Anniversary Edition, Addison-Wesley, 1995. Joel Spolsky, Joel on Software: And on Diverse and Occasionally Related Matters That Will Prove of Interest to Software Developers, Designers, and Managers, and to Those Who, Whether by Good Fortune or Ill Luck, Work with Them in Some Capacity, APress, 2004. Most content is available for free from Spolsky’s Blog (see http://www.joelonsoftware.com) Paul Graham, Hackers and Painters, O’Reilly, 2004. See also Graham’s site: http://www.paulgraham.com/ Martin Davis, The Universal Computer: The Road from Leibniz to Turing, W.W. Norton and Company, 2000. Ted Nelson, Computer Lib/Dream Machines, 1974. This book is now very rare and very expensive, which is sad given how visionary it was. Simon Singh, The Code Book: The Science of Secrecy from Ancient Egypt to Quantum Cryptography, Anchor, 2000. Douglas Hofstadter, Goedel, Escher, Bach: The Eternal Golden Braid, 20th Anniversary Edition, Basic Books, 1999. Stuart Russell and Peter Norvig, Artificial Intelligence: A Modern Approach, 2nd Edition, Prentice Hall, 2003. -

The Cedar Programming Environment: a Midterm Report and Examination

The Cedar Programming Environment: A Midterm Report and Examination Warren Teitelman The Cedar Programming Environment: A Midterm Report and Examination Warren Teitelman t CSL-83-11 June 1984 [P83-00012] © Copyright 1984 Xerox Corporation. All rights reserved. CR Categories and Subject Descriptors: D.2_6 [Software Engineering]: Programming environments. Additional Keywords and Phrases: integrated programming environment, experimental programming, display oriented user interface, strongly typed programming language environment, personal computing. t The author's present address is: Sun Microsystems, Inc., 2550 Garcia Avenue, Mountain View, Ca. 94043. The work described here was performed while employed by Xerox Corporation. XEROX Xerox Corporation Palo Alto Research Center 3333 Coyote Hill Road Palo Alto, California 94304 1 Abstract: This collection of papers comprises a report on Cedar, a state-of-the-art programming system. Cedar combines in a single integrated environment: high-quality graphics, a sophisticated editor and document preparation facility, and a variety of tools for the programmer to use in the construction and debugging of his programs. The Cedar Programming Language is a strongly-typed, compiler-oriented language of the Pascal family. What is especially interesting about the Ce~ar project is that it is one of the few examples where an interactive, experimental programming environment has been built for this kind of language. In the past, such environments have been confined to dynamically typed languages like Lisp and Smalltalk. The first paper, "The Roots of Cedar," describes the conditions in 1978 in the Xerox Palo Alto Research Center's Computer Science Laboratory that led us to embark on the Cedar project and helped to define its objectives and goals. -

MVC Revival on the Web

MVC Revival on the Web Janko Mivšek [email protected] @mivsek @aidaweb ESUG 2013 Motivation 30 years of Smalltalk, 30 years of MVC 34 years exa!tly, sin!e "#$# %ot in JavaScri&t MVC frameworks 'or Sin(le)*age and +ealtime web a&&s ,e Smalltalkers s-ould respe!t and revive our &earls better Contents MVC e &lained %istory /sa(e in !.rrent JavaScri&t frameworks /sa(e in Smalltalk C.rrent and f.t.re MVC in 0ida/Web MVC Explained events Ar!hite!tural desi(n &attern - Model for domain s&e!ifi! data and logi! View updates Controller ) View for &resentation to t-e .ser ) Controller for intera!tions wit- t-e .ser UI and .&datin( domain model Domain changes Main benefit: actions observing ) se&aration of !on!erns Model MVC based on Observer pattern subscribe ) 3bserver looks at observee Observer changed - 3bservee is not aware of t-at s u 4e&enden!y me!-anism2 B t n - 3bserver is de&endent on 3bservee state e observing v - 3bservee m.st re&ort state !-an(es to 3bserver E b - *.b/S.b Event 6.s de!ou&les 3bservee from u S / 3bserver to &reserve its .nawarnes of observation b u changed P Observee Main benefit: (Observable) ) se&aration of !on!erns Multiple observers subscribe - M.lti&le observers of t-e same 3bservee Observer changed - 7n MVC an 3bservee is View and 3bservee is domain Model, t-erefore2 s u B t - many views of t-e same model n e observing v - many a&&s E b - many .sers u S / - mix of all t-ree !ases b u Observee changed P (Observable) Example: Counter demo in Aida/Web M.lti.ser realtime web !ounter exam&le -ttp://demo.aidaweb.si – !li!k Realtime on t-e left – !li!k De!rease or In!rease to c-an(e counter – !ounter is c-an(ed on all ot-er8s browsers History of MVC (1) 7nvented by 9rygve +eenska.g w-en -e worked in "#$:1$# wit- Alan ;ay's group on <ero *arc on Smalltalk and Dynabook – 'inal term Model)View)Controller !oined 10. -

STEPS Toward Expressive Programming Systems, 2010 Progress Report Submitted to the National Science Foundation (NSF) October 2010

STEPS Toward Expressive Programming Systems, 2010 Progress Report Submitted to the National Science Foundation (NSF) October 2010 Viewpoints Research Institute, 1209 Grand Central Avenue, Glendale, CA 91201 t: (818) 332-3001 f: (818) 244-9761 VPRI Technical Report TR-2010-004 NSF Award: 0639876 Year 4 Annual Report: October 2010 STEPS Toward Expressive Programming Systems Viewpoints Research Institute, Glendale CA Important Note For Viewing The PDF Of This Report We have noticed that Adobe Reader and Acrobat do not do the best rendering of scaled pictures. Try different magnifications (e.g. 118%) to find the best scale. Apple Preview does a better job. Table Of Contents STEPS For The General Public 2 STEPS Project Introduction 3 STEPS In 2010 5 The “Frank” Personal Computing System 5 The Parts Of Frank 7 1. DBjr 7 a. User Interface Approach 9 b. Some of the Document Styles We’ve Made 11 2. LWorld 17 3. Gezira/Nile 18 4. NotSqueak 20 5. Networking 20 6. OMeta 22 7. Nothing 22 2010 STEPS Experiments And Papers 23 Training and Development 26 Outreach Activities 26 References 27 Appendix 1: Nothing Grammar in OMeta 28 Appendix 2: A Copying Garbage Collector in Nothing 30 VPRI Technical Report TR-2010-004 A new requirement for NSF reports is to include a few jargon‑free paragraphs to help the general public un‑ derstand the nature of the research. This seems like a very good idea. Here is our first attempt. STEPS For The General Public If computing is important—for daily life, learning, business, national defense, jobs, and more—then qualitatively advancing computing is extremely important. -

Dynamic Object-Oriented Programming with Smalltalk

Dynamic Object-Oriented Programming with Smalltalk 1. Introduction Prof. O. Nierstrasz Autumn Semester 2009 LECTURE TITLE What is surprising about Smalltalk > Everything is an object > Everything happens by sending messages > All the source code is there all the time > You can't lose code > You can change everything > You can change things without restarting the system > The Debugger is your Friend © Oscar Nierstrasz 2 ST — Introduction Why Smalltalk? > Pure object-oriented language and environment — “Everything is an object” > Origin of many innovations in OO development — RDD, IDE, MVC, XUnit … > Improves on many of its successors — Fully interactive and dynamic © Oscar Nierstrasz 1.3 ST — Introduction What is Smalltalk? > Pure OO language — Single inheritance — Dynamically typed > Language and environment — Guiding principle: “Everything is an Object” — Class browser, debugger, inspector, … — Mature class library and tools > Virtual machine — Objects exist in a persistent image [+ changes] — Incremental compilation © Oscar Nierstrasz 1.4 ST — Introduction Smalltalk vs. C++ vs. Java Smalltalk C++ Java Object model Pure Hybrid Hybrid Garbage collection Automatic Manual Automatic Inheritance Single Multiple Single Types Dynamic Static Static Reflection Fully reflective Introspection Introspection Semaphores, Some libraries Monitors Concurrency Monitors Categories, Namespaces Packages Modules namespaces © Oscar Nierstrasz 1.5 ST — Introduction Smalltalk: a State of Mind > Small and uniform language — Syntax fits on one sheet of paper > -

As We May Communicate Carson Reynolds Department of Technical Communication University of Washington

As We May Communicate Carson Reynolds Department of Technical Communication University of Washington Abstract The purpose of this article is to critique and reshape one of the fundamental paradigms of Human-Computer Interaction: the workspace. This treatise argues that the concept of a workspace—as an interaction metaphor—has certain intrinsic defects. As an alternative, a new interaction model, the communication space is offered in the hope that it will bring user interfaces closer to the ideal of human-computer symbiosis. Keywords: Workspace, Communication Space, Human-Computer Interaction Our computer systems and corresponding interfaces have come quite a long way in recent years. We no longer patiently punch cards or type obscure and unintelligible commands to interact with our computers. However, out current graphical user interfaces, for all of their advantages, still have shortcomings. It is the purpose of this paper to attempt to deduce these flaws by carefully examining our earliest and most basic formulation of what a computer should be: a workspace. The History of the Workspace The modern computerized workspace has its beginning in Vannevar Bush’s landmark article, “As We May Think.” Bush presented the MEMEX: his vision of an ideal workspace for researchers and scholars that was capable of retrieving and managing information. Bush thought that machines capable of manipulating information could transform the way that humans think. What did Bush’s idealized workspace involve? It consists of a desk, and while it can presumably be operated from a distance, it is primarily the piece of furniture at which he works. On top are slanting translucent screens, on which material can be projected for convenient reading. -

Session 1: Introducing Extreme Java

Extreme Java G22.3033-006 Session 1 – Main Theme Introducing Extreme Java Dr. Jean-Claude Franchitti New York University Computer Science Department Courant Institute of Mathematical Sciences Agenda • Course Logistics, Structure and Objectives • Java Programming Language Features • Java Environment Limitations • Upcoming Features in “Tiger” 1.5 • Model Driven Architectures for Java Systems • Java Enterprise Application Enabling • Agile Modeling & eXtreme Programming • Aspect-Oriented Programming & Refactoring • Java Application Performance Enhancement • Readings, Class Project, and Assignment #1a 2 1 Course Logistics • Course Web Site • http://cs.nyu.edu/courses/spring03/G22.3033-006/index.htm • http://www.nyu.edu/classes/jcf/g22.3033-007/ • (Password: extjava) • Course Structure, Objectives, and References • See detailed syllabus on the course Web site under handouts • See ordered list of references on the course Web site • Textbooks • Mastering Java 2, J2SE 1.4 • J2EE: The Complete Reference • Expert One-on-One: J2EE Design and Development 3 Part I Java Features 4 2 Java Features Review • Language Categories • Imperative • Functional • Logic-based • Model-based PL Comparison Framework • Data Model • Behavioral Model • Event Model • Execution, Persistence, etc. • See Session 1 Handout on Java Review 5 J2SE 1.4 Features http://java.sun.com/j2se/1.4/index.html 6 3 J2SE 1.4 Features (continued) • HotSpot virtual machine • Full 64-bit support • Improved garbage collection • Ability to exploit multiprocessing • Optimization of the Java2D