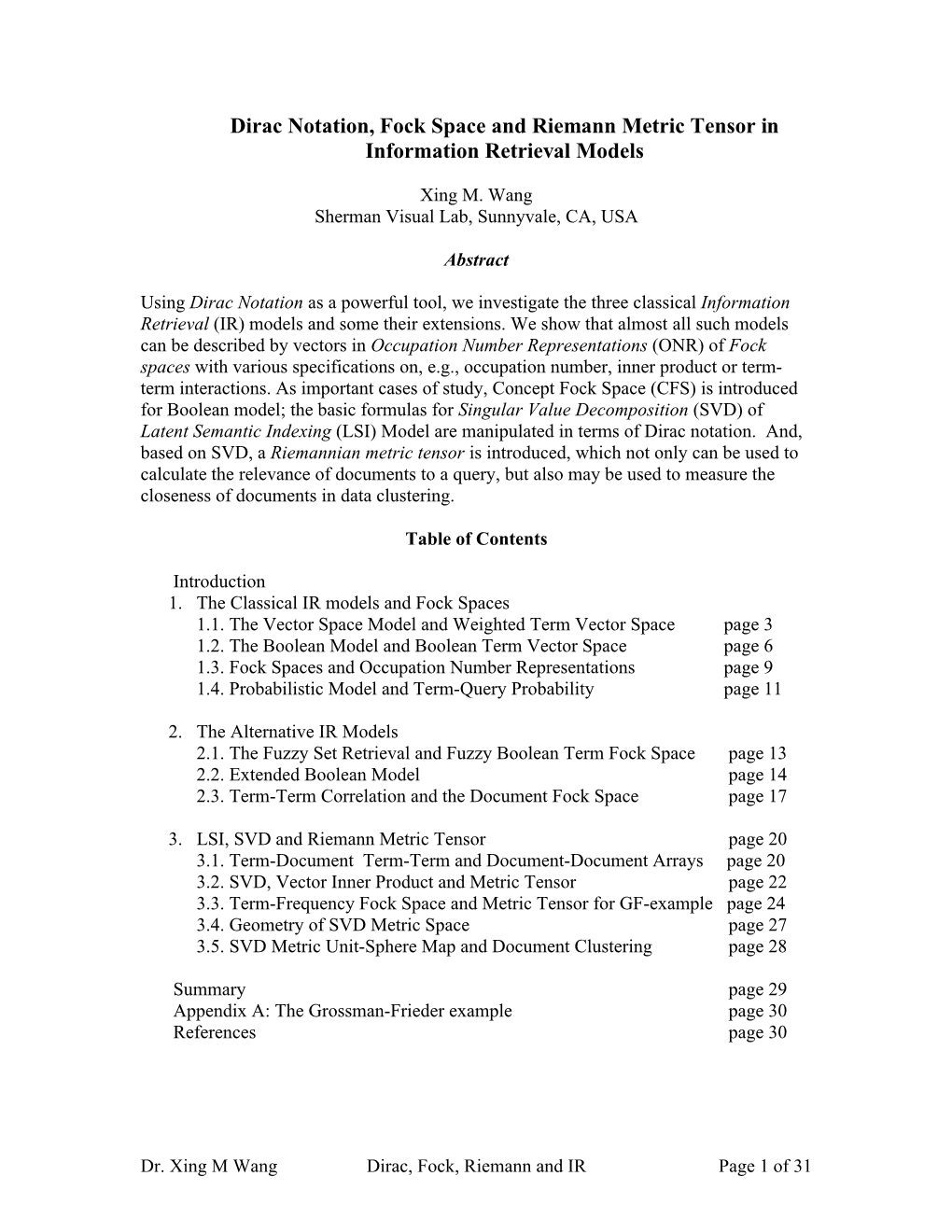

Dirac Notation, Fock Space and Riemann Metric Tensor in Information Retrieval Models

Total Pages: 16

File Type: pdf, Size: 1020 Kb


Recommended publications


Chapter 4 Introduction to Many-Body Quantum Mechanics

Chapter 4 Introduction to many-body quantum mechanics. 4.1 The complexity of the quantum many-body problem. After learning how to solve the 1-body Schrödinger equation, let us next generalize to more particles. If a single-body quantum problem is described by a Hilbert space $\mathcal{H}$ of dimension $\dim \mathcal{H} = d$, then $N$ distinguishable quantum particles are described by the tensor product of $N$ Hilbert spaces

$\mathcal{H}^{(N)} \equiv \mathcal{H}^{\otimes N} \equiv \bigotimes_{i=1}^{N} \mathcal{H}$ (4.1)

with dimension $d^N$. As a first example, a single spin-1/2 has a Hilbert space $\mathcal{H} = \mathbb{C}^2$ of dimension 2, but $N$ spin-1/2 have a Hilbert space $\mathcal{H}^{(N)} = \mathbb{C}^{2^N}$ of dimension $2^N$. Similarly, a single particle in three-dimensional space is described by a complex-valued wave function $\psi(\vec{x})$ of the position $\vec{x}$ of the particle, while $N$ distinguishable particles are described by a complex-valued wave function $\psi(\vec{x}_1, \ldots, \vec{x}_N)$ of the positions $\vec{x}_1, \ldots, \vec{x}_N$ of the particles. Approximating the Hilbert space $\mathcal{H}$ of the single particle by a finite basis set with $d$ basis functions, the $N$-particle basis approximated by the same finite basis set for single particles needs $d^N$ basis functions. This exponential scaling of the Hilbert space dimension with the number of particles is a big challenge. Even in the simplest case, a spin-1/2 with $d = 2$, the basis for $N = 30$ spins is already of size $2^{30} \approx 10^9$. A single complex vector needs 16 GByte of memory and will not fit into the memory of your personal computer anymore.
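The memory estimate in this excerpt can be reproduced with a few lines of arithmetic. The sketch below assumes that each complex amplitude is stored as two 64-bit floats (16 bytes); the function names are illustrative and do not come from the excerpt.

```python
# Memory needed to store one dense state vector of N spin-1/2 particles.
# Assumption: each complex amplitude occupies 16 bytes (two 64-bit floats).
BYTES_PER_COMPLEX = 16


def hilbert_dimension(d: int, n_particles: int) -> int:
    """Dimension d**N of the N-fold tensor product of a d-dimensional space."""
    return d ** n_particles


def state_vector_bytes(d: int, n_particles: int) -> int:
    """Bytes needed to hold one dense complex vector in that space."""
    return hilbert_dimension(d, n_particles) * BYTES_PER_COMPLEX


dim_30 = hilbert_dimension(2, 30)            # 2**30, roughly 10**9
gib_30 = state_vector_bytes(2, 30) / 2**30   # size in units of 2**30 bytes
print(dim_30, gib_30)
```

Each additional spin doubles both numbers, which is the exponential scaling described above.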

Second Quantization

Chapter 1 Second Quantization. 1.1 Creation and Annihilation Operators in Quantum Mechanics. We will begin with a quick review of creation and annihilation operators in the non-relativistic linear harmonic oscillator. Let $a$ and $a^\dagger$ be two operators acting on an abstract Hilbert space of states, and satisfying the commutation relation

$[a, a^\dagger] = 1$ (1.1)

where by "1" we mean the identity operator of this Hilbert space. The operators $a$ and $a^\dagger$ are not self-adjoint but are the adjoint of each other. Let $|\alpha\rangle$ be a state which we will take to be an eigenvector of the Hermitian operator $a^\dagger a$ with eigenvalue $\alpha$, which is a real number,

$a^\dagger a\,|\alpha\rangle = \alpha\,|\alpha\rangle$ (1.2)

Hence,

$\alpha = \langle\alpha| a^\dagger a |\alpha\rangle = \| a|\alpha\rangle \|^2 \geq 0$ (1.3)

where we used the fundamental axiom of Quantum Mechanics that the norm of all states in the physical Hilbert space is positive. As a result, the eigenvalues $\alpha$ of the eigenstates of $a^\dagger a$ must be non-negative real numbers. Furthermore, since for all operators $A$, $B$ and $C$

$[AB, C] = A\,[B, C] + [A, C]\,B$ (1.4)

we get

$[a^\dagger a, a] = -a$ (1.5)

$[a^\dagger a, a^\dagger] = a^\dagger$ (1.6)

i.e., $a$ and $a^\dagger$ are "eigen-operators" of $a^\dagger a$. Hence,

$a^\dagger a\, a = a\,(a^\dagger a - 1)$ (1.7)

$a^\dagger a\, a^\dagger = a^\dagger\,(a^\dagger a + 1)$ (1.8)

Consequently we find

$a^\dagger a\, a|\alpha\rangle = a\,(a^\dagger a - 1)|\alpha\rangle = (\alpha - 1)\, a|\alpha\rangle$ (1.9)

Hence the state $a|\alpha\rangle$ is an eigenstate of $a^\dagger a$ with eigenvalue $\alpha - 1$, provided $a|\alpha\rangle \neq 0$.
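Relations (1.5), (1.6) and (1.9) can be checked numerically. The following is a minimal sketch that assumes the standard number basis $|0\rangle, \ldots, |d-1\rangle$ with $a|n\rangle = \sqrt{n}\,|n-1\rangle$ and an arbitrary truncation `dim`; neither is taken from the excerpt. The commutators with $a^\dagger a$ remain exact under truncation, whereas $[a, a^\dagger] = 1$ fails in the last basis state and is therefore not tested here.

```python
import numpy as np


def lowering_operator(dim: int) -> np.ndarray:
    """Matrix of a in the basis |0>, ..., |dim-1>, with a|n> = sqrt(n)|n-1>."""
    return np.diag(np.sqrt(np.arange(1, dim)), k=1)


dim = 8                      # hypothetical truncation of the infinite space
a = lowering_operator(dim)
a_dag = a.conj().T           # the adjoint of a
number = a_dag @ a           # the Hermitian operator a†a

# Commutators (1.5) and (1.6): [a†a, a] = -a and [a†a, a†] = a†.
comm_a = number @ a - a @ number
comm_a_dag = number @ a_dag - a_dag @ number

# Relation (1.9): applying a to an eigenstate lowers the eigenvalue by one.
alpha = 3
state = np.zeros(dim)
state[alpha] = 1.0           # eigenstate of a†a with eigenvalue alpha
lowered = a @ state

print(np.allclose(comm_a, -a), np.allclose(comm_a_dag, a_dag))
print(np.allclose(number @ lowered, (alpha - 1) * lowered))
```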

Orthogonalization of Fermion K-Body Operators and Representability

Orthogonalization of fermion k-Body operators and representability. Volker Bach, Robert Rauch. April 10, 2019. arXiv:1807.05299v2 [math-ph] 9 Apr 2019.

Abstract. The reduced k-particle density matrix of a density matrix on finite-dimensional, fermion Fock space can be defined as the image under the orthogonal projection in the Hilbert-Schmidt geometry onto the space of k-body observables. A proper understanding of this projection is therefore intimately related to the representability problem, a long-standing open problem in computational quantum chemistry. Given an orthonormal basis in the finite-dimensional one-particle Hilbert space, we explicitly construct an orthonormal basis of the space of Fock space operators which restricts to an orthonormal basis of the space of k-body operators for all k.

1 Introduction. 1.1 Motivation: Representability problems. In quantum chemistry, molecules are usually modeled as non-relativistic many-fermion systems (Born-Oppenheimer approximation). More specifically, the Hilbert space of these systems is given by the fermion Fock space $\mathcal{F} = \mathcal{F}_f(\mathfrak{h})$, where $\mathfrak{h}$ is the (complex) Hilbert space of a single electron (e.g. $\mathfrak{h} = L^2(\mathbb{R}^3) \otimes \mathbb{C}^2$), and the Hamiltonian $H$ is usually a two-body operator or, more generally, a k-body operator on $\mathcal{F}$. A key physical quantity whose computation is an important task is the ground state energy

$E_0(H) = \inf_{\varphi \in \mathcal{S}} \varphi(H)$ (1)

of the system, where $\mathcal{S} \subseteq \mathcal{B}(\mathcal{F})'$ is a suitable set of states on $\mathcal{B}(\mathcal{F})$, where $\mathcal{B}(\mathcal{F})$ is the Banach space of bounded operators on $\mathcal{F}$ and $\mathcal{B}(\mathcal{F})'$ its dual. A direct evaluation of (1) is, however, practically impossible due to the vast size of the state space $\mathcal{S}$.

Chapter 4 MANY PARTICLE SYSTEMS

Chapter 4 MANY PARTICLE SYSTEMS. The postulates of quantum mechanics outlined in previous chapters include no restrictions as to the kind of systems to which they are intended to apply. Thus, although we have considered numerous examples drawn from the quantum mechanics of a single particle, the postulates themselves are intended to apply to all quantum systems, including those containing more than one and possibly very many particles. Thus, the only real obstacle to our immediate application of the postulates to a system of many (possibly interacting) particles is that we have till now avoided the question of what the linear vector space, the state vector, and the operators of a many-particle quantum mechanical system look like. The construction of such a space turns out to be fairly straightforward, but it involves forming a certain kind of mathematical product of different linear vector spaces, referred to as a direct or tensor product. Indeed, the basic principle underlying the construction of the state spaces of many-particle quantum mechanical systems can be succinctly stated as follows: The state vector $|\psi\rangle$ of a system of $N$ particles is an element of the direct product space

$S^{(N)} = S(1) \otimes S(2) \otimes \cdots \otimes S(N)$

formed from the $N$ single-particle spaces associated with each particle. To understand this principle we need to explore the structure of such direct product spaces. This exploration forms the focus of the next section, after which we will return to the subject of many particle quantum mechanical systems.

4.1 The Direct Product of Linear Vector Spaces. Let $S_1$ and $S_2$ be two independent quantum mechanical state spaces, of dimension $N_1$ and $N_2$, respectively (either or both of which may be infinite).
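For finite dimensions, the direct product of two state spaces can be illustrated with the Kronecker product. The sketch below assumes small hypothetical dimensions $N_1 = 2$ and $N_2 = 3$ and arbitrary example vectors. It shows that the product of two normalized states is a normalized vector with $N_1 N_2$ components, and that products of orthonormal basis vectors form an orthonormal basis of the product space.

```python
import numpy as np

n1, n2 = 2, 3   # hypothetical dimensions of S1 and S2

# Two normalized example states, one from each space (chosen arbitrarily).
u = np.array([1.0, 1.0j]) / np.sqrt(2)
v = np.array([1.0, 0.0, -1.0]) / np.sqrt(2)

# Their direct (tensor) product is a vector with n1 * n2 components.
product_state = np.kron(u, v)

# Products of orthonormal basis vectors of S1 and S2 ...
basis_1 = np.eye(n1)
basis_2 = np.eye(n2)
product_basis = np.array([np.kron(e, f) for e in basis_1 for f in basis_2])

# ... are again orthonormal: their Gram matrix is the identity.
gram = product_basis @ product_basis.conj().T

print(product_state.shape, np.linalg.norm(product_state))
print(np.allclose(gram, np.eye(n1 * n2)))
```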

Fock Space Dynamics

TCM315 Fall 2020: Introduction to Open Quantum Systems. Lecture 10: Fock space dynamics. Course handouts are designed as a study aid and are not meant to replace the recommended textbooks. Handouts may contain typos and/or errors. The students are encouraged to verify the information contained within and to report any issue to the lecturer.

CONTENTS
Introduction
Composite systems of indistinguishable particles
Permutation group S2 of 2-objects
Admissible states of three indistinguishable systems
Parastatistics
The spin-statistics theorem
Fock space
Creation and annihilation of particles
Canonical commutation relations
Canonical anti-commutation relations
Explicit example for a Fock space with 2 distinguishable Fermion states
Central oscillator of a linear boson system
Decoupling of the one particle sector
References

INTRODUCTION. Chapter 5 of [6] offers a conceptually very transparent introduction to indistinguishable particle kinematics in quantum mechanics. Particularly valuable is the brief but clear discussion of para-statistics. The last sections of the chapter are devoted to expounding, physics style, the second quantization formalism. Chapter I of [2] is a very clear and detailed presentation of second quantization formalism in the form that is needed in mathematical physics applications. Finally, the dynamics of a central oscillator in an external field draws from chapter 11 of [4].

COMPOSITE SYSTEMS OF INDISTINGUISHABLE PARTICLES. The composite system postulate tells us that the Hilbert space of a multi-partite system is the tensor product of the Hilbert spaces of the constituents. In other words, the composite system Hilbert space is spanned by an orthonormal basis constructed by taking the tensor product of the elements of the orthonormal bases of the Hilbert spaces of the constituent systems.

Quantum Physics in Non-Separable Hilbert Spaces

Quantum Physics in Non-Separable Hilbert Spaces. John Earman, Dept. HPS, University of Pittsburgh.

In Mathematical Foundations of Quantum Mechanics (1932) von Neumann made separability of Hilbert space an axiom. Subsequent work in mathematics (some of it by von Neumann himself) investigated non-separable Hilbert spaces, and mathematical physicists have sometimes made use of them. This note discusses some of the problems that arise in trying to treat quantum systems with non-separable spaces. Some of the problems are "merely technical" but others point to interesting foundations issues for quantum theory, both in its abstract mathematical form and its applications to physical systems. Nothing new or original is attempted here. Rather this note aims to bring into focus some issues that have for too long remained on the edge of consciousness for philosophers of physics.

1 Introduction. A Hilbert space $\mathcal{H}$ is separable if there is a countable dense set of vectors; equivalently, $\mathcal{H}$ admits a countable orthonormal basis. In Mathematical Foundations of Quantum Mechanics (1932, 1955) von Neumann made separability one of the axioms of his codification of the formalism of quantum mechanics. Working with a separable Hilbert space certainly simplifies matters and provides for understandable realizations of the Hilbert space axioms: all infinite dimensional separable Hilbert spaces are the "same": they are isomorphically isometric to $L^2_{\mathbb{C}}(\mathbb{R})$, the space of square integrable complex valued functions of $\mathbb{R}$ with inner product $\langle \psi, \phi \rangle := \int \overline{\psi(x)}\,\phi(x)\,dx$, $\psi, \phi \in L^2_{\mathbb{C}}(\mathbb{R})$. This Hilbert space in turn is isomorphically isometric to $\ell^2_{\mathbb{C}}(\mathbb{N})$, the vector space of square summable sequences of complex numbers with inner product $\langle \xi, \eta \rangle := \sum_{n=1}^{\infty} \overline{x_n}\, y_n$, $\xi = (x_1, x_2, \ldots)$, $\eta = (y_1, y_2, \ldots)$.

Isometries, Fock Spaces, and Spectral Analysis of Schrödinger Operators on Trees

Isometries, Fock Spaces, and Spectral Analysis of Schrödinger Operators on Trees. V. Georgescu and S. Golénia, CNRS, Département de Mathématiques, Université de Cergy-Pontoise, 2 avenue Adolphe Chauvin, 95302 Cergy-Pontoise Cedex, France. June 15, 2004.

Abstract. We construct conjugate operators for the real part of a completely non unitary isometry and we give applications to the spectral and scattering theory of a class of operators on (complete) Fock spaces, natural generalizations of the Schrödinger operators on trees. We consider $C^*$-algebras generated by such Hamiltonians with certain types of anisotropy at infinity, we compute their quotient with respect to the ideal of compact operators, and give formulas for the essential spectrum of these Hamiltonians.

1 Introduction. The Laplace operator on a graph $\Gamma$ acts on functions $f : \Gamma \to \mathbb{C}$ according to the relation

$(\Delta f)(x) = \sum_{y \leftrightarrow x} (f(y) - f(x))$, (1.1)

where $y \leftrightarrow x$ means that $x$ and $y$ are connected by an edge. The spectral analysis and the scattering theory of the operators on $\ell^2(\Gamma)$ associated to expressions of the form $L = \Delta + V$, where $V$ is a real function on $\Gamma$, is an interesting question which does not seem to have been much studied (we have in mind here only situations involving non trivial essential spectrum). Our interest in these questions has been aroused by the work of C. Allard and R. Froese [All, AlF] devoted to the case when $\Gamma$ is a binary tree: their main results are the construction of a conjugate operator for $L$ under suitable conditions on the potential $V$ and the proof of the Mourre estimate.
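Relation (1.1) is straightforward to evaluate on a small graph. The sketch below uses a finite binary tree with seven vertices as a stand-in for the infinite tree of the excerpt; the vertex labels, the edge list and the two test functions are all illustrative assumptions.

```python
# Graph Laplacian (Δf)(x) = Σ_{y ↔ x} (f(y) - f(x)) on a small binary tree.
# Assumption: a finite tree of depth 2, with vertex 0 as the root.
edges = [(0, 1), (0, 2), (1, 3), (1, 4), (2, 5), (2, 6)]


def neighbours(edge_list):
    """Map each vertex to the set of vertices joined to it by an edge."""
    adjacency = {}
    for x, y in edge_list:
        adjacency.setdefault(x, set()).add(y)
        adjacency.setdefault(y, set()).add(x)
    return adjacency


def laplacian(f, adjacency):
    """Apply the Laplace operator to a function f given as a dict on vertices."""
    return {x: sum(f[y] - f[x] for y in adjacency[x]) for x in adjacency}


adj = neighbours(edges)

# A constant function is annihilated by the Laplacian.
constant = {x: 1.0 for x in adj}
lap_constant = laplacian(constant, adj)

# The depth function: 0 at the root, 1 at its children, 2 at the leaves.
depth = {0: 0.0, 1: 1.0, 2: 1.0, 3: 2.0, 4: 2.0, 5: 2.0, 6: 2.0}
lap_depth = laplacian(depth, adj)

print(lap_constant)
print(lap_depth)
```

An operator of the form $L = \Delta + V$ would add the pointwise term $V(x) f(x)$ to each value returned by `laplacian`.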

How to Construct a Consistent and Physically Relevant the Fock Space of Neutrino Flavor States?

EPJ Web of Conferences 125, 03016 (2016). DOI: 10.1051/epjconf/201612503016. QUARKS-2016. How to construct a consistent and physically relevant the Fock space of neutrino flavor states? A. E. Lobanov, Department of Theoretical Physics, Faculty of Physics, Moscow State University, 119991 Moscow, Russia.

Abstract. We propose a modification of the electroweak theory, where the fermions with the same electroweak quantum numbers are combined in multiplets and are treated as different quantum states of a single particle. Thereby, in describing the electroweak interactions it is possible to use four fundamental fermions only. In this model, the mixing and oscillations of the particles arise as a direct consequence of the general principles of quantum field theory. The developed approach enables one to calculate the probabilities of the processes taking place in the detector at long distances from the particle source. Calculations of higher-order processes including the computation of the contributions due to radiative corrections can be performed in the framework of perturbation theory using the regular diagram technique.

1 Introduction. The Standard Model of electroweak interactions based on the non-Abelian gauge symmetry of the interactions [1], the generation of particle masses due to the spontaneous symmetry breaking mechanism [2], and the philosophy of mixing of particle generations [3] is universally recognized. Its predictions obtained in the framework of perturbation theory are in a very good agreement with the experimental data, and there is no serious reason, at least at the energies available at present, for its main propositions to be revised. However, in describing such an important and firmly experimentally established phenomenon as neutrino oscillations [4], an essentially phenomenological theory based on the pioneer works by B.

Back and Forth from Fock Space to Hilbert Space: a Guide for Commuters

Back and forth from Fock space to Hilbert space: a guide for commuters. Andrea Beggi (1), Ilaria Siloi (2), Claudia Benedetti (3), Enrico Piccinini (4), Luca Razzoli (5), Paolo Bordone (5), Matteo G. A. Paris (3,6).

(1) I.I.S. Primo Levi, I-41058 Vignola, Italy.
(2) Department of Physics, University of North Texas, 76201 Denton, Texas, USA.
(3) Quantum Technology Lab, Dipartimento di Fisica Aldo Pontremoli, Università degli Studi di Milano, I-20133 Milano, Italy.
(4) Dipartimento di Ingegneria dell'Energia Elettrica e dell'Informazione Guglielmo Marconi, Università di Bologna, I-40136 Bologna, Italy.
(5) Dipartimento di Scienze Fisiche, Informatiche e Matematiche, Università di Modena e Reggio Emilia, I-41125 Modena, Italy.
(6) The Institute of Mathematical Sciences, CIT Campus, Taramani, Chennai, 600113, India.

Abstract. Quantum states of systems made of many identical particles, e.g. those described by Fermi-Hubbard and Bose-Hubbard models, are conveniently depicted in the Fock space. However, in order to evaluate some specific observables or to study the system dynamics, it is often more effective to employ the Hilbert space description. Moving effectively from one description to the other is thus a desirable feature, especially when a numerical approach is needed. Here we recall the construction of the Fock space for systems of indistinguishable particles, and then present a set of recipes and advices for those students and researchers in the need to commute back and forth from one description to the other. The two-particle case is discussed in some details and few guidelines for numerical implementations are given.

arXiv:1805.04552v2 [quant-ph] 31 Aug 2018
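For two bosons, one way to pass from the Fock description to the Hilbert space description is to map each occupation-number state to a symmetrized product state. The sketch below follows the standard textbook construction and is not taken from the paper's own recipes; the function names and the choice of three modes are illustrative assumptions.

```python
import numpy as np
from itertools import combinations_with_replacement


def two_boson_fock_basis(n_modes):
    """Occupation-number tuples (n_1, ..., n_M) with total particle number 2."""
    basis = []
    for i, j in combinations_with_replacement(range(n_modes), 2):
        occupation = [0] * n_modes
        occupation[i] += 1
        occupation[j] += 1
        basis.append(tuple(occupation))
    return basis


def fock_to_hilbert(occupation):
    """Symmetrized vector in the two-particle product space for a Fock state."""
    n_modes = len(occupation)
    # List the occupied modes, repeating a mode that holds both particles.
    i, j = [mode for mode, n in enumerate(occupation) for _ in range(n)]
    e = np.eye(n_modes)
    vector = np.kron(e[i], e[j])
    if i != j:
        # Different modes: symmetrize and normalize by 1/sqrt(2).
        vector = (vector + np.kron(e[j], e[i])) / np.sqrt(2)
    return vector


n_modes = 3   # hypothetical number of single-particle modes
fock_basis = two_boson_fock_basis(n_modes)
embedded = np.array([fock_to_hilbert(occ) for occ in fock_basis])
gram = embedded @ embedded.T   # overlaps between the embedded states

print(len(fock_basis), embedded.shape)
print(np.allclose(gram, np.eye(len(fock_basis))))
```

The six Fock states are embedded as orthonormal vectors in the nine-dimensional product space, and each embedded vector is unchanged when the two particle labels are exchanged.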

What Is a Photon? Foundations of Quantum Field Theory

What is a Photon? Foundations of Quantum Field Theory. C. G. Torre, June 16, 2018. Version 1.0. Copyright © 2018 Charles Torre, Utah State University. PDF created June 16, 2018.

Contents
1 Introduction
1.1 Why do we need this course?
1.2 Why do we need quantum fields?
1.3 Problems
2 The Harmonic Oscillator
2.1 Classical mechanics: Lagrangian, Hamiltonian, and equations of motion
2.2 Classical mechanics: coupled oscillations
2.3 The postulates of quantum mechanics
2.4 The quantum oscillator
2.5 Energy spectrum
2.6 Position, momentum, and their continuous spectra
2.6.1 Position
2.6.2 Momentum
2.6.3 General formalism
2.7 Time evolution
2.8 Coherent States
2.9 Problems
3 Tensor Products and Identical Particles
3.1 Definition of the tensor product
3.2 Observables and the tensor product
3.3 Symmetric and antisymmetric tensors. Identical particles.
3.4 Symmetrization and anti-symmetrization for any number of particles
3.5 Problems
4 Fock Space
4.1 Definitions
4.2 Occupation numbers. Creation and annihilation operators.
4.3 Observables. Field operators.
4.3.1 1-particle observables
4.3.2 2-particle observables
4.3.3 Field operators and wave functions
4.4 Time evolution of the field operators
4.5 General formalism
4.6 Relation to the Hilbert space of quantum normal modes

Harmonic Oscillator, a, a†, Fock Space, Identical Particles, Bose/Fermi

ho_fs_ident.nb:10/14/04::22:47:34

Harmonic Oscillator, a, a†, Fock Space, Identical Particles, Bose/Fermi

This set of lectures introduces the algebraic treatment of the Harmonic Oscillator and applies the result to a string, a prototypical system with a large number of degrees of freedom. That system is used to introduce Fock space, discuss systems of identical particles and introduce Bose/Fermi annihilation and creation operators.

Harmonic Oscillator

Classic SHO

The classical Hamiltonian for the simple harmonic oscillator is

$H = \frac{1}{2m} p^2 + \frac{k}{2} x^2 = \frac{1}{2m} p^2 + \frac{m\omega^2}{2} x^2$

This leads to simple harmonic motion with frequency $\omega = \sqrt{k/m}$.

QM: wave mechanics

Make the replacement $p = -i \frac{\partial}{\partial x}$, and solve for the wave functions. For example, Merzbacher chapter 5.

QM: operator approach

Introduce raising and lowering operators ($a$ and $a^\dagger$) and solve a simple algebraic eigenvalue problem. Note: in some contexts (field theory) $a$, $a^\dagger$ are also known as annihilation and creation operators.

Set up of problem, introduction of $a$, $a^\dagger$, and $N$

For convenience simplify $H$

Define: $p' = \frac{p}{\sqrt{m\omega}}$ and $x' = \sqrt{m\omega}\, x$, in which case $H$ can be rewritten as

$H = \frac{\omega}{2} \left( p'^2 + x'^2 \right)$

Define $a$ and $a^\dagger$

Further, define the operator

$a = \frac{1}{\sqrt{2}} (x' + i p')$

and since $x$ and $p$ are hermitian, the adjoint is

$a^\dagger = \frac{1}{\sqrt{2}} (x' - i p')$.

Also note that $x'$ and $p'$ can be rewritten as

$x' = \frac{1}{\sqrt{2}} (a^\dagger + a)$ and $p' = \frac{i}{\sqrt{2}} (a^\dagger - a)$.

The commutator of $a$ and $a^\dagger$ is

$[a, a^\dagger] = \frac{1}{2} [x' + i p', x' - i p'] = i\,[p', x'] = i\,[p, x] = 1$

and

$a^\dagger a = \frac{1}{2} (x' - i p')(x' + i p') = \ldots$
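The definitions above can be checked with finite matrices. This is a minimal sketch assuming units with $\hbar = 1$, a hypothetical truncation `dim` of the number basis, and the standard matrix elements $a|n\rangle = \sqrt{n}\,|n-1\rangle$; none of these choices is stated in the excerpt. It builds $x'$ and $p'$ from $a$ and $a^\dagger$ and evaluates $H = \frac{\omega}{2}(p'^2 + x'^2)$. The diagonal reproduces the well-known spectrum $\omega(n + \tfrac{1}{2})$ except in the last basis state, where the truncation breaks $[a, a^\dagger] = 1$.

```python
import numpy as np

dim = 10        # hypothetical truncation of the number basis
omega = 1.0     # hypothetical oscillator frequency, in units with hbar = 1

# Lowering operator with a|n> = sqrt(n)|n-1>, and its adjoint.
a = np.diag(np.sqrt(np.arange(1, dim)), k=1)
a_dag = a.conj().T

# Rescaled position and momentum expressed through a and a†.
x_prime = (a_dag + a) / np.sqrt(2)
p_prime = 1j * (a_dag - a) / np.sqrt(2)

# Hamiltonian H = (omega / 2) (p'^2 + x'^2).
hamiltonian = 0.5 * omega * (p_prime @ p_prime + x_prime @ x_prime)
energies = np.real(np.diag(hamiltonian))

# Canonical commutator [x', p']; it equals i away from the truncation edge.
commutator = x_prime @ p_prime - p_prime @ x_prime

print(energies[:-1])
print(np.allclose(commutator[:-1, :-1], 1j * np.eye(dim - 1)))
```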

Fock Space Formalism, the Net Operator (52) Acts As

Free Fields, Harmonic Oscillators, and Identical Bosons. A free quantum field and its canonical conjugate are equivalent to a family of harmonic oscillators (one oscillator for each plane wave), which is in turn equivalent to a quantum theory of free identical bosons. In this note, I will show how all of this works for the relativistic scalar field $\hat\varphi(x)$ and its conjugate $\hat\pi(x)$. And then I will turn around and show that a quantum theory of any kind of identical bosons is equivalent to a family of oscillators. (Harmonic for the free particles, non-harmonic if the particles interact with each other.) Moreover, for the non-relativistic particles, the oscillator family is in turn equivalent to a non-relativistic quantum field theory. In this note we shall work in the Schrödinger picture of Quantum Mechanics because it's more convenient for dealing with the eigenstates and the eigenvalues. Consequently, all operators, including the quantum fields such as $\hat\varphi(x)$, are time-independent.

From Relativistic Fields to Harmonic Oscillators. Let us start with the relativistic scalar field $\hat\varphi(x)$ and its conjugate $\hat\pi(x)$; they obey the canonical commutation relations

$[\hat\varphi(\mathbf{x}), \hat\varphi(\mathbf{x}')] = 0, \quad [\hat\pi(\mathbf{x}), \hat\pi(\mathbf{x}')] = 0, \quad [\hat\varphi(\mathbf{x}), \hat\pi(\mathbf{x}')] = i\delta^{(3)}(\mathbf{x} - \mathbf{x}')$ (1)

and are governed by the Hamiltonian

$\hat{H} = \int d^3x \left( \tfrac{1}{2} \hat\pi^2(\mathbf{x}) + \tfrac{1}{2} (\nabla\hat\varphi(\mathbf{x}))^2 + \tfrac{1}{2} m^2 \hat\varphi^2(\mathbf{x}) \right)$. (2)

We want to expand the fields into plane-wave modes $\hat\varphi_{\mathbf{k}}$ and $\hat\pi_{\mathbf{k}}$, and to avoid technical difficulties with the oscillators and their eigenstates, we want discrete modes. Therefore, we replace the infinite $\mathbf{x}$ space with a finite but very large box of size $L \times L \times L$, and impose periodic boundary conditions: $\hat\varphi(x + L, y, z) = \hat\varphi(x, y + L, z) = \hat\varphi(x, y, z + L) = \hat\varphi(x, y, z)$, etc., etc.
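Periodic boundary conditions in a box of size $L$ restrict plane waves $e^{i\mathbf{k}\cdot\mathbf{x}}$ to the discrete wave vectors $\mathbf{k} = \frac{2\pi}{L}(n_x, n_y, n_z)$ with integer $n_i$. This is a standard consequence of periodicity that the excerpt does not spell out. The sketch below enumerates a few such modes and checks their periodicity; the box size, the cutoff `n_max` and the sample point are illustrative assumptions.

```python
import cmath
import math
from itertools import product


def allowed_wave_vectors(box_size, n_max):
    """Wave vectors k = (2*pi/L) * (n_x, n_y, n_z) for integers |n_i| <= n_max."""
    step = 2 * math.pi / box_size
    integers = range(-n_max, n_max + 1)
    return [(step * nx, step * ny, step * nz)
            for nx, ny, nz in product(integers, repeat=3)]


def plane_wave(k, x):
    """Value of exp(i k.x) at position x."""
    return cmath.exp(1j * sum(ki * xi for ki, xi in zip(k, x)))


box_size = 5.0                                 # hypothetical box size L
modes = allowed_wave_vectors(box_size, n_max=1)

# Shifting x by L along the first axis must leave every allowed mode unchanged.
point = (0.3, 1.7, 4.2)                        # arbitrary sample position
shifted = (point[0] + box_size, point[1], point[2])
max_mismatch = max(abs(plane_wave(k, shifted) - plane_wave(k, point))
                   for k in modes)

print(len(modes), max_mismatch)
```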