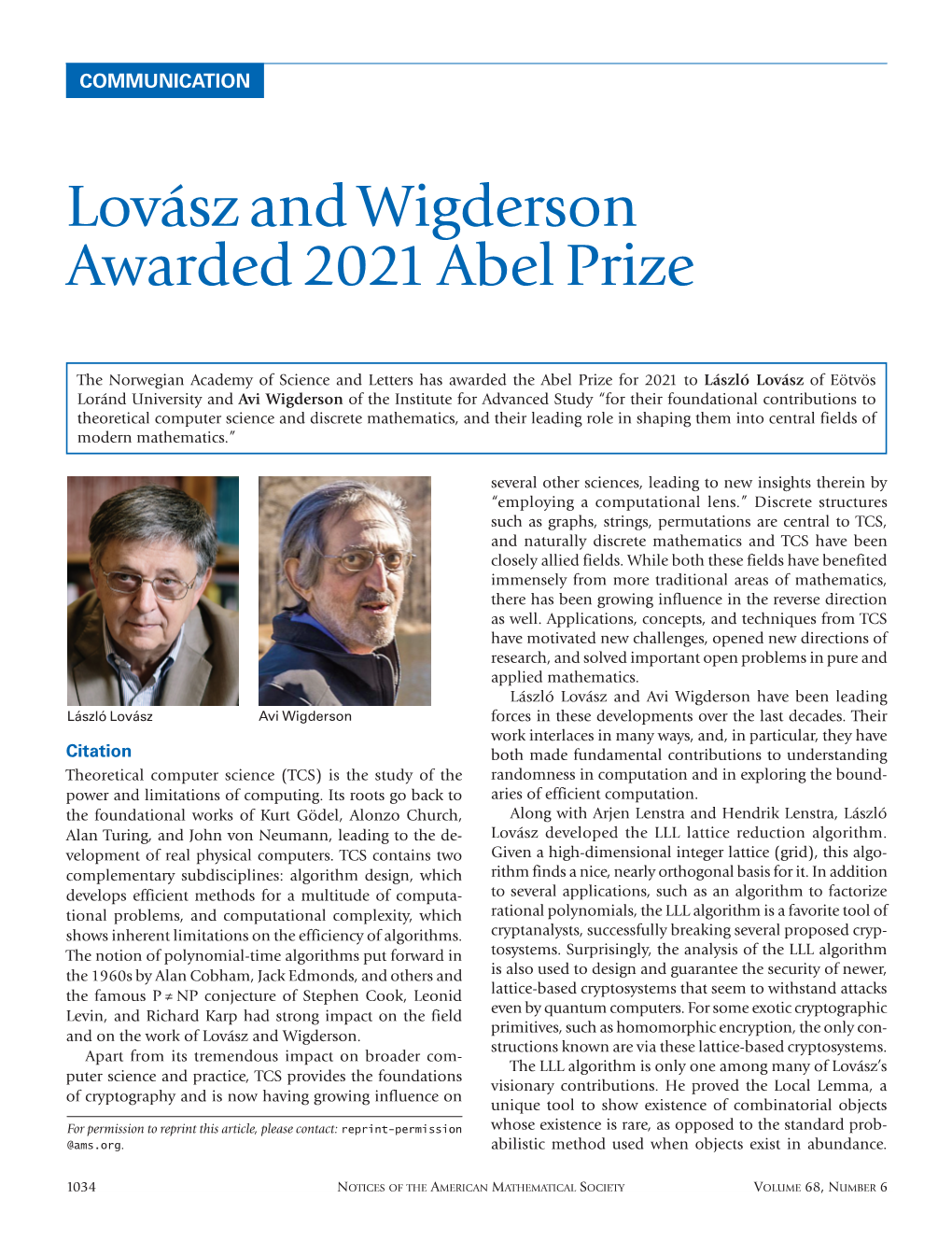

Lovász and Wigderson Awarded 2021 Abel Prize

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Zig-Zag Product

High Dimensional Expanders, Lecture 12: Agreement Tests. Lecture by: Irit Dinur. Scribe: Irit Dinur.

In this lecture we describe agreement tests, which are a generalization of direct product tests and low degree tests, both of which play a role in PCP constructions. It turns out that the underlying structures that support agreement tests are invariably some kind of high dimensional expander, as we shall see.

1 General Setup

It is a basic fact of computation that any global computation can be broken down into a sequence of local steps. The PCP theorem [AS98, ALM+98] says that moreover, this can be done in a robust fashion, so that as long as most steps are correct, the entire computation checks out. At the heart of this is a local-to-global argument that allows deducing a global property from local pieces that fit together only approximately. In an agreement test, a function is given by an ensemble of local restrictions. The agreement test checks that the restrictions agree when they overlap, and the main question is whether typical agreement of the local pieces implies that there exists a global function that agrees with most local restrictions. Let us describe the basic framework, consisting of a quadruple $(V, X, \{F_S\}_{S \in X}, D)$.

• Ground set: Let $V$ be a set of $n$ points (or vertices).
• Collection of small subsets: Let $X$ be a collection of subsets $S \subset V$; typically for each $S \in X$ we have $|S| \ll n$.
• Local functions: for each subset $S \in X$, there is a space $F_S \subset \{f : S \to \Sigma\}$ of functions on $S$.

Going Beyond Dual Execution: MPC for Functions with Efficient Verification

Going Beyond Dual Execution: MPC for Functions with Efficient Verification. Carmit Hazay (Bar-Ilan University), abhi shelat (Northeastern University), Muthuramakrishnan Venkitasubramaniam (University of Rochester).

Abstract. The dual execution paradigm of Mohassel and Franklin (PKC ’06) and Huang, Katz and Evans (IEEE ’12) shows how to achieve the notion of 1-bit leakage security at roughly twice the cost of semi-honest security for the special case of two-party secure computation. To date, there are no multi-party computation (MPC) protocols that offer such a strong trade-off between security and semi-honest performance. Our main result is to address this shortcoming by designing 1-bit leakage protocols for the multi-party setting, albeit for a special class of functions. We say that a function $f(x, y)$ is efficiently verifiable by $g$ if the running time of $g$ is always smaller than that of $f$, and $g(x, y, z) = 1$ if and only if $f(x, y) = z$. In the two-party setting, we first improve dual execution by observing that the “second execution” can be an evaluation of $g$ instead of $f$, and that by definition, the evaluation of $g$ is asymptotically more efficient. Our main MPC result is to construct a 1-bit leakage protocol for such functions from any passive protocol for $f$ that is secure up to additive errors and any active protocol for $g$. An important result by Genkin et al. (STOC ’14) shows how the classic protocols by Goldreich et al. (STOC ’87) and Ben-Or et al. (STOC ’88) naturally support this property, which allows us to instantiate our compiler with two-party and multi-party protocols.
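The definition of "efficiently verifiable" in the abstract above can be illustrated with a small plaintext sketch. The example function is an assumption chosen for illustration and is not taken from the paper: `f_sorted_union` (a hypothetical name) sorts the union of two input lists with a comparison sort, while `g_verify` (also hypothetical) checks a claimed output in expected linear time using hashing. No secure computation is performed here; the sketch only shows the relationship between `f` and `g`.

```python
from collections import Counter


def f_sorted_union(x, y):
    """The function f: sort the combined inputs (comparison sort, O(n log n))."""
    return sorted(x + y)


def g_verify(x, y, z):
    """The verifier g: accept z if and only if z == f_sorted_union(x, y).

    Checking that z is non-decreasing and is a rearrangement of x + y takes
    expected linear time, which is asymptotically cheaper than sorting.
    """
    is_sorted = all(z[i] <= z[i + 1] for i in range(len(z) - 1))
    same_multiset = Counter(z) == Counter(x + y)
    return is_sorted and same_multiset


x, y = [5, 1, 4], [3, 2]
z = f_sorted_union(x, y)
print(g_verify(x, y, z))                 # accepts the correct output
print(g_verify(x, y, [1, 2, 3, 4, 6]))   # rejects a wrong output
```

For integers, a sorted list with the same multiset of elements as `x + y` is unique, so `g_verify` returns `True` exactly when `z` equals `f_sorted_union(x, y)`.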

On the Randomness Complexity of Interactive Proofs and Statistical Zero-Knowledge Proofs*

On the Randomness Complexity of Interactive Proofs and Statistical Zero-Knowledge Proofs. Benny Applebaum, Eyal Golombek.

Abstract. We study the randomness complexity of interactive proofs and zero-knowledge proofs. In particular, we ask whether it is possible to reduce the randomness complexity, $R$, of the verifier to be comparable with the number of bits, $C_V$, that the verifier sends during the interaction. We show that such randomness sparsification is possible in several settings. Specifically, unconditional sparsification can be obtained in the non-uniform setting (where the verifier is modelled as a circuit), and in the uniform setting where the parties have access to a (reusable) common-random-string (CRS). We further show that constant-round uniform protocols can be sparsified without a CRS under a plausible worst-case complexity-theoretic assumption that was used previously in the context of derandomization. All the above sparsification results preserve statistical zero-knowledge provided that this property holds against a cheating verifier. We further show that randomness sparsification can be applied to honest-verifier statistical zero-knowledge (HVSZK) proofs at the expense of increasing the communication from the prover by $R - F$ bits, or, in the case of honest-verifier perfect zero-knowledge (HVPZK), by slowing down the simulation by a factor of $2^{R-F}$. Here $F$ is a new measure of accessible bit complexity of an HVZK proof system that ranges from 0 to $R$, where a maximal grade of $R$ is achieved when zero-knowledge holds against a “semi-malicious” verifier that maliciously selects its random tape and then plays honestly.

LINEAR ALGEBRA METHODS in COMBINATORICS László Babai

LINEAR ALGEBRA METHODS IN COMBINATORICS. László Babai and Péter Frankl. Version 2.1, March 2020 (slight update of Version 2, 1992). © László Babai and Péter Frankl, 1988, 1992, 2020.

Preface. Due perhaps to a recognition of the wide applicability of their elementary concepts and techniques, both combinatorics and linear algebra have gained increased representation in college mathematics curricula in recent decades. The combinatorial nature of the determinant expansion (and the related difficulty in teaching it) may hint at the plausibility of some link between the two areas. A more profound connection, the use of determinants in combinatorial enumeration, goes back at least to the work of Kirchhoff in the middle of the 19th century on counting spanning trees in an electrical network. It is much less known, however, that quite apart from the theory of determinants, the elements of the theory of linear spaces have found striking applications to the theory of families of finite sets. With a mere knowledge of the concept of linear independence, unexpected connections can be made between algebra and combinatorics, thus greatly enhancing the impact of each subject on the student's perception of beauty and sense of coherence in mathematics. If these adjectives seem inflated, the reader is kindly invited to open the first chapter of the book, read the first page to the point where the first result is stated (“No more than 32 clubs can be formed in Oddtown”), and try to prove it before reading on. (The effect would, of course, be magnified if the title of this volume did not give away where to look for clues.) What we have said so far may suggest that the best place to present this material is a mathematics enhancement program for motivated high school students.

Karen Uhlenbeck Awarded the 2019 Abel Prize

RESEARCH NEWS. Karen Uhlenbeck Awarded the 2019 Abel Prize. Rukmini Dey.

[Photo: Karen Uhlenbeck (Source: Wikimedia)]

The 2019 Abel prize for lifetime achievements in mathematics was awarded for the first time to a woman mathematician, Professor Karen Uhlenbeck. She is famous for her work in geometry, analysis and gauge theory. She has proved very important (and hard) theorems in analysis and applied them to geometry and […]

While she was in Urbana-Champaign (University of Illinois), Karen Uhlenbeck worked with a postdoctoral fellow, Jonathan Sacks, on singularities of harmonic maps on 2D surfaces. This was the beginning of a long journey in geometric analysis. In gauge theory, Uhlenbeck, in her remarkable ‘removable singularity theorem’, proved the existence of smooth local solutions to Yang–Mills equations. The Fields medallist Simon Donaldson was very much influenced by her work. Seminal results of Donaldson and Uhlenbeck–Yau (amongst others) helped in establishing gauge theory on a firm mathematical footing. Uhlenbeck’s work with Terng on integrable systems is also very influential in the field.

Karen Uhlenbeck is a professor emeritus of mathematics at the University of Texas at Austin, where she holds the Sid W. Richardson Foundation Chair (since 1988). She is currently a visiting associate at the Institute for Advanced Study, Princeton, and a visiting senior research scholar at Princeton University. She has enthused many young women to take up mathematics and runs a mentorship program for women in mathematics at Princeton. Karen loves gardening and nature hikes. Having known her personally, I found she is one of the most kind-hearted mathematicians I have ever known.

The Wrangler3.2

Issue No. 3, Autumn 2004. The Maths Wrangler, from the mathematicians at the University of Leicester. http://www.math.le.ac.uk/ email: [email protected]

WELCOME TO The Wrangler. This is the third issue of our maths newsletter from the University of Leicester’s Department of Mathematics. You can pick it up online, along with all the back issues, at our new web address http://www.math.le.ac.uk/WRANGLER. If you would like to be added to our mailing list or have suggestions, just drop us a line at [email protected], we’ll be flattered! What do we have here in this new issue? You may wonder what wallpaper and frying pans have to do with each other. We will tell you a fascinating story of how they come up in new interdisciplinary research linking geometry and physics. We portray our chancellor Sir Michael Atiyah, who has recently received the Abel Prize, the maths equivalent of the Nobel Prize. Sir Michael Atiyah will also open the new building of the MMC, which will be named after him, with a public maths lecture. Everyone is very welcome! More info at our new homepage http://www.math.le.ac.uk/

Contents: Welcome to The Wrangler; Wallpaper and Frying Pans - Linking Geometry and Physics; K-Theory, Geometry and Physics - Sir Michael Atiyah gets Abel Prize; The GooglePlexor; The Mathematical Modelling Centre MMC.

[Photo caption] The chancellor of the University of Leicester and world famous mathematician Sir Michael Atiyah (middle) receives the Abel Prize of the year 2004 together with colleague Isadore Singer (left) during the prize ceremony with King Harald of Norway.

Foundations of Cryptography – a Primer Oded Goldreich

Foundations of Cryptography – A Primer. Oded Goldreich, Department of Computer Science, Weizmann Institute of Science, Rehovot, Israel. [email protected]

Boston – Delft. Foundations and Trends® in Theoretical Computer Science. Published, sold and distributed by: now Publishers Inc., PO Box 1024, Hanover, MA 02339, USA. Tel. +1 781 871 0245. www.nowpublishers.com [email protected]. Outside North America: now Publishers Inc., PO Box 179, 2600 AD Delft, The Netherlands. Tel. +31-6-51115274.

A Cataloging-in-Publication record is available from the Library of Congress. Printed on acid-free paper. ISBN: 1-933019-02-6; ISSNs: Paper version 1551-305X; Electronic version 1551-3068. © 2005 O. Goldreich. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, mechanical, photocopying, recording or otherwise, without prior written permission of the publishers. now Publishers Inc. has an exclusive license to publish this material worldwide. Permission to use this content must be obtained from the copyright license holder. Please apply to now Publishers, PO Box 179, 2600 AD Delft, The Netherlands, www.nowpublishers.com; e-mail: [email protected]

Contents
1 Introduction and Preliminaries
1.1 Introduction
1.2 Preliminaries
I Basic Tools
2 Computational Difficulty and One-way Functions
2.1 One-way functions
2.2 Hard-core predicates
3 Pseudorandomness
3.1 Computational indistinguishability
3.2 Pseudorandom generators

Robert P. Langlands Receives the Abel Prize

Robert P. Langlands receives the Abel Prize. The Norwegian Academy of Science and Letters has decided to award the Abel Prize for 2018 to Robert P. Langlands of the Institute for Advanced Study, Princeton, USA “for his visionary program connecting representation theory to number theory.”

Robert P. Langlands has been awarded the Abel Prize for his work dating back to January 1967. He was then a 30-year-old associate professor at Princeton, working during the Christmas break. He wrote a 17-page letter to the great French mathematician André Weil, aged 60, outlining some of his new mathematical insights. “If you are willing to read it as pure speculation I would appreciate that,” he wrote. “If not – I am sure you have a waste basket handy.” Fortunately, the letter did not end up in a waste basket. His letter introduced a theory that created a completely new way of thinking about mathematics: it suggested deep links between two areas, number theory and harmonic analysis, which had previously been considered as unrelated.

[…] project in modern mathematics has as wide a scope, has produced so many deep results, and has so many people working on it. Its depth and breadth have grown and the Langlands program is now frequently described as a grand unified theory of mathematics. The President of the Norwegian Academy of Science and Letters, Ole M. Sejersted, announced the winner of the 2018 Abel Prize at the Academy in Oslo today, 20 March.

Biography. Robert P. Langlands was born in New Westminster, British Columbia, in 1936. He graduated from the University of British Columbia with an undergraduate degree in 1957 and an MSc in 1958, and from Yale […]

László Lovász of Eötvös Loránd University in Budapest, Hungary, and Avi Wigderson of the Institute for Advanced Study in Princeton, USA

2021. The Norwegian Academy of Science and Letters has decided to award the Abel Prize for 2021 to László Lovász of Eötvös Loránd University in Budapest, Hungary, and Avi Wigderson of the Institute for Advanced Study in Princeton, USA, “for their foundational contributions to theoretical computer science and discrete mathematics, and their leading role in shaping them into central fields of modern mathematics.”

Theoretical computer science is the study of the power and limits of computation. It has its origins in the foundational work of Kurt Gödel, Alonzo Church, Alan Turing and John von Neumann, which led to the development of real physical computers. Theoretical computer science comprises two complementary sub-disciplines: algorithm design, which develops efficient methods for a multitude of computational problems; and complexity, which shows the inherent limits on the efficiency of algorithms. The notion of polynomial-time algorithms put forward in the 1960s by Alan Cobham, Jack Edmonds and others, as well as the famous P≠NP conjecture of Stephen Cook, Leonid Levin and Richard Karp, had a strong impact on the field and on the work of Lovász and Wigderson.

[…] growing on several other sciences, which makes it possible to make new discoveries by “putting on a computer scientist's glasses”. Discrete structures such as graphs, strings and permutations are at the heart of theoretical computer science, and discrete mathematics and theoretical computer science have naturally been closely linked fields. Admittedly, both fields have benefited enormously from the more traditional areas of mathematical research, but a growing influence in the opposite direction can also be seen. The applications, concepts and techniques of theoretical computer science have generated new challenges, opened new directions of research […]

Relations and Equivalences Between Circuit Lower Bounds and Karp-Lipton Theorems*

Electronic Colloquium on Computational Complexity, Report No. 75 (2019). Relations and Equivalences Between Circuit Lower Bounds and Karp-Lipton Theorems. Lijie Chen, Dylan M. McKay, Cody D. Murray, R. Ryan Williams. MIT.

Abstract. A frontier open problem in circuit complexity is to prove $\mathsf{P}^{\mathsf{NP}} \not\subset \mathsf{SIZE}[n^k]$ for all $k$; this is a necessary intermediate step towards $\mathsf{NP} \not\subset \mathsf{P/poly}$. Previously, for several classes containing $\mathsf{P}^{\mathsf{NP}}$, including $\mathsf{NP}^{\mathsf{NP}}$, $\mathsf{ZPP}^{\mathsf{NP}}$, and $\mathsf{S_2P}$, such lower bounds have been proved via Karp-Lipton-style theorems: to prove $\mathcal{C} \not\subset \mathsf{SIZE}[n^k]$ for all $k$, we show that $\mathcal{C} \subset \mathsf{P/poly}$ implies a “collapse” $\mathcal{D} = \mathcal{C}$ for some larger class $\mathcal{D}$, where we already know $\mathcal{D} \not\subset \mathsf{SIZE}[n^k]$ for all $k$. It seems obvious that one could take a different approach to prove circuit lower bounds for $\mathsf{P}^{\mathsf{NP}}$ that does not require proving any Karp-Lipton-style theorems along the way. We show this intuition is wrong: (weak) Karp-Lipton-style theorems for $\mathsf{P}^{\mathsf{NP}}$ are equivalent to fixed-polynomial size circuit lower bounds for $\mathsf{P}^{\mathsf{NP}}$. That is, $\mathsf{P}^{\mathsf{NP}} \not\subset \mathsf{SIZE}[n^k]$ for all $k$ if and only if ($\mathsf{NP} \subset \mathsf{P/poly}$ implies $\mathsf{PH} \subset \text{i.o.-}\mathsf{P}^{\mathsf{NP}}/n$). Next, we present new consequences of the assumption $\mathsf{NP} \subset \mathsf{P/poly}$, towards proving similar results for $\mathsf{NP}$ circuit lower bounds. We show that under the assumption, fixed-polynomial circuit lower bounds for $\mathsf{NP}$, nondeterministic polynomial-time derandomizations, and various fixed-polynomial time simulations of $\mathsf{NP}$ are all equivalent. Applying this equivalence, we show that circuit lower bounds for $\mathsf{NP}$ imply better Karp-Lipton collapses.

The Flajolet-Martin Sketch Itself Preserves Differential Privacy: Private Counting with Minimal Space

The Flajolet-Martin Sketch Itself Preserves Differential Privacy: Private Counting with Minimal Space. Adam Smith (Boston University, [email protected]), Shuang Song (Google Research, Brain Team, [email protected]), Abhradeep Thakurta (Google Research, Brain Team, [email protected]).

Abstract. We revisit the problem of counting the number of distinct elements $F_0(D)$ in a data stream $D$, over a domain $[u]$. We propose an $(\varepsilon, \delta)$-differentially private algorithm that approximates $F_0(D)$ within a factor of $(1 \pm \gamma)$, and with additive error of $O(\sqrt{\ln(1/\delta)}/\varepsilon)$, using space $O(\ln(\ln(u)/\gamma)/\gamma^2)$. We improve on the prior work at least quadratically and up to exponentially, in terms of both space and additive error. Our additive error guarantee is optimal up to a factor of $O(\sqrt{\ln(1/\delta)})$, and the space bound is optimal up to a factor of $O\left(\min\left\{\ln\left(\frac{\ln(u)}{\gamma}\right), \frac{1}{\gamma^2}\right\}\right)$. We assume the existence of an ideal uniform random hash function, and ignore the space required to store it. We later relax this requirement by assuming pseudorandom functions and appealing to a computational variant of differential privacy, SIM-CDP. Our algorithm is built on top of the celebrated Flajolet-Martin (FM) sketch. We show that the FM sketch is differentially private as is, as long as there are $\approx \sqrt{\ln(1/\delta)}/(\varepsilon\gamma)$ distinct elements in the data set. Along the way, we prove a structural result showing that the maximum of $k$ i.i.d. random variables is statistically close (in the sense of $\varepsilon$-differential privacy) to the maximum of $(k + 1)$ i.i.d. […]
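For readers unfamiliar with the Flajolet-Martin sketch named in the abstract above, the following is a minimal sketch of a textbook, non-private variant, and it may differ from the exact variant the paper analyzes. Assumptions: BLAKE2b with a per-sketch salt stands in for the ideal random hash function, the function names (`fm_estimate`, `_hash64`, `trailing_zeros`) are hypothetical, several independent bitmaps are averaged to reduce variance, and 0.77351 is the correction constant from Flajolet and Martin's original analysis. Nothing here adds or measures differential privacy.

```python
import hashlib

PHI = 0.77351  # Flajolet-Martin bias-correction constant


def _hash64(item: str, seed: int) -> int:
    """64-bit hash of item; the seed is used as a salt to get independent hashes."""
    digest = hashlib.blake2b(
        item.encode("utf-8"), digest_size=8, salt=seed.to_bytes(8, "little")
    ).digest()
    return int.from_bytes(digest, "little")


def trailing_zeros(x: int, width: int = 64) -> int:
    """Number of trailing zero bits in x (width if x is zero)."""
    if x == 0:
        return width
    return (x & -x).bit_length() - 1


def fm_estimate(stream, num_sketches: int = 32) -> float:
    """Estimate the number of distinct items in stream."""
    bitmaps = [0] * num_sketches
    for item in stream:
        for s in range(num_sketches):
            # Record the trailing-zero count of the hash as one bit in bitmap s.
            bitmaps[s] |= 1 << trailing_zeros(_hash64(str(item), s))
    total = 0
    for bitmap in bitmaps:
        # R is the index of the lowest bit that was never set.
        r = 0
        while (bitmap >> r) & 1:
            r += 1
        total += r
    mean_r = total / num_sketches
    return (2 ** mean_r) / PHI


items = [f"user-{i}" for i in range(500)]
print(round(fm_estimate(items)))      # rough estimate of 500
print(round(fm_estimate(items * 3)))  # duplicates do not change the sketch
```

Each bitmap needs only as many bits as the hash width, which is why the sketch uses so little space; repeated items set bits that are already set, so only distinct elements affect the estimate.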

Calibrating Noise to Sensitivity in Private Data Analysis

Calibrating Noise to Sensitivity in Private Data Analysis. Cynthia Dwork¹, Frank McSherry¹, Kobbi Nissim², and Adam Smith³. ¹ Microsoft Research, Silicon Valley, {dwork,mcsherry}@microsoft.com. ² Ben-Gurion University, [email protected]. ³ Weizmann Institute of Science, [email protected].

Abstract. We continue a line of research initiated in [10, 11] on privacy-preserving statistical databases. Consider a trusted server that holds a database of sensitive information. Given a query function $f$ mapping databases to reals, the so-called true answer is the result of applying $f$ to the database. To protect privacy, the true answer is perturbed by the addition of random noise generated according to a carefully chosen distribution, and this response, the true answer plus noise, is returned to the user. Previous work focused on the case of noisy sums, in which $f = \sum_i g(x_i)$, where $x_i$ denotes the $i$th row of the database and $g$ maps database rows to $[0, 1]$. We extend the study to general functions $f$, proving that privacy can be preserved by calibrating the standard deviation of the noise according to the sensitivity of the function $f$. Roughly speaking, this is the amount that any single argument to $f$ can change its output. The new analysis shows that for several particular applications substantially less noise is needed than was previously understood to be the case. The first step is a very clean characterization of privacy in terms of indistinguishability of transcripts. Additionally, we obtain separation results showing the increased value of interactive sanitization mechanisms over non-interactive.
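The idea of calibrating noise to sensitivity can be sketched with the Laplace mechanism, the construction most commonly associated with this line of work. This is a minimal illustration under stated assumptions, not the paper's full treatment: the function names and the privacy parameter `epsilon` are introduced here for illustration, the noise scale is taken to be sensitivity divided by `epsilon`, and Python's `random` module is used, which is not suitable for real privacy deployments.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential samples."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def laplace_mechanism(true_answer: float, sensitivity: float,
                      epsilon: float, rng: random.Random) -> float:
    """Return the true answer plus noise scaled to sensitivity / epsilon."""
    if sensitivity <= 0 or epsilon <= 0:
        raise ValueError("sensitivity and epsilon must be positive")
    return true_answer + laplace_noise(sensitivity / epsilon, rng)


def noisy_sum(rows, epsilon: float, rng: random.Random) -> float:
    """Noisy sum with g(x) = x and every row in [0, 1].

    Changing one row moves the sum by at most 1, so the sensitivity is 1.
    """
    if any(not 0.0 <= r <= 1.0 for r in rows):
        raise ValueError("rows must lie in [0, 1]")
    return laplace_mechanism(sum(rows), sensitivity=1.0, epsilon=epsilon, rng=rng)


rng = random.Random(0)
rows = [0.2, 0.9, 0.5, 1.0, 0.0]
print(noisy_sum(rows, epsilon=0.5, rng=rng))  # true sum 2.6 plus Laplace noise
```

A smaller `epsilon` or a larger sensitivity gives a larger noise scale, so queries whose output can change more when a single row changes receive proportionally more noise.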