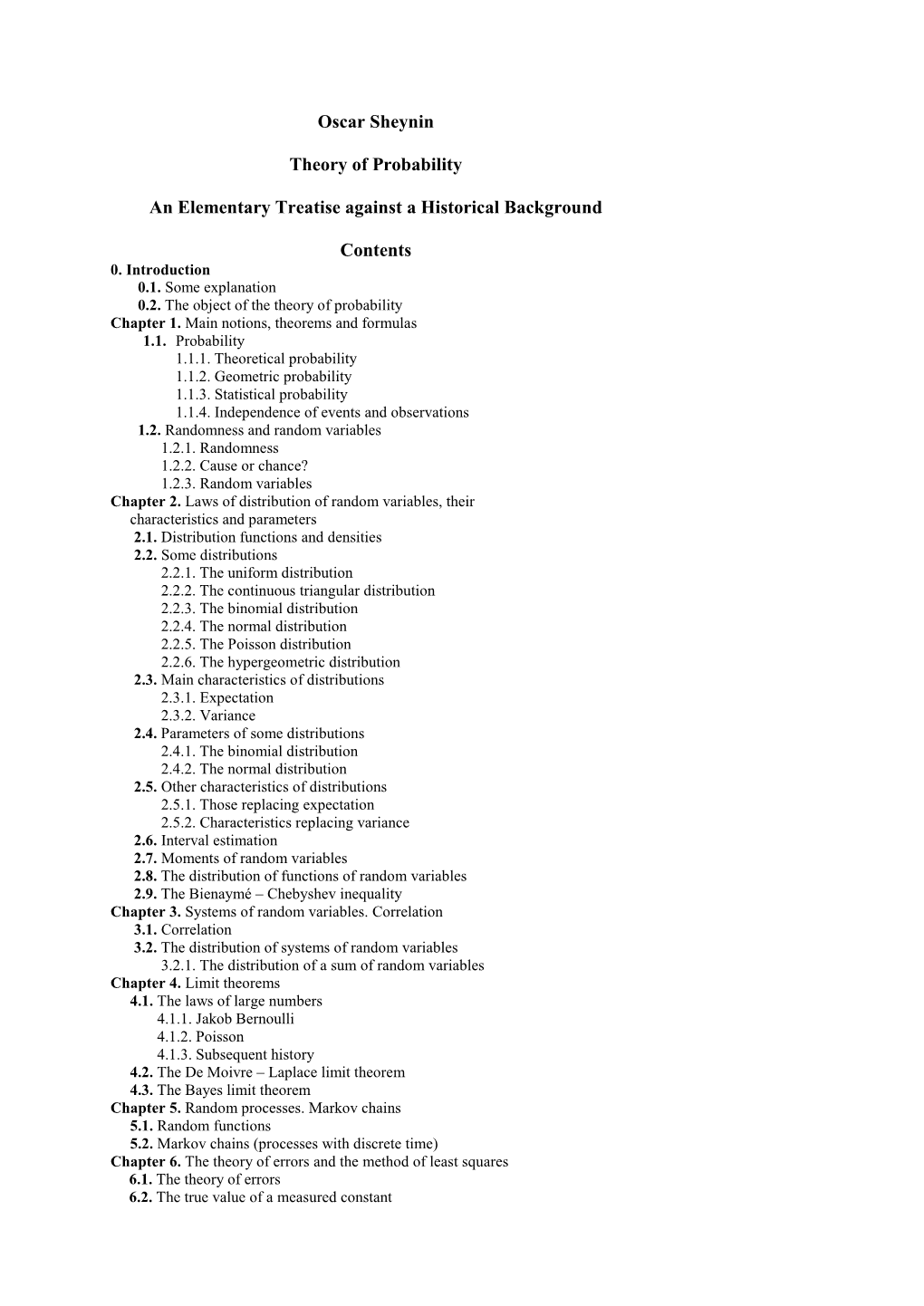

Oscar Sheynin Theory of Probability an Elementary Treatise Against A

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

Probability and Its Applications

Probability and its Applications Published in association with the Applied Probability Trust Editors: S. Asmussen, J. Gani, P. Jagers, T.G. Kurtz Probability and its Applications Azencott et al.: Series of Irregular Observations. Forecasting and Model Building. 1986 Bass: Diffusions and Elliptic Operators. 1997 Bass: Probabilistic Techniques in Analysis. 1995 Berglund/Gentz: Noise-Induced Phenomena in Slow-Fast Dynamical Systems: A Sample-Paths Approach. 2006 Biagini/Hu/Øksendal/Zhang: Stochastic Calculus for Fractional Brownian Motion and Applications. 2008 Chen: Eigenvalues, Inequalities and Ergodic Theory. 2005 Costa/Fragoso/Marques: Discrete-Time Markov Jump Linear Systems. 2005 Daley/Vere-Jones: An Introduction to the Theory of Point Processes I: Elementary Theory and Methods. 2nd ed. 2003, corr. 2nd printing 2005 Daley/Vere-Jones: An Introduction to the Theory of Point Processes II: General Theory and Structure. 2nd ed. 2008 de la Peña/Gine: Decoupling: From Dependence to Independence, Randomly Stopped Processes, U-Statistics and Processes, Martingales and Beyond. 1999 de la Peña/Lai/Shao: Self-Normalized Processes. 2009 Del Moral: Feynman-Kac Formulae. Genealogical and Interacting Particle Systems with Applications. 2004 Durrett: Probability Models for DNA Sequence Evolution. 2002, 2nd ed. 2008 Ethier: The Doctrine of Chances. Probabilistic Aspects of Gambling. 2010 Feng: The Poisson–Dirichlet Distribution and Related Topics. 2010 Galambos/Simonelli: Bonferroni-Type Inequalities with Equations. 1996 Gani (ed.): The Craft of Probabilistic Modelling. A Collection of Personal Accounts. 1986 Gut: Stopped Random Walks. Limit Theorems and Applications. 1987 Guyon: Random Fields on a Network. Modeling, Statistics and Applications. 1995 Kallenberg: Foundations of Modern Probability. 1997, 2nd ed. 2002 Kallenberg: Probabilistic Symmetries and Invariance Principles. -

A Tricentenary History of the Law of Large Numbers

Bernoulli 19(4), 2013, 1088–1121 DOI: 10.3150/12-BEJSP12 arXiv:1309.6488v1 [math.ST] 25 Sep 2013 A Tricentenary history of the Law of Large Numbers EUGENE SENETA School of Mathematics and Statistics FO7, University of Sydney, NSW 2006, Australia. E-mail: [email protected] The Weak Law of Large Numbers is traced chronologically from its inception as Jacob Bernoulli’s Theorem in 1713, through De Moivre’s Theorem, to ultimate forms due to Uspensky and Khinchin in the 1930s, and beyond. Both aspects of Jacob Bernoulli’s Theorem: 1. As limit theorem (sample size n → ∞), and: 2. Determining sufficiently large sample size for specified precision, for known and also unknown p (the inversion problem), are studied, in frequentist and Bayesian settings. The Bienaymé–Chebyshev Inequality is shown to be a meeting point of the French and Russian directions in the history. Particular emphasis is given to less well-known aspects especially of the Russian direction, with the work of Chebyshev, Markov (the organizer of Bicentennial celebrations), and S.N. Bernstein as focal points. Keywords: Bienaymé–Chebyshev Inequality; Jacob Bernoulli’s Theorem; J.V. Uspensky and S.N. Bernstein; Markov’s Theorem; P.A. Nekrasov and A.A. Markov; Stirling’s approximation 1. Introduction 1.1. Jacob Bernoulli’s Theorem Jacob Bernoulli’s Theorem was much more than the first instance of what came to be known in later times as the Weak Law of Large Numbers (WLLN). In modern notation Bernoulli showed that, for fixed p, any given small positive number ε, and any given large positive number c (for example c = 1000), n may be specified so that: $P\left(\left|\frac{X}{n} - p\right| > \varepsilon\right) < \frac{1}{c+1}$ (1) for $n \ge n_0(\varepsilon, c)$. -
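
The sample-size statement in (1) can be made concrete with a small sketch. It does not reproduce Bernoulli's own argument; it assumes the Bienaymé–Chebyshev Inequality mentioned in the abstract, which gives P(|X/n − p| > ε) ≤ p(1 − p)/(nε²), so any n > (c + 1)p(1 − p)/ε² suffices. The function names and the example values p = 1/2, ε = 1/10, c = 9 are illustrative assumptions, not taken from the paper.

```python
from fractions import Fraction
from math import comb, floor


def chebyshev_sample_size(p, eps, c):
    """Smallest n with p(1-p)/(n*eps^2) < 1/(c+1), i.e. n > (c+1)p(1-p)/eps^2.

    Assumption: the bound comes from the Bienayme-Chebyshev inequality,
    not from Bernoulli's original (different) argument.
    """
    threshold = (c + 1) * p * (1 - p) / eps**2
    return floor(threshold) + 1


def exact_tail(n, p, eps):
    """Exact P(|X/n - p| > eps) for X ~ Binomial(n, p), using rationals."""
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n + 1)
        if abs(Fraction(k, n) - p) > eps
    )


# Hypothetical example values (exact rationals avoid rounding issues).
p, eps, c = Fraction(1, 2), Fraction(1, 10), 9
n0 = chebyshev_sample_size(p, eps, c)
tail = exact_tail(n0, p, eps)
print(n0, float(tail), tail < Fraction(1, c + 1))
```

The Chebyshev-based n is sufficient but generally far from the smallest possible; the exact tail computed at that n is typically much smaller than 1/(c + 1).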

Maty's Biography of Abraham De Moivre, Translated

Statistical Science 2007, Vol. 22, No. 1, 109–136 DOI: 10.1214/088342306000000268 © Institute of Mathematical Statistics, 2007 Maty’s Biography of Abraham De Moivre, Translated, Annotated and Augmented David R. Bellhouse and Christian Genest Abstract. November 27, 2004, marked the 250th anniversary of the death of Abraham De Moivre, best known in statistical circles for his famous large-sample approximation to the binomial distribution, whose generalization is now referred to as the Central Limit Theorem. De Moivre was one of the great pioneers of classical probability theory. He also made seminal contributions in analytic geometry, complex analysis and the theory of annuities. The first biography of De Moivre, on which almost all subsequent ones have since relied, was written in French by Matthew Maty. It was published in 1755 in the Journal britannique. The authors provide here, for the first time, a complete translation into English of Maty’s biography of De Moivre. New material, much of it taken from modern sources, is given in footnotes, along with numerous annotations designed to provide additional clarity to Maty’s biography for contemporary readers. INTRODUCTION Matthew Maty (1718–1776) was born of Huguenot parentage in the city of Utrecht, in Holland. He studied medicine and philosophy at the University of Leiden before immigrating to England in 1740. After a decade in London, he edited for six years the Journal britannique, a French-language publication [...] émigrés that both of them are known to have frequented. In the weeks prior to De Moivre’s death, Maty began to interview him in order to write his biography. De Moivre died shortly after giving his reminiscences up to the late 1680s and Maty completed the task using only his own knowledge of the man and De Moivre’s published work. -

History of Statistics 2. Origin of the Normal Curve – Abraham DeMoivre

History of Statistics 2. Origin of the Normal Curve – Abraham DeMoivre (1667-1754) The normal curve is perhaps the most important probability graph in all of statistics. Its formula is shown here with a familiar picture. The “e” in the formula is the irrational number we use as the base for natural logarithms. μ and σ are the mean and standard deviation of the curve. The person who first derived the formula, Abraham DeMoivre (1667-1754), was solving a gambling problem whose solution depended on finding the sum of the terms of a binomial distribution. Later work, especially by Gauss about 1800, used the normal distribution to describe the pattern of random measurement error in observational data. Neither man used the name “normal curve.” That expression did not appear until the 1870s. The normal curve formula appears in mathematics as a limiting case of what would happen if you had an infinite number of data points. To prove mathematically that some theoretical distribution is actually normal you have to be familiar with the idea of limits, with adding up more and more smaller and smaller terms. This process is a fundamental component of calculus. So it’s not surprising that the formula first appeared at the same time the basic ideas of calculus were being developed in England and Europe in the late 17th and early 18th centuries. The normal curve formula first appeared in a paper by DeMoivre in 1733. He lived in England, having left France when he was about 20 years old. Many French Protestants, the Huguenots, left France when the King canceled the Edict of Nantes which had given them civil rights. -
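
The formula referred to in this excerpt did not survive extraction. For orientation, the standard modern form of the normal density, written with the same symbols e, μ and σ that the text describes, is presumably what was shown:

$$
f(x) = \frac{1}{\sigma\sqrt{2\pi}}\; e^{-\frac{(x-\mu)^2}{2\sigma^2}}
$$

Here x is the point at which the curve is evaluated; the choice of the letter x is an assumption, since the original figure is not available.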

6. The Valuation of Life Annuities

6. The Valuation of Life Annuities. Origins of Life Annuities. The origins of life annuities can be traced to ancient times. Socially determined rules of inheritance usually meant a sizable portion of the family estate would be left to a predetermined individual, often the first born son. Bequests such as usufructs, maintenances and life incomes were common methods of providing security to family members and others not directly entitled to inheritances.¹ One element of the Falcidian law of ancient Rome, effective from 40 BC, was that the rightful heir(s) to an estate was entitled to not less than one quarter of the property left by a testator, the so-called “Falcidian fourth” (Bernoulli 1709, ch. 5). This created a judicial quandary requiring any other legacies to be valued and, if the total legacy value exceeded three quarters of the value of the total estate, these bequests had to be reduced proportionately. The Falcidian fourth created a legitimate valuation problem for jurists because many types of bequests did not have observable market values. Because there was not a developed market for life annuities, this was the case for bequests of life incomes. Some method was required to convert bequests of life incomes to a form that could be valued. In Roman law, this problem was solved by the jurist Ulpian (Domitianus Ulpianus, ?-228) who devised a table for the conversion of life annuities to annuities certain, a security for which there was a known method of valuation. Ulpian's Conversion Table is given by Greenwood (1940) and Hald (1990, p.117):

| Age of annuitant (years) | 0-19 | 20-24 | 25-29 | 30-34 | 35-39 | 40...49 | 50-54 | 55-59 | 60- |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Comparable term to maturity of an annuity certain (years) | 30 | 28 | 25 | 22 | 20 | 19...10 | 9 | 7 | 5 |

The connection between age and the pricing of life annuities is a fundamental insight of Ulpian's table. -

Ars Conjectandi Three Hundred Years On

Significance - Statistics making sense. Bernoulli and the Foundations of Statistics. Can you correct a 300-year-old error? Julian Champkin. Ars Conjectandi is not a book that non-statisticians will have heard of, nor one that many statisticians will have heard of either. The title means ‘The Art of Conjecturing’ – which in turn means roughly ‘What You Can Work Out From the Evidence.’ But it is worth statisticians celebrating it, because it is the book that gave an adequate mathematical foundation to their discipline, and it was published 300 years ago this year. More people will have heard of its author. Jacob Bernoulli was one of a huge mathematical family of Bernoullis. In physics, aircraft engineers base everything they do on Bernoulli’s principle. It explains how aircraft wings give lift, is the basis of fluid dynamics, and was discovered by Jacob’s nephew Daniel Bernoulli. [Portrait caption: Jacob Bernoulli (1654-1705)] Johann Bernoulli made important advances in mathematical calculus. He was Jacob’s younger brother – the two fell out bitterly. Johann fell out with his fluid-dynamics son Daniel, too, and even falsified the date on a book of his own to try to show that he had discovered the principle first. But our statistical Bernoulli is Jacob. In the higher reaches of pure mathematics he is loved for Bernoulli numbers, which are fiendishly complicated things which I do not pretend to understand but which apparently underpin number theory. In statistics, his contribution was two-fold: Bernoulli trials are, essentially, coinflips repeated lots of times. Toss a fair coin ten times, and you might well get six heads and four tails rather than an exact 5/5 split. -
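
The coin-toss remark can be checked with a short calculation. The sketch below assumes independent tosses of a fair coin and uses the binomial probability C(n, k) p^k (1 − p)^(n − k); the function name `heads_probability` is illustrative only.

```python
from fractions import Fraction
from math import comb


def heads_probability(n, k, p=Fraction(1, 2)):
    """Probability of exactly k heads in n independent tosses with P(head) = p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


six_four = heads_probability(10, 6)   # six heads, four tails
five_five = heads_probability(10, 5)  # exact 5/5 split
print(six_four, float(six_four))
print(five_five, float(five_five))
```

Six heads in ten tosses has probability 210/1024, about 0.205, while the exact 5/5 split has probability 252/1024, about 0.246, so the even split is the single most likely outcome yet still occurs in fewer than one run in four.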

The Significance of Jacob Bernoulli's Ars Conjectandi

The Significance of Jacob Bernoulli’s Ars Conjectandi for the Philosophy of Probability Today. Glenn Shafer, Rutgers University. More than 300 years ago, in a fertile period from 1684 to 1689, Jacob Bernoulli worked out a strategy for applying the mathematics of games of chance to the domain of probability. That strategy came into public view in August 1713, eight years after Jacob’s death at the age of 50, when his masterpiece, Ars Conjectandi, was published. Unfortunately, the message of Ars Conjectandi was not fully absorbed at the time of its publication, and it has been obscured by various intellectual fashions during the past 300 years. The theme of this article is that Jacob’s contributions to the philosophy of probability are still fresh in many ways. After 300 years, we still have something to learn from his way of seeing the problems and from his approach to solving them. And the fog that obscured his ideas is clearing. Our intellectual situation today may permit us a deeper appreciation of Jacob’s message than any of our predecessors were able to achieve. I will pursue this theme chronologically. First, I will review Jacob’s problem situation and how he dealt with it. Second, I will look at the changing obstacles to the reception of his message over the last three centuries. Finally, I will make a case for paying more attention today. [Figure 1: Jacob’s contemporaries. Newton (1642–1727), Leibniz (1646–1716), Jacob (1654–1705), Johann (1667–1748), Nicolaus (1687–1759).] 1. Jacob’s Problem Situation. Jacob Bernoulli was born in Basel on the 27th of December of 1654, the same year probability was born in Paris. -

Abraham DE MOIVRE B. 26 May 1667 - D

Abraham DE MOIVRE b. 26 May 1667 - d. 27 November 1754 Summary. The first textbook of a calculus of probabilities to contain a form of local central limit theorem grew out of the activities of the lonely Huguenot de Moivre who was forced up to old age to make his living by solving problems of games of chance and of annuities on lives for his clients whom he used to meet in a London coffeehouse. Due to the fact that he was born as Abraham Moivre and educated in France for the first 18 years of his life and that he later changed his name and his nationality in order to become, as a Mr. de Moivre, an English citizen, biographical interest in him seems to be relatively restricted compared with his significance as one of the outstanding mathematicians of his time. Nearly all contemporary biographical information on de Moivre goes back to the biography by Matthew Maty, member, later secretary of the Royal Society, and a close friend of de Moivre’s in old age. Abraham Moivre stemmed from a Protestant family. His father was a Protestant surgeon from Vitry-le-François in the Champagne. From the age of five to eleven he was educated by the Catholic Pères de la doctrine Chrétienne. Then he moved to the Protestant Academy at Sedan where he mainly studied Greek. After the latter was forced to close in 1681 for its profession of faith, Moivre continued his studies at Saumur between 1682 and 1684 before joining his parents who had meanwhile moved to Paris. -

A. De Moivre: 'De Mensura Sortis' Or 'On the Measurement of Chance' Author(S): A

A. de Moivre: 'De Mensura Sortis' or 'On the Measurement of Chance' Author(s): A. Hald, Abraham de Moivre and Bruce McClintock Source: International Statistical Review / Revue Internationale de Statistique, Vol. 52, No. 3 (Dec., 1984), pp. 229-262 Published by: International Statistical Institute (ISI) Stable URL: https://www.jstor.org/stable/1403045 Printed in Great Britain © International Statistical Institute [Philosophical Transactions (Numb. 329) for the months of January, February and March, 1711] Commentary on 'De Mensura Sortis' A. Hald, Institute of Mathematical Statistics, University of Copenhagen, Universitetsparken 5, DK-2100 Copenhagen Ø, Denmark Summary De Moivre's first work on probability, De Mensura Sortis seu, de Probabilitate Eventuum in Ludis a Casu Fortuito Pendentibus, is translated into English. -

Självständiga Arbeten I Matematik

Independent Projects in Mathematics (Självständiga arbeten i matematik), Department of Mathematics, Stockholm University. The emergence of probability theory, by Jimmy Calén. 2015, No. 5. Department of Mathematics, Stockholm University, 106 91 Stockholm. Independent project in mathematics, 15 higher education credits, first cycle. Supervisor: Paul Vaderlind. 2015. Abstract: This bachelor thesis will examine how, and for what reason, some of the fundamental probabilistic concepts emerged. The main focus will be on the transition from empiricism to science during the 17th and 18th centuries. Acknowledgements: I would first like to express my gratitude to my supervisor Paul Vaderlind for suggesting a topic in consummate accordance with my main mathematical interest, and also for his guidance during the writing process. Special thanks go to my loved ones, for their support and company during my studies. Contents: 1 Introduction; 2 Philosophical exposition of the probability concept; 3 Games of chance in early times; 4 The faltering steps before the 17th century's breakthrough; 5 Blaise Pascal & Pierre de Fermat – The 1654 foundation of probability theory -

Ars Conjectandi

DIDACTICS OF MATHEMATICS No. 7 (11) 2010 THE ART OF CONJECTURING (ARS CONJECTANDI): ON THE HISTORICAL ORIGIN OF NORMAL DISTRIBUTION Ludomir Laudański Abstract. The paper offers a range of historic investigations regarding the normal distribution, frequently also referred to as the Gaussian distribution. The first one is the Error Analysis, the second one is the Probability Theory with its old exposition called the Theory of Chance. The latter is regarded as the essential one, despite the fact that the origin of the Error Theory can be associated with Galileo Galilei and his Dialogo sopra i due massimi sistemi del mondo Tolemaico e Copernicano. However, the normal distribution regarded in this way was not found before 1808-9 as a result of the combined efforts of Robert Adrain and his Researches Concerning Isotomous Curves on the one hand and Carl F. Gauss and his Theoria motus corporum coelestium in sectionibus conicis Solem ambientium on the other. While considering the Theory of Chance, it is necessary to acknowledge The Doctrine of Chances of Abraham de Moivre (1733) and the proof contained in this work showing the normal distribution derived as the limiting case of the binomial distribution with the number of Bernoulli trials tending to infinity. Therefore the simplest conclusion of the paper is: the normal distribution should be rather attributed to Abraham de Moivre than to Carl Friedrich Gauss. Keywords: binomial distribution, Errors Theory, normal distribution. Personal statement. One day I was asked what the origin of Gaussian distribution was and whether I could briefly explain it. After one month of efforts dedicated to this question I had to confess that my hasty answer was inaccurate and reflected my underestimation of the problem. -
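
The limiting relationship described in this abstract can be illustrated numerically. The sketch below is not de Moivre's proof; it simply compares the binomial probabilities with a normal density of matching mean np and standard deviation sqrt(np(1 − p)) and reports the largest pointwise gap. The function names and the choice p = 0.5 are illustrative assumptions.

```python
from math import comb, exp, pi, sqrt


def binomial_pmf(n, k, p):
    """P(X = k) for X ~ Binomial(n, p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def normal_density(x, mu, sigma):
    """Normal density with mean mu and standard deviation sigma."""
    return exp(-((x - mu) ** 2) / (2 * sigma**2)) / (sigma * sqrt(2 * pi))


def max_abs_gap(n, p):
    """Largest |binomial pmf - matching normal density| over k = 0..n."""
    mu, sigma = n * p, sqrt(n * p * (1 - p))
    return max(
        abs(binomial_pmf(n, k, p) - normal_density(k, mu, sigma))
        for k in range(n + 1)
    )


# The gap shrinks as the number of Bernoulli trials grows.
for n in (10, 100, 1000):
    print(n, max_abs_gap(n, 0.5))
```

Floating-point arithmetic is adequate here for n up to about 1000; much larger n would need logarithms or exact rationals to avoid overflow and underflow.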

Derivation of Gaussian Probability Distribution: a New Approach

Applied Mathematics, 2020, 11, 436-446 https://www.scirp.org/journal/am ISSN Online: 2152-7393 ISSN Print: 2152-7385 Derivation of Gaussian Probability Distribution: A New Approach A. T. Adeniran¹, O. Faweya²*, T. O. Ogunlade³, K. O. Balogun⁴ ¹Department of Statistics, University of Ibadan, Ibadan, Nigeria ²Department of Statistics, Ekiti State University (EKSU), Ado-Ekiti, Nigeria ³Department of Mathematics, Ekiti State University (EKSU), Ado-Ekiti, Nigeria ⁴Department of Statistics, Federal School of Statistics, Ibadan, Nigeria How to cite this paper: Adeniran, A.T., Faweya, O., Ogunlade, T.O. and Balogun, K.O. (2020) Derivation of Gaussian Probability Distribution: A New Approach. Applied Mathematics, 11, 436-446. https://doi.org/10.4236/am.2020.116031 Received: March 17, 2020 Accepted: May 29, 2020 Published: June 1, 2020 Copyright © 2020 by author(s) and Scientific Research Publishing Inc. This work is licensed under the Creative Commons Attribution International License (CC BY 4.0). http://creativecommons.org/licenses/by/4.0/ Open Access Abstract The famous de Moivre’s Laplace limit theorem proved the probability density function of Gaussian distribution from binomial probability mass function under specified conditions. De Moivre’s Laplace approach is cumbersome as it relies heavily on many lemmas and theorems. This paper invented an alternative and less rigorous method of deriving Gaussian distribution from basic random experiment conditional on some assumptions. Keywords De Moivres Laplace Limit Theorem, Binomial Probability Mass Function, Gaussian Distribution, Random Experiment 1. Introduction A well celebrated, fundamental probability distribution for the class of continuous functions is the classical Gaussian distribution named after the German Mathematician Karl Friedrich Gauss in 1809.