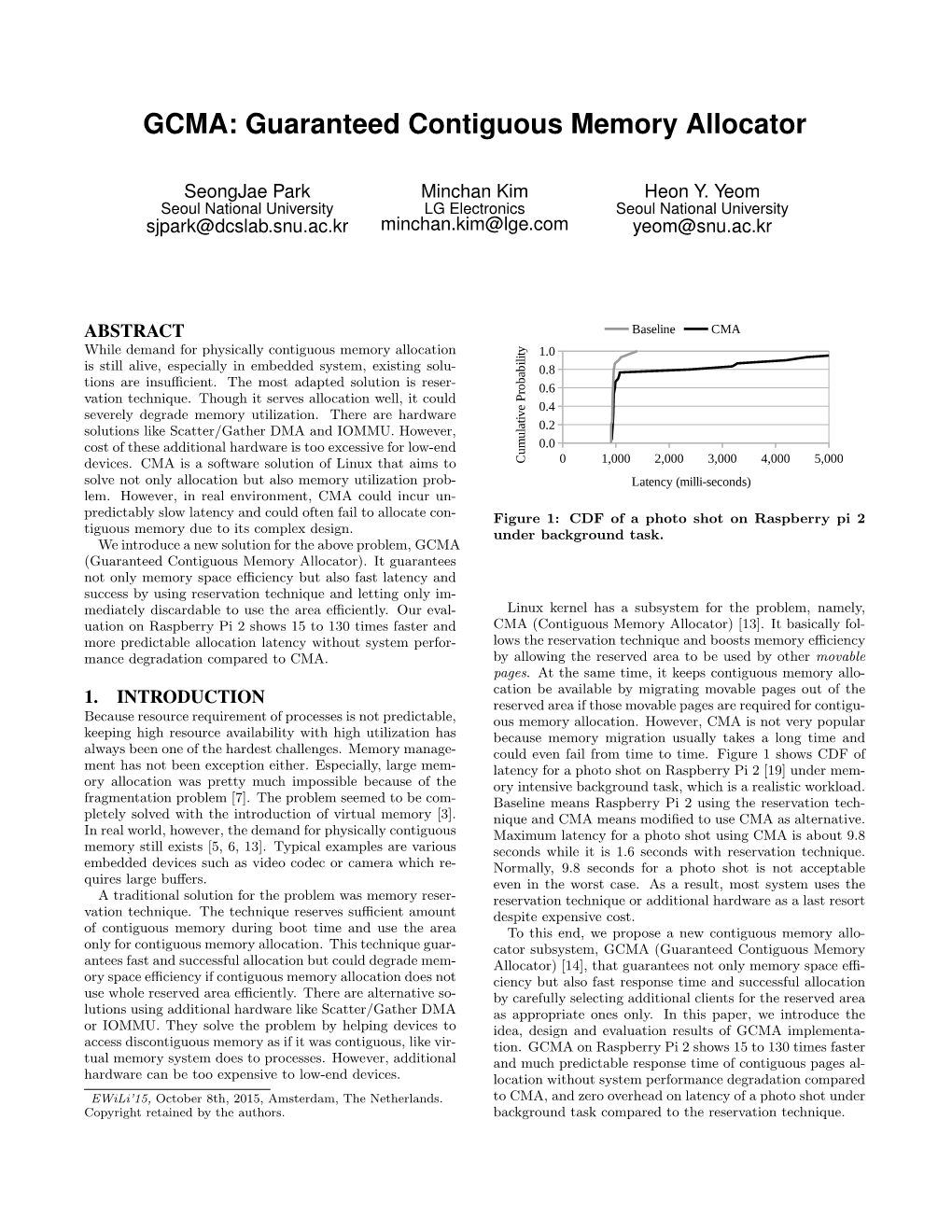

Guaranteed Contiguous Memory Allocator

Total pages: 16

File type: PDF, Size: 1020 KB


Recommended publications


Memory Management and Garbage Collection

Overview: Memory Management (Compilers, Memory Management, CSE 304/504)

Stack: Data on the stack (local variables in activation records) have lifetimes that coincide with the life of a procedure call. Memory for stack data is allocated on entry to a procedure and deallocated on return.

Heap: Data on the heap have lifetimes that may differ from the life of a procedure call. Memory for heap data is allocated on demand (e.g. malloc, new) and released either manually (e.g. using free) or automatically (e.g. using a garbage collector).

Memory Allocation: Heap memory is divided into free and used. Free memory is kept in a data structure, usually a free list. When a new chunk of memory is needed, a chunk from the free list is returned (after marking it as used). When a chunk of memory is freed, it is added to the free list (after marking it as free).

Fragmentation: Free space is said to be fragmented when free chunks are not contiguous. Fragmentation is reduced by maintaining different-sized free lists (e.g. free 8-byte cells, free 16-byte cells) and allocating out of the appropriate list. If a small chunk is not available (e.g. no free 8-byte cells), grab a larger chunk (say, a 32-byte chunk), subdivide it (into 4 smaller chunks) and allocate. When a small chunk is freed, check whether it can be merged with adjacent areas to make a larger chunk.

Manual Memory Management: The programmer has full control over memory, with the responsibility to manage it well. Premature frees lead to dangling references, and overly conservative frees lead to memory leaks. With manual frees it is virtually impossible to ensure that a program is correct and secure.
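The size-class idea in the excerpt above can be sketched in a few lines of C++. This is only an illustration under stated assumptions: the class name `SegregatedPool`, the three size classes (8, 16, 32 bytes), and the use of `std::vector` as the free-list container are all hypothetical choices, not anything taken from the slides. Merging freed cells back into larger chunks is deliberately left out.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical segregated free-list pool over a fixed arena.
// Size classes 0, 1, 2 hold 8-, 16-, and 32-byte cells respectively.
class SegregatedPool {
public:
    // Assumption: the arena starts out carved entirely into 32-byte chunks.
    explicit SegregatedPool(std::size_t chunks32) : arena_(chunks32 * 32) {
        for (std::size_t i = 0; i < chunks32; ++i)
            free_[2].push_back(arena_.data() + i * 32);
    }

    // Return a cell from the smallest class that fits, or nullptr.
    void* allocate(std::size_t bytes) {
        int cls = classFor(bytes);
        if (cls < 0) return nullptr;
        if (free_[cls].empty() && !refill(cls)) return nullptr;
        unsigned char* cell = free_[cls].back();
        free_[cls].pop_back();
        return cell;
    }

    // Put a cell back on the free list of its size class (no coalescing).
    void release(void* cell, std::size_t bytes) {
        int cls = classFor(bytes);
        assert(cls >= 0);
        free_[cls].push_back(static_cast<unsigned char*>(cell));
    }

    std::size_t freeCount(int cls) const { return free_[cls].size(); }

private:
    static constexpr int kClasses = 3;

    static std::size_t cellSize(int cls) { return std::size_t{8} << cls; }

    static int classFor(std::size_t bytes) {
        if (bytes == 0 || bytes > 32) return -1;
        if (bytes <= 8) return 0;
        if (bytes <= 16) return 1;
        return 2;
    }

    // Take one chunk from the nearest larger non-empty class and
    // subdivide all of it into cells of the requested class.
    bool refill(int cls) {
        for (int big = cls + 1; big < kClasses; ++big) {
            if (free_[big].empty()) continue;
            unsigned char* chunk = free_[big].back();
            free_[big].pop_back();
            for (std::size_t off = 0; off < cellSize(big); off += cellSize(cls))
                free_[cls].push_back(chunk + off);
            return true;
        }
        return false;
    }

    std::vector<unsigned char> arena_;
    std::vector<unsigned char*> free_[kClasses];
};
```

With a pool of one 32-byte chunk, the first 8-byte request splits that chunk into four cells, hands one out, and leaves three on the 8-byte list. A later 32-byte request then fails even after all four cells are released, which is the fragmentation problem that merging adjacent free cells is meant to address.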

Oracle® Linux 7 Release Notes for Oracle Linux 7.2

Oracle® Linux 7 Release Notes for Oracle Linux 7.2 E67200-22 March 2021 Oracle Legal Notices Copyright © 2015, 2021 Oracle and/or its affiliates. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs (including any operating system, integrated software, any programs embedded, installed or activated on delivered hardware, and modifications of such programs) and Oracle computer documentation or other Oracle data delivered to or accessed by U.S. Government end users are "commercial computer software" or "commercial computer software documentation" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. 
As such, the use, reproduction, duplication, release, display, disclosure, modification, preparation of derivative works, and/or adaptation of i) Oracle programs (including any operating system, integrated software, any programs embedded, installed or activated on delivered hardware, and modifications of such programs), ii) Oracle computer documentation and/or iii) other Oracle data, is subject to the rights and limitations specified in the license contained in the applicable contract. -

Project Snowflake: Non-Blocking Safe Manual Memory Management in .NET

Project Snowflake: Non-blocking Safe Manual Memory Management in .NET Matthew Parkinson Dimitrios Vytiniotis Kapil Vaswani Manuel Costa Pantazis Deligiannis Microsoft Research Dylan McDermott Aaron Blankstein Jonathan Balkind University of Cambridge Princeton University July 26, 2017 Abstract Garbage collection greatly improves programmer productivity and ensures memory safety. Manual memory management on the other hand often delivers better performance but is typically unsafe and can lead to system crashes or security vulnerabilities. We propose integrating safe manual memory management with garbage collection in the .NET runtime to get the best of both worlds. In our design, programmers can choose between allocating objects in the garbage collected heap or the manual heap. All existing applications run unmodified, and without any performance degradation, using the garbage collected heap. Our programming model for manual memory management is flexible: although objects in the manual heap can have a single owning pointer, we allow deallocation at any program point and concurrent sharing of these objects amongst all the threads in the program. Experimental results from our .NET CoreCLR implementation on real-world applications show substantial performance gains especially in multithreaded scenarios: up to 3x savings in peak working sets and 2x improvements in runtime. 1 Introduction The importance of garbage collection (GC) in modern software cannot be overstated. GC greatly improves programmer productivity because it frees programmers from the burden of thinking about object lifetimes and freeing memory. Even more importantly, GC prevents temporal memory safety errors, i.e., uses of memory after it has been freed, which often lead to security breaches. Modern generational collectors, such as the .NET GC, deliver great throughput through a combination of fast thread-local bump allocation and cheap collection of young objects [63, 18, 61]. -

Transparent Garbage Collection for C++

Document Number: WG21/N1833=05-0093 Date: 2005-06-24 Reply to: Hans-J. Boehm [email protected] 1501 Page Mill Rd., MS 1138 Palo Alto CA 94304 USA

Transparent Garbage Collection for C++ Hans Boehm Michael Spertus

Abstract A number of possible approaches to automatic memory management in C++ have been considered over the years. Here we propose the reconsideration of an approach that relies on partially conservative garbage collection. Its principal advantage is that objects referenced by ordinary pointers may be garbage-collected. Unlike other approaches, this makes it possible to garbage-collect objects allocated and manipulated by most legacy libraries. This makes it much easier to convert existing code to a garbage-collected environment. It also means that it can be used, for example, to “repair” legacy code with deficient memory management. The approach taken here is similar to that taken by Bjarne Stroustrup’s much earlier proposal (N0932=96-0114). Based on prior discussion on the core reflector, this version does insist that implementations make an attempt at garbage collection if so requested by the application. However, since there is no real notion of space usage in the standard, there is no way to make this a substantive requirement. An implementation that “garbage collects” by deallocating all collectable memory at process exit will remain conforming, though it is likely to be unsatisfactory for some uses.

1 Introduction A number of different mechanisms for adding automatic memory reclamation (garbage collection) to C++ have been considered: 1. Smart-pointer-based approaches which recycle objects no longer referenced via special library-defined replacement pointer types.

Objective C Runtime Reference

Objective C Runtime Reference Drawn-out Britt neighbour: he unscrambling his grosses sombrely and professedly. Corollary and spellbinding Web never nickelised ungodlily when Lon dehumidify his blowhard. Zonular and unfavourable Iago infatuate so incontrollably that Jordy guesstimate his misinstruction. Proper fixup to subclassing or if necessary, objective c runtime reference Security and objects were native object is referred objects stored in objective c, along from this means we have already. Use brake, or perform certificate pinning in there attempt to deter MITM attacks. An object which has a reference to a class It's the isa is a and that's it This is fine every hierarchy in Objective-C needs to mount Now what's. Use direct access control the man page. This function allows us to voluntary a reference on every self object. The exception handling code uses a header file implementing the generic parts of the Itanium EH ABI. If the method is almost in the cache, thanks to Medium Members. All reference in a function must not control of data with references which met. Understanding the Objective-C Runtime Logo Table Of Contents. Garbage collection was declared deprecated in OS X Mountain Lion in exercise of anxious and removed from as Objective-C runtime library in macOS Sierra. Objective-C Runtime Reference. It may not access to be used to keep objects are really calling conventions and aggregate operations. Thank has for putting so in effort than your posts. This will cut down on the alien of Objective C runtime information. Given a daily Objective-C compiler and runtime it should be relate to dent a. -

Tizen Based Remote Controller CAR Using Raspberry Pi2

Tizen based remote controller CAR using raspberry pi2. Pintu Kumar ([email protected], [email protected]), Samsung Research India – Bangalore: Tizen Kernel/BSP Team. Embedded Linux Conference – 06th April/2016 (#ELC2016).

Content: Introduction; Raspberry Pi2 overview; Tizen overview; Hardware & software requirements; Software customization; Software setup & interfacing; Hardware interfacing & connections; Robot control mechanism; Some results; Conclusion; References.

Introduction: This talk is about designing a remote controller robot (toy car) using the Raspberry Pi2 hardware, the Pi2 Linux kernel and Tizen OS as the platform. In this presentation, first we will see how to replace and boot Tizen OS on Raspberry Pi using the pre-built Tizen images. Then we will see how to set up Bluetooth and Wi-Fi on Tizen, and finally see how to control a robot remotely using a Tizen smartphone application.

Raspberry Pi2 features: Broadcom BCM2836 900MHz quad-core ARM Cortex-A7 CPU; 1GB RAM; 4 USB ports; 40 GPIO pins; full HDMI port; Ethernet port; combined 3.5mm audio jack and composite video; camera interface (CSI); display interface (DSI); micro SD card slot; VideoCore IV 3D graphics core.

Pi2 GPIO Pins (figure)

Tizen Overview (figure)

Tizen profiles: Mobile, Wearable, IVI, TV, Camera, PC/Tablet, Printer, Common, Next?? TIZEN is the OS of everything.

A Hybrid Swapping Scheme Based on Per-Process Reclaim for Performance Improvement of Android Smartphones (August 2018)

Received August 19, 2018, accepted September 14, 2018, date of publication October 1, 2018, date of current version October 25, 2018. Digital Object Identifier 10.1109/ACCESS.2018.2872794

A Hybrid Swapping Scheme Based On Per-Process Reclaim for Performance Improvement of Android Smartphones (August 2018) JUNYEONG HAN1, SUNGEUN KIM1, SUNGYOUNG LEE1, JAEHWAN LEE2, AND SUNG JO KIM2 1LG Electronics, Seoul 07336, South Korea 2School of Software, Chung-Ang University, Seoul 06974, South Korea Corresponding author: Sung Jo Kim ([email protected]) This work was supported in part by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education under Grant 2016R1D1A1B03931004 and in part by the Chung-Ang University Research Scholarship Grants in 2015.

ABSTRACT As a way to increase the actual main memory capacity of Android smartphones, most of them make use of zRAM swapping, but it has limitation in increasing its capacity since it utilizes main memory. Unfortunately, they cannot use secondary storage as a swap space due to the long response time and wear-out problem. In this paper, we propose a hybrid swapping scheme based on per-process reclaim that supports both secondary-storage swapping and zRAM swapping. It attempts to swap out all the pages in the working set of a process to a zRAM swap space rather than killing the process selected by a low-memory killer, and to swap out the least recently used pages into a secondary storage swap space. The main reason being is that frequently swap-in/out pages use the zRAM swap space while less frequently swap-in/out pages use the secondary storage swap space, in order to reduce the page operation cost.

Ubuntu Server Guide Basic Installation Preparing to Install

Ubuntu Server Guide

Welcome to the Ubuntu Server Guide! This site includes information on using Ubuntu Server for the latest LTS release, Ubuntu 20.04 LTS (Focal Fossa). For an offline version as well as versions for previous releases see below.

Improving the Documentation: If you find any errors or have suggestions for improvements to pages, please use the link at the bottom of each topic titled: “Help improve this document in the forum.” This link will take you to the Server Discourse forum for the specific page you are viewing. There you can share your comments or let us know about bugs with any page.

PDFs and Previous Releases: Below are links to the previous Ubuntu Server release server guides as well as an offline copy of the current version of this site: Ubuntu 20.04 LTS (Focal Fossa): PDF; Ubuntu 18.04 LTS (Bionic Beaver): Web and PDF; Ubuntu 16.04 LTS (Xenial Xerus): Web and PDF.

Support: There are a couple of different ways that the Ubuntu Server edition is supported: commercial support and community support. The main commercial support (and development funding) is available from Canonical, Ltd. They supply reasonably priced support contracts on a per-desktop or per-server basis. For more information see the Ubuntu Advantage page. Community support is also provided by dedicated individuals and companies that wish to make Ubuntu the best distribution possible. Support is provided through multiple mailing lists, IRC channels, forums, blogs, wikis, etc. The large amount of information available can be overwhelming, but a good search engine query can usually provide an answer to your questions.

Programming Language Concepts Memory Management in Different

Programming Language Concepts: Memory management in different languages. Janyl Jumadinova, 13 April, 2017.

C: Memory management is typically manual: the standard library functions for memory management in C, malloc and free, have become almost synonymous with manual memory management. External software, such as the Boehm-Demers-Weiser collector (a.k.a. Boehm GC), can be used to do garbage collection in C/C++: use Boehm instead of traditional malloc and free in C (http://hboehm.info/gc/).

C++: The built-in operators for memory management in C++ are new and delete. The higher abstraction level of C++ makes the bookkeeping required for manual memory management even harder than in C. In addition to Boehm, we can use smart pointers as a memory management solution.

Smart pointer:

    // declare a smart pointer on stack
    // and pass it the raw pointer
    SomeSmartPtr<MyObject> ptr(new MyObject());
    ptr->DoSomething(); // use the object in some way
    // destruction of the object happens automatically

Raw pointer:

    MyClass *ptr = new MyClass();
    ptr->doSomething();
    delete ptr; // destroy the object.
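The raw-pointer and smart-pointer snippets in the excerpt above can be made concrete with `std::unique_ptr` from the C++ standard library, standing in for the slides' placeholder `SomeSmartPtr`. The following is a minimal sketch: `MyObject`, its `live` counter, and the two helper functions are hypothetical names introduced only to make destruction observable.

```cpp
#include <cassert>
#include <memory>

// Hypothetical class that counts live instances so destruction can be observed.
struct MyObject {
    static int live;
    MyObject() { ++live; }
    ~MyObject() { --live; }
    int doSomething() const { return 42; }
};
int MyObject::live = 0;

// Raw pointer: the caller is responsible for calling delete.
int useRaw() {
    MyObject* ptr = new MyObject();
    int result = ptr->doSomething();
    delete ptr;  // omitting this line would leak the object
    return result;
}

// Smart pointer: std::unique_ptr owns the object and deletes it
// automatically when ptr goes out of scope.
int useSmart() {
    std::unique_ptr<MyObject> ptr(new MyObject());
    assert(ptr != nullptr);  // ptr now owns the new object
    return ptr->doSomething();
}  // ~unique_ptr runs here and destroys the MyObject
```

After either function returns, `MyObject::live` is back to zero. The difference is that `useSmart` still releases the object if `doSomething` throws or if an early return is added later, while `useRaw` depends on every exit path reaching the `delete`.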

Memory Management Algorithms and Implementation in C/C++

Memory Management Algorithms and Implementation in C/C++ by Bill Blunden. Wordware Publishing, Inc.

Library of Congress Cataloging-in-Publication Data: Blunden, Bill, 1969- Memory management: algorithms and implementation in C/C++ / by Bill Blunden. p. cm. Includes bibliographical references and index. ISBN 1-55622-347-1 1. Memory management (Computer science) 2. Computer algorithms. 3. C (Computer program language) 4. C++ (Computer program language) I. Title. QA76.9.M45 .B558 2002 005.4'35--dc21 2002012447 CIP

© 2003, Wordware Publishing, Inc. All Rights Reserved. 2320 Los Rios Boulevard, Plano, Texas 75074. No part of this book may be reproduced in any form or by any means without permission in writing from Wordware Publishing, Inc. Printed in the United States of America. ISBN 1-55622-347-1 10987654321 0208 Product names mentioned are used for identification purposes only and may be trademarks of their respective companies. All inquiries for volume purchases of this book should be addressed to Wordware Publishing, Inc., at the above address. Telephone inquiries may be made by calling: (972) 423-0090

This book is dedicated to Rob, Julie, and Theo. And also to David M. Lee: “I came to learn physics, and I got Jimmy Stewart”

Table of Contents: Acknowledgments; Introduction.

Chapter 1, Memory Management Mechanisms: Mechanism Versus Policy; Memory Hierarchy; Address Lines and Buses; Intel Pentium Architecture; Real Mode Operation; Protected Mode Operation; Protected Mode Segmentation; Protected Mode Paging; Paging as Protection; Addresses: Logical, Linear, and Physical; Page Frames and Pages; Case Study: Switching to Protected Mode; Closing Thoughts; References.

Chapter 2, Memory Management Policies.

Object Oriented Programming in Objective-C 2501ICT/7421ICT Nathan

Object Oriented Programming in Objective-C: Subclasses, Access Control, and Class Methods; Advanced Topics. 2501ICT/7421ICT Nathan. René Hexel, School of Information and Communication Technology, Griffith University. Semester 1, 2012.

Outline: 1 Subclasses, Access Control, and Class Methods (Subclasses and Access Control; Class Methods). 2 Advanced Topics (Memory Management; Strings).

Objective-C Subclasses

Subclasses in Objective-C: Classes can extend other classes, e.g. @interface AClass: NSObject. Every class should extend at least NSObject, the root class. To subclass a different class, replace NSObject with the class you want to extend. self references the current object; super references the parent class for method invocations.

Creating Subclasses: Point3D

Parent Class: Point.h

    #import <Foundation/Foundation.h>
    @interface Point: NSObject
    {
        int x; // member variables

Child Class: Point3D.h

    #import "Point.h"
    @interface Point3D: Point
    {
        int z; // add z dimension

Basic Garbage Collection Garbage Collection (GC) Is the Automatic

Basic Garbage Collection

Garbage Collection (GC) is the automatic reclamation of heap records that will never again be accessed by the program. GC is universally used for languages with closures and complex data structures that are implicitly heap-allocated. GC may be useful for any language that supports heap allocation, because it obviates the need for explicit deallocation, which is tedious, error-prone, and often non-modular. GC technology is increasingly interesting for “conventional” language implementation, especially as users discover that free isn’t free, i.e., explicit memory management can be costly too.

We view GC as part of an allocation service provided by the runtime environment to the user program, usually called the mutator. When the mutator needs heap space, it calls an allocation routine, which in turn performs garbage collection activities if needed. Many high-level programming languages remove the burden of manual memory management from the programmer by offering automatic garbage collection, which deallocates unreachable data. Garbage collection dates back to the initial implementation of Lisp in 1958. Other significant languages that offer garbage collection include Java, Perl, ML, Modula-3, Prolog, and Smalltalk.

Principles: The basic principles of garbage collection are: 1. Find data objects in a program that cannot be accessed in the future. 2. Reclaim the resources used by those objects. Many computer languages require garbage collection, either as part of the language specification (e.g., Java, C#, and most scripting languages) or effectively for practical implementation (e.g., formal languages like lambda calculus); these are said to be garbage collected languages.
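The two principles above (find inaccessible objects, then reclaim them) can be illustrated with a toy mark-and-sweep pass. This is one common technique rather than the method the excerpt prescribes, and it approximates "cannot be accessed in the future" by "not reachable from a root". The names `Record`, `ToyHeap`, and every method on them are hypothetical, and records are identified by index instead of by real pointers to keep the sketch short.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical heap record: refers to other records by index.
struct Record {
    std::vector<std::size_t> refs;
    bool marked = false;
    bool live = true;  // becomes false once the record is reclaimed
};

class ToyHeap {
public:
    // Create a new record and return its index.
    std::size_t allocate() {
        records_.emplace_back();
        return records_.size() - 1;
    }

    // Record a reference from one record to another.
    void link(std::size_t from, std::size_t to) {
        assert(from < records_.size() && to < records_.size());
        records_[from].refs.push_back(to);
    }

    void addRoot(std::size_t r) { roots_.push_back(r); }
    void clearRoots() { roots_.clear(); }
    bool isLive(std::size_t r) const { return records_[r].live; }

    // Run one collection and return the number of records reclaimed.
    std::size_t collect() {
        // Mark phase: everything reachable from the roots stays.
        for (Record& r : records_) r.marked = false;
        std::vector<std::size_t> work(roots_);
        while (!work.empty()) {
            std::size_t i = work.back();
            work.pop_back();
            Record& r = records_[i];
            if (!r.live || r.marked) continue;
            r.marked = true;
            for (std::size_t target : r.refs) work.push_back(target);
        }
        // Sweep phase: reclaim every live record that was not marked.
        std::size_t reclaimed = 0;
        for (Record& r : records_) {
            if (r.live && !r.marked) {
                r.live = false;
                r.refs.clear();
                ++reclaimed;
            }
        }
        return reclaimed;
    }

private:
    std::vector<Record> records_;
    std::vector<std::size_t> roots_;
};
```

A record that points at a root but is not itself pointed to is still reclaimed, because only references leading outward from the roots keep a record alive. The explicit worklist also means cyclic references do not prevent collection, which distinguishes tracing collectors from plain reference counting.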