PRINCIPLES of REMOTE Sensing1x

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Remote Sensing in India

India’s remote sensing program began after the successful demonstration flights of the Bhaskara-1 and Bhaskara-2 satellites, launched in 1979 and 1981 respectively, with the development of the indigenous Indian Remote Sensing (IRS) satellite program. The IRS satellite system was commissioned with the launch of IRS-1A in 1988. With many satellites in operation, IRS is the largest civilian remote sensing satellite constellation in the world, providing imagery in a variety of spatial resolutions, spectral bands and swaths. Data from IRS satellites are used for resource survey and management under the National Natural Resources Management System (NNRMS), in applications including: pre-harvest crop area and production estimation of major crops; drought/irrigation monitoring and assessment based on vegetation condition; flood risk zone mapping and flood damage assessment; hydro-geomorphological maps for locating underground water resources for drilling wells; snow-melt run-off estimates for planning water use in downstream projects; land use and land cover mapping; urban planning; forest survey; wetland mapping; environmental impact analysis; mineral prospecting; and coastal studies.

India’s recent IRS satellites:
2012: Radar Imaging Satellite-1 (RISAT-1), for imaging surface features during both day and night under all weather conditions.
2011: Megha-Tropiques, for studying the water cycle and energy exchanges in the tropics.
2011: RESOURCESAT-2, to continue the remote sensing data services provided to global users by RESOURCESAT-1, and to provide data with enhanced multispectral and spatial coverage.
2010: Cartosat-2B, to provide multiple spot scene imagery. It can image a swath (geographical strip) of 9.6 km with a resolution of better than 1 metre.

25 Years of Indian Remote Sensing Satellite (IRS)

25 Years of Indian Remote Sensing Satellite (IRS) Series
Vinay K Dadhwal, Director, National Remote Sensing Centre (NRSC), ISRO, Hyderabad, INDIA
50th Session of Scientific & Technical Subcommittee of COPUOS, 11-22 Feb., 2013, Vienna

The Beginning
• 1962 : Indian National Committee on Space Research (INCOSPAR), at PRL, Ahmedabad
• 1963 : First Sounding Rocket launch from Thumba (Nov 21, 1963)
• 1967 : Experimental Satellite Communication Earth Station (ESCES) established at Ahmedabad
• 1969 : Indian Space Research Organisation (ISRO) established (15 August)

Pre IRS-1A Satellites
• ARYABHATTA, first Indian satellite launched in April 1975
• Ten satellites before IRS-1A (7 for EO; 2 Met)
• 5 Procured & 5 SLV / ASLV launch
SAMIR : 3 band MW Radiometer
SROSS : Stretched Rohini Series Satellite

Indian Remote Sensing Satellite (IRS) – 1A
• First Operational EO Application satellite, built in India, launched from the USSR
• Carried 4-band multispectral camera (3 nos), 72m & 36m resolution
Satellite Launch: March 17, 1988, Baikonur Cosmodrome, Kazakhstan

Since IRS-1A
• Establishment of operational EO activities for
– EO data acquisition, processing & archival
– Applications & institutionalization
– Public services in resource & disaster management
– PSLV Launch Program to support EO missions
– International partnership, cooperation & global data sets

Early IRS Multispectral Sensors
• 1st Generation : IRS-1A, IRS-1B

India and China Space Programs: from Genesis of Space Technologies to Major Space Programs and What That Means for the Internati

University of Central Florida STARS
Electronic Theses and Dissertations, 2004-2019
2009
India And China Space Programs: From Genesis Of Space Technologies To Major Space Programs And What That Means For The Internati
Gaurav Bhola, University of Central Florida

Part of the Political Science Commons. Find similar works at: https://stars.library.ucf.edu/etd University of Central Florida Libraries http://library.ucf.edu This Masters Thesis (Open Access) is brought to you for free and open access by STARS. It has been accepted for inclusion in Electronic Theses and Dissertations, 2004-2019 by an authorized administrator of STARS. For more information, please contact [email protected].

STARS Citation: Bhola, Gaurav, "India And China Space Programs: From Genesis Of Space Technologies To Major Space Programs And What That Means For The Internati" (2009). Electronic Theses and Dissertations, 2004-2019. 4109. https://stars.library.ucf.edu/etd/4109

INDIA AND CHINA SPACE PROGRAMS: FROM GENESIS OF SPACE TECHNOLOGIES TO MAJOR SPACE PROGRAMS AND WHAT THAT MEANS FOR THE INTERNATIONAL COMMUNITY by GAURAV BHOLA, B.S. University of Central Florida, 1998. A dissertation submitted in partial fulfillment of the requirements for the degree of Master of Arts in the Department of Political Science in the College of Arts and Humanities at the University of Central Florida, Orlando, Florida. Summer Term 2009. Major Professor: Roger Handberg. © 2009 Gaurav Bhola

ABSTRACT
The Indian and Chinese space programs have evolved into technologically advanced vehicles of national prestige and international competition for developed nations. The programs continue to evolve with impetus that India and China will have the same space capabilities as the United States within the coming years.

Annual Report 2017 - 2018 Citizens’ Charter of Department of Space

Annual Report 2017 - 2018
Citizens’ Charter of Department Of Space

Department Of Space (DOS) has the primary responsibility of promoting the development of space science, technology and applications towards achieving self-reliance and facilitating the all-round development of the nation. With this basic objective, DOS has evolved the following programmes:
• Indian National Satellite (INSAT) programme for telecommunication, television broadcasting, meteorology, developmental education, societal applications such as telemedicine, tele-education, tele-advisories and similar services
• Indian Remote Sensing (IRS) satellite programme for the management of natural resources and various developmental projects across the country using space-based imagery
• Indigenous capability for the design and development of satellite and associated technologies for communications, navigation, remote sensing and space sciences
• Design and development of launch vehicles for access to space and orbiting INSAT / GSAT, IRS and IRNSS satellites and space science missions
• Research and development in space sciences and technologies as well as application programmes for national development

The Department Of Space is committed to:
• Carrying out research and development in satellite and launch vehicle technology with a goal to achieve total self-reliance
• Providing national space infrastructure for telecommunications and broadcasting needs of the country
• Providing satellite services required for weather forecasting, monitoring, etc.

Ocean Colour Monitor (OCM) Onboard OCEANSAT-2 Mission

Ocean Colour Monitor (OCM) onboard OCEANSAT-2 mission
Prakash Chauhan and Rangnath Navalgund, Space Applications Centre (ISRO), Ahmedabad-380015, India

1.0 Introduction
Space-borne ocean-colour remote sensing is an established tool for providing quantitative information on sea water constituents. Ocean-colour data from the first Indian ocean observation satellite, OCEANSAT-1, were used extensively for societal and scientific applications such as Potential Fishing Zone (PFZ) identification, estimation of primary productivity, algal bloom detection and the study of coastal processes. The OCEANSAT-2 spacecraft of the Indian Space Research Organization (ISRO) is the second satellite in the ocean series and was successfully launched on September 23, 2009 from Sriharikota by the Polar Satellite Launch Vehicle (PSLV)-C14 rocket. OCEANSAT-2 carried three main instruments: i) a Ku-band pencil beam scatterometer, ii) a modified Ocean Colour Monitor (OCM) and iii) the Radio Occultation Sounder of Atmosphere (ROSA) instrument of the Italian Space Agency (ASI). The OCEANSAT-2 OCM is mainly designed to provide continuity with the OCEANSAT-1 OCM instrument and to obtain quantitative information on ocean-colour variables, e.g. chlorophyll-a concentration, the vertical diffuse attenuation of light (Kd) and total suspended matter (TSM) concentration in coastal waters. Apart from ocean-colour information, OCM data will also be useful for studying aerosol transport and the terrestrial biosphere. The OCEANSAT-2 OCM is almost identical to the OCEANSAT-1 OCM; however, the central wavelengths of two spectral bands, bands 6 and 7, have been shifted. Spectral band 6, which was located at 670 nm in the OCEANSAT-1 OCM, has been shifted to 620 nm for improved quantification of suspended sediments.

Space Collaboration Between India and France Asie.Visions 78

Asie.Visions 78
Space Collaboration between India and France -Towards a New Era-
Ajey LELE
September 2015
Center for Asian Studies

The Institut français des relations internationales (Ifri) is a research center and a forum for debate on major international political and economic issues. Headed by Thierry de Montbrial since its founding in 1979, Ifri is a non-governmental and a non-profit organization. As an independent think tank, Ifri sets its own research agenda, publishing its findings regularly for a global audience. Using an interdisciplinary approach, Ifri brings together political and economic decision-makers, researchers and internationally renowned experts to animate its debate and research activities. With offices in Paris and Brussels, Ifri stands out as one of the rare French think tanks to have positioned itself at the very heart of European debate. The opinions expressed in this text are the responsibility of the author alone.

ISBN: 978-2-36567-433-1 © All rights reserved, Ifri, 2015

IFRI: 27, RUE DE LA PROCESSION, 75740 PARIS CEDEX 15 – FRANCE. Tel: +33 (0)1 40 61 60 00. Fax: +33 (0)1 40 61 60 60. Email: [email protected]
IFRI-BRUXELLES: RUE MARIE-THÉRÈSE, 21, 1000 – BRUXELLES – BELGIQUE. Tel: +32 (0)2 238 51 10. Fax: +32 (0)2 238 51 15. Email: [email protected]
WEBSITE: www.ifri.org

Ifri Center for Asian Studies
Asia is at the core of major global economic, political and security challenges. The Centre for Asian Studies provides documented expertise and a platform of discussion on Asian issues through the publication of research papers, partnerships with international think-tanks and the organization of seminars and conferences.

Indian Remote Sensing Satellites (IRS)

Topic: Indian Remote Sensing Satellites (IRS)
Course: Remote Sensing and GIS (CC-11), M.A. Geography (Sem.-3)
By Dr. Md. Nazim, Professor, Department of Geography, Patna College, Patna University
Lecture-5

Concept: India's remote sensing program was developed with the idea of applying space technologies for the benefit of humankind and the development of the country. The program involved the development of three principal capabilities. The first was to design, build and launch satellites into a sun-synchronous orbit. The second was to establish and operate ground stations for spacecraft control and data transfer, along with data processing and archival. The third was to use the data obtained for various applications on the ground. India demonstrated the potential of remote sensing for societal applications in 1970 by detecting coconut root-wilt disease with a helicopter-mounted multispectral camera. This was followed by two experimental satellites, Bhaskara-1 in 1979 and Bhaskara-2 in 1981, which carried optical and microwave payloads. India's remote sensing programme under the Indian Space Research Organization (ISRO) started in 1988 with IRS-1A, the first of a series of indigenous state-of-the-art operational remote sensing satellites, which was successfully launched into a polar sun-synchronous orbit on March 17, 1988 from the Soviet Cosmodrome at Baikonur. Its LISS-I sensor had a spatial resolution of 72.5 meters with a swath of 148 km on the ground. LISS-II comprised two separate imaging sensors, LISS-II A and LISS-II B, each with a spatial resolution of 36.25 meters, mounted on the spacecraft so as to provide a composite swath of 146.98 km on the ground.

Status Report on the Current and Future Satellite Systems by ISRO

Coordination Group for Meteorological Satellites - CGMS
Status report on the current and future satellite systems by ISRO
Presented to CGMS-47 plenary session, agenda item 4
ISRO, version1, 23 May 2019

Overview - Planning of ISRO satellite systems for Oceans and Atmosphere
[Timeline figure, 2009 to 2029, labelled "Present and Future Programmes", covering OCEANSAT-2, Megha-Tropiques, INSAT-3D, SARAL-AltiKa, Scatsat-1, INSAT-3DR, GISAT, RISAT-1, Oceansat-3, Oceansat-3A, NISAR, INSAT-3DS and TRISHNA]

CURRENT GEO SATELLITES : INSAT-3D/3DR
Both INSAT-3D/3DR are operational Geo missions. New products of cloud properties (CTT, CTP), CSBT (WV, TIR) and TPW are fully operational.
[Figure panels: CTP (hPa), CTT (K), CSBT-TIR-1 (K), CSBT-WV (K); 05 May 2019, 0600 UTC]

SCATSAT-1: L4 Data Products
Data products (Category: Parameter; Res (Km); Format)
L1B: Scan mode Sigma0; 25, 50; HDF5
L2A: Swath grid Sigma0; 25, 50; HDF5
L2B: Winds; 25, 50; HDF5
L3S: Sigma0 (Day wise Global gridded); 25, 50; HDF5
L3W: Winds (Day wise Global gridded); 25, 50; HDF5

Dissemination (Products: Dissemination Method)
L1, L2A: On user request and with authentication
L2B, L3, L4: As an open FTP
Quickbrowse: Anonymous user on MOSDAC portal

LEVEL 4 Data Products at MOSDAC: GeoTiff Sigma0, Gamma0, BT (2 Km Resolution)
INDIA: VVDES, VVASC, VVBOTH, HHDES, HHASC, HHBOTH
GLOBAL: VVDES, VVASC, VVBOTH, HHDES, HHASC, HHBOTH
NPOLAR24

Resourcesat, Cartosat, Oceansat

Agency Report: Indian Earth Observation Programme
Nitant Dube, Space Applications Centre, Indian Space Research Organisation
Presentation to CEOS – WGISS-41, Canberra Australia, March 2016

Indian EO Programme: The Uniqueness
• Vibrant Space Segment: Constellation of Satellites
• Resourcesat, Cartosat, Oceansat
• Strong Ground Mechanisms
• Effective Institutional Framework - NNRMS
• Enabling infrastructure – Processing, Generation and dissemination of information
• Observational Network
• Goals
o Provide continuity of data, also with enhanced capabilities
o Conduct periodic natural resources inventory & enable the national spatial data infrastructure
o Maximize outreach of natural resources information
o Enable ocean state forecasting and improved weather forecasting; information needs for disaster monitoring and mitigation

The Evolution: 27 Years since IRS-1A
2013: INSAT-3D (Imager, Sounder); SARAL
2012: RISAT-1 (C-SAR)
2011: RESOURCESAT-2 (LISS 3, LISS 4, AWiFS); MEGHA-TROPIQUES (SAPHIR, SCARAB & MADRAS)
2010: CARTOSAT-2B (PAN)
2009: RISAT-2 (X-SAR); OCEANSAT-2 (OCM, SCAT, ROSA)
2008: IMS-1 (Mx, HySI); CARTOSAT-2A (PAN)
2007: CARTOSAT-2 (PAN)
2005: CARTOSAT-1 (PAN stereo)
2003: RESOURCESAT-1 (LISS 3; LISS 4; AWiFS)
2001: TES (PAN)
1999: OCEANSAT-1 (OCM & MSMR)
1997: IRS-1D (LISS-3, PAN & WiFS)
1996: IRS-P3 (WiFS, MOS, X-Ray)
1995: IRS-1C (LISS-3, PAN & WiFS)
1994

• Thematic Satellites Series
• Operational Indian Remote Sensing Satellites
• National missions on various themes
• GIS databases for decision support
• Integrated mission for sustainable development

Prof. U.R. Rao Chairman, PRL Council (Former Chairman, ISRO & Secretary, DOS) Department of Space, Antariksh Bhavan New BEL Road, Bangalore – 560 094

INDIA’S SPACE PROGRAM (AN OVERVIEW) (Lecture–1)
Prof. U.R. Rao, Chairman, PRL Council (Former Chairman, ISRO & Secretary, DOS), Department of Space, Antariksh Bhavan, New BEL Road, Bangalore – 560 094 (2006)

Slide - 1
INDIA DECIDES TO GO INTO SPACE
• Background – Ground / Balloon Based Studies, Atmospheric Sciences, Cosmic Rays, Astrophysics
• Thumba Equatorial Rocket Launching Station (TERLS) in 1962. Cooperation with NASA, USSR, CNES and UK. Rocket Experiments to Study Equatorial Aeronomy, Meteorology and Astrophysics
• Population (1.06 Billion), Per capita GDP (550$), Illiteracy (39%), Population Below PL (30%). India with 16% Population, 2% Land, 1.5% Forest, Consumes 2% Energy, has 1.5% Global GDP. India Opts Space Technology for Rapid Socio-Economic Development

Slide - 2
INDIAN SPACE ENDEAVOUR
There are some who question the relevance of space activities in a developing nation. To us, there is no ambiguity of purpose. We do not have the fantasy of competing with the economically advanced nations in the exploration of the Moon or the planets or manned space-flight. But we are convinced that if we are to play a meaningful role nationally, and in the comity of nations, we must be second to none in the applications of advanced technologies to the real problems of man and society. VIKRAM A. SARABHAI
[Diagram labels: IRS; BUDGET Rs 3148 Cr/annum; LAUNCHER; APPLICATIONS; HUMAN RESOURCES; LEADERSHIP; EXPERTISE; 16500 strong; LARGE USER BASE; INSAT; INTERNATIONAL COOPERATION; INDUSTRY; SPACE ASSETS; Remote sensing & Telecom satellite Constellations; SPACE COMMERCE; INFRASTRUCTURE; End-to-end capability; STATE OF THE ART TECHNOLOGY]

Slide - 3
HUMBLE BEGINNING
• Establishment of Space Science Tech Center, Thumba-1965. Rocket Technology Development – Centaur – Rohini
• Earth Station at Ahmedabad – 1968 / Space Applications Center 1972. Landsat Earth Station – Hyderabad – 1978
• Krishi Darshan (80 Village near Delhi) – Remote Sensing Aerial Expts.

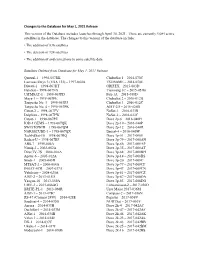

Changes to the Database for May 1, 2021 Release This Version of the Database Includes Launches Through April 30, 2021

Changes to the Database for May 1, 2021 Release

This version of the Database includes launches through April 30, 2021. There are currently 4,084 active satellites in the database. The changes to this version of the database include:
• The addition of 836 satellites
• The deletion of 124 satellites
• The addition of and corrections to some satellite data

Satellites Deleted from Database for May 1, 2021 Release
Quetzal-1 – 1998-057RK
ChubuSat 1 – 2014-070C
Lacrosse/Onyx 3 (USA 133) – 1997-064A
TSUBAME – 2014-070E
Diwata-1 – 1998-067HT
GRIFEX – 2015-003D
HaloSat – 1998-067NX
Tianwang 1C – 2015-051B
UiTMSAT-1 – 1998-067PD
Fox-1A – 2015-058D
Maya-1 – 1998-067PE
ChubuSat 2 – 2016-012B
Tanyusha No. 3 – 1998-067PJ
ChubuSat 3 – 2016-012C
Tanyusha No. 4 – 1998-067PK
AIST-2D – 2016-026B
Catsat-2 – 1998-067PV
ÑuSat-1 – 2016-033B
Delphini – 1998-067PW
ÑuSat-2 – 2016-033C
Catsat-1 – 1998-067PZ
Dove 2p-6 – 2016-040H
IOD-1 GEMS – 1998-067QK
Dove 2p-10 – 2016-040P
SWIATOWID – 1998-067QM
Dove 2p-12 – 2016-040R
NARSSCUBE-1 – 1998-067QX
Beesat-4 – 2016-040W
TechEdSat-10 – 1998-067RQ
Dove 3p-51 – 2017-008E
Radsat-U – 1998-067RF
Dove 3p-79 – 2017-008AN
ABS-7 – 1999-046A
Dove 3p-86 – 2017-008AP
Nimiq-2 – 2002-062A
Dove 3p-35 – 2017-008AT
DirecTV-7S – 2004-016A
Dove 3p-68 – 2017-008BH
Apstar-6 – 2005-012A
Dove 3p-14 – 2017-008BS
Sinah-1 – 2005-043D
Dove 3p-20 – 2017-008C
MTSAT-2 – 2006-004A
Dove 3p-77 – 2017-008CF
INSAT-4CR – 2007-037A
Dove 3p-47 – 2017-008CN
Yubileiny – 2008-025A
Dove 3p-81 – 2017-008CZ
AIST-2 – 2013-015D
Dove 3p-87 – 2017-008DA
Yaogan-18

Snow Water Equivalent Retrieval Using Ku-Band SCATSAT-1 Scatterometer Data for Northwest Himalayas

RSHS18-95-1 Remote Sensing and Hydrology Symposium (ICRS-IAHS) © Author(s) 2018. CC Attribution 4.0 license.

Snow Water Equivalent Retrieval using Ku-band SCATSAT-1 Scatterometer Data for Northwest Himalayas
Arpit Chouksey, Praveen Kumar Thakur, Suvrat Kaushik, Shiv Prasad Aggarwal, Bhaskar Ramchandra Nikam, Vaibhav Garg, and Pankaj Ramji Dhote
Water Resources Department, Indian Institute of Remote Sensing, Indian Space Research Organisation, Dehradun, India ([email protected])

Space-borne microwave radars are widely applicable to monitoring seasonal snow cover changes regardless of cloud cover and day-night conditions. The presence or absence of snow cover has a very significant impact on the water and energy balance of Earth. Accurate estimates of snow extent and snow water equivalent (SWE) serve as inputs to many studies, such as snowmelt runoff estimation and water balance studies. The scope and effectiveness of optical and microwave satellite images in snow studies have been well demonstrated over the last 30 years. The SCATSAT-1 satellite was launched on 26th September 2016 as a continuation of the Oceansat-2 scatterometer mission. SCATSAT-1 carries a Ku-band pencil beam scatterometer that acquires data in a conical fashion in HH and VV polarizations. The scatterometer provides global high-resolution (2.25 km spatial resolution) images. Previous studies have demonstrated that Ku-band is more sensitive to the volume properties of the snowpack than L and C band. Backscattering from the snowpack depends on sensor parameters such as frequency, polarisation and incidence angle, and on terrain properties such as surface roughness, snow density, snow depth, snow wetness and snow grain size.