Benchmarking BGP Routers

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Mutually Controlled Routing with Independent ISPs

Ratul Mahajan (Microsoft Research), David Wetherall (University of Washington and Intel Research), Thomas Anderson (University of Washington)

Abstract – We present Wiser, an Internet routing protocol that enables ISPs to jointly control routing in a way that produces efficient end-to-end paths even when they act in their own interests. Wiser is a simple extension of BGP, uses only existing peering contracts for monetary exchange, and can be incrementally deployed. Each ISP selects paths in a way that presents a compromise between its own considerations and those of other ISPs. Done over many routes, this allows each ISP to improve its situation by its own optimization criteria compared to the use of BGP today. We evaluate Wiser using a router-level prototype and simulation on measured ISP topologies. We find that, unlike Internet routing today,

Our intent is to develop an interdomain routing protocol that addresses these problems at a more basic level. We aim to allow all ISPs to exert control over all routes to as large a degree as possible, while still selecting end-to-end paths that are of high quality. This is a difficult problem and there are very few examples of effective mediation in networks, despite competitive interests having long been identified as an important factor [8]. While it is not a priori obvious that it is possible to succeed at this goal, our earlier work on Nexit [28] suggests that efficient paths are, in fact, a feasible outcome of routing across independent ISPs in realistic network settings.

OpenFlow: Enabling Innovation in Campus Networks

Nick McKeown (Stanford University), Tom Anderson (University of Washington), Hari Balakrishnan (MIT), Guru Parulkar (Stanford University), Larry Peterson (Princeton University), Jennifer Rexford (Princeton University), Scott Shenker (University of California, Berkeley), Jonathan Turner (Washington University in St. Louis)

This article is an editorial note submitted to CCR. It has NOT been peer reviewed. Authors take full responsibility for this article’s technical content. Comments can be posted through CCR Online.

ABSTRACT

This whitepaper proposes OpenFlow: a way for researchers to run experimental protocols in the networks they use every day. OpenFlow is based on an Ethernet switch, with an internal flow-table, and a standardized interface to add and remove flow entries. Our goal is to encourage networking vendors to add OpenFlow to their switch products for deployment in college campus backbones and wiring closets. We believe that OpenFlow is a pragmatic compromise: on one hand, it allows researchers to run experiments on heterogeneous switches in a uniform way at line-rate and with high port-density; while on the other hand, vendors do not need to expose the internal workings of their switches.

to experiment with production traffic, which have created an exceedingly high barrier to entry for new ideas. Today, there is almost no practical way to experiment with new network protocols (e.g., new routing protocols, or alternatives to IP) in sufficiently realistic settings (e.g., at scale carrying real traffic) to gain the confidence needed for their widespread deployment. The result is that most new ideas from the networking research community go untried and untested; hence the commonly held belief that the network infrastructure has “ossified”.

Having recognized the problem, the networking community is hard at work developing programmable networks,
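The flow-table idea in the OpenFlow abstract (an internal table plus an interface to add and remove flow entries) can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the OpenFlow specification: the `FlowTable` class, its method names, the match fields (`in_port`, `eth_dst`), and the `send_to_controller` default for unmatched packets are all hypothetical choices made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class FlowTable:
    """Toy flow table: maps a match (a set of header fields) to an action."""

    entries: dict = field(default_factory=dict)

    def add_flow(self, match: dict, action: str) -> None:
        # A frozenset of (field, value) pairs is hashable, so it can be a dict key.
        self.entries[frozenset(match.items())] = action

    def remove_flow(self, match: dict) -> None:
        # Removing a flow that is not installed is silently ignored.
        self.entries.pop(frozenset(match.items()), None)

    def lookup(self, packet: dict) -> str:
        # Return the action of the first entry whose fields all match the packet.
        for match, action in self.entries.items():
            if all(packet.get(name) == value for name, value in match):
                return action
        # Assumed default for this sketch: hand unmatched packets to a controller.
        return "send_to_controller"
```

A caller would install an entry with `add_flow({"in_port": 1}, "output:2")` and then call `lookup` on each packet's header fields; a real switch performs the same match in hardware at line rate.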

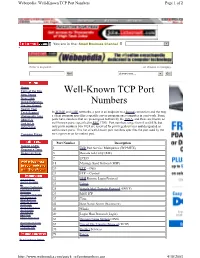

Well-Known TCP Port Numbers

Webopedia: Well-Known TCP Port Numbers

In TCP/IP and UDP networks, a port is an endpoint to a logical connection and the way a client program specifies a specific server program on a computer in a network. Some ports have numbers that are preassigned to them by the IANA, and these are known as well-known ports (specified in RFC 1700). Port numbers range from 0 to 65535, but only port numbers 0 to 1023 are reserved for privileged services and designated as well-known ports. This list of well-known port numbers specifies the port used by the server process as its contact port.

| Port Number | Description |
|---|---|
| 1 | TCP Port Service Multiplexer (TCPMUX) |
| 5 | Remote Job Entry (RJE) |
| 7 | ECHO |
| 18 | Message Send Protocol (MSP) |
| 20 | FTP -- Data |
| 21 | FTP -- Control |
| 22 | SSH Remote Login Protocol |
| 23 | Telnet |
| 25 | Simple Mail Transfer Protocol (SMTP) |
| 29 | MSG ICP |
| 37 | Time |
| 42 | Host Name Server (Nameserv) |
| 43 | WhoIs |
| 49 | Login Host Protocol (Login) |
| 53 | Domain Name System (DNS) |
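As a small worked example of the port ranges described in this excerpt, the Python sketch below looks up a few of the listed ports and checks whether a number falls in the well-known range. TCP and UDP port numbers are 16-bit values (0 to 65535), and the well-known range is 0 to 1023. The names `WELL_KNOWN_PORTS`, `is_well_known`, and `describe_port` are hypothetical, and the dictionary holds only a subset of the list.

```python
# Subset of the well-known ports from the list (port number -> description).
WELL_KNOWN_PORTS = {
    20: "FTP -- Data",
    21: "FTP -- Control",
    22: "SSH Remote Login Protocol",
    23: "Telnet",
    25: "Simple Mail Transfer Protocol (SMTP)",
    53: "Domain Name System (DNS)",
}


def is_well_known(port: int) -> bool:
    """True if the port lies in the well-known range 0..1023."""
    return 0 <= port <= 1023


def describe_port(port: int) -> str:
    """Return the description of a port, or a fallback string if it is not in the subset."""
    if not 0 <= port <= 65535:
        raise ValueError("port numbers are 16-bit values (0..65535)")
    return WELL_KNOWN_PORTS.get(port, "not in this subset")
```

For a fuller mapping, Python's standard `socket.getservbyport` consults the operating system's services database, so its answers depend on the host configuration.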

NBAR2 Standard Protocol Pack 1.0

Americas Headquarters: Cisco Systems, Inc., 170 West Tasman Drive, San Jose, CA 95134-1706 USA. http://www.cisco.com Tel: 408 526-4000, 800 553-NETS (6387). Fax: 408 527-0883. © 2013 Cisco Systems, Inc. All rights reserved.

CONTENTS

CHAPTER 1: Release Notes for NBAR2 Standard Protocol Pack 1.0

CHAPTER 2: BGP, BITTORRENT, CITRIX, DHCP, DIRECTCONNECT, DNS, EDONKEY, EGP, EIGRP, EXCHANGE, FASTTRACK, FINGER, FTP, GNUTELLA, GOPHER, GRE, H323, HTTP, ICMP, IMAP, IPINIP, IPV6-ICMP, IRC, KAZAA2, KERBEROS, L2TP, LDAP, MGCP, NETBIOS, NETSHOW, NFS, NNTP, NOTES, NTP, OSPF, POP3, PPTP, PRINTER, RIP, RTCP, RTP, RTSP, SAP, SECURE-FTP, SECURE-HTTP, SECURE-IMAP, SECURE-IRC, SECURE-LDAP, SECURE-NNTP, SECURE-POP3, SECURE-TELNET, SIP, SKINNY, SKYPE, SMTP, SNMP, SOCKS, SQLNET, SQLSERVER, SSH, STREAMWORK, SUNRPC, SYSLOG, TELNET, TFTP, VDOLIVE, WINMX

CHAPTER 1 Release Notes for NBAR2 Standard Protocol Pack 1.0

NBAR2 Standard Protocol Pack Overview

The Network Based Application Recognition (NBAR2) Standard Protocol Pack 1.0 is provided as the base protocol pack with an unlicensed Cisco image on a device.

Secure Border Gateway Protocol (S-BGP) — Real World Performance and Deployment Issues

Stephen Kent, Charles Lynn, Joanne Mikkelson, and Karen Seo
BBN Technologies

Abstract

The Border Gateway Protocol (BGP), which is used to distribute routing information between autonomous systems, is an important component of the Internet's routing infrastructure. Secure BGP (S-BGP) addresses critical BGP vulnerabilities by providing a scalable means of verifying the authenticity and authorization of BGP control traffic. To facilitate widespread adoption, S-BGP must avoid introducing undue overhead (processing, bandwidth, storage) and must be incrementally deployable, i.e., interoperable with BGP. To provide a proof of concept demonstration, we developed a prototype implementation of S-BGP and deployed it in DARPA’s CAIRN testbed. Real Internet BGP traffic was fed to the testbed routers via replay of a recorded BGP peering session with an ISP’s BGP router.

configuration information, or routing databases may be modified or replaced illicitly via unauthorized access to a router, or to a server from which router software is downloaded, or via a spoofed distribution channel, etc. Such attacks could result in transmission of fictitious BGP messages, modification or replay of valid messages, or suppression of valid messages. If cryptographic keying material is used to secure BGP control traffic, that too may be compromised. We have developed security enhancements to BGP that address most of these vulnerabilities by providing a secure, scalable system: Secure-BGP (S-BGP) [1,3]. Better physical, procedural and basic communication security for BGP routers could address some of these attacks. However, such measures would not counter any of the many forms of attacks that compromise routers

Virtually Eliminating Router Bugs

Eric Keller (Princeton University, Princeton, NJ, USA), Minlan Yu (Princeton University), Matthew Caesar (UIUC, Urbana, IL, USA), Jennifer Rexford (Princeton University)
[email protected] {minlanyu, jrex}@cs.princeton.edu [email protected]

ABSTRACT

Software bugs in routers lead to network outages, security vulnerabilities, and other unexpected behavior. Rather than simply crashing the router, bugs can violate protocol semantics, rendering traditional failure detection and recovery techniques ineffective. Handling router bugs is an increasingly important problem as new applications demand higher availability, and networks become better at dealing with traditional failures. In this paper, we tailor software and data diversity (SDD) to the unique properties of routing protocols, so as to avoid buggy behavior at run time. Our bug-tolerant router executes multiple diverse instances of routing software, and uses voting to determine the output to publish to the forwarding table, or to advertise to neighbors. We design and implement a router hypervisor that makes this parallelism transparent to other routers, handles fault detection and booting of new router instances, and performs voting in

1. INTRODUCTION

The Internet is an extremely large and complicated distributed system. Selecting routes involves computations across millions of routers spread over vast distances, multiple routing protocols, and highly customizable routing policies. Most of the complexity in Internet routing exists in protocols implemented as software running on routers. These routers typically run an operating system, and a collection of protocol daemons which implement the various tasks associated with protocol operation. Like any complex software, routing software is prone to implementation errors, or bugs.

1.1 Challenges in dealing with router bugs

The fact that bugs can produce incorrect and unpredictable behavior, coupled with the mission-critical nature of Internet routers, can produce disastrous results.
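The voting step mentioned in this abstract (several diverse routing instances, one published output) can be illustrated with a strict-majority vote. This is a minimal sketch under assumptions, not the paper's voting algorithm: the `vote` function is hypothetical, and each instance's output is reduced to a single hashable value such as a next-hop address.

```python
from collections import Counter


def vote(outputs):
    """Return the value produced by a strict majority of instances, or None.

    `outputs` holds one value per routing instance, for example the next hop
    each instance selected for the same prefix.
    """
    if not outputs:
        return None
    winner, count = Counter(outputs).most_common(1)[0]
    # Require more than half of the instances to agree before publishing.
    return winner if count > len(outputs) / 2 else None
```

With three instances, one buggy instance is outvoted by the two that agree; when no majority exists, the sketch returns `None` and leaves the choice of fallback to the caller.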

IEEE Workshop on Open-Source Software Networking (OSSN) CALL for PAPERS

IEEE Workshop on Open-Source Software Networking (OSSN) CALL FOR PAPERS
SEOUL, KOREA – June 6, 2016
http://opennetworking.kr/ossn

The IEEE International Workshop on Open-Source Software Networking (OSSN 2016) will be held on June 6, 2016 in Seoul, Korea along with the 2nd IEEE International Conference on Network Softwarization (NetSoft 2016, sites.ieee.org/netsoft). The OSSN workshop aims to provide a venue for sharing experiences on developing and operating open-source software-centric networking tools and platforms. It intends to cover various aspects of the open-source collaboration community of professionals from academia and industry. Full and short (work-in-progress) papers are solicited to discuss experiences on development, deployment, operation, and experimental studies around the overall lifecycle of open-source software networking.

Topics of Interest

Authors are invited to submit papers that fall into any topics related to open-source software networking. Topics of interest include, but are not limited to, the following:

• SDN (Software Defined Networking): ONOS, Open Daylight, Ryu, …
• NFV (Network Function Virtualization): OPNFV, OpenMano, …
• Switching: Open vSwitch, Open Switch, Open Network Linux, Indigo, …
• Routing: Click, Zebra, Quagga, XORP, VyOS, Project Calico, …
• Wireless: OpenLTE, OpenAirInterface, …
• Cloud and Analytics: OpenStack Neutron, Docker networking, Hadoop, Spark, …
• Monitoring and Messaging: Cacti, Nagios, Zabbix, Zenoss, Ntop, Kafka, RabbitMQ, …
• Security and Utilities: Snort, OpenVPN, Netfilter, IPtables, Wireshark, NIST Net, …
• Development and Simulation: NetFPGA, GNU radio, ns-3, …

Paper Submission

Authors are invited to submit only original papers (written in English) not published or submitted for publication elsewhere. Full papers can be up to 6 pages while short (work-in-progress) papers are up to 4 pages.

Network Working Group Internet Architecture Board Request for Comments: 1720 J

Network Working Group: Internet Architecture Board
Request for Comments: 1720
J. Postel, Editor
November 1994
Obsoletes: RFCs 1610, 1600, 1540, 1500, 1410, 1360, 1280, 1250, 1100, 1083, 1130, 1140, 1200
STD: 1
Category: Standards Track

INTERNET OFFICIAL PROTOCOL STANDARDS

Status of this Memo

This memo describes the state of standardization of protocols used in the Internet as determined by the Internet Architecture Board (IAB). This memo is an Internet Standard. Distribution of this memo is unlimited.

Table of Contents

Introduction
1. The Standardization Process
2. The Request for Comments Documents
3. Other Reference Documents
3.1. Assigned Numbers
3.2. Gateway Requirements
3.3. Host Requirements
3.4. The MIL-STD Documents
4. Explanation of Terms
4.1. Definitions of Protocol State (Maturity Level)
4.1.1. Standard Protocol
4.1.2. Draft Standard Protocol
4.1.3. Proposed Standard Protocol
4.1.4. Experimental Protocol
4.1.5. Informational Protocol
4.1.6. Historic Protocol
4.2. Definitions of Protocol Status (Requirement Level)
4.2.1. Required Protocol
4.2.2. Recommended Protocol
4.2.3. Elective Protocol
4.2.4. Limited Use Protocol
4.2.5. Not Recommended Protocol
5. The Standards Track
5.1. The RFC Processing Decision Table
5.2. The Standards Track Diagram
6. The Protocols
6.1. Recent Changes
6.1.1. New RFCs
6.1.2.

Protecting the Integrity of Internet Routing: Border Gateway Protocol (BGP) Route Origin Validation

NIST SPECIAL PUBLICATION 1800-14C
Volume C: How-To Guides

William Haag, Applied Cybersecurity Division, Information Technology Laboratory
Doug Montgomery, Advanced Network Technologies Division, Information Technology Laboratory
Allen Tan, The MITRE Corporation, McLean, VA
William C. Barker, Dakota Consulting, Silver Spring, MD

June 2019

This publication is available free of charge from: https://doi.org/10.6028/NIST.SP.1800-14
The first draft of this publication is available free of charge from: https://www.nccoe.nist.gov/sites/default/files/library/sp1800/sidr-piir-nist-sp1800-14-draft.pdf

DISCLAIMER

Certain commercial entities, equipment, products, or materials may be identified by name or company logo or other insignia in order to acknowledge their participation in this collaboration or to describe an experimental procedure or concept adequately. Such identification is not intended to imply special status or relationship with NIST or recommendation or endorsement by NIST or NCCoE; neither is it intended to imply that the entities, equipment, products, or materials are necessarily the best available for the purpose.

National Institute of Standards and Technology Special Publication 1800-14C, Natl. Inst. Stand. Technol. Spec. Publ. 1800-14C, 61 pages, (June 2019), CODEN: NSPUE2

FEEDBACK

As a private-public partnership, we are always seeking feedback on our Practice Guides. We are particularly interested in seeing how businesses apply NCCoE reference designs in the real world. If you have implemented the reference design, or have questions about applying it in your environment, please email us at [email protected].

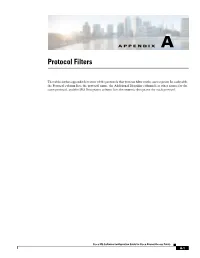

Appendix A Protocol Filters

APPENDIX A Protocol Filters

The tables in this appendix list some of the protocols that you can filter on the access point. In each table, the Protocol column lists the protocol name, the Additional Identifier column lists other names for the same protocol, and the ISO Designator column lists the numeric designator for each protocol.

Source: Cisco IOS Software Configuration Guide for Cisco Aironet Access Points

Table A-1 EtherType Protocols

| Protocol | Additional Identifier | ISO Designator |
|---|---|---|
| ARP | - | 0x0806 |
| RARP | - | 0x8035 |
| IP | - | 0x0800 |
| Berkeley Trailer Negotiation | - | 0x1000 |
| LAN Test | - | 0x0708 |
| X.25 Level3 | X.25 | 0x0805 |
| Banyan | - | 0x0BAD |
| CDP | - | 0x2000 |
| DEC XNS | XNS | 0x6000 |
| DEC MOP Dump/Load | - | 0x6001 |
| DEC MOP | MOP | 0x6002 |
| DEC LAT | LAT | 0x6004 |
| Ethertalk | - | 0x809B |
| Appletalk ARP | Appletalk AARP | 0x80F3 |
| IPX 802.2 | - | 0x00E0 |
| IPX 802.3 | - | 0x00FF |
| Novell IPX (old) | - | 0x8137 |
| Novell IPX (new) | IPX | 0x8138 |
| EAPOL (old) | - | 0x8180 |
| EAPOL (new) | - | 0x888E |
| Telxon TXP | TXP | 0x8729 |
| Aironet DDP | DDP | 0x872D |
| Enet Config Test | - | 0x9000 |
| NetBUI | - | 0xF0F0 |

Table A-2 IP Protocols

| Protocol | Additional Identifier | ISO Designator |
|---|---|---|
| dummy | - | 0 |
| Internet Control Message Protocol | ICMP | 1 |
| Internet Group Management Protocol | IGMP | 2 |
| Transmission Control Protocol | TCP | 6 |
| Exterior Gateway Protocol | EGP | 8 |
| PUP | - | 12 |
| CHAOS | - | 16 |
| User Datagram Protocol | UDP | 17 |
| XNS-IDP | IDP | 22 |
| ISO-TP4 | TP4 | 29 |
| ISO-CNLP | CNLP | 80 |
| Banyan VINES | VINES | 83 |
| Encapsulation Header | encap_hdr | 98 |
| Spectralink Voice Protocol | SVP | 119 |
| Spectralink raw | | |
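To show where the EtherType designators from Table A-1 appear on the wire, the Python sketch below reads the two-byte type field of an untagged Ethernet II frame and maps it to a protocol name. It is an illustration only: the `ETHERTYPES` dictionary is a subset of the table, `ethertype_name` is a hypothetical helper, and frames carrying an 802.1Q VLAN tag are assumed not to occur.

```python
import struct

# Subset of Table A-1 (EtherType value -> protocol name).
ETHERTYPES = {
    0x0806: "ARP",
    0x8035: "RARP",
    0x0800: "IP",
    0x888E: "EAPOL (new)",
}


def ethertype_name(frame: bytes) -> str:
    """Return the protocol name for the EtherType of an untagged Ethernet II frame."""
    if len(frame) < 14:
        raise ValueError("frame is shorter than a 14-byte Ethernet header")
    # Bytes 0-5 are the destination MAC, 6-11 the source MAC,
    # and 12-13 the EtherType in network (big-endian) byte order.
    (ethertype,) = struct.unpack("!H", frame[12:14])
    return ETHERTYPES.get(ethertype, f"unknown (0x{ethertype:04X})")
```

A protocol filter would compare this value against its configured list and forward or drop the frame accordingly.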

Introduction to the Border Gateway Protocol – Case Study Using GNS3

Sreenivasan Narasimhan, Haniph Latchman
Department of Electrical and Computer Engineering, University of Florida, Gainesville, USA
[email protected], [email protected]

Abstract – As the internet evolves to become a vital resource for many organizations, configuring The Border Gateway protocol (BGP) as an exterior gateway protocol in order to connect to the Internet Service Providers (ISP) is crucial. The BGP system exchanges network reachability information with other BGP peers from which Autonomous System-level policy decisions can be made. Hence, BGP can also be described as Inter-Domain Routing (Inter-Autonomous System) Protocol. It guarantees loop-free exchange of information between BGP peers. Enterprises need to connect to two or more ISPs in order to provide redundancy as well as to improve efficiency. This is called Multihoming and is an important feature provided by BGP. In this way, organizations do not have to be constrained by the routing policy decisions of a particular ISP. BGP, unlike many of the other routing protocols, is not used to learn about routes but to provide greater flow control between competitive Autonomous Systems. In this paper, we present a study on BGP, use a network simulator to configure BGP and implement its route-manipulation techniques.

Index Terms – Border Gateway Protocol (BGP), Internet Service Provider (ISP), Autonomous System, Multihoming, GNS3.

1. INTRODUCTION

Routing protocols are broadly classified into two types – Link State routing (LSR) protocol and Distance Vector (DV) routing protocol.

Figure 1. Internet using BGP [2].

In the figure, AS 65500 learns about the route 172.18.0.0/16 through
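The loop-free property mentioned in this abstract comes from the AS path that BGP attaches to each route: a router discards any route whose path already contains its own AS number. The Python sketch below illustrates that check under simplifying assumptions. The function names `accept_route` and `advertise` are hypothetical, the AS path is modeled as a plain list of AS numbers, and AS 65500 is borrowed from the excerpt while the other AS numbers are made up for the example.

```python
def accept_route(local_as: int, as_path: list) -> bool:
    """Accept a route only if the local AS is not already on its AS path."""
    return local_as not in as_path


def advertise(local_as: int, as_path: list) -> list:
    """Prepend the local AS before passing the route to an external peer."""
    return [local_as] + as_path


# AS 65500 hears 172.18.0.0/16 with path [65001, 65002]: no loop, so accept it.
route_path = [65001, 65002]
if accept_route(65500, route_path):
    route_path = advertise(65500, route_path)
```

If the advertisement later comes back to AS 65500, `accept_route(65500, route_path)` returns `False`, so the route is dropped instead of looping.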

VSI TCP/IP Services for OpenVMS Concepts and Planning

VSI OpenVMS

Document Number: DO-TCPCPL-01A
Publication Date: October 2020
Revision Update Information: This is a new manual.
Operating System and Version: VSI OpenVMS Integrity Version 8.4-2, VSI OpenVMS Alpha Version 8.4-2L1
Software Version: VSI TCP/IP Services Version 5.7

VMS Software, Inc. (VSI), Burlington, Massachusetts, USA

Copyright © 2020 VMS Software, Inc. (VSI), Burlington, Massachusetts, USA

Legal Notice

Confidential computer software. Valid license from VSI required for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor's standard commercial license.

The information contained herein is subject to change without notice. The only warranties for VSI products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. VSI shall not be liable for technical or editorial errors or omissions contained herein.

HPE, HPE Integrity, HPE Alpha, and HPE Proliant are trademarks or registered trademarks of Hewlett Packard Enterprise. Intel, Itanium and IA-64 are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries. UNIX is a registered trademark of The Open Group.

The VSI OpenVMS documentation set is available on DVD.

Preface
1. About VSI
2. Intended Audience