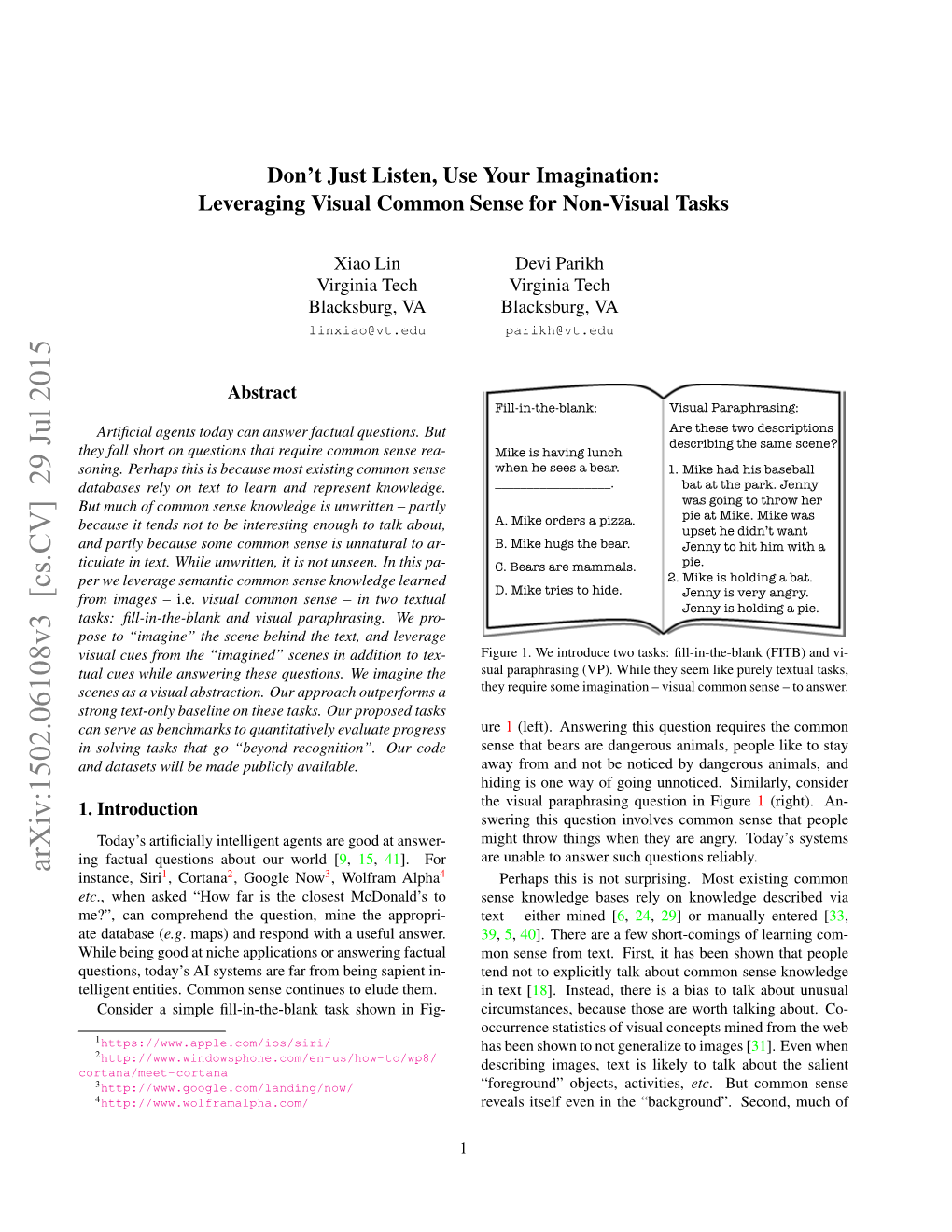

Don't Just Listen, Use Your Imagination: Leveraging Visual

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Download Slides

a platform for all that we know

savas parastatidis, http://savas.me, savasp

- transition from web to apps
- increasing focus on information (& knowledge)
- rise of personal digital assistants
- importance of near-real time processing

(image: http://aptito.com/blog/wp-content/uploads/2012/05/smartphone-apps.jpg)

today... computers are great tools for storing huge amounts of data, computing, managing, and indexing. example: google and microsoft both have copies of the entire web (and more) for indexing purposes.

tomorrow... computers are still great tools for storing huge amounts of data, computing, managing, and indexing. we would like computers to also help with the automatic acquisition, discovery, aggregation, organization, correlation, analysis, interpretation, and inference of the world’s information and knowledge.

data, information, knowledge, intelligence, wisdom. examples: expert systems, watson, freebase, wolframalpha, rdbms, google now, web indexing.

- data is symbols (bits, numbers, characters)
- information adds meaning to data through the introduction of relationship; it answers questions such as “who”, “what”, “where”, and “when”
- knowledge is a description of how the world works; it’s the application of data and information in order to answer “how” questions

G. Bellinger, D. Castro, and A. Mills, “Data, Information, Knowledge, and Wisdom,” Inform. pp. 1–4, 2004

web – the data platform; web – the information platform; web – the knowledge platform; foundation for new experiences

“wisdom is not a product of schooling but of the lifelong attempt to acquire it”

representative examples: wolframalpha, watson. source:

Ontologies and Languages for Representing Mathematical Knowledge on the Semantic Web

Ontologies and Languages for Representing Mathematical Knowledge on the Semantic Web

Christoph Lange
FB 3 (Mathematics and Computer Science), University of Bremen, Germany
Computer Science, Jacobs University Bremen, Germany

Editor(s): Aldo Gangemi, ISTC-CNR Rome, Italy
Solicited review(s): Claudio Sacerdoti Coen, University of Bologna, Italy; Alexandre Passant, DERI, National University of Galway, Ireland; Aldo Gangemi, ISTC-CNR Rome, Italy

Abstract. Mathematics is a ubiquitous foundation of science, technology, and engineering. Specific areas of mathematics, such as numeric and symbolic computation or logics, enjoy considerable software support. Working mathematicians have recently started to adopt Web 2.0 environments, such as blogs and wikis, but these systems lack machine support for knowledge organization and reuse, and they are disconnected from tools such as computer algebra systems or interactive proof assistants. [...] data vocabularies and domain knowledge from pure and applied mathematics. Many fields of mathematics have not yet been implemented as proper Semantic Web ontologies; however, we show that MathML and OpenMath, the standard XML-based exchange languages for mathematical knowledge, can be fully integrated with RDF representations in order to contribute existing mathematical knowledge to the Web of Data. We conclude with a roadmap for getting the mathematical Web of Data started: what datasets to publish, how to interlink them, and how to take advantage of these new connections.

Keywords: mathematics, mathematical knowledge management, ontologies, knowledge representation, formalization, linked data, XML

1. Introduction: Mathematics on the Web – State of the Art and Challenges

DataTone: Managing Ambiguity in Natural Language Interfaces for Data Visualization

DataTone: Managing Ambiguity in Natural Language Interfaces for Data Visualization

Tong Gao (University of Michigan, Ann Arbor, MI), Mira Dontcheva (Adobe Research, San Francisco, CA), Eytan Adar (University of Michigan, Ann Arbor, MI), Zhicheng Liu (Adobe Research, San Francisco, CA), Karrie Karahalios (University of Illinois, Urbana Champaign, IL)

ABSTRACT

Answering questions with data is a difficult and time-consuming process. Visual dashboards and templates make it easy to get started, but asking more sophisticated questions often requires learning a tool designed for expert analysts. Natural language interaction allows users to ask questions directly in complex programs without having to learn how to use an interface. However, natural language is often ambiguous. In this work we propose a mixed-initiative approach to managing ambiguity in natural language interfaces for data visualization. We model ambiguity throughout the process of turning a natural language query into a visualization and use algorithmic disambiguation coupled with interactive ambiguity widgets.

[...] to be both flexible and easy to use. General purpose spreadsheet tools, such as Microsoft Excel, focus largely on offering rich data transformation operations. Visualizations are merely output to the calculations in the spreadsheet. Asking a “visual question” requires users to translate their questions into operations on the spreadsheet rather than operations on the visualization. In contrast, visual analysis tools, such as Tableau, create visualizations automatically based on variables of interest, allowing users to ask questions interactively through the visualizations. However, because these tools are often intended for domain specialists, they have complex interfaces and a steep learning curve.

Natural language interaction offers a compelling complement
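The idea of modeling ambiguity as a set of candidate interpretations, which an interactive widget could then let the user choose between, can be illustrated with a minimal Python sketch. This is not DataTone's algorithm: the lexicon, the column names, and the functions `candidate_interpretations` and `ambiguous_words` are hypothetical and exist only for illustration.

```python
from itertools import product

# Hypothetical lexicon: each query word maps to the data columns it might mean.
LEXICON = {
    "sales": ["gross_sales", "net_sales"],
    "region": ["region"],
    "year": ["fiscal_year", "calendar_year"],
}


def candidate_interpretations(query):
    """Return every combination of column choices for the known words in `query`.

    Each interpretation is a dict mapping a query word to one candidate column.
    """
    words = [w for w in query.lower().split() if w in LEXICON]
    choices = [LEXICON[w] for w in words]
    return [dict(zip(words, combo)) for combo in product(*choices)]


def ambiguous_words(query):
    """List the query words that have more than one candidate column."""
    return [w for w in query.lower().split() if len(LEXICON.get(w, [])) > 1]
```

Under these assumptions, a query such as "show sales by region" yields two interpretations, and `ambiguous_words` flags "sales" as the word a disambiguation widget would need to surface.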

A New Kind of Science

Wolfram|Alpha, A New Kind of Science

by Bruce Walters, April 18, 2011
Research Paper for Spring 2012, INFSY 556 Data Warehousing, Professor Rhoda Joseph, Ph.D., Penn State University at Harrisburg

Abstract

The core mission of Wolfram|Alpha is “to take expert-level knowledge, and create a system that can apply it automatically whenever and wherever it’s needed,” says Stephen Wolfram, the technology’s inventor (Wolfram, 2009-02). This paper examines Wolfram|Alpha in its present form.

Introduction

As the internet became available to the world’s mass population, British computer scientist Tim Berners-Lee provided “hypertext” as a means for its general consumption, and coined the phrase World Wide Web. The World Wide Web is often referred to simply as the Web, and Web 1.0 transformed how we communicate. Now, with Web 2.0 firmly entrenched in our being and going with us wherever we go, can 3.0 be far behind? Web 3.0, the semantic web, is a web that endeavors to understand meaning rather than syntactically precise commands (Andersen, 2010). Enter Wolfram|Alpha. Wolfram|Alpha, officially launched in May 2009, is a rapidly evolving “computational search engine,” but rather than searching pre-existing documents, it actually computes the answer, every time (Andersen, 2010). Wolfram|Alpha relies on a knowledgebase of data in order to perform these computations, which, despite efforts to date, is still only a fraction of the world’s knowledge. Scientist, author, and inventor Stephen Wolfram refers to the world’s knowledge this way: “It’s a sad but true fact that most data that’s generated or collected, even with considerable effort, never gets any kind of serious analysis” (Wolfram, 2009-02).

RIO: an AI Based Virtual Assistant

International Journal of Computer Applications (0975 – 8887), Volume 180 – No.45, May 2018

RIO: An AI based Virtual Assistant

Samruddhi S. Sawant, Abhinav A. Bapat, Komal K. Sheth, Swapnadip B. Kumbhar: Department of Information Technology, NBN Sinhgad Technical Institute Campus, Pune, India
Rahul M. Samant: Professor, Department of Information Technology, NBN Sinhgad Technical Institute Campus, Pune, India

ABSTRACT

In this world of corporate companies, a lot of importance is being given to Human Resources. Human Capital Management (HCM) is an approach of Human Resource Management that connotes to viewing of employees as assets that can be invested in and managed to maximize business value. In this paper, we build a chatbot to manage all the functions of HRM namely -- core HR, Talent Management and Workforce management. A chatbot is a service, powered by rules and sometimes artificial intelligence that you interact

[...] benefitted by such virtual assistants. The rise of messaging apps, the explosion of the app ecosystem, advancements in artificial intelligence (AI) and cognitive technologies, a fascination with conversational user interfaces and a wider reach of automation are all driving the chatbot trend. A chatbot can be deployed over various platforms namely Facebook messenger, Slack, Skype, Kik, etc. The most preferred platform among businesses seems to be Facebook messenger (92%). There are around 80% of businesses that would like to host their chatbot on their own website.

Surviving the AI Hype – Fundamental Concepts to Understand Artificial Intelligence

Whitepaper: Surviving the AI Hype – Fundamental concepts to understand Artificial Intelligence

23.12.2016, luca-d3.com

Index

1. Introduction
2. What are the most common definitions of AI?
3. What are the sub areas of AI?
4. How “intelligent” can Artificial Intelligence get?
   - Strong and weak AI
   - The Turing Test
   - The Chinese Room Argument
   - The Intentional Stance

Now That You Have Siri Set the Way You Want, Close Settings and Give Siri a Try

Now that you have Siri set the way you want, close Settings and give Siri a try.

Using Siri to Run Apps and Find Information

You can get Siri's attention in several ways:

- Press the Home button until you hear two beeps. This works whether the phone is locked or not, unless you have configured your phone not to allow Siri to work from the lock screen.
- Press the center button on your wired or Bluetooth headphones until you hear two beeps.
- Raise the phone to your ear if it's unlocked and you've turned on the Raise to Speak feature in Siri's settings screen.

Now speak to Siri. When you're done, you should hear two higher-pitched beeps, and then you may need to wait a while for Siri's response. When Siri is ready to answer, you may hear a spoken response, or if you asked Siri to do something, such as open an app, the action will be performed, and Siri won't say anything. If you asked for something complicated, such as directions to the theater or the weather forecast for next week, Siri won't speak all that information, but it will be available onscreen. You can flick through the information, and VoiceOver will read it. Similarly, Siri won't read back what it thinks you said, but you can read that information with VoiceOver.

Sometimes Siri will need more information. Siri will ask a question, and then beep twice. You don't need to do anything special; just speak your answer.

Master in Computer Science and Engineering Project for Knowledge

Master in Computer Science and Engineering
Project for Knowledge Representation and Reasoning Course 2019/2020

Knowledge Representation and Reasoning has been an important area of AI since the very beginning of the field, and it has proposed different approaches to representing and reasoning about knowledge. In our KRR course we will come across several paradigms:

- First Order Logic
- Horn Clauses
- Rules and Production Systems
- Frames
- Description Logics
- Non-monotonic Logics
- Inheritance Systems

Current knowledge representation systems use one or a mixture of the studied approaches. The aim of the project is to look into some of today's interesting working knowledge representation and reasoning systems and try to answer the following questions:

- What were they built for? What was/is the purpose? What was/is the scope?
- Who built them (company, research group)?
- Are these systems still active and in use, or dormant?
- What kind of representation paradigm(s) do they use or rely upon? Which language is used to represent knowledge? If needed, explain a bit of the language.
- What kind of reasoning paradigm(s)/inference do they use or rely upon? Which reasoners are used to infer knowledge? If needed, explain a bit of the reasoning procedures.
- How were they built: from scratch, by reuse, by automatic learning, by free collaborative anonymous volunteers, or carefully by a small closed team? Describe details.
- How were they built from a process and life cycle point of view? Describe details.
- What knowledge was extracted, how, and from whom? How was it implemented in the system?
- What domain do they deal with?
- What kind of reasoning do they do?
- Which other areas of AI are used in the system and combined with the KRR component? How are they combined? Explain in as much detail as possible.
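Two of the paradigms named above, Horn clauses and rule-based production systems, share a simple inference procedure: forward chaining. The following minimal Python sketch is given only as an illustration of that technique for propositional rules; it is not taken from the course material, and the rule set and the function name `forward_chain` are hypothetical.

```python
def forward_chain(facts, rules):
    """Derive all facts entailed by propositional Horn rules.

    `rules` is a list of (premises, conclusion) pairs. A rule fires when
    every premise is already known. The loop repeats until a full pass
    over the rules adds no new fact.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and all(p in known for p in premises):
                known.add(conclusion)
                changed = True
    return known


# Hypothetical rule base, invented for illustration.
RULES = [
    (("bird", "healthy"), "can_fly"),
    (("can_fly",), "can_travel"),
    (("sparrow",), "bird"),
]
```

Starting from the facts `{"sparrow", "healthy"}`, the sketch derives `bird`, then `can_fly`, then `can_travel`. Real systems typically work over first-order clauses with unification, which this propositional version leaves out.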

Natural Language Queries for Visual Data Analytics

Quda: Natural Language Queries for Visual Data Analytics

SIWEI FU, Zhejiang Lab, China
KAI XIONG, Zhejiang University, China
XIAODONG GE, Zhejiang University, China
SILIANG TANG, Zhejiang University, China
WEI CHEN, Zhejiang University, China
YINGCAI WU, Zhejiang University, China

[Figure 1 panels: (1) Expert Query Collection: 36 data tables from 11 domains, interviews with 20 experts, 920 expert queries. (2) Paraphrase Generation: crowdsourcing, 20 paraphrases per expert query. (3) Paraphrase Validation: crowd and machine scoring, with paraphrases sorted by validation results. Example expert queries: a. What is the most liked video? b. Rank the country by population in 2000. c. Do app sizes fall into a few clusters? d. Is the age correlated with … the market value? Example paraphrases of query a: "Which video is the most liked?", "What video has the most likes?", "Please name the most liked video.", "Which video has the highest amount of likes?", "This is the most like video".]

Fig. 1. Overview of the entire data acquisition procedure consisting of three stages. In the first stage, we collect 920 queries by interviewing 20 data analysts. Second, we expand the corpus by collecting paraphrases using crowd intelligence. Third, we borrow both the crowd force and a machine learning algorithm to score and reject paraphrases with low quality.

The identification of analytic tasks from free text is critical for visualization-oriented natural language interfaces (V-NLIs) to suggest effective visualizations. However, it is challenging due to the ambiguous and complex nature of human language.

Nicky: Toward a Virtual Assistant for Test and Measurement Instrument Recommendations

2017 IEEE 11th International Conference on Semantic Computing

Nicky: Toward A Virtual Assistant for Test and Measurement Instrument Recommendations

Robert Kincaid, Keysight Laboratories, Keysight Technologies, Santa Clara, USA
Graham Pollock, Keysight Laboratories, Keysight Technologies, Roseville, USA

Abstract: Natural language question answering has been an area of active computer science research for decades. Recent advances have led to a new generation of virtual assistants or chatbots, frequently based on semantic modeling of some broadly general domain knowledge. However, answering questions about detailed, highly technical, domain-specific capabilities and attributes remains a difficult and complex problem. In this paper we discuss a prototype conversational virtual assistant designed for choosing test and measurement equipment based on the detailed measurement requirements of the test engineer. Our system allows for multi-stage queries which retain sufficient short-term context to support query refinement as well as compound questions. In addition to the software architecture, we explore an approach to ontology development that leverages inference from reasoners and

[...] have to design a test plan for the device under test that includes specifying the measurements and ranges required. These measurement requirements dictate the specific test equipment necessary. Creating this test setup can be a somewhat complex task. A typical test scenario will require many different measurements to be made over varying combinations of conditions and will usually involve multiple instruments and devices. Test and measurement companies such as Keysight have product lines consisting of hundreds of different instruments (both current and legacy models still in use) with a variety of potentially overlapping capabilities. Each device has its own characteristic measurement functions, measurement ranges, accuracy, software compatibility and more.
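The multi-stage querying mentioned in the abstract, where each follow-up question refines the previous one instead of starting over, can be pictured with a minimal Python sketch. This is not Nicky's architecture, which the excerpt describes as ontology-based: the catalogue entries, the `Instrument` and `Session` classes, and the constraint names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Instrument:
    name: str
    kind: str
    max_freq_ghz: float


# Hypothetical catalogue entries, invented for illustration.
CATALOGUE = [
    Instrument("scope-A", "oscilloscope", 1.0),
    Instrument("scope-B", "oscilloscope", 8.0),
    Instrument("analyzer-C", "spectrum analyzer", 26.5),
]


@dataclass
class Session:
    """Keeps the constraints from earlier turns so later turns refine them."""

    constraints: dict = field(default_factory=dict)

    def ask(self, **new_constraints):
        # Merge the new constraints into the retained short-term context.
        self.constraints.update(new_constraints)
        return [i.name for i in CATALOGUE if self._matches(i)]

    def _matches(self, inst):
        c = self.constraints
        if "kind" in c and inst.kind != c["kind"]:
            return False
        if "min_freq_ghz" in c and inst.max_freq_ghz < c["min_freq_ghz"]:
            return False
        return True
```

In this sketch, a first turn `ask(kind="oscilloscope")` returns both oscilloscopes, and a second turn `ask(min_freq_ghz=4.0)` on the same session narrows the answer to `scope-B` because the instrument kind is remembered.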

OntoMathPRO Ontology: A Linked Data Hub for Mathematics

OntoMathPRO Ontology: A Linked Data Hub for Mathematics

Olga Nevzorova (1,2), Nikita Zhiltsov (1), Alexander Kirillovich (1), and Evgeny Lipachev (1)
(1) Kazan Federal University, Russia, {onevzoro,nikita.zhiltsov,alik.kirillovich,elipachev}@gmail.com
(2) Research Institute of Applied Semiotics of Tatarstan Academy of Sciences, Kazan, Russia

Abstract. In this paper, we present an ontology of mathematical knowledge concepts that covers a wide range of the fields of mathematics and introduces a balanced representation between comprehensive and sensible models. We demonstrate the applications of this representation in information extraction, semantic search, and education. We argue that the ontology can be a core of future integration of math-aware data sets in the Web of Data and, therefore, provide mappings onto relevant datasets, such as DBpedia and ScienceWISE.

Keywords: Ontology engineering, mathematical knowledge, Linked Open Data.

1 Introduction

Recent advances in computer mathematics [4] have made it possible to formalize particular mathematical areas including the proofs of some remarkable results (e.g. Four Color Theorem or Kepler’s Conjecture). Nevertheless, the creation of computer mathematics models is a slow process, requiring excellent skills in both mathematics and programming. In this paper, we follow a different paradigm to mathematical knowledge representation that is based on ontology engineering and the Linked Data principles [6]. OntoMathPRO ontology introduces a reasonable trade-off between plain vocabularies and highly formalized models, aiming at computable proof-checking. OntoMathPRO was first briefly presented as a part of our previous work [22]. Since then, we have elaborated the ontology structure, improved interlinking with external resources and developed new applications to support the utility of the ontology in various use cases.

iPhone User Guide for iOS 5.1 Software: Contents

iPhone User Guide For iOS 5.1 Software

Contents

- Chapter 1: iPhone at a Glance: iPhone overview; Accessories; Buttons; Status icons
- Chapter 2: Getting Started: Viewing this user guide on iPhone; What you need; Installing the SIM card; Setup and activation; Connecting iPhone to your computer; Connecting to the Internet; Setting up mail and other accounts; Managing content on your iOS devices; iCloud; Syncing with iTunes
- Chapter 3: Basics: Using apps; Customizing the Home screen; Typing; Dictation; Printing; Searching; Voice Control; Notifications; Twitter; Apple Earphones with Remote and Mic; AirPlay; Bluetooth devices; Battery; Security features; Cleaning iPhone; Restarting or resetting iPhone
- Chapter 4: Siri: What is Siri?; Using Siri; Correcting Siri; Siri and apps; Dictation
- Chapter 5: Phone: Phone calls; FaceTime; Visual voicemail; Contacts; Favorites; Call forwarding, call waiting, and caller ID; Ringtones, Ring/Silent switch, and vibrate; International calls; Setting options for Phone
- Chapter 6: Mail: Checking and reading email; Working with multiple accounts; Sending mail; Using links and detected data; Viewing attachments; Printing messages and attachments; Organizing mail; Searching mail; Mail accounts and settings
- Chapter 7: Safari: Viewing webpages; Links; Reading List; Reader; Entering text and filling out forms; Searching; Bookmarks and history; Printing webpages, PDFs, and other documents