Bibbase Triplified

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

A Comparison of Researcher's Reference Management Software

Journal of Economics and Behavioral Studies Vol. 6, No. 7, pp. 561-568, July 2014 (ISSN: 2220-6140) A Comparison of Researcher’s Reference Management Software: Refworks, Mendeley, and EndNote Sujit Kumar Basak Durban University of Technology, South Africa [email protected] Abstract: This paper aimed to present a comparison of researcher’s reference management software such as RefWorks, Mendeley, and EndNote. This aim was achieved by comparing three software. The main results of this paper were concluded by comparing three software based on the experiment. The novelty of this paper is the comparison of researcher’s reference management software and it has showed that Mendeley reference management software can import more data from the Google Scholar for researchers. This finding could help to know researchers to use the reference management software. Keywords: Reference management software, comparison and researchers 1. Introduction Reference management software maintains a database to references and creates bibliographies and the reference lists for the written works. It makes easy to read and to record the elements for the reference comprises such as the author’s name, year of publication, and the title of an article, etc. (Reiss & Reiss, 2002). Reference Management Software is usually used by researchers, technologists, scientists, and authors, etc. to keep their records and utilize the bibliographic citations; hence it is one of the most complicated aspects among researchers. Formatting references as a matter of fact depends on a variety of citation styles which have been made the citation manager very essential for researchers at all levels (Gilmour & Cobus-Kuo, 2011). Reference management software is popularly known as bibliographic software, citation management software or personal bibliographic file managers (Nashelsky & Earley, 1991). -

Studies and Analysis of Reference Management Software: a Literature Review

Studies and analysis of reference management software: a literature review Jesús Tramullas Ana Sánchez-Casabón {jesus,asanchez}@unizar.es Dept .of Library & Information Science, University of Zaragoza Piedad Garrido-Picazo [email protected] Dept. of Computer and Software Engineering, University of Zaragoza Abstract: Reference management software is a well-known tool for scientific research work. Since the 1980s, it has been the subject of reviews and evaluations in library and information science literature. This paper presents a systematic review of published studies that evaluate reference management software with a comparative approach. The objective is to identify the types, models, and evaluation criteria that authors have adopted, in order to determine whether the methods used provide adequate methodological rigor and useful contributions to the field of study. Keywords: reference management software, evaluation methods, bibliography. 1. Introduction and background Reference management software has been a useful tool for researchers since the 1980s. In those early years, tools were made ad-hoc, and some were based on the dBase II/III database management system (Bertrand and Bader, 1980; Kunin, 1985). In a short period of time a market was created and commercial products were developed to provide support to this type of information resources. The need of researchers to systematize scientific literature in both group and personal contexts, and to integrate mechanisms into scientific production environments in order to facilitate and expedite the process of writing and publishing research results, requires that these types of applications receive almost constant attention in specialized library and information science literature. The result of this interest is reflected, in bibliographical terms, in the publication of numerous articles almost exclusively devoted to describing, analyzing, and comparing the characteristics of several reference management software products (Norman, 2010). -

Maximize Research Productivity with an Easy-To-Use Tool for Publishing and Managing Citations, Bibliographies, and References

Maximize research productivity with an easy-to-use tool for publishing and managing citations, bibliographies, and references 4 For institutions of higher education that want students and researchers to produce better, more accurate papers, the RefWorks reference manager simplifies the process of research, collaboration, data organization, and writing. RefWorks is easy to use, produces authoritative citations, provides round-the-clock support, and, as an entirely cloud-based solution, requires no syncing. With RefWorks, students and researchers are empowered to do the best work they can, while library administrators can support users, define institutional reference list styles, and analyze usage across the institution. With RefWorks you can: Save researchers time: Find, access, and capture research materials from virtually any source. Unify materials in one central workspace to facilitate storage, reuse, and sharing. Automatically generate bibliographies and authoritative citations. Collaborate on group projects and edit as a team online and in real time. Allow Library Admins greater control: Customize and manage user accounts. Measure usage with sophisticated RefWorks analytics and reporting. Set and disseminate institutional citation styles to drive consistency. Manage copyright compliance of full-text sharing, thus reducing the risk of copyright infringement. Participate in an administrator community site that provides peer support, open conversations, and resource-sharing. Integrate with your Link Resolver, storage applications, and third-party systems. Count on our world-class support team for help: Resolve any issue with our dedicated support and training staff. Take advantage of online resources – Knowledge Base, instructional videos, and LibGuides. 1 A user experience that researchers will love No syncing required! RefWorks is cloud based, and offers unlimited storage. -

Bibliografijos Ir PDF Tvarkymas Inžinerinės Grafikos Katedros

Bibliografijos ir PDF tvarkymas Inžinerinės grafikos katedros Kontaktai lektorius Edgaras Timinskas [email protected] Kūriniui Bibliografijos ir PDF tvarkymas, autorius Edgaras Timinskas, yra suteikta 2020-04-29 Creative Commons Priskyrimas - Nekomercinis platinimas - Analogiškas platinimas Pristatymą rasite: dspace.vgtu.lt 4.0 Tarptautinė licencija. Kuriuos citavimo įrankius naudojate? Prašau užpildykite trumpą apklausą. Pažymėkite įrankius, kuriuos naudojate rengdami mokslo darbus. https://goo.gl/forms/nsZK2tDo846bz3og1 2 Kuriuos socialinius tinklus naudojate? Prašau užpildykite trumpą apklausą. Pažymėkite socialinius tinklus, kuriuos naudojate mokslinei veiklai. https://goo.gl/forms/H9mLAzxnvF0PKOjG2 3 Turinys 1. Įvadas 13. Kiti mokslininkų socialiniai tinklai 2. Bibliografijos tvarkymas 14. Mokslinių išteklių paieška 3. PDF tvarkymas 15. Naudingos nuorodos ir literatūra 4. Programinių paketų palyginimas 5. Programos MENDELEY galimybės 6. Programos MENDELEY naudos 7. Programos MENDELEY diegimas 8. Darbas su programa MENDELEY 9. Citavimas su programa MENDELEY 10. Informacijos įkėlimas iš interneto 11. MENDELEY internete 12. Pagalbos centras 1 Įvadas Turinys Bibliografijos ir PDF tvarkymas (1) http://julitools.en.made-in-china.com/product/MqcmywkvlIVa/China-Axe- with-Plastic-Coating-Handle-A601-.html http://36.media.tumblr.com/5cbd642358a9b3eb547efa6 6e018fd4b/tumblr_mqkg2icebG1qzh8wko10_1280.jpg 6 Bibliografijos ir PDF tvarkymas (2) http://graphicssoft.about.com/od/digitalscrapbooking/ig/Manly-Digital- Scrapbooking-Kit/Oak-Tree.htm -

Refworks: Un Gestore Di Bibliografie Su

RefWorks: un gestore di bibliografie su web Un applicativo avanzato e innovativo per l’import di citazioni da database online, la creazione di archivi personali e la generazione di bibliografie in vari formati Angela Aceti, Nunzia Bellantonio Ispesl, dipartimento Processi organizzativi INTRODUZIONE nale di citazioni e generare automaticamente bibliografie in vari formati. Le citazioni volendo, Nel dicembre del 2006 il ministero della Salute ha avviato il possono essere inserite all’interno di docu- sistema Bibliosan, evoluzione di un precedente progetto fi- menti creati con un qualsiasi word processor. nalizzato che prevede la creazione di una rete tra tutte le bi- Le ricerche sul proprio database sono semplici 49 blioteche degli enti afferenti al ministero (Irccs, istituti zoo- e veloci: durante la fase di importazione dei re- d’informazione Fogli profilattici, Ispesl, Iss) per favorire l’acquisizione di risorse cord, il software genera automaticamente indici elettroniche nonché la circolazione e l’interscambio di docu- per autore, parola chiave e titolo del periodico. menti, in un’ottica di ottimizzazione di spesa e risultati. La bi- Con l’opzione quick search si possono eseguire blioteca dell’Ispesl è stata chiamata a prendervi parte fin ricerche generiche su tutti i campi disponibili, dall’inizio ed ha partecipato prima come unità operativa del mentre con la advanced search si può restringe- gruppo di lavoro e adesso come membro del comitato di ge- re la ricerca solo ad alcuni campi specifici. luglio-settembre 2007 stione. In questo ambito e attraverso l’appartenenza a Bi- RefWorks è anche un editor di bibliografie che bliosan - sistema bibliotecario degli enti di ricerca biomedici vengono redatte automaticamente e nello stile italiani - la biblioteca Ispesl ha acquisito il diritto di accesso desiderato, standard o personalizzato, facendo a diverse risorse elettroniche tra cui il software RefWorks risparmiare così tempo ed errori. -

On Publication Usage in a Social Bookmarking System

On Publication Usage in a Social Bookmarking System Daniel Zoller Stephan Doerfel Robert Jäschke Data Mining and Information ∗ L3S Research Center Retrieval Group ITeG, Knowledge and Data [email protected] University of Würzburg Engineering (KDE) Group [email protected] University of Kassel wuerzburg.de [email protected] Gerd Stumme Andreas Hotho ITeG, Knowledge and Data Data Mining and Information Engineering Group (KDE) Retrieval Group University of Kassel University of Würzburg [email protected] [email protected] kassel.de wuerzburg.de ABSTRACT The creation of impact measures from such data has been Scholarly success is traditionally measured in terms of cita- subsumed under the umbrella term altmetrics (alternative tions to publications. With the advent of publication man- metrics). According to the Altmetrics Manifesto [4] the agement and digital libraries on the web, scholarly usage goals of this initiative are to complement traditional bib- data has become a target of investigation and new impact liometric measures, to introduce diversity in measuring im- metrics computed on such usage data have been proposed pact, and to supplement peer-review. The manifesto also { so called altmetrics. In scholarly social bookmarking sys- appeals: \Work should correlate between altmetrics and ex- tems, scientists collect and manage publication meta data isting measures, predict citations from altmetrics and com- and thus reveal their interest in these publications. In this pare altmetrics with expert evaluation." In that spirit, we work, we investigate connections between usage metrics and analyze the usage metrics that can be computed in the social citations, and find posts, exports, and page views of publi- web system BibSonomy,1 a bookmarking tool for publica- cations to be correlated to citations. -

Bibsonomy: a Social Bookmark and Publication Sharing System

BibSonomy: A Social Bookmark and Publication Sharing System Andreas Hotho,1 Robert Jaschke,¨ 1,2 Christoph Schmitz,1 Gerd Stumme1,2 1 Knowledge & Data Engineering Group, Department of Mathematics and Computer Science, University of Kassel, Wilhelmshoher¨ Allee 73, D–34121 Kassel, Germany http://www.kde.cs.uni-kassel.de 2 Research Center L3S, Expo Plaza 1, D–30539 Hannover, Germany http://www.l3s.de Abstract. Social bookmark tools are rapidly emerging on the Web. In such sys- tems users are setting up lightweight conceptual structures called folksonomies. The reason for their immediate success is the fact that no specific skills are needed for participating. In this paper we specify a formal model for folksonomies and briefly describe our own system BibSonomy, which allows for sharing both book- marks and publication references in a kind of personal library. 1 Introduction Complementing the Semantic Web effort, a new breed of so-called “Web 2.0” appli- cations is currently emerging on the Web. These include user-centric publishing and knowledge management platforms like Wikis, Blogs, and social resource sharing tools. These tools, such as Flickr3 or del.icio.us,4 have acquired large numbers of users within less than two years.5 The reason for their immediate success is the fact that no specific skills are needed for participating, and that these tools yield immediate benefit for each individual user (e.g. organizing ones bookmarks in a browser-independent, persistent fashion) without too much overhead. Large numbers of users have created huge amounts of information within a very short period of time. -

Aigaion: a Web-Based Open Source Software for Managing the Bibliographic References

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by E-LIS repository Aigaion: A Web-based Open Source Software for Managing the Bibliographic References Sanjo Jose ([email protected]) Francis Jayakanth ([email protected]) National Centre for Science Information, Indian Institute of Science, Bangalore – 560 012 Abstract Publishing research papers is an integral part of a researcher's professional life. Every research article will invariably provide large number of citations/bibliographic references of the papers that are being cited in that article. All such citations are to be rendered in the citation style specified by a publisher and they should be accurate. Researchers, over a period of time, accumulate a large number of bibliographic references that are relevant to their research and cite relevant references in their own publications. Efficient management of bibliographic references is therefore an important task for every researcher and it will save considerable amount of researchers' time in locating the required citations and in the correct rendering of citation details. In this paper, we are reporting the features of Aigaion, a web-based, open-source software for reference management. 1. Introduction A citation or bibliographic citation is a reference to a book, article, web page, or any other published item. The reference will contain adequate details to facilitate the readers to locate the publication. Different citation systems and styles are being used in different disciplines like science, social science, humanities, etc. Referencing is also a standardised method of acknowledging the original source of information or idea. -

Overview of Advantages and Disadvantages of the Citation Managers Refworks, Mendeley and Zotero Teamwork Advantages Disadvantage

Overview of advantages and disadvantages of the citation managers RefWorks, Mendeley and Zotero Teamwork Advantages Disadvantages New RefWorks Possible to work on team projects • Import from most catalogues and • Integration with Word 2019 or Word 365 and hereby sharing the references. databases rather simple and fast. ProPlus on Mac computers may not work • Possible to import PDF-files. (properly). • Possible to annotate and highlight • Slow when importing simultaneously large the PDF-files. amounts of references (it is expected to • Compatible with Google Docs. improve shortly). • After graduation or termination of • 1 account per e-mail adress. your employment at UvA you can • Not compatible with Libre Office. still use your account. Legacy RefWorks • Possible to share and edit the • Possible to make multiple accounts • Integration with Word 2019 or Word 365 references in a joint account. using a single email address. ProPlus on Mac computers may not work • Possible to collaborate on • Suitable for systematic reviews, due (properly). documents with RW- to reasonable speed and • PDF-files can only be added one by one as references. transparency in deduplication. attachments. • Import from most catalogues and • No annotations or highlighting possible in databases rather simple and fast. PDF-files. • Not compatible with Google Docs. • Not compatible with Libre Office. Teamwork Advantages Disadvantages Mendeley • References can be shared and • Possible to import PDF-files. • Not to use with MacOS Mojave and edited. Full text can be jointly • Possible to annotate and highlight Catalina. annotated in private groups. the PDF-files, within a group as well. • Not compatible with Google Docs. • Collaborate on a document • Fast processing of large numbers of • Deduplication of references is not with references which can be references. -

Diskusi Online : Manajemen Referensi (Aplikasi Mendeley) Dalam Penulisan Karya Ilmiah

DISKUSI ONLINE : MANAJEMEN REFERENSI (APLIKASI MENDELEY) DALAM PENULISAN KARYA ILMIAH Eka Astuty1, Elpira Asmin2, & Eka Sukmawaty3 1,2Fakultas Kedokteran, Universitas Pattimura 3Fakultas Sains dan Teknologi, UIN Alauddin Makassar Email: [email protected], [email protected], [email protected] ABSTRACT : One of the requirements for students to achieve a bachelor's degree is to write scientific papers. Bibliography and citations are important elements in writing scientific papers. The arrangement of these two things is done manually or by application. Mendeley is a commonly used citation software. This activity was carried out online using the zoom application because during the Covid-19 pandemic, direct socialization or outreach activities could not be carried out because of the suggestion of Physical distancing. During the presentation of the material, discussion participants were invited to ask questions. The discussion participants were very enthusiastic and there were many questions submitted, including reference sources that could be used in writing scientific papers and the types of writing styles found in the Mendeley application. Community service activities in the form of online discussions about reference management (Mendeley Application) in writing scientific papers are expected to provide knowledge and information specifically for participants who are final year students. This online discussion is expected to also become a forum to refresh knowledge about reference management for participants who are lecturers. Keywords: Discussion, Online, Refference Management, Mendeley ABSTRAK : Salah satu syarat yang harus dipenuhi oleh mahasiswa untuk mencapai gelar sarjana yaitu harus menulis karya tulis ilmiah berupa skripsi. Daftar pustaka dan sitasi menjadi elemen penting dalam penulisan karya ilmiah. -

Reference Management Software (Rms) in an Academic Environment: a Survey at a Research University in Malaysia

Journal of Theoretical and Applied Information Technology 10 th June 2016. Vol.88. No.1 © 2005 - 2016 JATIT & LLS. All rights reserved . ISSN: 1992-8645 www.jatit.org E-ISSN: 1817-3195 REFERENCE MANAGEMENT SOFTWARE (RMS) IN AN ACADEMIC ENVIRONMENT: A SURVEY AT A RESEARCH UNIVERSITY IN MALAYSIA 1MOHAMMAD OSMANI, 2ROZAN MZA, 3BAKHTYAR ALI AHMAD, 4ARI SABIR ARIF 1 Department of Management, Mahabad Branch, Islamic Azad University, Mahabad, Iran 2 Faculty of Computing, Universiti Teknologi Malaysia (UTM), Johor, Malaysia 3 Faculty of Geo Information and Real Estate, Universiti Teknologi Malaysia (UTM), Johor, Malaysia 4 Faculty of Physical and Basic Education, University of Sulaimani (UOS), Sulaimani, Iraq E-mail: [email protected], [email protected] , [email protected], [email protected] ABSTRACT Reference Management Software is used by researchers in academics to manage the bibliographic citations they encounter in their research. With these tools, scholars keep track of the scientific literature they read, and to facilitate the editing of the scientific papers they write. This study presents the results of a quantitative survey performed at a research university in Malaysia. The aims of the survey were to observe how much these softwares are used by the scientific community, to see which softwares are most known and used, and to find out the reasons and the approaches behind their usage. Manually questionnaire was distributed to the Master and PhD students at all faculties in Jun 2014. The data collected were analysed through a constant comparative analysis, and the following categories were drawn: a basic practical approach to the instrument, the heavy impact of the time factor, the force of habit in scholars, economic issues, the importance of training and literacy, and the role that the library can have in this stage. -

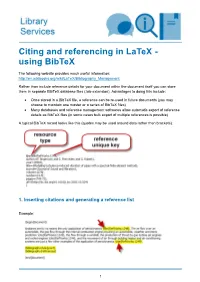

Citing and Referencing in Latex - Using Bibtex

Citing and referencing in LaTeX - using BibTeX The following website provides much useful information: http://en.wikibooks.org/wiki/LaTeX/Bibliography_Management Rather than include reference details for your document within the document itself you can store them in separate BibTeX database files (.bib extension). Advantages to doing this include: • Once stored in a BibTeX file, a reference can be re-used in future documents (you may choose to maintain one master or a series of BibTeX files) • Many databases and reference management softwares allow automatic export of reference details as BibTeX files (in some cases bulk export of multiple references is possible) A typical BibTeX record looks like this (quotes may be used around data rather than brackets): 1. Inserting citations and generating a reference list Example: 1 • To specify the output style of citations and references - insert the \bibliographystyle command e.g. \bibliographystyle{unsrt} where unsrt.bst is an available style file (a basic numeric style). Basic LaTeX comes with a few .bst style files; others can be downloaded from the web • To insert a citation in the text in the specified output style - insert the \cite command e.g. \cite{1942} where 1942 is the unique key for that reference. Variations on the \cite command can be used if using packages such as natbib (see below) • More flexible citing and referencing may be achieved by using other packages such as natbib (see below) or Biblatex • To generate the reference list in the specified output style - insert the \bibliography command e.g. \bibliography{references} where your reference details are stored in the file references.bib (kept in the same folder as the document).