UNIVERSITY of CALIFORNIA Los Angeles Initializing Hard-Label

Total Page:16

Recommended publications

Issue 3 November 2013 N E W S the Cause of Kairos Fever; Seniors Sign up in Record Numbers Lights Outs, School’S Out, a New Song By: Jennielittleton Mr

The Miegian Entertainment 2013 p. 8 & 9 Volume 57 Issue 3 November 2013 N E W S The Cause of Kairos Fever; Seniors Sign Up in Record Numbers Lights Outs, School’s Out, A New Song By: JennieLittleton Mr. Creach does, to advertise Kairos. comes from “grow(ing) in relation- institutions in twelve states, and inter- Thanks to this year’s unprecedented ships with other people and see(ing) estingly enough, has also been a part Fills the Halls Editor-in-Chief show of spirit and zeal for the pro- the good in everyone.” Summed up, of the prison ministry movement ever What’s the Reason for These Blackouts? gram, each retreat has more seniors her experience was “nice and refresh- since. than expected, and for the first time at ing.” Mr. Creach explained that By: MariaBaska Traditionally each year, three By: LouieLaFeve lunches for everyone and record pur- parents of our status. Most kids who three-day retreats are publicized, Miege, a fourth Kairos retreat was on- Miege’s history with Kai- sidered but was not able to be sched- ros started when, after the chases by hand, but that wasn’t an could drive left immediately, and we staff writer planned, and prepared for, waiting staff writer option. Some of the emergency flood corralled the rest up in the Commons for seniors to sign up. Kairos, a Greek uled. “Kairos is an amazing retreat had spread from lights had been drained of power, so to try and find rides. This day went Bishop Miege unveiled its term meaning “The Lord’s Not much is revealed about Catholic/Jesuit school to Many Miegians were treat- experience that I wish certain hallways were entirely dark,” on the record as a whole day since we new song “Spirit of Bishop Miege,” Time,” is the title used to Kairos until the retreat has school, five juniors were ed to a pleasant Halloween surprise he said. -

The State of Robin Thicke 6 Upvotes | 2 July, 2014 | by Soanxious

The State of Robin Thicke 6 upvotes | 2 July, 2014 | by SoAnxious What does Red Pill Think of the current state of Robin Thicke and Paula Patton's relationship and his debut of an Album named after her Paula while estranged from her. Did he go full blue pill? Example Archived from theredarchive.com www.TheRedArchive.com Page 1 of 2 Comments chill_geddy • 3 points • 2 July, 2014 11:33 PM Dude is doing the Hollywood shuffle I don't think it would be a good look for him to be 100 percent honest shit it is douche chill inducing though [deleted] • 3 points • 2 July, 2014 11:48 PM From "Lost Without You" to now, nothing has changed with this guy. It doesn't surprise me in the least. DENNIS_SYSTEM_PUA • 3 points • 3 July, 2014 01:01 AM It's really sick and sad to watch, particularly know what we know and how much we could help him. If I woke up with that cat's life, I'd put a gun in my mouth. TangoAlphaBravo • 2 points • 2 July, 2014 11:31 PM We think they should eat a lentil stew together. intrcept • 3 points • 3 July, 2014 01:44 AM Note to self: if I ever become I rock star, I will never dedicate music to a specific woman. If we broke up, I might be stuck performing it on tour for the rest of my life. note-to-self-bot • 3 points • 4 July, 2014 01:58 AM You should always rememeber: if I ever become I rock star, I will never dedicate music to a specific woman. -

If You Have Issues Viewing or Accessing This File Contact Us at NCJRS.gov

If you have issues viewing or accessing this file contact us at NCJRS.gov. This compilation of writings was produced by the National Campaign to Stop Violence. For more information on the Campaign's programs and activities, please contact the Campaign at: National Campaign to Stop Violence 1120 G Street, NW, Suite 990 Washington, D.C. 20005 (202) 393-7583 (800) 256-0235 Please do not reprint any part of this book without first obtaining permission from the National Campaign to Stop Violence. Table of Contents I. Do the Write Thing Challenge 1 II. Participating Organizations 3 III. National Campaign to Stop Violence Board of Directors 5 IV. Do the Write Thing Challenge Program National Finalists 7 Atlanta 9 Taronda Gibbons 11 Leo L. Tolin 17 Chicago 21 Roberto Coney 23 Rominna Villasegor 27 Denver 31 Emily B royies 33 Phillip Dorsey 37 Hartford 41 James Shurko 43 Jackie Strong 47 Houston 51 Devell Blanton 53 Bianca Flores 59 Las Vegas 67 Takara Green 69 Paul Scott 73 Los Angeles 77 Jasmina Aragon 79 Raul Perez 85 Miami 89 Vanessa Butler 91 John Kelley 101 Mississippi 105 Sioban Scott 107 Shad White 111 Newark 115 Darren Alvarez 117 Iesha Williams 123 New Orleans 129 Nakeisha Brown 131 Avery Thomas 137 New York 143 Daniel Houck 145 Juanita Ramos 149 Philadelphia 155 Nancy Loi 157 Sean Medcalf 161 Washington, D.C 167 Angela Brown 169 Elijah Huggins 175 V. National Guard ChalleNGe Program Finalists 185 Alaska ChalleNGe -

Music for Guitar

So Long Marianne Leonard Cohen A Bm Come over to the window, my little darling D A Your letters they all say that you're beside me now I'd like to try to read your palm then why do I feel so alone G D I'm standing on a ledge and your fine spider web I used to think I was some sort of gypsy boy is fastening my ankle to a stone F#m E E4 E E7 before I let you take me home [Chorus] For now I need your hidden love A I'm cold as a new razor blade Now so long, Marianne, You left when I told you I was curious F#m I never said that I was brave It's time that we began E E4 E E7 [Chorus] to laugh and cry E E4 E E7 Oh, you are really such a pretty one and cry and laugh I see you've gone and changed your name again A A4 A And just when I climbed this whole mountainside about it all again to wash my eyelids in the rain [Chorus] Well you know that I love to live with you but you make me forget so very much Oh, your eyes, well, I forget your eyes I forget to pray for the angels your body's at home in every sea and then the angels forget to pray for us How come you gave away your news to everyone that you said was a secret to me [Chorus] We met when we were almost young deep in the green lilac park You held on to me like I was a crucifix as we went kneeling through the dark [Chorus] Stronger Kelly Clarkson Intro: Em C G D Em C G D Em C You heard that I was starting over with someone new You know the bed feels warmer Em C G D G D But told you I was moving on over you Sleeping here alone Em Em C You didn't think that I'd come back You know I dream in colour -

Dreams-041015 1.Pdf

DREAMS My Journey with Multiple Sclerosis By Kristie Salerno Kent DREAMS: MY JOURNEY WITH MULTIPLE SCLEROSIS. Copyright © 2013 by Acorda Therapeutics®, Inc. All rights reserved. Printed in the United States of America. Author’s Note I always dreamed of a career in the entertainment industry until a multiple sclerosis (MS) diagnosis changed my life. Rather than give up on my dream, following my diagnosis I decided to fight back and follow my passion. Songwriting and performance helped me find the strength to face my challenges and help others understand the impact of MS. “Dreams: My Journey with Multiple Sclerosis,” is an intimate and honest story of how, as people living with MS, we can continue to pursue our passion and use it to overcome denial and find the courage to take action to fight MS. It is also a story of how a serious health challenge does not mean you should let go of your plans for the future. The word 'dreams' may end in ‘MS,’ but MS doesn’t have to end your dreams. It has taken an extraordinary team effort to share my story with you. This book is dedicated to my greatest blessings - my children, Kingston and Giabella. You have filled mommy's heart with so much love, pride and joy and have made my ultimate dream come true! To my husband Michael - thank you for being my umbrella during the rainy days until the sun came out again and we could bask in its glow together. Each end of our rainbow has two pots of gold… our precious son and our beautiful daughter! To my heavenly Father, thank you for the gifts you have blessed me with. -

Songs by Artist

Sound Master Entertianment Songs by Artist smedenver.com Title Title Title .38 Special 2Pac 4 Him Caught Up In You California Love (Original Version) For Future Generations Hold On Loosely Changes 4 Non Blondes If I'd Been The One Dear Mama What's Up Rockin' Onto The Night Thugz Mansion 4 P.M. Second Chance Until The End Of Time Lay Down Your Love Wild Eyed Southern Boys 2Pac & Eminem Sukiyaki 10 Years One Day At A Time 4 Runner Beautiful 2Pac & Notorious B.I.G. Cain's Blood Through The Iris Runnin' Ripples 100 Proof Aged In Soul 3 Doors Down That Was Him (This Is Now) Somebody's Been Sleeping Away From The Sun 4 Seasons 10000 Maniacs Be Like That Rag Doll Because The Night Citizen Soldier 42nd Street Candy Everybody Wants Duck & Run 42nd Street More Than This Here Without You Lullaby Of Broadway These Are Days It's Not My Time We're In The Money Trouble Me Kryptonite 5 Stairsteps 10CC Landing In London Ooh Child Let Me Be Myself I'm Not In Love 50 Cent We Do For Love Let Me Go 21 Questions 112 Loser Disco Inferno Come See Me Road I'm On When I'm Gone In Da Club Dance With Me P.I.M.P. It's Over Now When You're Young 3 Of Hearts Wanksta Only You What Up Gangsta Arizona Rain Peaches & Cream Window Shopper Love Is Enough Right Here For You 50 Cent & Eminem 112 & Ludacris 30 Seconds To Mars Patiently Waiting Kill Hot & Wet 50 Cent & Nate Dogg 112 & Super Cat 311 21 Questions All Mixed Up Na Na Na 50 Cent & Olivia 12 Gauge Amber Beyond The Grey Sky Best Friend Dunkie Butt 5th Dimension 12 Stones Creatures (For A While) Down Aquarius (Let The Sun Shine In) Far Away First Straw AquariusLet The Sun Shine In 1910 Fruitgum Co. -

BOBBY CHARLES LYRICS Compiled by Robin Dunn & Chrissie Van Varik

BOBBY CHARLES LYRICS Compiled by Robin Dunn & Chrissie van Varik. Bobby Charles was born Robert Charles Guidry on 21st February 1938 in Abbeville, Louisiana. A native Cajun himself, he recalled that his life “changed for ever” when he re-tuned his parents’ radio set from a local Cajun station to one playing records by Fats Domino. Most successful as a songwriter, he is regarded as one of the founding fathers of swamp pop. His own vocal style was laidback and drawling. His biggest successes were songs other artists covered, such as ‘See You Later Alligator’ by Bill Haley & His Comets; ‘Walking To New Orleans’ by Fats Domino – with whom he recorded a duet of the same song in the 1990s – and ‘(I Don’t Know Why) But I Do’ by Clarence “Frogman” Henry. It allowed him to live off the songwriting royalties for the rest of his life! Two other well-known compositions are ‘The Jealous Kind’, recorded by Joe Cocker, and ‘Tennessee Blues’ which Kris Kristofferson committed to record. Disenchanted with the music business, Bobby disappeared from the music scene in the mid-1960s but returned in 1972 with a self-titled album on the Bearsville label on which he was accompanied by Rick Danko and several other members of the Band and Dr John. Bobby later made a rare live appearance as a guest singer on stage at The Last Waltz, the 1976 farewell concert of the Band, although his contribution was cut from Martin Scorsese’s film of the event. Bobby Charles returned to the studio in later years, recording a European-only album called Clean Water in 1987. -

An Analysis of Figurative Language in the Song Lyrics

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by IAIN Syekh Nurjati Cirebon AN ANALYSIS OF FIGURATIVE LANGUAGE IN THE SONG LYRICS BY MAHER ZAIN A THESIS Submitted to the English Education Department of Tarbiyah Faculty of Syekh Nurjati State Institute for Islamic studies in Partial Fulfillment of the Requirements for the Scholar Degree of Islamic Education (S.Pd.I) By QURROTUL 'AIN NIM : 59430775 ENGLISH EDUCATION DEPARTMENT OF TARBIYAH FACULTY OF SYEKH NURJATI STATE INSTITUTE FOR ISLAMIC STUDIES CIREBON 2013 ABSTRACT QURROTUL 'AIN: AN ANALYSIS OF FIGURATIVE LANGUAGE IN THE SONG LYRICS BY MAHER ZAIN Now, there are many young people like music or song, especially English songs. They are interested to improve English to be mastery through English songs which are popular in this time. After they are listening and reading the text of song, not at all listener could catch the message of the song since a song consists of many messages should be understood by listener. Music and song is the part of this world of songs, an art based on the organization of sound in time. But many of them do not know about the meaning and messages of the song lyrics which they listen. In this thesis the writer would find out the figurative language of Islamic song by Maher Zain which has deep meaning and more messages for the listener, not only enjoy listening to music but also the listener and reader will gets knowledge about Islam more increase. Figurative language is language whit its literary in compatible terms. -

FIGURATIVE LANGUAGE in MAHER ZAIN's SONG LYRICS THESIS By

FIGURATIVE LANGUAGE IN MAHER ZAIN’S SONG LYRICS THESIS By LAILA ALVIANA DEWI NIM. 210916084 ENGLISH EDUCATION DEPARTMENT FACULTY OF TARBIYAH AND TEACHER TRAINING STATE INSTITUTE OF ISLAMIC STUDIES PONOROGO 2020 ABSTRACT DEWI, LAILA ALVIANA. 2020. Figurative Language in Maher Zain’s Song Lyrics in Academic Year 2019/2020. Thesis. English Education Department, Tarbiyah Faculty, State Istitute for Islamic Studies of Ponorogo, Advisor WiwinWidyawati, M.Hum. Key Word:Figurative language, lyric, song. Figurative language is language which uses all types of creative writing. Figurative language is important for the students especially who love the song. By knowing it, they will have the ability to comprehending meaning and mission of song. Lyric is expressing a person's personal feeling and thoughts through song. It means that the song can be used as the way to delivering idea and feeling so that the people can understand what the composer means. The purpose of this research were to find the types of figurative language in Maher Zain’s songs and disclose the most dominant types of figurative language in Maher Zain’s songs. This research applied descriptive qualitative approach. The research design was content analysis. It is intended to investigate those two research problems in relation to figurative language. The data were obtained from selected Maher Zain’s song lyrics, Hold My Hand, For The Rest of My Life, Number One For Me, Open Your Eyes and Awaken. By using figurative language theory of Perrine and supported by the theories of Abrams, Keraf and Reaske. To analysis it, used observation, note-taking and transcribing. -

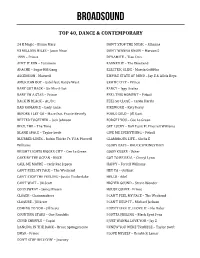

Broadsound Song List

BroadSound TOP 40, DANCE & CONTEMPORARY 24 K Magic – Bruno Mars DON’T STOP THE MUSIC – Rihanna 93 MILLION MILES – Jason Mraz DON’T WANNA KNOW – Maroon 5 1999 – Prince DYNAMITE – Tiao Cruz AIN’T IT FUN – Paramore EARNED IT – The Weekend APACHE – Sugar Hill Gang ELECTRIC SLIDE - Marcia Griffiths ASCENSION - Maxwell EMPIRE STATE OF MIND – Jay Z & Alicia Keys AMERICAN BOY – Estel feat. Kanye West EROTIC CITY – Prince BABY GOT BACK – Sir Mix-A-Lot FANCY – Iggy Azalea BABY I’M A STAR – Prince FEEL THIS MOMENT – Pitbull BACK IN BLACK – AC/DC FEEL SO CLOSE – Calvin Harris BAD ROMANCE – Lady GaGa FIREWORK – Katy Perry BEFORE I LET GO – Maze feat. Franie Beverly FOOLS GOLD – Jill Scott BETTER TOGETHER – Jack Johnson FORGET YOU – Cee Lo Green BIRD, THE – The Time GET LUCKY – Daft Punk Ft. Pherrell Williams BLANK SPACE – Taylor Swift GIVE ME EVERYTHING – Pitbull BLURRED LINES – Robin Thicke Ft. Y.I & Pherrell GLAMOROUS LIFE – Shelia E Williams GLORY DAYS – BRUCE SPRINGSTEEN BRIGHT LIGHTS BIGGER CITY – Cee Lo Green GOOD KISSER - Usher CAKE BY THE OCEAN - DNCE GOT TO BE REAL – Cheryl Lynn CALL ME MAYBE – Carly Rae Jepsen HAPPY – Ferrell Williams CAN’T FEEL MY FACE – The Weekend HEY YA – Outkast CAN’T STOP THE FEELING – Justin Timberlake HELLO - Adel CAN’T WAIT – Jill Scott HIGHER GOUND – Stevie Wonder COLD SWEAT – James Brown HOUSE QUAKE - Prince CLOSER - Chainsmokers I CAN’T FEEL MY FACE – The Weekend CLOSURE - Jill Scott I CAN’T HELP IT – Michael Jackson COMING TO YOU – Jill Scott I DON’T LIKE IT, I LOVE IT – Flo Rider COUNTING STARS – One Republic -

Fragmenting Kill/Shot Rachel Washington University of Arkansas, Fayetteville

University of Arkansas, Fayetteville ScholarWorks@UARK Theses and Dissertations 5-2015 Fragmenting Kill/Shot Rachel Washington University of Arkansas, Fayetteville Follow this and additional works at: http://scholarworks.uark.edu/etd Part of the Performance Studies Commons, and the Playwriting Commons Recommended Citation Washington, Rachel, "Fragmenting Kill/Shot" (2015). Theses and Dissertations. 1038. http://scholarworks.uark.edu/etd/1038 This Thesis is brought to you for free and open access by ScholarWorks@UARK. It has been accepted for inclusion in Theses and Dissertations by an authorized administrator of ScholarWorks@UARK. For more information, please contact [email protected], [email protected]. Fragmenting Kill/Shot Fragmenting Kill/Shot A thesis submitted in partial fulfillment of the requirement for the degree of Master of Fine Arts in Drama by Rachel Washington University of Notre Dame Bachelor of Arts in Film, Television, and Theatre, 2011 May 2015 University of Arkansas This thesis is approved for recommendation to the Graduate Council ___________________________________________ Professor Clinnesha Sibley Thesis Director ____________________________ ___________________________ Professor Les Wade Professor Robert Ford Committee Member Committee Member Abstract In “Fragmenting Kill/Shot”, I will explore how my play Kill/Shot changed over the course of the rehearsal and production process. I will explain and defend stylistic choices I made for this play, including how the others on the production team interpreted those stylistic choices. Finally, I will explore how changes to the script can help protect the play from being misinterpreted in the future. Acknowledgements I would like to extend special thanks to everyone who made this thesis possible. First, I would like to thank my thesis committee, Les Wade, Robert Ford, and Clinnesha Sibley. -

Voir Dire Grad Edition 2021

LINCOLN LAW SCHOOL OF SACRAMENTO LINCOLN LAWGRADUATION EDITION SCHOOL OF SACRAMENTO LINCOLN LAW SCHOOL OF 2021 1 2 3 5 Editors’ Letter From Dean’s Final My Dad, SACRAMENTO LINCOLN LAWNote Andy Smolich Message the Dean SCHOOL OF SACRAMENTO7 9 11 13 Golfing Community Legend, Tribute LINCOLN LAW SCHOOL OFAround Legacy SACRAMENTO LINCOLN LAW19 21 23 25 Scholarship Alum Key The Gray SCHOOL OF SACRAMENTO Advocate of Life LINCOLN LAW SCHOOL OF27 29 31 33 Home Mom Disability, Mandatory LLSA SACRAMENTO LINCOLN LAWto Lawyer Mom not Inability Minimum 35 37 41 43 SCHOOL OF SACRAMENTOAmong Roadmap Mentorship In Chambers LINCOLN LAW SCHOOL OFthe Range to Electives Program 45 47 Too Close 2021 SACRAMENTO LINCOLN LAWto Call Graduates SCHOOL OF SACRAMENTO VOIR DIRE 20 21 EDITORS’ A LETTER NOTE FROM ANDY SMOLICH The Voir Dire would like to congratulate Lincoln Law School’s Graduating Class of 2021. Dean Schiavenza notified the Board of Directors he intends to retire his position as dean of You made history by completing your entire final year of law school during a global epidemic. the law school at the end of the academic year. Jim has been an excellent academic leader And, although it was made more difficult by virtual classes; self-isolation; and a lack of of Lincoln Law School. I would say that he will be missed by faculty, students, and staff; but comradery, remember that your first three years marked the beginnings of new friendships fortunately for us all, he will continue to teach Torts. and many wonderful memories to cherish for a lifetime.