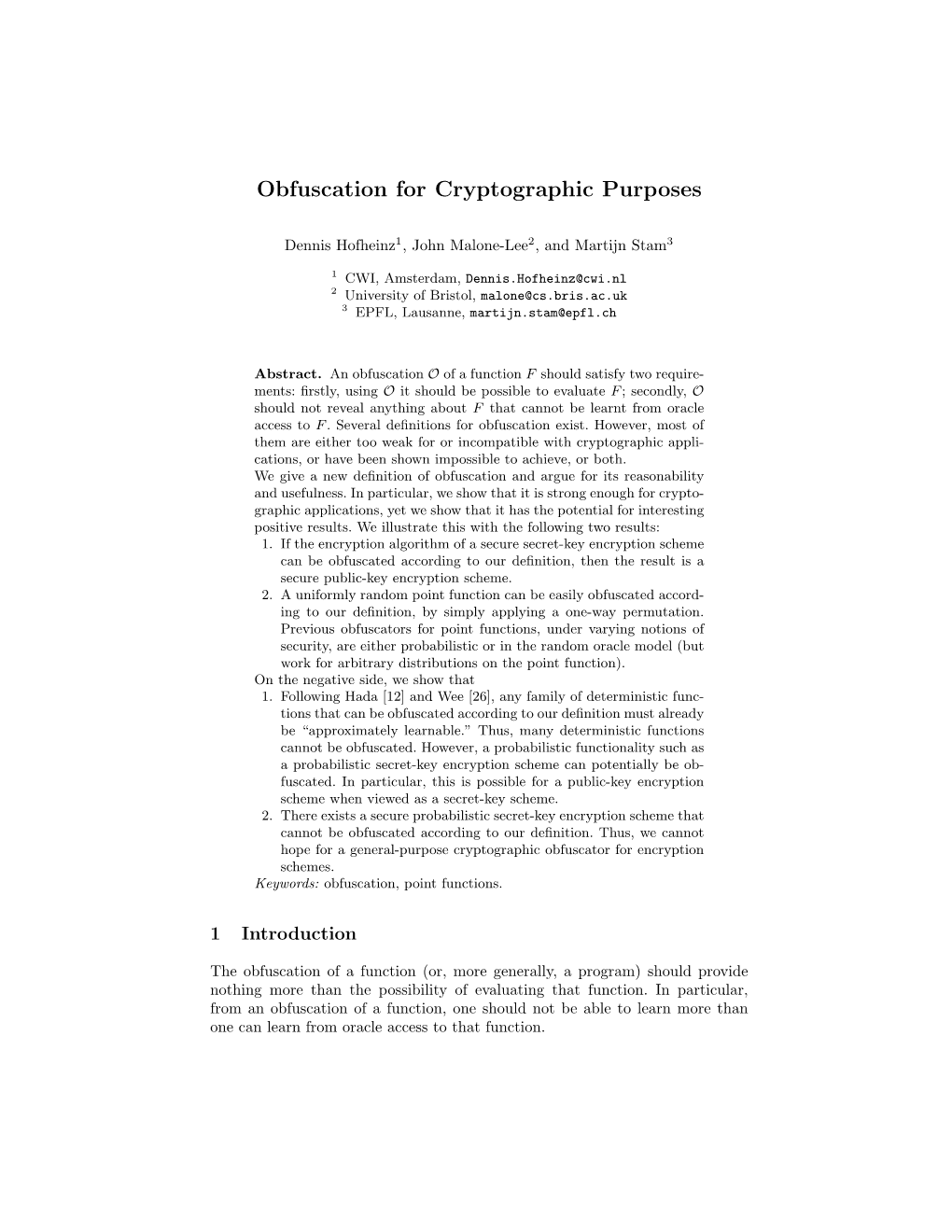

Obfuscation for Cryptographic Purposes

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Aristotle's Illicit Quantifier Shift: Is He Guilty Or Innocent

Aristos Volume 1 Issue 2 Article 2 9-2015 Aristotle's Illicit Quantifier Shift: Is He Guilty or Innocent Jack Green The University of Notre Dame Australia Follow this and additional works at: https://researchonline.nd.edu.au/aristos Part of the Philosophy Commons, and the Religious Thought, Theology and Philosophy of Religion Commons Recommended Citation Green, J. (2015). "Aristotle's Illicit Quantifier Shift: Is He Guilty or Innocent," Aristos 1(2), 1-18. https://doi.org/10.32613/aristos/2015.1.2.2 Retrieved from https://researchonline.nd.edu.au/aristos/vol1/iss2/2 This Article is brought to you by ResearchOnline@ND. It has been accepted for inclusion in Aristos by an authorized administrator of ResearchOnline@ND. For more information, please contact [email protected]. ARISTOTLE’S ILLICIT QUANTIFIER SHIFT: IS HE GUILTY OR INNOCENT? Jack Green 1. Introduction Aristotle’s Nicomachean Ethics (from hereon NE) falters at its very beginning. That is the claim of logicians and philosophers who believe that in the first book of the NE Aristotle mistakenly moves from ‘every action and pursuit aims at some good’ to ‘there is some one good at which all actions and pursuits aim.’1 Yet not everyone is convinced of Aristotle’s seeming blunder.2 In lieu of that, this paper has two purposes. Firstly, it is an attempt to bring some clarity to that debate in the face of divergent opinions of the location of the fallacy; some proposing it lies at I.i.1094a1-3, others at I.ii.1094a18-22, making it difficult to wade through the literature. -

Scope Ambiguity in Syntax and Semantics

Scope Ambiguity in Syntax and Semantics Ling324 Reading: Meaning and Grammar, pg. 142-157 Is Scope Ambiguity Semantically Real? (1) Everyone loves someone. a. Wide scope reading of universal quantifier: ∀x[person(x) →∃y[person(y) ∧ love(x,y)]] b. Wide scope reading of existential quantifier: ∃y[person(y) ∧∀x[person(x) → love(x,y)]] 1 Could one semantic representation handle both the readings? • ∃y∀x reading entails ∀x∃y reading. ∀x∃y describes a more general situation where everyone has someone who s/he loves, and ∃y∀x describes a more specific situation where everyone loves the same person. • Then, couldn’t we say that Everyone loves someone is associated with the semantic representation that describes the more general reading, and the more specific reading obtains under an appropriate context? That is, couldn’t we say that Everyone loves someone is not semantically ambiguous, and its only semantic representation is the following? ∀x[person(x) →∃y[person(y) ∧ love(x,y)]] • After all, this semantic representation reflects the syntax: In syntax, everyone c-commands someone. In semantics, everyone scopes over someone. 2 Arguments for Real Scope Ambiguity • The semantic representation with the scope of quantifiers reflecting the order in which quantifiers occur in a sentence does not always represent the most general reading. (2) a. There was a name tag near every plate. b. A guard is standing in front of every gate. c. A student guide took every visitor to two museums. • Could we stipulate that when interpreting a sentence, no matter which order the quantifiers occur, always assign wide scope to every and narrow scope to some, two, etc.? 3 Arguments for Real Scope Ambiguity (cont.) • But in a negative sentence, ¬∀x∃y reading entails ¬∃y∀x reading. -
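
The entailment claim in the excerpt above (the ∃y∀x reading entails the ∀x∃y reading, but not the other way round) can be checked by brute force on a small model. The following is only a sketch under stated assumptions: the two-element domain, the helper names `forall_exists`, `exists_forall`, and `all_relations`, and the simplification that every element of the domain is a person are all illustrative and do not come from the slides.

```python
from itertools import product

def forall_exists(domain, loves):
    """∀x ∃y love(x, y): everyone loves at least one person."""
    return all(any((x, y) in loves for y in domain) for x in domain)

def exists_forall(domain, loves):
    """∃y ∀x love(x, y): one single person is loved by everyone."""
    return any(all((x, y) in loves for x in domain) for y in domain)

def all_relations(domain):
    """Yield every binary relation on the domain as a set of pairs."""
    pairs = list(product(domain, repeat=2))
    for bits in product([False, True], repeat=len(pairs)):
        yield {pair for pair, keep in zip(pairs, bits) if keep}

# Hypothetical two-person domain; every element is assumed to be a person.
domain = ["a", "b"]

# Whenever the ∃y∀x reading is true, the ∀x∃y reading is true as well.
entailment_holds = all(
    forall_exists(domain, rel)
    for rel in all_relations(domain)
    if exists_forall(domain, rel)
)

# The converse fails: a loves b and b loves a, but nobody is loved by everyone.
cyclic = {("a", "b"), ("b", "a")}
converse_fails = forall_exists(domain, cyclic) and not exists_forall(domain, cyclic)

print(entailment_holds, converse_fails)
```

A finite check like this illustrates the entailment on one small domain only; it is not a proof for arbitrary domains.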

Discrete Mathematics, Chapter 1.4-1.5: Predicate Logic

Discrete Mathematics, Chapter 1.4-1.5: Predicate Logic Richard Mayr University of Edinburgh, UK Richard Mayr (University of Edinburgh, UK) Discrete Mathematics. Chapter 1.4-1.5 1 / 23 Outline 1 Predicates 2 Quantifiers 3 Equivalences 4 Nested Quantifiers Propositional Logic is not enough Suppose we have: “All men are mortal.” “Socrates is a man”. Does it follow that “Socrates is mortal”? This cannot be expressed in propositional logic. We need a language to talk about objects, their properties and their relations. Predicate Logic Extend propositional logic by the following new features. Variables: x, y, z, ... Predicates (i.e., propositional functions): P(x), Q(x), R(y), M(x, y), ... Quantifiers: ∀, ∃. Propositional functions are a generalization of propositions. Can contain variables and predicates, e.g., P(x). Variables stand for (and can be replaced by) elements from their domain. Propositional Functions Propositional functions become propositions (and thus have truth values) when all their variables are either replaced by a value from their domain, or bound by a quantifier. P(x) denotes the value of propositional function P at x. The domain is often denoted by U (the universe). Example: Let P(x) denote “x > 5” and U be the integers. Then P(8) is true. P(5) is false. -
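
The example from the excerpt above, with P(x) denoting “x > 5”, can be written as an ordinary Boolean function. This is a minimal sketch: since the integers cannot be enumerated, the finite range `U_SAMPLE` is an assumed stand-in for the universe U, chosen only for illustration.

```python
def P(x):
    """Propositional function P(x): 'x > 5'."""
    return x > 5

# Replacing the variable by a value from the domain yields a proposition.
p_of_8 = P(8)   # true
p_of_5 = P(5)   # false

# Binding the variable with a quantifier also yields a proposition.
# U_SAMPLE is a hypothetical finite slice of the integers, not the full domain.
U_SAMPLE = range(-10, 11)
exists_P = any(P(x) for x in U_SAMPLE)   # ∃x P(x) over the sample
forall_P = all(P(x) for x in U_SAMPLE)   # ∀x P(x) over the sample

print(p_of_8, p_of_5, exists_P, forall_P)
```

Over the sample, the existential statement holds (for instance x = 6) and the universal one fails (for instance x = 0); both verdicts carry over to the full set of integers because the same witnesses exist there.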

The Comparative Predictive Validity of Vague Quantifiers and Numeric

© European Survey Research Association Survey Research Methods (2014) ISSN 1864-3361 Vol.8, No. 3, pp. 169-179 http://www.surveymethods.org Is Vague Valid? The Comparative Predictive Validity of Vague Quantifiers and Numeric Response Options Tarek Al Baghal Institute of Social and Economic Research, University of Essex A number of surveys, including many student surveys, rely on vague quantifiers to measure behaviors important in evaluation. The ability of vague quantifiers to provide valid information, particularly compared to other measures of behaviors, has been questioned within both survey research generally and educational research specifically. Still, there is a dearth of research on whether vague quantifiers or numeric responses perform better in regards to validity. This study examines measurement properties of frequency estimation questions through the assessment of predictive validity, which has been shown to indicate performance of competing question formats. Data from the National Survey of Student Engagement (NSSE), a preeminent survey of university students, is analyzed in which two psychometrically tested benchmark scales, active and collaborative learning and student-faculty interaction, are measured through both vague quantifier and numeric responses. Predictive validity is assessed through correlations and regression models relating both vague and numeric scales to grades in school and two education experience satisfaction measures. Results support the view that the predictive validity is higher for vague quantifier scales, and hence better measurement properties, compared to numeric responses. These results are discussed in light of other findings on measurement properties of vague quantifiers and numeric responses, suggesting that vague quantifiers may be a useful measurement tool for behavioral data, particularly when it is the relationship between variables that are of interest. -

Propositional Logic, Truth Tables, and Predicate Logic (Rosen, Sections 1.1, 1.2, 1.3) TOPICS

Propositional Logic, Truth Tables, and Predicate Logic (Rosen, Sections 1.1, 1.2, 1.3) TOPICS • Propositional Logic • Logical Operations • Equivalences • Predicate Logic Logic? What is logic? Logic is a truth-preserving system of inference. Truth-preserving: If the initial statements are true, the inferred statements will be true. System: a set of mechanistic transformations, based on syntax alone. Inference: the process of deriving (inferring) new statements from old statements. Propositional Logic • A proposition is a statement that is either true or false • Examples: • This class is CS122 (true) • Today is Sunday (false) • It is currently raining in Singapore (???) • Every proposition is true or false, but its truth value (true or false) may be unknown Propositional Logic (II) • A propositional statement is one of: • A simple proposition • denoted by a capital letter, e.g. ‘A’. • A negation of a propositional statement • e.g. ¬A : “not A” • Two propositional statements joined by a connective • e.g. A ∧ B : “A and B” • e.g. A ∨ B : “A or B” • If a connective joins complex statements, parentheses are added • e.g. A ∧ (B∨C) Truth Tables • The truth value of a compound propositional statement is determined by its truth table • Truth tables define the truth value of a connective for every possible truth value of its terms Logical negation • Negation of proposition A is ¬A • A: It is snowing. • ¬A: It is not snowing • A: Newton knew Einstein. • ¬A: Newton did not know Einstein. -

Formal Logic: Quantifiers, Predicates, and Validity

Formal Logic: Quantifiers, Predicates, and Validity CS 130 – Discrete Structures Variables and Statements • Variables: A variable is a symbol that stands for an individual in a collection or set. For example, the variable x may stand for one of the days. We may let x = Monday, x = Tuesday, etc. • We normally use letters at the end of the alphabet as variables, such as x, y, z. • A collection of objects is called the domain of objects. For the above example, the days in the week is the domain of variable x. Quantifiers • Propositional wffs have rather limited expressive power. E.g., “For every x, x > 0”. • Quantifiers: Quantifiers are phrases that refer to given quantities, such as "for some" or "for all" or "for every", indicating how many objects have a certain property. • Two kinds of quantifiers: – Universal Quantifier: represented by ∀, “for all”, “for every”, “for each”, or “for any”. – Existential Quantifier: represented by ∃, “for some”, “there exists”, “there is a”, or “for at least one”. Predicates • Predicate: It is the verbal statement which describes the property of a variable. Usually represented by the letter P, the notation P(x) is used to represent some unspecified property or predicate that x may have. – P(x) = x has 30 days. – P(April) = April has 30 days. – What is the truth value of (∀x)P(x) where x is all the months and P(x) = x has less than 32 days • Combining the quantifier and the predicate, we get a complete statement of the form (∀x)P(x) or (∃x)P(x) • The collection of objects is called the domain of interpretation, and it must contain at least one object. -

Quantifiers and Dependent Types

Quantifiers and dependent types Constructive Logic (15-317) Instructor: Giselle Reis Lecture 05 We have largely ignored the quantifiers so far. It is time to give them the proper attention, and: (1) design its rules, (2) show that they are locally sound and complete and (3) give them a computational interpretation. Quantifiers are statements about some formula parametrized by a term. Since we are working with a first-order logic, this term will have a simple type τ, different from the type o of formulas. A second-order logic allows terms to be of type τ → ι, for simple types τ and ι, and higher-order logics allow terms to have arbitrary types. In particular, one can quantify over formulas in second- and higher-order logics, but not on first-order logics. As a consequence, the principle of induction (in its general form) can be expressed on those logics as: ∀P. ∀n. (P(z) ∧ (∀x. P(x) ⊃ P(s(x))) ⊃ P(n)) But this comes with a toll, so we will restrict ourselves to first-order in this course. Also, we could have multiple types for terms (many-sorted logic), but since a logic with finitely many types can be reduced to a single-sorted logic, we work with a single type for simplicity, called τ. 1 Rules in natural deduction To design the natural deduction rules for the quantifiers, we will follow the same procedure as for the other connectives and look at their meanings. Some examples will also help on the way. Since we now have a new element in our language, namely, terms, we will have a new judgment a : τ denoting that the term a has type τ. -

Vagueness and Quantification

Vagueness and Quantification (postprint version) Journal of Philosophical Logic first online, 2015 Andrea Iacona This paper deals with the question of what it is for a quantifier expression to be vague. First it draws a distinction between two senses in which quantifier expressions may be said to be vague, and provides an account of the distinction which rests on independently grounded assumptions. Then it suggests that, if some further assumptions are granted, the difference between the two senses considered can be represented at the formal level. Finally, it outlines some implications of the account provided which bear on three debated issues concerning quantification. 1 Preliminary clarifications Let us start with some terminology. First of all, the term ‘quantifier expression’ will designate expressions such as ‘all’, ‘some’ or ‘more than half of’, which occur in noun phrases as determiners. For example, in ‘all philosophers’, ‘all’ occurs as a determiner of ‘philosophers’, and the same position can be occupied by ‘some’ or ‘more than half of’. This paper focuses on simple quantified sentences containing quantifier expressions so understood, such as the following: (1) All philosophers are rich (2) Some philosophers are rich (3) More than half of philosophers are rich Although this is a very restricted class of sentences, it is sufficiently representative to deserve consideration on its own. In the second place, the term ‘domain’ will designate the totality of things over which a quantifier expression is taken to range. -

Neofregeanism and Quantifier Variance∗

NeoFregeanism and Quantifier Variance∗ Theodore Sider Aristotelian Society, Supplementary Volume 81 (2007): 201–32 NeoFregeanism is an intriguing but elusive philosophy of mathematical existence. At crucial points, it goes cryptic and metaphorical. I want to put forward an interpretation of neoFregeanism—perhaps not one that actual neoFregeans will embrace—that makes sense of much of what they say. NeoFregeans should embrace quantifier variance.1 1. NeoFregeanism The neoFregeanism of Bob Hale and Crispin Wright is an attempt to resuscitate Frege’s logicism about arithmetic. Its goal is to combine two ideas. First: platonism about arithmetic. There really do exist numbers; numbers are mind-independent. Second: logicism. Arithmetic derives from logic plus definitions. Thus, arithmetic knowledge rests on logical knowledge, even though its object is a realm of mind-independent abstract entities. 1.1 Frege on arithmetic Let us review Frege’s attempt to derive arithmetic from logic plus definitions. “Arithmetic” here means second-order Peano arithmetic. “Logic” means (impredicative) second-order logic.2 The “definition” is what is now known as Hume’s Principle: Hume’s Principle ∀F∀G(#x:Fx = #x:Gx ↔ Eq(F,G)) ∗Matti Eklund’s work connecting neoFregeanism to questions about the ontology of material objects (2006b, 2006a) sparked my interest in these topics. Thanks to Matti for helpful comments, and to Frank Arntzenius, Deniz Dagci, Kit Fine, John Hawthorne, Eli Hirsch, Anders Strand, Jason Turner, Dean Zimmerman, attendees of the 2006 BSPC conference (especially Joshua Brown, my commentator), and participants in my Spring 2006 seminar on metaontology. -

Code Obfuscation Against Abstraction Refinement Attacks

Under consideration for publication in Formal Aspects of Computing Code Obfuscation Against Abstraction Refinement Attacks Roberto Bruni1, Roberto Giacobazzi2,3 and Roberta Gori1 1 Dipartimento di Informatica, Università di Pisa 2 Dipartimento di Informatica, Università di Verona 3 IMDEA SW Institute, Spain Abstract. Code protection technologies require anti reverse engineering transformations to obfuscate programs in such a way that tools and methods for program analysis become ineffective. We introduce the concept of model deformation inducing an effective code obfuscation against attacks performed by abstract model checking. This means complicating the model in such a way a high number of spurious traces are generated in any formal verification of the property to disclose about the system under attack. We transform the program model in order to make the removal of spurious counterexamples by abstraction refinement maximally inefficient. Because our approach is intended to defeat the fundamental abstraction refinement strategy, we are independent from the specific attack carried out by abstract model checking. A measure of the quality of the obfuscation obtained by model deformation is given together with a corresponding best obfuscation strategy for abstract model checking based on partition refinement. Keywords: Code obfuscation, verification, model checking, refinement 1. Introduction 1.1. The scenario Software systems are a strategic asset, which in addition to correctness deserves security and protection. This is particularly critical with the increase of mobile devices and ubiquitous computings, where the traditional black-box security model, with the attacker not able to see into the implementation system, is not adequate anymore. Code protection technologies are increasing their relevance due to the ubiquitous nature of modern untrusted environments where code runs. -

Logical Quantifiers

Logical Quantifiers 1. Subjects and Predicates: Here is an argument: 1. Chad is a duck. 2. All ducks are rabbits. 3. Therefore, Chad is a rabbit. We saw in unit one that this is a VALID argument (though it’s not sound). But, what would the sequent of this argument look like? Remember, in unit two we said that each letter must represent a STATEMENT—i.e., a string of words that can either be true or false. But, then, each line of the argument above is a different atomic statement. For instance, “Chad is a duck” is not a compound statement—it doesn’t have any simpler statements that it could be broken down into. (e.g., “Chad” is not a statement. It is just a name. It cannot be true or false.) So, would the sequent look like this? Sequent? D , A Ⱶ R But, how would one go about deriving “R” from “D” and “A”? The answer is that, in Propositional Logic, we cannot. Somehow, we need to be able to dig deeper, and be able to express the PARTS of propositions. That’s what this unit is about. In this unit, we will learn a new language: The language of Predicate Logic. Take a look at statement (1): “Chad is a duck.” It has a subject and a predicate. A subject of a sentence is the thing that some property or class is being attributed to, the predicate is the property or class that is being attributed to the subject. In this unit, we’re no longer going to use capital letters to represent atomic statements. -

Interpreting Scope Ambiguity

INTERPRETING SCOPE AMBIGUITY IN FIRST AND SECOND LANGUAGE PROCESSING: UNIVERSAL QUANTIFIERS AND NEGATION A DISSERTATION SUBMITTED TO THE GRADUATE DIVISION OF THE UNIVERSITY OF HAWAI‘I IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PHILOSOPHY IN LINGUISTICS MAY 2009 By Sunyoung Lee Dissertation Committee: William O’Grady, Chairperson Kamil Ud Deen Amy Schafer Bonnie Schwartz Ho-min Sohn Shuqiang Zhang We certify that we have read this dissertation and that, in our opinion, it is satisfactory in scope and quality as a dissertation for the degree of Doctor of Philosophy in Linguistics. DISSERTATION COMMITTEE Chairperson ii © Copyright 2009 by Sunyoung Lee iii ACKNOWLEDGEMENTS Although working on the dissertation was never an easy task, I was very fortunate to have opportunities to interact with a great many people who have helped to make this work possible. First and foremost, the greatest debt is to my advisor, William O’Grady. He has been watching my every step during my PhD program throughout the good times and the difficult ones. He was not only extraordinarily patient with any questions but also tirelessly generous with his time and his expertise. He helped me to shape my linguistic thinking through his unparalleled intellectual rigor and critical insights. I have learned so many things from him, including what a true scholar should be. William epitomizes the perfect mentor! I feel extremely blessed to have worked under his guidance. I am also indebted to all of my committee members. I am very grateful to Amy Schafer, who taught me all I know about psycholinguistic research.