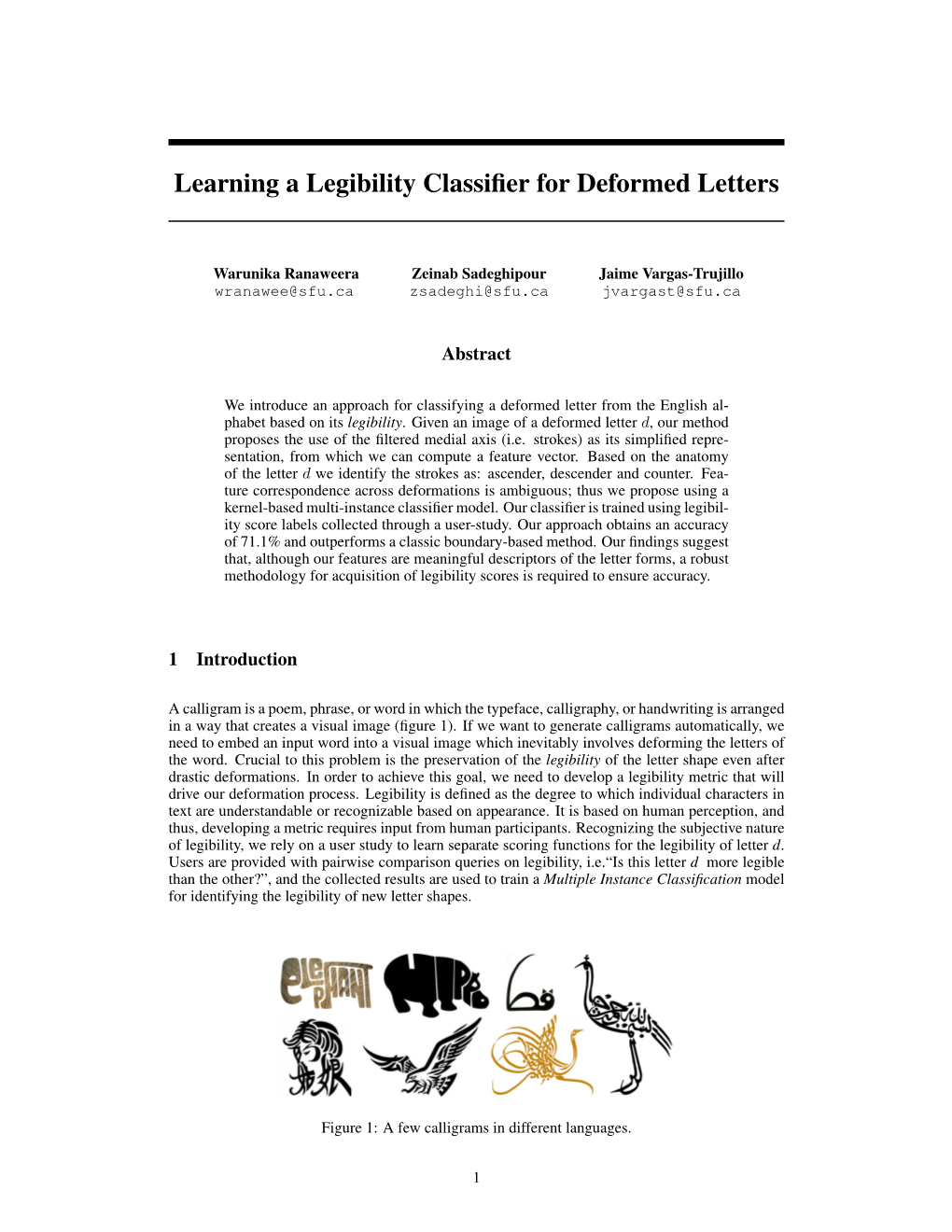

Learning a Legibility Classifier for Deformed Letters

Total Pages: 16

File Type:pdf, Size:1020Kb


Recommended publications


Letters Are Made for Reading

Letters are made for reading. A lot of things have to be taken into consideration when you make typographical choices. With the typography of logos and headlines, etc., the style and tone of your publication is determined. But when it comes to the body text, legibility is more important than anything else. BACK IN THE LATE SEVENTIES when I was studying architecture at the Royal Academy of Arts, one of the rules we were taught was: "A building must have a distinctive entrance". That ought to make sense: It hardly matters what's inside a house if you can't find the door, does it? Good typography, I'd say, is like the entrance to a house. It should lead you inside, help you to the contents, which is indeed the most important thing. But then again, what is good typography? Well, as there are numerous disciplines of graphic design, this question does not have one single, or simple, answer. … In "micro" typography, on the other hand, legibility is more important than style. Or maybe we ought to talk about "readability", a phrase used by some typographers, making the point that what you find legible depends more than anything else on what you are used to reading. However perfect the letterforms of a new typeface, it may still appear hard to read if people think it looks strange. Perhaps this is why most people still prefer text with serifs even though repeated surveys have shown no legibility differences between sans and serif typefaces. If you read the same newspaper every day, …

Basic Styles of Lettering for Monuments and Markers.Indd

BASIC STYLES OF LETTERING FOR MONUMENTS AND MARKERS. Monument Builders of North America, Inc. A Guide To The Selection of LETTERING. From primitive times, man has sought to communicate with his fellow men through symbols and graphics which conveyed meaning. Slowly he evolved signs and hieroglyphics which became the visual expression of his language. Ultimately, this process evolved into the writing and the alphabets of the various tongues and civilizations. The early scribes and artists refined these alphabets, and the development of printing led to the design of alphabets of related character and ready readability. Memorial art, one of the oldest of the arts, was among the first to use symbols and “letters” to inscribe lasting records and history into stone. The sculptors and carvers of each generation influenced the form of letters and numerals and used them to add both meaning … crude or garish or awkward letters, but in letters of harmonized alphabets which have dignity, balance and legibility. At the same time, they are letters which are designed to engrave or incise cleanly and clearly into monumental stone, and to resist change or obliteration through year after year of exposure. The purpose of this book is to illustrate the basic styles or types of alphabets which have been proved in memorial art, and which are both appropriate and practical in the lettering of monuments and markers. Lettering or engraving of family memorials or individual markers is done today with superb fidelity through the use of lasers or the sandblast process, which employs a powerful stream or jet of abrasive “sand” to cut into the granite or marble.

Thai Typefaces (Part 1): Assumption on Visibility and Legibility Problems

Rachapoom PUNSONGSERM (1*), Shoji SUNAGA (2), Hisayasu IHARA (3). (1) Graduate School of Design, Kyushu University, Fukuoka, Japan; (1) Faculty of Fine and Applied Arts, Thammasat University, Pathum Thani, Thailand; (2, 3) Faculty of Design, Kyushu University, Fukuoka, Japan. Abstract. Background: Regarding the growing elderly population, which several countries are experiencing right now, various typeface designs have been developed to address the need for increased legibility and visibility. Certainly, common factors that affect the well-being of the elderly are related to visual acuity. The universal design fonts (UD fonts) developed in Japan are regarded as a role model for the development of highly legible and visible Thai typefaces. As Thailand’s society matures, the concern for creating a Thai UD font based on human-centered design is ideal for the purpose of supporting elderly people as well as people with amblyopia and low vision. Methods: As the first step to developing a Thai UD font, as a preliminary study, we qualitatively tested the tolerance of Thai characters under blurred conditions. We used the fifty Thai conventional text fonts in the boundary of ‘The Table of Thai Character's Relationship’ in the same character heights as stimuli. The stimuli simulated the visual acuity of people with poor vision at different blur levels. Results: From the tests, we have established the expected Thai confused pairs, as the assumptions. We found the simulated condition revealed many problems with the visibility and the legibility matters of Thai Characters, as the assumptions; however, these findings led to ideas and possible solutions, which may provide assistance for the approach to designing the first Thai UD font.

Vision Performance Institute

Vision Performance Institute Technical Report. Individual character legibility. James E. Sheedy, OD, PhD; Yu-Chi Tai, PhD; John Hayes, PhD. The purpose of this study was to investigate the factors that influence the legibility of individual characters. Previous work in our lab [2], including the first study in this sequence, has studied the relative legibility of fonts with different anti-aliasing techniques or other presentation media, such as paper. These studies have tested the relative legibility of a set of characters configured with the tested conditions. However, the relative legibility of individual characters within the character set has not been studied. While many factors seem to affect the legibility of a character (e.g., character typeface, character size, image contrast, character rendering, the type of presentation media, the amount of text presented, viewing distance, etc.), it is not clear what makes a character more legible when presented in one way than in another. In addition, the importance of those different factors to the legibility of one character may not hold when the same set of factors is presented in another character. Some characters may be more legible in one typeface and others more legible in another typeface. What are the character features that affect legibility? For example, some characters have wider openings (e.g., the opening of “c” in Calibri is wider than the character “c” in Helvetica); some letter g’s have double bowls while some have single (e.g., “g” in Batang vs. “g” in Verdana); some have longer ascenders or descenders (e.g., “b” in Constantia vs. …

Typographic Terms Alphabet the Characters of a Given Language, Arranged in a Traditional Order; 26 Characters in English

Typographic Terms. alphabet: The characters of a given language, arranged in a traditional order; 26 characters in English. ascender: The part of a lowercase letter that rises above the main body of the letter (as in b, d, h). The part that extends above the x-height of a font. bad break: Refers to widows or orphans in text copy, or a break that does not make sense of the phrasing of a line of copy, causing awkward reading. baseline: The imaginary line upon which text rests. Descenders extend below the baseline. Also known as the "reading line." The line along which the bases of all capital letters (and most lowercase letters) are positioned. bleed: An area of text or graphics that extends beyond the edge of the page. Commercial printers usually trim the paper after printing to create bleeds. body type: The specific typeface that is used in the main text. break: The place where type is divided; may be the end of a line or paragraph, or as it reads best in display type. bullet: A typeset character (a large dot or symbol) used to itemize lists or direct attention to the beginning of a line. (See dingbat.) cap height: The height of the uppercase letters within a font. (See also cap line.) caps and small caps: The typesetting option in which the lowercase letters are set as small capital letters; usually 75% the height of the size of the innercase. character: A symbol in writing. A letter, punctuation mark or figure. character count: An estimation of the number of characters in a selection of type.

Taking It In

Legibility, Readability: What makes type eas… Typography, once a craft practiced quietly by trade specialists, has been democratized. Thousands of computer users (not just those in the publishing business, as in the past) are suddenly responsible for setting type. Most of these new typographers are looking for advice on how to set type so it’s easy to read. That’s not surprising, since there’s not much useful guidance on this topic in computer manuals. And despite the recent boom in information on type and typography (with magazine articles and a spate of books, both new editions of old classics and brand-new works), too many of the precepts seem counterintuitive, and some even conflict with each other. It’s not all that useful to look at what the pros do, either, since so much of what we get in the mail and on newsstands seems hard to read or ugly or both. (And a lot of it seems to ignore most of the advice in the books and magazines.) One solution is to turn to research for scientific assurance about what’s readable and what’s not. We may notice that type seems hard to read when it’s too small or set with no leading or reversed out of a color, but is it really hard to read, or merely irritating? In fact, there’s good news and there’s bad news: hundreds of studies of readability have been conducted over the years, but hard-and-fast answers to the most vexing typographic questions are surprisingly difficult to extract from the results.

Legibility of Electronic Paper

24.5 / S. C. Jeng. Legibility of Electronic Paper. Shie-Chang Jeng, Yen-Rong Lin, Kang-Hung Liu and Chi-Chang Liao, Electronics Research & Service Organization, Industrial Technology Research Institute, Hsinchu 310, Taiwan; Chao-Hua Wen, Opto-Electronics & Systems Laboratories, Industrial Technology Research Institute, Hsinchu 310, Taiwan; Chih-Yu Chao and Kong-King Shieh, Department of Industrial Management, National Taiwan University of Science and Technology, Taipei, Taiwan. Abstract: Legibility of electronic paper was evaluated by using the method of letter-search task and subjective visual comfort scaling. The results show that legibility depends on the illumination level, and conventional paper has a higher visual comfort rating than electronic paper although they have the similar performance in the letter-search task. 1. Introduction: Electronic paper has become a forefront topic in the new generation of visual displays due to the light weight, low power consumption and sunlight readability. Among the developing technologies, electronic paper made of electrophoretic electronic ink (E-ink) is likely the only available product in the market with a paper-white appearance [1]. … been investigated. The reflective-type display utilizes the ambient light as a reading source, so it is expected that the legibility may be varying with the ambient light source and illuminance. In this paper, we have studied the legibility of electronic paper under three different diffusive light sources with five different illumination levels. The ergonomic evaluation and comparison between electronic paper and conventional paper could reveal that whether the current specifications are good enough for reading. 2. Experimental design: Sony LIBRIé e-book made of E-ink [3] (CR:~4, …

Base Monospace

SPACE PROBE: Investigations Into Monospace. Introducing Base Monospace Typeface. BASE MONOSPACE: Typeface design 1997 ZUZANA LICKO, Specimen design RUDY VANDERLANS. Occasionally, we receive inquiries from type users asking us how many kerning pairs our fonts contain. It would seem that the customer wants to be dazzled with numbers. Like cylinders in a car engine or the price earnings ratio of a stock, the higher the number of kerning pairs, the more impressed the customer will be. What they fail to understand is that the art of kerning a typeface is as subjective a discipline as is the drawing of the letters themselves. The fact that a particular typeface has thousands of kerning pairs is relative, since some typefaces require more kerning pairs than others by virtue of their design characteristics. In addition, … Monospaced Versus Proportional Spacing: 1. In a monospaced typeface, such as Base Monospace, each character fits into the same character width.

The Effects of Font Type and Spacing of Text for Online Readability and Performance

CONTEMPORARY EDUCATIONAL TECHNOLOGY, 2014, 5(2), 161-174. The Effects of Font Type and Spacing of Text for Online Readability and Performance. Nafiseh Hojjati & Balakrishnan Muniandy, Universiti Sains Malaysia, Malaysia. Abstract: Texts are a group of letters which are printed or displayed in a particular style and size. In the course of the fast speed of technological development everywhere and expanding use of computer based instruction such as online courses, students spend more time on a computer screen than printed media. Texts have been the main element to convey messages. They have also been a significant component for learning. The main goal of this research is to measure the effects of font type and spacing of on-screen text and its readability in improving and boosting the learner’s ability to read easily, recall information, and enhance their reading speed and comprehension from on-screen text with different topics. The readability of text on screens is necessary to ensure effective engagement in order to enhance the level of students’ readability. For this purpose two font types were selected for the respondents, Times New Roman (serif) and Verdana (sans serif). Verdana was designed only for computer screen display. A readability test on a computer screen was conducted on 30 postgraduate students. Overall, the results showed that there was a significant difference between the readability of serif and sans serif font types for on-screen display. The research findings suggest the Verdana font type as a better choice in displaying long text for on-screen display. Keywords: Font type; Readability; Spacing; On-screen text; Serif; Sans serif. Introduction: Texts are a collection of letters and words which are printed or displayed in a particular style and size.

Reading Typography-Sage Encyclopedia of Perception

Reading typography. The configuration of text rendered by printed, mechanical or electronic means encompasses most of what we call typography. Typography may have an impact on legibility, the ease with which strings of letter forms can be decoded into words by a competent reader, and it may serve to supplement or support the semantic content of a text communication. Reading, of course, is an impressive perceptual and cognitive feat, and studying the effects on it of both experimental manipulation and ‘naturalistic’ observation of typographic variables may aid our understanding of more fundamental aspects of object and word recognition, as well as of reading itself. Typographic effects on legibility and typographic communications may operate at both small and large scales. At small scales, the forms making up individual letters (known as glyphs) have an impact on readers’ ability to distinguish and identify individual letters and hence to recognize words or symbols. Stylistic variants of letter forms such as italics and boldface may indicate emphasis, while stylized font families may convey cultural messages (e.g. “old-English”, “Broadway”, or cursive-style fonts). At larger scales, grosser features of typography such as margins, columnar organization, and inter-letter and inter-line spacing (termed ‘leading’) may also affect readability. Such features may also occasionally convey semantic content. An example of this is when very wide spacing (letter or line) is used to communicate opulence or sophistication. How typography impacts legibility and readability has important applications in signage design. On highways, where drivers may need to read signs from great distance and at high speeds, typography may have an impact on safety.

DESN 130 - TYPOGRAPHY Page 1 Kerning & Tracking Kerning and Tracking Are Two Related and Frequently Confused Typographical Terms

DESN 130 - TYPOGRAPHY. Kerning & Tracking. Kerning and tracking are two related and frequently confused typographical terms. Both refer to the adjustment of space between characters of type, also called letterspacing. Headlines usually benefit from kerning, and text set in ALL CAPS almost always requires kerning for best appearance. Kerning is Selective Letterspacing. Kerning is the adjustment of space between PAIRS of letters. Your headline type will not always look good right out of the computer. Some pairs of letters create awkward spaces that look bad and hinder legibility. Kerning adds or subtracts space between letters to create more visually appealing and readable text. In the era of metal type, the term kern defined the part of the letter that extended beyond the metal slug, allowing letters to be moved closer together. In digital type, kerning information for many commonly kerned character pairs is built into most quality fonts. Some software programs use these built-in kerning tables to apply automatic kerning to text. Anywhere from 50 to 1000 or more kerning pairs may be defined for any one font. The goal of kerning is to give the type the appearance of even and proportional spacing between all of the letters. This requires OPTICAL spacing, not MECHANICAL spacing. In mechanical spacing, there is an equal distance between each letter. In optical spacing, the distance is relative to the shapes of the letters, so that the letters form a natural-looking group. Some fonts are better at doing this automatically than others. To optically space letters, you must look at the shape of the letter and use these basic rules: • More space – between two vertical shapes • Less space – between a vertical shape and a round shape • Even less space – between two round shapes. Some specific combinations of letters will be more problematic than others, especially when your type is set in all caps.
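The three optical-spacing rules quoted in the excerpt above can be illustrated with a small sketch. Everything specific here is an assumption made for illustration: the side-shape table `SIDES`, the gap values in `GAP` (arbitrary units), and the function names are hypothetical and do not come from the course notes or from any real font's kerning table.

```python
# Illustrative sketch of mechanical vs. optical letter spacing.
# SIDES and GAP are made-up values for demonstration only.

# (left side shape, right side shape) for a few capital letters.
SIDES = {
    "H": ("vertical", "vertical"),
    "I": ("vertical", "vertical"),
    "N": ("vertical", "vertical"),
    "D": ("vertical", "round"),
    "O": ("round", "round"),
}

# Gap between the facing sides of two neighbouring letters, in arbitrary units:
# most space for vertical/vertical, less for vertical/round, least for round/round.
GAP = {
    ("vertical", "vertical"): 100,
    ("vertical", "round"): 80,
    ("round", "vertical"): 80,
    ("round", "round"): 60,
}


def mechanical_gaps(word, gap=100):
    """Mechanical spacing: the same distance between every pair of letters."""
    return [gap] * (len(word) - 1)


def optical_gaps(word):
    """Optical spacing: the distance depends on the shapes that face each other."""
    gaps = []
    for left, right in zip(word, word[1:]):
        facing = (SIDES[left][1], SIDES[right][0])  # right side of left letter, left side of right letter
        gaps.append(GAP[facing])
    return gaps


if __name__ == "__main__":
    print(mechanical_gaps("HIDON"))
    print(optical_gaps("HIDON"))
```

Real fonts store such adjustments per character pair in kerning tables rather than deriving them from a shape rule; the rule-based lookup here only mirrors the hand-spacing guidance in the text.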

The Effect of Text Typographical Features on Legibility, Comprehension, and Retrieval of EFL Learners

English Language Teaching; Vol. 5, No. 8; 2012 ISSN 1916-4742 E-ISSN 1916-4750 Published by Canadian Center of Science and Education The Effect of Text Typographical Features on Legibility, Comprehension, and Retrieval of EFL Learners Hassan Soleimani1 & Elham Mohammadi1 1 Payame Noor University, Tehran, Iran Correspondence: Hassan Soleimani, Department of Applied Linguistics, Payame Noor University, Tehran, Iran. PO box 19395-3697. Tel: 98-912-253-5368. E-mail: [email protected] Received: May 1, 2012 Accepted: May 18, 2012 Online Published: July 11, 2012 doi:10.5539/elt.v5n8p207 URL: http://dx.doi.org/10.5539/elt.v5n8p207 Abstract This experimental study investigated the relationship between font type, font size, and line spacing and legibility, as measured by speed of reading, comprehension, and recalling. Instruments for testing legibility and reading comprehension were presented in eight typographical styles in print. The study tested 90 students for legibility and 76 students for comprehension. The subjects were chosen from Urmia Language Center in Urmia, Iran, and they were both girls and boys. Two weeks after the reading comprehension test, the subjects took part in recalling test. In all tests, the subjects were randomly assigned to one of the eight different typographical styles with Arial or Bookman Old Style (font selection) and 10pt or 12pt (font size). But the subjects had both set solid and double spaced leading in their instruments. Results indicated that the 12pt was read faster than 10pt. But font selection and line spacing did not have any significant effect on reading speed. Also, there were no significant results with regard to comprehension and recalling.