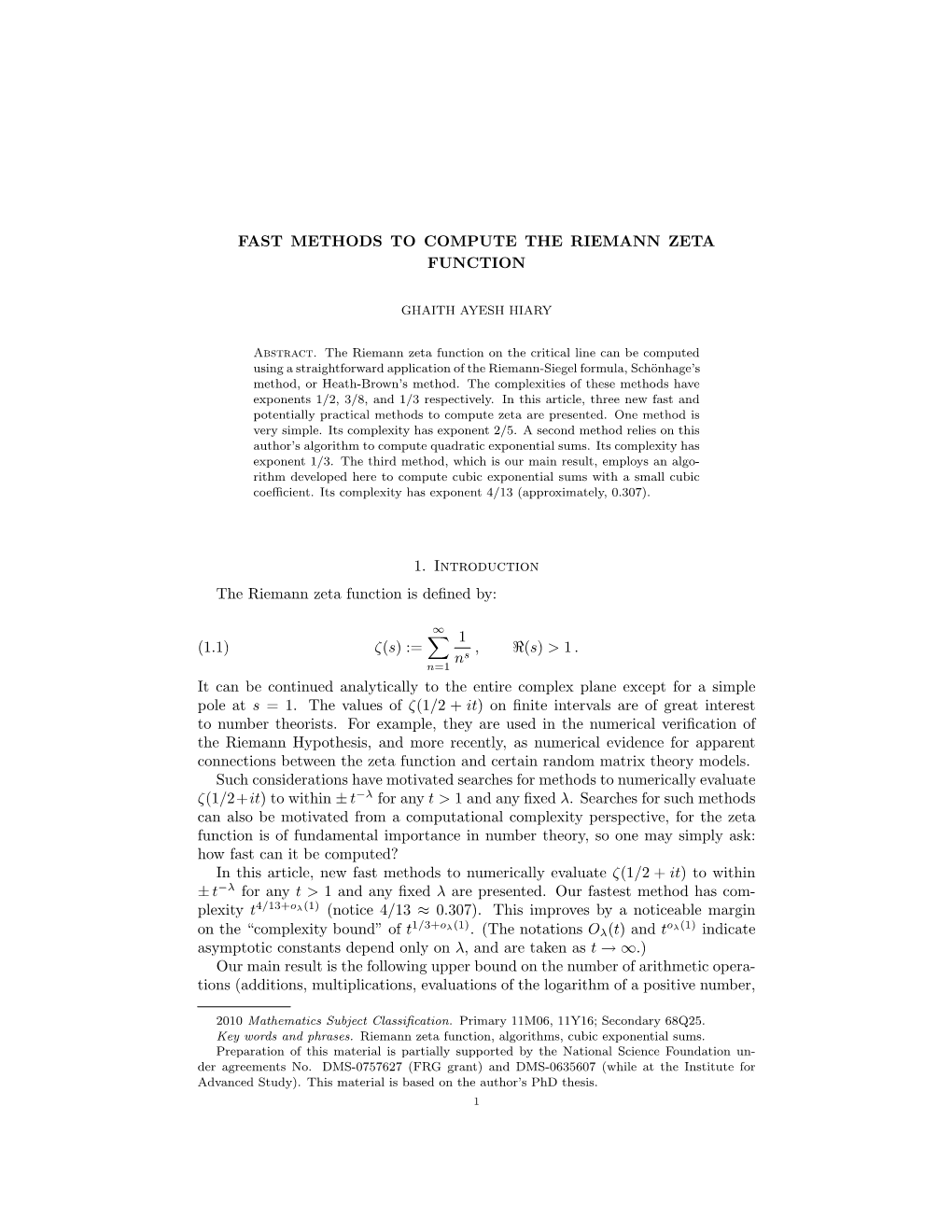

Fast Methods to Compute the Riemann Zeta Function

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Exponential Sums and the Distribution of Prime Numbers

Exponential Sums and the Distribution of Prime Numbers. Jori Merikoski. University of Helsinki, Faculty of Science, Department of Mathematics and Statistics. Subject: Mathematics. Master's thesis, February 2016, 102 p. (Provided by Helsingin yliopiston digitaalinen arkisto via core.ac.uk.) Abstract: We study growth estimates for the Riemann zeta function on the critical strip and their implications for the distribution of prime numbers. In particular, we use the growth estimates to prove the Hoheisel-Ingham Theorem, which gives an upper bound for the difference between consecutive prime numbers. We also investigate the distribution of prime pairs, in connection with which we offer original ideas. The Riemann zeta function is defined as $\zeta(s) := \sum_{n=1}^{\infty} n^{-s}$ in the half-plane $\operatorname{Re} s > 1$. We extend it to a meromorphic function on the whole plane with a simple pole at $s = 1$, and show that it satisfies the functional equation. We discuss two methods, van der Corput's and Vinogradov's, to give upper bounds for the growth of the zeta function on the critical strip $0 \le \operatorname{Re} s \le 1$. Both of these are based on the observation that $\zeta(s)$ is well approximated on the critical strip by a finite exponential sum $\sum_{n=1}^{T} n^{-s} = \sum_{n=1}^{T} \exp(-s \log n)$. Van der Corput's method uses the Poisson ...

Uniform Distribution of Sequences

UNIFORM DISTRIBUTION OF SEQUENCES. L. KUIPERS, Southern Illinois University; H. NIEDERREITER, Southern Illinois University. A WILEY-INTERSCIENCE PUBLICATION, JOHN WILEY & SONS, New York, London, Sydney, Toronto. Copyright © 1974, by John Wiley & Sons, Inc. All rights reserved. Published simultaneously in Canada. No part of this book may be reproduced by any means, nor transmitted, nor translated into a machine language without the written permission of the publisher. Library of Congress Cataloging in Publication Data: Kuipers, Lauwerens. Uniform distribution of sequences. (Pure and applied mathematics) "A Wiley-Interscience publication." Bibliography: 1. Sequences (Mathematics) I. Niederreiter, H., joint author. II. Title. QA292.K84 515'.242 73-20497 ISBN 0-471-51045-9 Printed in the United States of America 10 9 8 7 6 5 4 3 2 1. To Francina and Gerlinde. PREFACE. The theory of uniform distribution modulo one (Gleichverteilung modulo Eins, équirépartition modulo un) is concerned, at least in its classical setting, with the distribution of fractional parts of real numbers in the unit interval (0, 1). The development of this theory started with Hermann Weyl's celebrated paper of 1916 titled: "Über die Gleichverteilung von Zahlen mod. Eins." Weyl's work was primarily intended as a refinement of Kronecker's approximation theorem, and, therefore, in its initial stage, the theory was deeply rooted in diophantine approximations. During the last decades the theory has unfolded beyond that framework. Today, the subject presents itself as a meeting ground for topics as diverse as number theory, probability theory, functional analysis, topological algebra, and so on.

Exponential Sums and Applications in Number Theory and Analysis

Faculty of Sciences, Department of Mathematics. Exponential sums and applications in number theory and analysis. Frederik Broucke. Promotor: Prof. dr. J. Vindas. Master's thesis submitted in partial fulfilment of the requirements for the degree of Master of Science in Mathematics. Academic year 2017–2018. Preface. The original idea for this thesis was to study Helfgott's proof of the ternary Goldbach conjecture [16]. It soon became clear to me that this would be a monumental task, given the length of the proof (over 300 pages). I therefore decided to study instead the basic principles of the Hardy-Littlewood or circle method, the technique that forms the backbone of Helfgott's proof and that occupies a very important place in additive number theory in general. To this end, during the first semester I read several chapters of the book "The Hardy-Littlewood method" by R.C. Vaughan [37]. This is probably the most difficult mathematical text I have read so far; the scarcity of intermediate steps, the lack of detail, and phrases like "one easily sees that" were often frustrating and demotivating. Still, I persevered, and in hindsight I am really glad that I did. Not only did I learn a great deal about the subject, I also feel that I have become better or more fluent at reading (difficult) mathematical texts in general. After I had read this book, my supervisor, Professor Vindas, gave me the task of applying the ideas and techniques of the circle method to the study of Riemann's function, a "pathological" continuous function that exhibits very irregular pointwise behaviour.

Estimating the Growth Rate of the Zeta-Function Using the Technique of Exponent Pairs

Estimating the Growth Rate of the Zeta-function Using the Technique of Exponent Pairs. Shreejit Bandyopadhyay. July 1, 2014. Abstract. The Riemann zeta function is of prime interest, not only in number theory, but also in many other areas of mathematics. One of the greatest challenges in the study of the zeta function is the understanding of its behaviour in the critical strip $0 < \operatorname{Re}(s) < 1$, where $s = \sigma + it$ is a complex number. In this note, we first obtain a crude bound for the growth of the zeta function in the critical strip directly from its approximate functional equation and then show how the estimation of its growth reduces to a problem of evaluating an exponential sum. We then apply the technique of exponent pairs to get a much improved bound on the growth rate of the zeta function in the critical strip. 1 Introduction. The Riemann zeta function $\zeta(s)$ with $s = \sigma + it$ a complex number can be defined in either of the two following ways: $\zeta(s) = \sum_{n} \frac{1}{n^s}$ or $\zeta(s) = \prod_{p} \left(1 - \frac{1}{p^s}\right)^{-1}$, where in the first expression we sum over all natural numbers while in the second, we take the product over all primes. That these two expressions are equivalent follows directly from the expression of n as a product of primes and the fundamental theorem of arithmetic. It's easy to check that both the Dirichlet series, i.e., the first sum, and the infinite product converge uniformly in any finite region with $\sigma > 1$.
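
The equivalence of the two definitions for $\sigma > 1$ can be checked numerically. The following is only an illustrative sketch and is not taken from the note: the function names `primes_up_to`, `zeta_dirichlet` and `zeta_euler`, as well as the truncation limits, are assumptions chosen for the example. It compares a truncated Dirichlet series with a truncated Euler product.

```python
import math


def primes_up_to(limit):
    """Return all primes <= limit using the sieve of Eratosthenes."""
    if limit < 2:
        return []
    is_prime = [True] * (limit + 1)
    is_prime[0] = is_prime[1] = False
    for p in range(2, math.isqrt(limit) + 1):
        if is_prime[p]:
            for multiple in range(p * p, limit + 1, p):
                is_prime[multiple] = False
    return [n for n in range(2, limit + 1) if is_prime[n]]


def zeta_dirichlet(s, terms):
    """Truncated Dirichlet series: sum of n^(-s) for n = 1..terms."""
    return sum(n ** (-s) for n in range(1, terms + 1))


def zeta_euler(s, prime_limit):
    """Truncated Euler product: product of (1 - p^(-s))^(-1) over primes p <= prime_limit."""
    product = 1.0
    for p in primes_up_to(prime_limit):
        product /= 1.0 - p ** (-s)
    return product


if __name__ == "__main__":
    s = 2.0  # any s with real part > 1 works; complex values are allowed
    print("Dirichlet series:", zeta_dirichlet(s, 10_000))
    print("Euler product:   ", zeta_euler(s, 10_000))
    print("pi^2 / 6:        ", math.pi ** 2 / 6)
```

Both truncations only make sense for $\sigma > 1$; inside the critical strip neither expression converges, which is why the note turns to the approximate functional equation there.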

New Equidistribution Estimates of Zhang Type

NEW EQUIDISTRIBUTION ESTIMATES OF ZHANG TYPE. D.H.J. POLYMATH. arXiv:1402.0811v3 [math.NT] 3 Sep 2014. Abstract. We prove distribution estimates for primes in arithmetic progressions to large smooth squarefree moduli, with respect to congruence classes obeying Chinese Remainder Theorem conditions, obtaining an exponent of distribution $\frac{1}{2} + \frac{7}{300}$. Contents: 1. Introduction. 2. Preliminaries. 3. Applying the Heath-Brown identity. 4. One-dimensional exponential sums. 5. Type I and Type II estimates. 6. Trace functions and multidimensional exponential sum estimates. 7. The Type III estimate. 8. An improved Type I estimate. References. 1. Introduction. In May 2013, Y. Zhang [52] proved the existence of infinitely many pairs of primes with bounded gaps. In particular, he showed that there exists at least one $h \geq 2$ such that the set $\{p \text{ prime} \mid p + h \text{ is prime}\}$ is infinite. (In fact, he showed this for some even h between 2 and $7 \times 10^7$, although the precise value of h could not be extracted from his method.) Zhang's work started from the method of Goldston, Pintz and Yıldırım [23], who had earlier proved the bounded gap property, conditionally on distribution estimates concerning primes in arithmetic progressions to large moduli, i.e., beyond the reach of the Bombieri–Vinogradov theorem. Based on work of Fouvry and Iwaniec [11, 12, 13, 14] and Bombieri, Friedlander and Iwaniec [3, 4, 5], distribution estimates going beyond the Bombieri–Vinogradov range for arithmetic functions such as the von Mangoldt function were already known. However, they involved restrictions concerning the residue classes which were incompatible with the method of Goldston, Pintz and Yıldırım.

On Certain Exponential and Character Sums

On certain exponential and character sums. Bryce Kerr. A thesis in fulfilment of the requirements for the degree of Doctor of Philosophy. School of Mathematics and Statistics, Faculty of Science. March 2017 (a second title page is dated November 2016). Contents: 1 Introduction (1.1 Introduction; 1.2 Illustration of Vinogradov's method; 1.3 Outline of Thesis: 1.3.1 Rational Exponential Sums Over the Divisor Function, 1.3.2 Character Sums Over Shifted Primes, 1.3.3 Mixed Character Sums, 1.3.4 The Fourth Moment of Character Sums; 1.4 Notation; 1.5 Acknowledgement). 2 Rational Exponential Sums over the Divisor Function (2.1 Introduction: 2.1.1 Notation; 2.2 Main Results; 2.3 Combinatorial Decomposition; 2.4 Approximation of Preliminary Sums; 2.5 Dirichlet Series Involving the Divisor Function; 2.6 Proof of Theorem 2.1; 2.7 Proof of Theorem 2.2; 2.8 Proof of Theorem 2.3; 2.9 Proof of Theorem 2.4). 3 Character Sums over Shifted Primes (3.1 Introduction; 3.2 Main Result; 3.3 Reduction to Bilinear Forms; 3.4 The Pólya-Vinogradov Bound; 3.5 Burgess Bounds: 3.5.1 The Case r = 2, 3.5.2 The Case r = 3; 3.6 Complete Sums ...

Lectures on Exponential Sums by Stephan Baier, JNU

Lectures on exponential sums by Stephan Baier, JNU. 1. Lecture 1 - Introduction to exponential sums, Dirichlet divisor problem. The main reference for these lecture notes is [4]. 1.1. Exponential sums. Throughout the sequel, we reserve the notation I for an interval $(a, b]$, where a and b are integers, unless stated otherwise. Exponential sums are sums of the form $\sum_{n \in I} e(f(n))$, where we write $e(z) := e^{2\pi i z}$, and f is a real-valued function, defined on the interval $I = (a, b]$. The function f is referred to as the amplitude function. Note that the summands $e(f(n))$ lie on the unit circle. In applications, f will have some "nice" properties such as to be differentiable, possibly several times, and to satisfy certain bounds on its derivatives. Under suitable conditions, we can expect cancellations in the sum $\sum_{n \in I} e(f(n))$. The object of the theory of exponential sums is to detect such cancellations, i.e. to bound the said sum non-trivially. By the triangle inequality, the trivial bound is $\left| \sum_{n \in I} e(f(n)) \right| \le |I|$, where $|I| = b - a$ is the length of the interval $I = (a, b]$. Exponential sums play a key role in analytic number theory. Often, error terms can be written in terms of exponential sums. Important examples for applications of exponential sums are the Dirichlet divisor problem (estimates for the average order of the divisor function), the Gauss circle problem (counting lattice points enclosed by a circle) and the growth of the Riemann zeta function in the critical strip.
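
To make the contrast between the trivial bound and actual cancellation concrete, here is a small sketch. It is an illustration only: the helper names `e` and `exponential_sum` and the sample amplitude functions are assumptions for this example and do not come from the lecture notes.

```python
import cmath
import math


def e(z):
    """The standard abbreviation e(z) = exp(2*pi*i*z)."""
    return cmath.exp(2j * math.pi * z)


def exponential_sum(f, a, b):
    """Sum of e(f(n)) over the integers n in the interval (a, b]."""
    return sum(e(f(n)) for n in range(a + 1, b + 1))


if __name__ == "__main__":
    a, b = 0, 1000
    alpha = math.sqrt(2)

    # Constant amplitude: no cancellation, the trivial bound |I| = b - a is attained.
    constant = exponential_sum(lambda n: 0.0, a, b)
    # Linear amplitude: a geometric sum, bounded by 1 / |sin(pi * alpha)| for every b.
    linear = exponential_sum(lambda n: alpha * n, a, b)
    # Quadratic amplitude: a Weyl-type sum, shown here only for comparison.
    quadratic = exponential_sum(lambda n: alpha * n * n, a, b)

    print("trivial bound |I| :", b - a)
    print("|constant sum|    :", abs(constant))
    print("|linear sum|      :", abs(linear))
    print("|quadratic sum|   :", abs(quadratic))
```

The linear case can be bounded in closed form because it is a geometric series; for amplitude functions of higher degree one needs the methods the lectures go on to develop.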

Exponential Sums with Multiplicative Coefficients Without the Ramanujan Conjecture

Exponential sums with multiplicative coefficients without the Ramanujan conjecture. Yujiao Jiang, Guangshi Lü, Zhiwei Wang. To cite this version: Yujiao Jiang, Guangshi Lü, Zhiwei Wang. Exponential sums with multiplicative coefficients without the Ramanujan conjecture. Mathematische Annalen, Springer Verlag, 2021, 379 (1-2), pp.589-632. 10.1007/s00208-020-02108-z. hal-03283544. HAL Id: hal-03283544, https://hal.archives-ouvertes.fr/hal-03283544. Submitted on 11 Jul 2021. Abstract. We study the exponential sum involving multiplicative function f under milder conditions on the range of f, which generalizes the work of Montgomery and Vaughan. As an application, we prove cancellation in the sum of additively twisted coefficients of automorphic L-function on $\mathrm{GL}_m$ ($m \geq 4$), uniformly in the additive character. 1. Introduction. Let $\mathcal{M}$ be the class of all complex valued multiplicative functions. For $f \in \mathcal{M}$, the exponential sum involving multiplicative function f is defined by $S(N, \alpha) := \sum_{n \leq N} f(n) e(n\alpha)$ (1.1), where $e(t) = e^{2\pi i t}$.
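
As a concrete instance of the sum (1.1), the sketch below evaluates $S(N, \alpha)$ for the Möbius function, which is one familiar multiplicative function. The choice of the Möbius function and the names `mobius_up_to` and `twisted_sum` are assumptions made for illustration; the paper itself treats a general class of f.

```python
import cmath
import math


def mobius_up_to(n):
    """Return a list mu with mu[k] equal to the Moebius function at k, for 1 <= k <= n."""
    mu = [1] * (n + 1)
    is_prime = [True] * (n + 1)
    for p in range(2, n + 1):
        if is_prime[p]:
            for k in range(p, n + 1, p):
                if k > p:
                    is_prime[k] = False
                mu[k] = -mu[k]  # one sign flip per distinct prime factor
            for k in range(p * p, n + 1, p * p):
                mu[k] = 0  # k is divisible by a square
    mu[0] = 0  # index 0 is unused
    return mu


def twisted_sum(f_values, N, alpha):
    """S(N, alpha) = sum over n <= N of f(n) * e(n * alpha), with e(t) = exp(2*pi*i*t)."""
    return sum(
        f_values[n] * cmath.exp(2j * math.pi * n * alpha) for n in range(1, N + 1)
    )


if __name__ == "__main__":
    N = 10_000
    mu = mobius_up_to(N)
    for alpha in (0.0, 0.5, 1 / 3, math.sqrt(2)):
        print(f"alpha = {alpha:.6f}   |S(N, alpha)| = {abs(twisted_sum(mu, N, alpha)):.3f}")
```

At $\alpha = 0$ the sum reduces to the summatory function of f, so for the Möbius function it equals the Mertens function.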

Exponential Sums with Multiplicative Coefficients

EXPONENTIAL SUMS WITH MULTIPLICATIVE COEFFICIENTS. Abstract. We show that if an exponential sum with multiplicative coefficients is large then the associated multiplicative function is "pretentious". This leads to applications in the circle method. 1. Introduction. 1a. Exponential sums with multiplicative coefficients. Throughout f is a totally multiplicative function with $|f(n)| \le 1$ for all n. Diverse investigations in analytic number theory involve sums like $S(\alpha, N) := \sum_{n \le N} f(n) e(n\alpha)$ where $e(t) = e^{2i\pi t}$. Typically one wants non-trivial upper bounds on $|S(\alpha, N)|$, which is usually less tractable on the major arcs (when α is close to a rational with small denominator) than on the minor arcs (that is, all other $\alpha \in [0, 1]$). Moreover one also often wants asymptotic estimates for $S(\alpha, N)$ on the major arcs, though hitherto this has only been achieved in certain special cases. Our main result is to give such an estimate for all such f (and we will explore several applications). First though we need to introduce a distance function $\mathbb{D}$ between two multiplicative functions f and g with $|f(n)|, |g(n)| \le 1$ [footnote 1]: $\mathbb{D}_m(f(n), g(n); x)^2 := \sum_{p \le x,\ p \nmid m} \frac{1 - \operatorname{Re} f(p)\overline{g(p)}}{p}$. Suppose that $x \ge R$ and $T \ge 1$ are given. Select a primitive character $\psi \pmod{r}$ with $r \le R$, and a real number t with $|t| \le T$ for which $\mathbb{D}(f(n), \psi(n) n^{it}; x)$ is minimized (where $\mathbb{D} = \mathbb{D}_1$). Define $\kappa(m) = 0$ unless m is an integer, in which case $\kappa(m) := \prod_{p^b \| m} \left( (f(p)/p^{it})^b - \psi(p) (f(p)/p^{it})^{b-1} \right)$. Footnote 1: This distance function is particularly useful since it satisfies the triangle inequality $\mathbb{D}(f_1(n), g_1(n); x) + \mathbb{D}(f_2(n), g_2(n); x) \ge \mathbb{D}((f_1 f_2)(n), (g_1 g_2)(n); x)$ (see Lemma 3.1 of [7], or the general discussion in [8]).
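
The distance function above is a finite sum over primes and is easy to evaluate numerically. The sketch below is illustrative only: it assumes the usual form of the definition, with a complex conjugate on the second function, and the names `primes_up_to` and `distance` together with the sample functions are hypothetical. It also spot-checks the triangle inequality from the footnote on one example.

```python
import math


def primes_up_to(limit):
    """Return all primes <= limit using the sieve of Eratosthenes."""
    if limit < 2:
        return []
    is_prime = [True] * (limit + 1)
    is_prime[0] = is_prime[1] = False
    for p in range(2, math.isqrt(limit) + 1):
        if is_prime[p]:
            for multiple in range(p * p, limit + 1, p):
                is_prime[multiple] = False
    return [n for n in range(2, limit + 1) if is_prime[n]]


def distance(f, g, x, m=1):
    """D_m(f, g; x): square root of the sum over primes p <= x, p not dividing m,
    of (1 - Re(f(p) * conj(g(p)))) / p.  Only the values at primes are used."""
    total = 0.0
    for p in primes_up_to(x):
        if m % p == 0:
            continue  # skip primes dividing m
        total += (1.0 - (complex(f(p)) * complex(g(p)).conjugate()).real) / p
    return math.sqrt(max(total, 0.0))  # clamp tiny negative rounding errors


if __name__ == "__main__":
    x = 10_000
    one = lambda p: 1                # the constant function 1
    minus_one = lambda p: -1         # agrees with the Moebius function at primes
    twist = lambda p: p ** 0.5j      # n^{it} with t = 0.5

    print("D(1, 1; x)      =", distance(one, one, x))
    print("D(1, mu; x)     =", distance(one, minus_one, x))
    print("D(1, n^{it}; x) =", distance(one, twist, x))

    # Triangle inequality from the footnote, checked on one example.
    lhs = distance(one, minus_one, x) + distance(twist, one, x)
    rhs = distance(lambda p: one(p) * twist(p), lambda p: minus_one(p) * one(p), x)
    print("triangle inequality holds:", lhs >= rhs)
```

A small distance means that f "pretends" to be g on the primes up to x, which is the sense of "pretentious" in the abstract.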

A Survey on Pure and Mixed Exponential Sums Modulo Prime Powers

A SURVEY ON PURE AND MIXED EXPONENTIAL SUMS MODULO PRIME POWERS. TODD COCHRANE AND ZHIYONG ZHENG. 1. Introduction. In this paper we give a survey of recent work by the authors and others on pure and mixed exponential sums of the type $\sum_{x=1}^{p^m} e_{p^m}(f(x))$, $\sum_{x=1}^{p^m} \chi(g(x)) e_{p^m}(f(x))$, where $p^m$ is a prime power, $e_{p^m}(\cdot)$ is the additive character $e_{p^m}(x) = e^{2\pi i x/p^m}$ and χ is a multiplicative character (mod $p^m$). The goals of this paper are threefold; first, to point out the similarity between exponential sums over finite fields and exponential sums over residue class rings (mod $p^m$) with $m \ge 2$; second, to show how mixed exponential sums can be reduced to pure exponential sums when $m \ge 2$ and third, to make a thorough review of the formulae and upper bounds that are available for such sums. Included are some new observations and consequences of the methods we have developed as well as a number of open questions, some very deep and some readily accessible, inviting the reader to a further investigation of these sums. 2. Pure Exponential Sums. We start with a discussion of pure exponential sums of the type (2.1) $S(f, q) = \sum_{x=1}^{q} e_q(f(x))$, where $f(x)$ is a polynomial over $\mathbb{Z}$, q is any positive integer and $e_q(f(x)) = e^{2\pi i f(x)/q}$. These sums enjoy the multiplicative property $S(f, q) = \prod_{i=1}^{k} S(\lambda_i f, p_i^{m_i})$ where $q = \prod_{i=1}^{k} p_i^{m_i}$ and $\sum_{i=1}^{k} \lambda_i q/p_i^{m_i} = 1$, reducing their evaluation to the case of prime power moduli.
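
The multiplicative property can be verified directly for a small modulus. The sketch below is an illustration under stated assumptions: the function name `pure_sum`, the modulus $q = 12 = 4 \cdot 3$, the sample polynomials, and the multipliers $\lambda_1 = -1$, $\lambda_2 = 1$ (which satisfy $\lambda_1 \cdot 12/4 + \lambda_2 \cdot 12/3 = -3 + 4 = 1$) are all chosen for the example and do not come from the survey.

```python
import cmath
import math


def pure_sum(f, q):
    """S(f, q) = sum over x = 1..q of exp(2*pi*i*f(x)/q) for an integer-valued f.
    f(x) is reduced mod q first, which keeps the floating-point phase accurate."""
    return sum(cmath.exp(2j * math.pi * (f(x) % q) / q) for x in range(1, q + 1))


if __name__ == "__main__":
    q = 12                     # q = 4 * 3, prime powers 2^2 and 3^1
    lam1, lam2 = -1, 1         # lam1 * (12 // 4) + lam2 * (12 // 3) == 1
    assert lam1 * (q // 4) + lam2 * (q // 3) == 1

    for name, f in (("x^2", lambda x: x * x), ("x^3 + 2x", lambda x: x ** 3 + 2 * x)):
        lhs = pure_sum(f, q)
        rhs = pure_sum(lambda x: lam1 * f(x), 4) * pure_sum(lambda x: lam2 * f(x), 3)
        print(f"f = {name}:  S(f, 12) = {lhs:.6f},  product = {rhs:.6f}")
```

The identity rests on the Chinese Remainder Theorem: writing $1/q = \lambda_1/4 + \lambda_2/3$ splits each phase into a part depending only on x mod 4 and a part depending only on x mod 3.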

A Survey of Algebraic Exponential Sums and Some Applications

A SURVEY OF ALGEBRAIC EXPONENTIAL SUMS AND SOME APPLICATIONS. E. KOWALSKI. 1. Introduction. This survey is a written and slightly expanded version of the talk given at the ICMS workshop on motivic integration and its interactions with model theory and non-archimedean geometry. Its presence may seem to require a few preliminary words of explanation: not only does the title apparently fail to reflect any of the three components of that of the conference itself, but also the author is far from being an expert in any of these. However, one must remember that there is but a small step from summation to integration. Moreover, as I will argue in the last section, there are some basic problems in the theory of exponential sums (and their applications) for which it seems not impossible that logical ideas could be useful, and hence presenting the context to model-theorists in particular could well be useful. In fact, the most direct connection between exponential sums and the topics of the workshop will be a survey of the extension to exponential sums of the beautiful counting results of Chatzidakis, van den Dries and Macintyre [CDM]. These may also have some further applications. Acknowledgements. I wish to thank warmly the organizers of the workshop for preparing a particularly rich and inspiring program, and for inviting me to participate. I also thank B. Conrad for pointing out a potentially misleading statement related to Poincaré duality on page 10. 2. Where exponential sums come from. Exponential sums, in the most general sense, are any type of finite sums of complex numbers $S = \sum_{1 \le n \le N} e(\theta_n)$, where we write $e(z) = \exp(2i\pi z)$, as is customary in analytic number theory, and where the phases $\theta_n$ are real numbers.