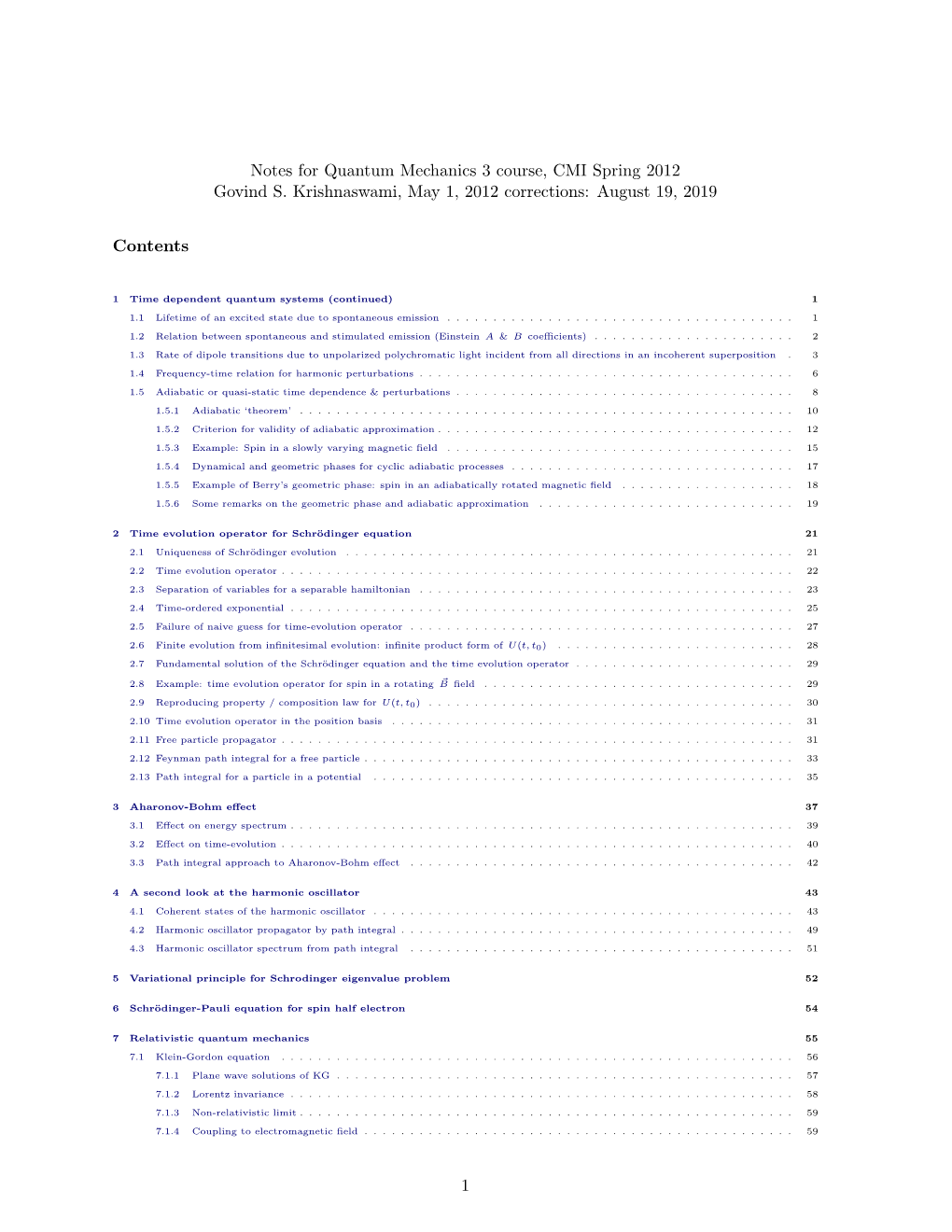

Notes for Quantum Mechanics 3 Course, CMI Spring 2012 Govind S

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Quantum-Classical Correspondence of Shortcuts to Adiabaticity

Quantum-Classical Correspondence of Shortcuts to Adiabaticity. Manaka Okuyama and Kazutaka Takahashi, Department of Physics, Tokyo Institute of Technology, Tokyo 152-8551, Japan (Dated: September 1, 2018). We formulate the theory of shortcuts to adiabaticity in classical mechanics. For a reference Hamiltonian, the counterdiabatic term is constructed from the dispersionless Korteweg–de Vries (KdV) hierarchy. Then the adiabatic theorem holds exactly for an arbitrary choice of time-dependent parameters. We use the Hamilton–Jacobi theory to define the generalized action. The action is independent of the history of the parameters and is directly related to the adiabatic invariant. The dispersionless KdV hierarchy is obtained from the classical limit of the KdV hierarchy for the quantum shortcuts to adiabaticity. This correspondence suggests some relation between the quantum and classical adiabatic theorems. Shortcuts to adiabaticity (STA) is a method controlling dynamical systems. The implementation of the method results in dynamics that are free from nonadiabatic transitions for an arbitrary choice of time-dependent parameters in a reference Hamiltonian. It was developed in quantum systems [1–4] and its applications have been studied in various fields of physics and engineering [5]. It is important to notice that this method, […] formulations will allow us to make a link between two theorems. In this letter, we develop the theory of the classical STA. First, we formulate the classical STA in a general way so that the counterdiabatic term can be calculated, in principle, from the derived formula. Second, we show that the adiabatic theorem holds exactly in the classical STA and the adiabatic invariant is obtained directly from the nonperiodic trajectory.

The Adiabatic Theorem and Linear Response Theory for Extended Quantum Systems

THE ADIABATIC THEOREM AND LINEAR RESPONSE THEORY FOR EXTENDED QUANTUM SYSTEMS. SVEN BACHMANN, WOJCIECH DE ROECK, AND MARTIN FRAAS. Abstract. The adiabatic theorem refers to a setup where an evolution equation contains a time-dependent parameter whose change is very slow, measured by a vanishing parameter $\epsilon$. Under suitable assumptions the solution of the time-inhomogeneous equation stays close to an instantaneous fixpoint. In the present paper, we prove an adiabatic theorem with an error bound that is independent of the number of degrees of freedom. Our setup is that of quantum spin systems where the manifold of ground states is separated from the rest of the spectrum by a spectral gap. One important application is the proof of the validity of linear response theory for such extended, genuinely interacting systems. In general, this is a long-standing mathematical problem, which can be solved in the present particular case of a gapped system, relevant e.g. for the integer quantum Hall effect. 1. Introduction. 1.1. Adiabatic Theorems. The adiabatic theorem stands for a rather broad principle that can be described as follows. Consider an equation giving the evolution in time $t$ of some $\varphi(t) \in B$, with $B$ some Banach space: $\frac{d}{dt}\varphi(t) = L(\varphi(t), \alpha_t)$ (1.1), where $L : B \times \mathbb{R} \to B$ is a smooth function and $\alpha_t$ is a parameter depending parametrically on time. Now, assume that for $\alpha_t = \alpha$ frozen in time, the equation exhibits some tendency towards a fixpoint $\varphi_\alpha$, as expressed, for example, by convergence in Cesàro mean $\frac{1}{T}\int_0^T \varphi(t)\,dt \to \varphi_\alpha$, as $T \to \infty$ for any initial $\varphi(0)$.

Westfälische-Wilhelms-Universität Adiabatic Approximation and Berry

Westfälische-Wilhelms-Universität, Seminar on the Theory of Atoms, Nuclei and Condensed Matter. Adiabatic Approximation and Berry Phase with regard to the Derivation. Thomas Grottke, winter semester 2014/15, 21st January, 2015. Contents: 1 Adiabatic Approximation; 1.1 Motivation; 1.2 Derivation of the Adiabatic Theorem; 2 Berry Phase; 2.1 Nonholonomic System; 2.2 Derivation of the Berry Phase; 2.3 Berry Potential and Berry Curvature; 2.4 Summary. 1 Adiabatic Approximation. 1.1 Motivation. Figure 1: Mathematical pendulum with the mass m, the length l and the deflection ϕ. To give a short motivation let us have a look at the mathematical pendulum (cf. Figure 1) with the mass m, the length l and the deflection ϕ. The differential equation is given by $\ddot{\phi}(t) + \frac{g}{l}\phi(t) = 0$ (1.1), where g is the acceleration of gravity. Solving this equation leads us to the period T with $T = 2\pi\sqrt{l/g}$ (1.2). If we move the pendulum from a location A to a location B, we can consider two ways of doing this. If the pendulum is moved fast, it will execute a complicated oscillation that is not easy to describe. But if the motion is slow enough, the pendulum remains in its oscillation. This motion is a good example of an adiabatic process, which means that the conditions, or rather the environment, are changed slowly. Oscillation is also well known in quantum mechanical systems. A good example is the potential well (cf.

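Adiabatic critical quantum metrology cannot reach the Heisenberg limit even when shortcuts to adiabaticity are applied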

Adiabatic critical quantum metrology cannot reach the Heisenberg limit even when shortcuts to adiabaticity are applied. Karol Gietka, Friederike Metz, Tim Keller, and Jing Li, Quantum Systems Unit, Okinawa Institute of Science and Technology Graduate University, Onna, Okinawa 904-0495, Japan. arXiv:2103.12939v2 [quant-ph] 25 Jun 2021. We show that the quantum Fisher information attained in an adiabatic approach to critical quantum metrology cannot lead to the Heisenberg limit of precision and therefore regular quantum metrology under optimal settings is always superior. Furthermore, we argue that even though shortcuts to adiabaticity can arbitrarily decrease the time of preparing critical ground states, they cannot be used to achieve or overcome the Heisenberg limit for quantum parameter estimation in adiabatic critical quantum metrology. As case studies, we explore the application of counter-diabatic driving to the Landau-Zener model and the quantum Rabi model. 1 Introduction. Quantum metrology [1] is concerned with harnessing quantum resources following from the quantum-mechanical framework (in particular, quantum entanglement) to increase the sensitivity of unknown parameter estimation beyond the standard quantum limit [2]. This limitation is a consequence of the central limit theorem which states that if N independent particles (such as photons or atoms) are used in the process of estimation of an unknown parameter θ, the error on average decreases as $\mathrm{Var}[\theta] \sim 1/\sqrt{N}$. Taking advantage of quantum resources can further decrease the average error leading to the Heisenberg scaling of precision $\mathrm{Var}[\theta] \sim 1/N$ [3] which in the optimal case becomes the Heisenberg limit $\mathrm{Var}[\theta] = 1/N$.

Quantum Adiabatic Theorem and Berry's Phase Factor Page Tyler Department of Physics, Drexel University

Quantum Adiabatic Theorem and Berry's Phase Factor. Page Tyler, Department of Physics, Drexel University. Abstract. A study is presented of Michael Berry's observation of quantum mechanical systems transported along a closed, adiabatic path. In this case, a topological phase factor arises along with the dynamical phase factor predicted by the adiabatic theorem. 1 Introduction. In 1984, Michael Berry pointed out a feature of quantum mechanics (known as Berry's Phase) that had been overlooked for 60 years at that time. In retrospect, it seems astonishing that this result escaped notice for so long. It is most likely because our classical preconceptions can often be misleading in quantum mechanics. After all, we are accustomed to thinking that the phases of wave functions are somewhat arbitrary. Physical quantities will involve $|\Psi|^2$, so the phase factors cancel out. It was Berry's insight that if you move the Hamiltonian around a closed, adiabatic loop, the relative phase at the beginning and at the end of the process is not arbitrary, and can be determined. There is a good classical analogy used to develop the notion of adiabatic transport that uses something like a Foucault pendulum, or rather a pendulum whose support is moved about a loop on the surface of the Earth to return it to its exact initial state or one parallel to it. For the process to be adiabatic, the support must move slowly and steadily along its path and the period of oscillation of the pendulum must be much smaller than that of the Earth.

The Adiabatic Theorem in the Presence of Noise, Perturbations, and Decoherence

The Adiabatic Theorem in the Presence of Noise. Michael J. O’Hara and Dianne P. O’Leary. December 27, 2013. arXiv:0801.3872v1 [quant-ph] 25 Jan 2008. Abstract. We provide rigorous bounds for the error of the adiabatic approximation of quantum mechanics under four sources of experimental error: perturbations in the initial condition, systematic time-dependent perturbations in the Hamiltonian, coupling to low-energy quantum systems, and decoherent time-dependent perturbations in the Hamiltonian. For decoherent perturbations, we find both upper and lower bounds on the evolution time to guarantee the adiabatic approximation performs within a prescribed tolerance. Our new results include explicit definitions of constants, and we apply them to the spin-1/2 particle in a rotating magnetic field, and to the superconducting flux qubit. We compare the theoretical bounds on the superconducting flux qubit to simulation results. 1 Introduction. Adiabatic quantum computation [7] (AQC) is a model of quantum computation equivalent to the standard model [1]. A physical system is slowly evolved from the ground state of a simple system, to one whose ground state encodes the solution to the difficult problem. By a physical principle known as the adiabatic approximation, if the evolution is done sufficiently slowly, and the minimum energy gap separating the ground state from higher states is sufficiently large, then the final state of the system should be the state encoding the solution to some problem. The biggest hurdles facing many potential implementations of a quantum computer are the errors due to interaction of the qubits with the environment.

The Quantum Adiabatic Theorem

Quantum algorithms (CO 781, Winter 2008). Prof. Andrew Childs, University of Waterloo. LECTURE 18: The quantum adiabatic theorem. In the last part of this course, we will discuss an approach to quantum computation based on the concept of adiabatic evolution. According to the quantum adiabatic theorem, a quantum system that begins in the nondegenerate ground state of a time-dependent Hamiltonian will remain in the instantaneous ground state provided the Hamiltonian changes sufficiently slowly. In this lecture we will prove the quantum adiabatic theorem, which quantifies this statement. Adiabatic evolution. When the Hamiltonian of a quantum system does not depend on time, the dynamics of that system are fairly straightforward. Given a time-independent Hamiltonian $H$, the solution of the Schrödinger equation $i\frac{d}{dt}|\psi(t)\rangle = H|\psi(t)\rangle$ (1) with the initial quantum state $|\psi(0)\rangle$ is given by $|\psi(t)\rangle = \exp(-iHt)|\psi(0)\rangle$ (2). So any eigenstate $|E\rangle$ of the Hamiltonian, with $H|E\rangle = E|E\rangle$, simply acquires a phase $\exp(-iEt)$. In particular, there are no transitions between eigenstates. If the Hamiltonian varies in time, the evolution it generates can be considerably more complicated. However, if the change in the Hamiltonian occurs sufficiently slowly, the dynamics remain relatively simple: roughly speaking, if the system begins close to an eigenstate, it remains close to an eigenstate. The quantum adiabatic theorem is a formal description of this phenomenon. For a simple example of adiabatic evolution in action, consider a spin in a magnetic field that is rotated from the $x$ direction to the $z$ direction in a total time $T$: $H(t) = -\cos\left(\frac{\pi t}{2T}\right)\sigma_x - \sin\left(\frac{\pi t}{2T}\right)\sigma_z$.
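
The spin example in this excerpt can be explored numerically. The sketch below is not taken from the lecture notes. It assumes that the truncated Hamiltonian has the form $H(t) = -\cos(\pi t/2T)\,\sigma_x - \sin(\pi t/2T)\,\sigma_z$ (which matches a field rotated from the $x$ direction to the $z$ direction in total time $T$), sets $\hbar = 1$, and uses hypothetical names (`hamiltonian`, `final_ground_state_fidelity`, `steps_per_unit_time`). It evolves the ground state of $H(0)$ with short piecewise-constant steps and returns its overlap with the ground state of $H(T)$.

```python
import numpy as np

SIGMA_X = np.array([[0, 1], [1, 0]], dtype=complex)
SIGMA_Z = np.array([[1, 0], [0, -1]], dtype=complex)
IDENTITY = np.eye(2, dtype=complex)


def hamiltonian(t, total_time):
    """Assumed form: field of unit strength rotating from x (t=0) to z (t=T)."""
    angle = np.pi * t / (2.0 * total_time)
    return -np.cos(angle) * SIGMA_X - np.sin(angle) * SIGMA_Z


def final_ground_state_fidelity(total_time, steps_per_unit_time=200):
    """Evolve the ground state of H(0) and return its overlap with that of H(T)."""
    n_steps = max(1, int(round(steps_per_unit_time * total_time)))
    dt = total_time / n_steps

    # Ground state of H(0) = -sigma_x is the +1 eigenvector of sigma_x.
    psi = np.array([1, 1], dtype=complex) / np.sqrt(2)

    for k in range(n_steps):
        # Freeze H at the midpoint of each short time step.
        h_mid = hamiltonian((k + 0.5) * dt, total_time)
        # Because h_mid squared is the identity, the step propagator is exact:
        # exp(-i H dt) = cos(dt) I - i sin(dt) H.
        step = np.cos(dt) * IDENTITY - 1j * np.sin(dt) * h_mid
        psi = step @ psi

    # Ground state of H(T) = -sigma_z is the +1 eigenvector of sigma_z.
    target = np.array([1, 0], dtype=complex)
    return abs(np.vdot(target, psi)) ** 2


if __name__ == "__main__":
    for total_time in (0.05, 1.0, 10.0, 50.0):
        print(total_time, final_ground_state_fidelity(total_time))
```

Under these assumptions, the fidelity should approach 1 as the total time grows well beyond the inverse energy gap, while for a very fast rotation the state barely changes and the fidelity stays near 1/2.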

Fast-Forward of Adiabatic Dynamics in Quantum Mechanics

Fast-forward of adiabatic dynamics in quantum mechanics. By Shumpei Masuda¹ and Katsuhiro Nakamura²,³. ¹ Department of Physics, Kwansei Gakuin University, Gakuen, Sanda, Hyogo 669-1337, Japan. ² Heat Physics Department, Uzbek Academy of Sciences, 28 Katartal Str., 100135 Tashkent, Uzbekistan. ³ Department of Applied Physics, Osaka City University, Sumiyoshi-ku, Osaka 558-8585, Japan. We propose a way to accelerate adiabatic dynamics of wave functions in quantum mechanics to obtain a final adiabatic state except for the spatially uniform phase in any desired short time. We develop the previous theory of fast-forward (Masuda & Nakamura 2008) so as to derive a driving potential for the fast-forward of the adiabatic dynamics. A typical example is the fast-forward of adiabatic transport of a wave function which is the ideal transport in the sense that a stationary wave function is transported to an aimed position in any desired short time without leaving any disturbance at the final time of the fast-forward. As other important examples we show accelerated manipulations of wave functions such as their splitting and squeezing. The theory is also applicable to macroscopic quantum mechanics described by the nonlinear Schrödinger equation. Keywords: atom manipulation, mechanical control of atoms, quantum transport. 1. Introduction. The adiabatic process occurs when the external parameter of Hamiltonian of the system is adiabatically changed. Quantum adiabatic theorem (Born & Fock 1928; Kato 1950; Messiah 1962), which states that, if the system is initially in an eigenstate of the instantaneous Hamiltonian, it remains so during the process, has been studied in various contexts (Berry 1984; Aharonov & Anandan 1987; Samuel & Bhandari 1988; Shapere & Wilczek 1989; Nakamura & Rice 1994; Bouwmeester et al.

Building an Adiabatic Quantum Computer Simulation in the Classroom

This document is published at: Rodríguez-Laguna, J. and Santalla, S. (2018). Building an adiabatic quantum computer simulation in the classroom. American Journal of Physics, 86(5), pp. 360-367. DOI: https://doi.org/10.1119/1.5021360 © 2018 American Association of Physics Teachers. Javier Rodríguez-Laguna, Dto. Física Fundamental, Universidad Nacional de Educación a Distancia (UNED), Madrid 28040, Spain. Silvia N. Santalla, Dto. Física & GISC, Universidad Carlos III de Madrid, Madrid 28911, Spain. (Received 18 August 2017; accepted 2 January 2018) We present a didactic introduction to adiabatic quantum computation (AQC) via the explicit construction of a classical simulator of quantum computers. This constitutes a suitable route to introduce several important concepts for advanced undergraduates in physics: quantum many-body systems, quantum phase transitions, disordered systems, spin-glasses, and computational complexity theory. I. INTRODUCTION. Interest in quantum computation is increasing lately [1,2], since it might be the next quantum technology to take off [3]. Exciting new spaces for exploration have appeared, such as the IBM Quantum Experience [4,5], where a few-qubit computers can be programmed remotely. Being a crossroad for […] absent. Therefore, it is closer to the concept of quasistatic process. This article is organized as follows. In Sec. II, we introduce the type of problems that we intend to solve. Section III discusses the general idea of analog computers, while the philosophy of adiabatic quantum computation is discussed in Sec.

Hypercomputability of Quantum Adiabatic Processes: Fact Versus

HYPERCOMPUTABILITY OF QUANTUM ADIABATIC PROCESSES: FACTS VERSUS PREJUDICES. TIEN D. KIEU. Abstract. We give an overview of a quantum adiabatic algorithm for Hilbert’s tenth problem, including some discussions on its fundamental aspects and the emphasis on the probabilistic correctness of its findings. For the purpose of illustration, the numerical simulation results of some simple Diophantine equations are presented. We also discuss some prejudicial misunderstandings as well as some plausible difficulties faced by the algorithm in its physical implementations. “To believe otherwise is merely to cling to a prejudice which only gives rise to further prejudices ...”¹ H.A. Buchdahl. 1. Introduction and outline of the paper. Quantum computation has attracted much attention and investment lately through its theoretical potential to speed up some important computations as compared to classical Turing computation. The most well-known and widely-studied form of quantum computation is the (standard) model of quantum circuits [36] which comprise several unitary quantum gates (belonging to a set of universal gates), arranged in a prescribed sequence to implement a quantum algorithm. Each quantum gate only needs to act singly on one qubit, the generalisation of the classical bit, or on a pair of qubits. In view of this promising potential of quantum computation, another question that then inevitably arises is whether the class of Turing computable functions can be extended by applying quantum principles. This kind of computation beyond the Turing barrier is also known as hypercomputation [12, 37, 38]. Initial efforts have indicated that quantum computability is the same as classical computability [4].

Transport of Quantum States of Periodically Driven Systems H.P

Transport of quantum states of periodically driven systems. H.P. Breuer, K. Dietz, M. Holthaus. To cite this version: H.P. Breuer, K. Dietz, M. Holthaus. Transport of quantum states of periodically driven systems. Journal de Physique, 1990, 51 (8), pp.709-722. 10.1051/jphys:01990005108070900. jpa-00212403. HAL Id: jpa-00212403, https://hal.archives-ouvertes.fr/jpa-00212403. Submitted on 1 Jan 1990. J. Phys. France 51 (1990) 709-722, 15 April 1990, p. 709. Classification Physics Abstracts 03.65, 32.80, 42.50. H. P. Breuer, K. Dietz and M. Holthaus, Physikalisches Institut, Universität Bonn, Nussallee 12, D-5300 Bonn 1, F.R.G. (Received 17 August 1989, accepted in final form 23 November 1989) Abstract. Quantum holonomies on quasi-energy surfaces of periodically driven systems are treated, and their nontrivial global topology is established. The latter is shown to be caused by diabatic transitions between levels when passing through narrow anti-crossings. Some experimental consequences concerning adiabatic transport and Landau-Zener transitions between Floquet states are briefly given.

Variations on the Adiabatic Invariance: the Lorentz Pendulum

Variations on the adiabatic invariance: the Lorentz pendulum. Luis L. Sánchez-Soto and Jesús Zoido, Departamento de Óptica, Facultad de Física, Universidad Complutense, 28040 Madrid, Spain (Dated: October 17, 2012). We analyze a very simple variant of the Lorentz pendulum, in which the length is varied exponentially, instead of uniformly, as it is assumed in the standard case. We establish quantitative criteria for the condition of adiabatic changes in both pendula and put in evidence their substantially different physical behavior with regard to adiabatic invariance. I. INTRODUCTION. As early as 1902, Lord Rayleigh [1] investigated a pendulum the length of which was being altered uniformly, but very slowly (some mathematical aspects of the problem were treated in 1895 by Le Cornu [2]). He showed that if $E(t)$ denotes the energy and $\ell(t)$ the length [or, equivalently, the frequency $\nu(t)$] at a time $t$, then $\frac{E(t)}{\nu(t)} = \frac{E(0)}{\nu(0)}$ (1.1). This expression is probably the first explicit example of an adiabatic invariant; i.e., a conservation law that only holds […] concise mathematical viewpoint of Levi-Civita [12,13]. The topic of adiabatic invariance has undergone a resurgence of interest from various different fields, such as plasma physics, thermonuclear research or geophysics [14], although perhaps Berry’s work on geometric phases [15] has put it again in the spotlight. The two monographs by Sagdeev et al [16] and Lochak and Meunier [17] reflect this revival. More recently, the issue has renewed its importance in the context of quantum control (for example, concerning adiabatic passage between atomic energy levels [18–23]), as well as adiabatic quantum computation [24–26]. Adiabatic invariants are presented in most textbooks in terms of action-angle variables, which involves a significant level of sophistication.
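
The invariance of the ratio of energy to frequency quoted in this excerpt can be checked numerically. The sketch below is an illustration rather than the authors' calculation. It assumes the small-angle equation of motion $\ell\ddot{\theta} + 2\dot{\ell}\dot{\theta} + g\theta = 0$, a length varied uniformly as $\ell(t) = \ell_0(1 + \text{rate}\cdot t)$, unit mass, and a fixed-step fourth-order Runge-Kutta integrator. All function and parameter names are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def length(t, l0, rate):
    """Pendulum length varied uniformly in time: l(t) = l0 * (1 + rate * t)."""
    return l0 * (1.0 + rate * t)


def derivs(t, theta, theta_dot, l0, rate):
    """Small-angle dynamics: l * theta'' + 2 * l' * theta' + g * theta = 0."""
    l = length(t, l0, rate)
    l_dot = l0 * rate
    return theta_dot, -(2.0 * l_dot * theta_dot + G * theta) / l


def energy_over_frequency(t, theta, theta_dot, l0, rate):
    """Oscillation energy per unit mass divided by the instantaneous frequency."""
    l = length(t, l0, rate)
    energy = 0.5 * l**2 * theta_dot**2 + 0.5 * G * l * theta**2
    frequency = math.sqrt(G / l) / (2.0 * math.pi)
    return energy / frequency


def simulate(l0=1.0, rate=1e-3, total_time=1000.0, dt=0.02, theta0=0.1):
    """Integrate with RK4 and return E/nu at the start and at the end."""
    t, th, om = 0.0, theta0, 0.0
    start = energy_over_frequency(t, th, om, l0, rate)
    for _ in range(int(round(total_time / dt))):
        k1 = derivs(t, th, om, l0, rate)
        k2 = derivs(t + 0.5 * dt, th + 0.5 * dt * k1[0], om + 0.5 * dt * k1[1], l0, rate)
        k3 = derivs(t + 0.5 * dt, th + 0.5 * dt * k2[0], om + 0.5 * dt * k2[1], l0, rate)
        k4 = derivs(t + dt, th + dt * k3[0], om + dt * k3[1], l0, rate)
        th += dt * (k1[0] + 2.0 * k2[0] + 2.0 * k3[0] + k4[0]) / 6.0
        om += dt * (k1[1] + 2.0 * k2[1] + 2.0 * k3[1] + k4[1]) / 6.0
        t += dt
    end = energy_over_frequency(t, th, om, l0, rate)
    return start, end


if __name__ == "__main__":
    start, end = simulate()
    print("relative change of E/nu:", end / start - 1.0)
```

With the default parameters the length doubles over many oscillation periods, so the frequency and the energy each change substantially while their ratio should stay nearly constant. Increasing `rate` makes the change less adiabatic and lets one see how the ratio starts to drift.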