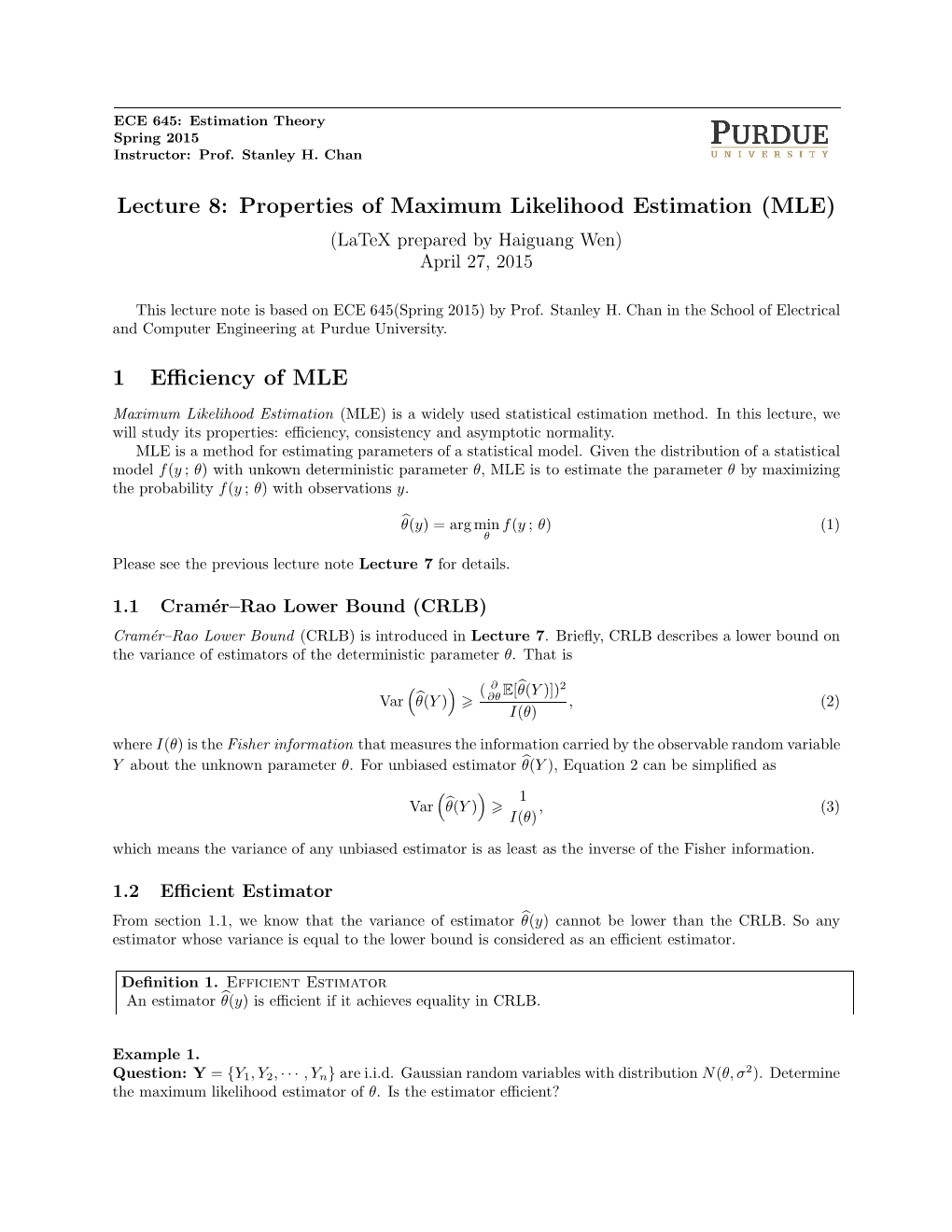

Properties of Maximum Likelihood Estimation (MLE) (LaTeX prepared by Haiguang Wen), April 27, 2015

Total pages: 16

File type: PDF, Size: 1020 KB


Recommended publications


Lecture 12 Robust Estimation

Lecture 12 Robust Estimation. Prof. Dr. Svetlozar Rachev, Institute for Statistics and Mathematical Economics, University of Karlsruhe. Financial Econometrics, Summer Semester 2007.

Copyright: These lecture notes cannot be copied and/or distributed without permission. The material is based on the textbook Financial Econometrics: From Basics to Advanced Modeling Techniques (Wiley-Finance, Frank J. Fabozzi Series) by Svetlozar T. Rachev, Stefan Mittnik, Frank Fabozzi, Sergio M. Focardi, Teo Jašić.

Outline

- Robust statistics.
- Robust estimators of regressions.
- Illustration: robustness of the corporate bond yield spread model.

Robust Statistics

- Robust statistics addresses the problem of making estimates that are insensitive to small changes in the basic assumptions of the statistical models employed.
- The concepts and methods of robust statistics originated in the 1950s. However, the concepts of robust statistics had been used much earlier.
- Robust statistics: (1) assesses the changes in estimates due to small changes in the basic assumptions; (2) creates new estimates that are insensitive to small changes in some of the assumptions.
- Robust statistics is also useful to separate the contribution of the tails from the contribution of the body of the data.
- Peter Huber observed that robust, distribution-free, and nonparametric are actually not closely related properties.

5. Completeness and Sufficiency 5.1. Complete Statistics. Definition 5.1. a Statistic T Is Called Complete If Eg(T) = 0 For

5. Completeness and sufficiency

5.1. Complete statistics.

Definition 5.1. A statistic $T$ is called complete if $Eg(T) = 0$ for all $\theta$ and some function $g$ implies that $P(g(T) = 0; \theta) = 1$ for all $\theta$.

This use of the word complete is analogous to calling a set of vectors $v_1, \ldots, v_n$ complete if they span the whole space, that is, any $v$ can be written as a linear combination $v = \sum a_j v_j$ of these vectors. This is equivalent to the condition that if $w$ is orthogonal to all $v_j$'s, then $w = 0$.

To make the connection with Definition 5.1, let's consider the discrete case. Then completeness means that $\sum g(t) P(T = t; \theta) = 0$ implies that $g(t) = 0$. Since the sum may be viewed as the scalar product of the vectors $(g(t_1), g(t_2), \ldots)$ and $(p(t_1), p(t_2), \ldots)$, with $p(t) = P(T = t)$, this is the analog of the orthogonality condition just discussed.

We also see that the terminology is somewhat misleading. It would be more accurate to call the family of distributions $p(\cdot; \theta)$ complete (rather than the statistic $T$). In any event, completeness means that the collection of distributions for all possible values of $\theta$ provides a sufficiently rich set of vectors. In the continuous case, a similar interpretation works. Completeness now refers to the collection of densities $f(\cdot; \theta)$, and $\langle f, g \rangle = \int fg$ serves as the (abstract) scalar product in this case.

Example 5.1. Let's take one more look at the coin flip example.
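The "sufficiently rich set of vectors" reading of completeness can be checked numerically in a small discrete case. The sketch below is only an illustration, not taken from the excerpt: it assumes $T$ is Binomial$(n, \theta)$, picks $n + 1$ arbitrary values of $\theta$, and uses a hypothetical helper `rank` to confirm that the probability vectors span the whole space, so a $g$ orthogonal to all of them must be zero.

```python
from fractions import Fraction
from math import comb

def binomial_pmf_row(n, theta):
    """Vector (P(T = 0; theta), ..., P(T = n; theta)) for T ~ Binomial(n, theta)."""
    return [comb(n, t) * theta**t * (1 - theta)**(n - t) for t in range(n + 1)]

def rank(rows):
    """Rank of a matrix of Fractions via Gaussian elimination (exact arithmetic)."""
    m = [row[:] for row in rows]
    r = 0
    for col in range(len(m[0])):
        # Find a row at or below position r with a nonzero entry in this column.
        pivot = next((i for i in range(r, len(m)) if m[i][col] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(r + 1, len(m)):
            factor = m[i][col] / m[r][col]
            m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        r += 1
    return r

n = 4  # assumed number of trials, chosen only for illustration
thetas = [Fraction(k, n + 2) for k in range(1, n + 2)]  # n + 1 distinct values in (0, 1)
pmf_matrix = [binomial_pmf_row(n, th) for th in thetas]
pmf_rank = rank(pmf_matrix)
print(pmf_rank, "of", n + 1)
```

A full-rank matrix means the only vector $g$ with zero scalar product against every row is $g = 0$, which is the discrete orthogonality condition described above for this particular family.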

4.2 Variance and Covariance

4.2 Variance and Covariance

The most important measure of variability of a random variable $X$ is obtained by letting $g(X) = (X - \mu)^2$; then $E[g(X)]$ gives a measure of the variability of the distribution of $X$.

Definition 4.3. Let $X$ be a random variable with probability distribution $f(x)$ and mean $\mu$. The variance of $X$ is

$$\sigma^2 = E[(X - \mu)^2] = \sum_x (x - \mu)^2 f(x)$$

if $X$ is discrete, and

$$\sigma^2 = E[(X - \mu)^2] = \int_{-\infty}^{\infty} (x - \mu)^2 f(x)\,dx$$

if $X$ is continuous.

- The positive square root of the variance, $\sigma$, is called the standard deviation of $X$.
- The quantity $x - \mu$ is called the deviation of an observation, $x$, from its mean.

Note: $\sigma^2 \ge 0$. When the standard deviation of a random variable is small, we expect most of the values of $X$ to be grouped around the mean. We often use standard deviations to compare two or more distributions that have the same unit measurements.

Example 4.8 (page 97). Let the random variable $X$ represent the number of automobiles that are used for official business purposes on any given workday. The probability distributions of $X$ for two companies are given below.

Company A:

| x | 1 | 2 | 3 |
|---|---|---|---|
| f(x) | 0.3 | 0.4 | 0.3 |

Company B:

| x | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| f(x) | 0.2 | 0.1 | 0.3 | 0.3 | 0.1 |

Find the variances of $X$ for the two companies.

Solution:

$$\mu_A = (1)(0.3) + (2)(0.4) + (3)(0.3) = 2, \qquad \mu_B = 2$$

$$\sigma_A^2 = (1 - 2)^2(0.3) + (2 - 2)^2(0.4) + (3 - 2)^2(0.3) = 0.6$$

$$\sigma_B^2 = \sum_{x=0}^{4} (x - 2)^2 f(x) = 1.6$$

Hence $\sigma_B^2 > \sigma_A^2$.

Theorem 4.2. The variance of a random variable $X$ is $\sigma^2 = E(X^2) - \mu^2$.
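The arithmetic of Example 4.8 and the shortcut of Theorem 4.2 can be reproduced in a few lines. This is a minimal sketch: the function names are illustrative choices, and the two distributions are the Company A and Company B values from the example.

```python
def mean(dist):
    """Mean of a discrete distribution given as {value: probability}."""
    return sum(x * p for x, p in dist.items())

def variance(dist):
    """Variance by the definition: E[(X - mu)^2]."""
    mu = mean(dist)
    return sum((x - mu) ** 2 * p for x, p in dist.items())

def variance_shortcut(dist):
    """Variance by Theorem 4.2: E(X^2) - mu^2."""
    mu = mean(dist)
    return sum(x * x * p for x, p in dist.items()) - mu ** 2

company_a = {1: 0.3, 2: 0.4, 3: 0.3}
company_b = {0: 0.2, 1: 0.1, 2: 0.3, 3: 0.3, 4: 0.1}

var_a = variance(company_a)
var_b = variance(company_b)
print(round(var_a, 6), round(var_b, 6))
print(round(variance_shortcut(company_a), 6), round(variance_shortcut(company_b), 6))
```

Both routes give the same variances up to floating-point rounding, and Company B comes out with the larger spread, in line with the solution above.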

1. How Different Is the T Distribution from the Normal?

Statistics 101–106, Lecture 7 (20 October 98). © David Pollard.

Read M&M §7.1 and §7.2, ignoring starred parts. Reread M&M §3.2. The effects of estimated variances on normal approximations. t-distributions. Comparison of two means: pooling of estimates of variances, or paired observations.

In Lecture 6, when discussing comparison of two Binomial proportions, I was content to estimate unknown variances when calculating statistics that were to be treated as approximately normally distributed. You might have worried about the effect of variability of the estimate. W. S. Gosset ("Student") considered a similar problem in a very famous 1908 paper, where the role of Student's t-distribution was first recognized.

Gosset discovered that the effect of estimated variances could be described exactly in a simplified problem where $n$ independent observations $X_1, \ldots, X_n$ are taken from a normal distribution, $N(\mu, \sigma)$. The sample mean, $\bar{X} = (X_1 + \ldots + X_n)/n$, has a $N(\mu, \sigma/\sqrt{n})$ distribution. The random variable

$$Z = \frac{\bar{X} - \mu}{\sigma/\sqrt{n}}$$

has a standard normal distribution. If we estimate $\sigma^2$ by the sample variance, $s^2 = \sum_i (X_i - \bar{X})^2/(n - 1)$, then the resulting statistic,

$$T = \frac{\bar{X} - \mu}{s/\sqrt{n}}$$

no longer has a normal distribution. It has a t-distribution on $n - 1$ degrees of freedom.

Remark. I have written $T$, instead of the $t$ used by M&M page 505. I find it causes confusion that $t$ refers to both the name of the statistic and the name of its distribution.

As you will soon see, the estimation of the variance has the effect of spreading out the distribution a little beyond what it would be if $\sigma$ were used.
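The spreading-out effect of estimating the variance can be seen in a small simulation. The sketch below is an illustration rather than part of the lecture: it assumes standard normal data, a cutoff of 1.96, and arbitrary sample sizes and repetition counts, and it estimates how often the statistic with the estimated standard deviation exceeds the cutoff in absolute value.

```python
import random
import statistics

def t_statistic(sample, mu):
    """(sample mean - mu) / (s / sqrt(n)), with s the sample standard deviation."""
    n = len(sample)
    xbar = statistics.mean(sample)
    s = statistics.stdev(sample)  # uses the n - 1 divisor
    return (xbar - mu) / (s / n ** 0.5)

def tail_fraction(n, reps, cutoff=1.96, seed=0):
    """Fraction of simulated |T| values beyond the cutoff for samples of size n."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(reps):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if abs(t_statistic(sample, 0.0)) > cutoff:
            hits += 1
    return hits / reps

small_n = tail_fraction(n=5, reps=20000)
large_n = tail_fraction(n=100, reps=2000)
print(small_n, large_n)
```

For a standard normal statistic the fraction beyond 1.96 would be about 0.05. With only five observations the simulated fraction is noticeably larger, and it moves back toward 0.05 as the sample size grows.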

Should We Think of a Different Median Estimator?

Comunicaciones en Estadística, June 2014, Vol. 7, No. 1, pp. 11–17

Should we think of a different median estimator? (¿Debemos pensar en un estimator diferente para la mediana?)

Jorge Iván Vélez, Juan Carlos Correa

Abstract (translated from the Spanish "Resumen"): The median, one of the most popular and widely used measures of central tendency in practice, is the numerical value that separates the data into two equal parts. Despite its popularity and applications, many people are unaware that different expressions exist for calculating this parameter. We present the results of a simulation study comparing the classical estimator with the one proposed by Harrell & Davis (1982). We show that, compared with the Harrell-Davis estimator, the classical estimator does not perform well for small sample sizes. Based on the results obtained, we suggest promoting the use of a better estimator for the median. Keywords: median, quantiles, Harrell-Davis estimator, statistical simulation.

Abstract: The median, one of the most popular measures of central tendency widely used in statistical practice, is often described as the numerical value separating the higher half of the sample from the lower half. Despite its popularity and applications, many people are not aware of the existence of several formulas to estimate this parameter. We present the results of a simulation study comparing the classic and the Harrell-Davis (Harrell & Davis 1982) estimators of the median for eight continuous statistical distributions.

Bias, Mean-Square Error, Relative Efficiency

3 Evaluating the Goodness of an Estimator: Bias, Mean-Square Error, Relative Efficiency

Consider a population parameter $\theta$ for which estimation is desired. For example, $\theta$ could be the population mean (traditionally called $\mu$) or the population variance (traditionally called $\sigma^2$). Or it might be some other parameter of interest such as the population median, population mode, population standard deviation, population minimum, population maximum, population range, population kurtosis, or population skewness.

As previously mentioned, we will regard parameters as numerical characteristics of the population of interest; as such, a parameter will be a fixed number, albeit unknown. In Stat 252, we will assume that our population has a distribution whose density function depends on the parameter of interest. Most of the examples that we will consider in Stat 252 will involve continuous distributions.

Definition 3.1. An estimator $\hat{\theta}$ is a statistic (that is, it is a random variable) which, after the experiment has been conducted and the data collected, will be used to estimate $\theta$.

Since it is true that any statistic can be an estimator, you might ask why we introduce yet another word into our statistical vocabulary. Well, the answer is quite simple, really. When we use the word estimator to describe a particular statistic, we already have a statistical estimation problem in mind. For example, if $\theta$ is the population mean, then a natural estimator of $\theta$ is the sample mean. If $\theta$ is the population variance, then a natural estimator of $\theta$ is the sample variance.

More specifically, suppose that $Y_1, \ldots, Y_n$ are a random sample from a population whose distribution depends on the parameter $\theta$. The following estimators occur frequently enough in practice that they have special notations.

1 One Parameter Exponential Families

1 One parameter exponential families

The world of exponential families bridges the gap between the Gaussian family and general distributions. Many properties of Gaussians carry through to exponential families in a fairly precise sense.

- In the Gaussian world, there are exact small sample distributional results (i.e. $t$, $F$, $\chi^2$).
- In the exponential family world, there are approximate distributional results (i.e. deviance tests).
- In the general setting, we can only appeal to asymptotics.

A one-parameter exponential family, $\mathcal{F}$, is a one-parameter family of distributions of the form

$$P_\eta(dx) = \exp(\eta \cdot t(x) - \Lambda(\eta))\, P_0(dx)$$

for some probability measure $P_0$. The parameter $\eta$ is called the natural or canonical parameter and the function $\Lambda$ is called the cumulant generating function, and is simply the normalization needed to make

$$f_\eta(x) = \frac{dP_\eta}{dP_0}(x) = \exp(\eta \cdot t(x) - \Lambda(\eta))$$

a proper probability density. The random variable $t(X)$ is the sufficient statistic of the exponential family. Note that $P_0$ does not have to be a distribution on $\mathbb{R}$, but these are of course the simplest examples.

1.0.1 A first example: Gaussian with linear sufficient statistic

Consider the standard normal distribution

$$P_0(A) = \int_A \frac{e^{-z^2/2}}{\sqrt{2\pi}}\, dz$$

and let $t(x) = x$. Then, the exponential family is

$$P_\eta(dx) \propto \frac{e^{\eta \cdot x - x^2/2}}{\sqrt{2\pi}}\, dx$$

and we see that $\Lambda(\eta) = \eta^2/2$:

    import numpy as np
    import matplotlib.pyplot as plt

    eta = np.linspace(-2, 2, 101)   # grid of natural parameter values
    CGF = eta**2 / 2.               # cumulant generating function
    plt.plot(eta, CGF)
    A = plt.gca()
    A.set_xlabel(r'$\eta$', size=20)
    A.set_ylabel(r'$\Lambda(\eta)$', size=20)
    f = plt.gcf()

Thus, the exponential family in this setting is the collection $\mathcal{F} = \{N(\eta, 1) : \eta \in \mathbb{R}\}$.

1.0.2 Normal with quadratic sufficient statistic on $\mathbb{R}^d$

As a second example, take $P_0 = N(0, I_{d \times d})$, i.e.
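The closed form $\Lambda(\eta) = \eta^2/2$ for the first example can be checked by computing the normalization directly, since $\Lambda(\eta)$ is the logarithm of the integral of $e^{\eta x}$ against the standard normal density. The sketch below is only an illustration: the function name, the integration range, and the step count are assumptions, and a plain trapezoidal rule stands in for any more careful quadrature.

```python
import math

def cgf_standard_normal(eta, half_width=12.0, steps=24000):
    """Lambda(eta) = log of the integral of exp(eta * x) * phi(x) dx, by the trapezoidal rule."""
    h = 2 * half_width / steps
    total = 0.0
    for i in range(steps + 1):
        x = -half_width + i * h
        weight = 0.5 if i in (0, steps) else 1.0  # endpoints get half weight
        total += weight * math.exp(eta * x - x * x / 2) / math.sqrt(2 * math.pi)
    return math.log(total * h)

for eta in (-2.0, 0.0, 1.0, 2.0):
    # Numerical value next to the closed form eta^2 / 2.
    print(eta, cgf_standard_normal(eta), eta ** 2 / 2)
```

The two columns should agree to many decimal places over this range of $\eta$, which is what makes $\exp(\eta x - \eta^2/2)$ times the standard normal density integrate to one.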

Lecture 4: Sufficient Statistics 1 Sufficient Statistics

ECE 830 Fall 2011, Statistical Signal Processing, instructor: R. Nowak

Lecture 4: Sufficient Statistics

Consider a random variable $X$ whose distribution $p$ is parametrized by $\theta \in \Theta$, where $\theta$ is a scalar or a vector. Denote this distribution as $p_X(x|\theta)$ or $p(x|\theta)$, for short. In many signal processing applications we need to make some decision about $\theta$ from observations of $X$, where the density of $X$ can be one of many in a family of distributions, $\{p(x|\theta)\}_{\theta \in \Theta}$, indexed by different choices of the parameter $\theta$.

More generally, suppose we make $n$ independent observations of $X$: $X_1, X_2, \ldots, X_n$, where $p(x_1, \ldots, x_n|\theta) = \prod_{i=1}^{n} p(x_i|\theta)$. These observations can be used to infer or estimate the correct value for $\theta$. This problem can be posed as follows. Let $x = [x_1, x_2, \ldots, x_n]$ be a vector containing the $n$ observations.

Question: Is there a lower dimensional function of $x$, say $t(x)$, that alone carries all the relevant information about $\theta$? For example, if $\theta$ is a scalar parameter, then one might suppose that all relevant information in the observations can be summarized in a scalar statistic.

Goal: Given a family of distributions $\{p(x|\theta)\}_{\theta \in \Theta}$ and one or more observations from a particular distribution $p(x|\theta^*)$ in this family, find a data compression strategy that preserves all information pertaining to $\theta^*$. The function identified by such a strategy is called a sufficient statistic.

1 Sufficient Statistics

Example 1 (Binary Source). Suppose $X$ is a 0/1-valued variable with $P(X = 1) = \theta$ and $P(X = 0) = 1 - \theta$.
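For the binary source, the idea of a lower dimensional summary can be made concrete. The excerpt stops before working the example, so the sketch below is an assumption-labelled illustration, not the lecture's own derivation: it uses the standard fact that for independent 0/1 observations the joint probability depends on the data only through the number of ones, and the function names are hypothetical.

```python
def bernoulli_likelihood(xs, theta):
    """p(x_1, ..., x_n | theta) for independent 0/1 observations."""
    like = 1.0
    for x in xs:
        like *= theta if x == 1 else 1.0 - theta
    return like

def likelihood_from_sum(t, n, theta):
    """Same probability written using only t = number of ones and n."""
    return theta ** t * (1.0 - theta) ** (n - t)

xs_a = [1, 0, 0, 1, 1, 0]
xs_b = [0, 1, 1, 0, 0, 1]  # different sequence, same number of ones

for theta in (0.2, 0.5, 0.9):
    print(theta,
          bernoulli_likelihood(xs_a, theta),
          bernoulli_likelihood(xs_b, theta),
          likelihood_from_sum(sum(xs_a), len(xs_a), theta))
```

For every $\theta$ the three numbers coincide up to rounding, so two data vectors with the same count of ones cannot be told apart by the likelihood. In that sense the count compresses $n$ observations into one number without losing information about $\theta$.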

On the Meaning and Use of Kurtosis

Psychological Methods, 1997, Vol. 2, No. 3, 292-307. Copyright 1997 by the American Psychological Association, Inc. 1082-989X/97/$3.00

On the Meaning and Use of Kurtosis

Lawrence T. DeCarlo, Fordham University

For symmetric unimodal distributions, positive kurtosis indicates heavy tails and peakedness relative to the normal distribution, whereas negative kurtosis indicates light tails and flatness. Many textbooks, however, describe or illustrate kurtosis incompletely or incorrectly. In this article, kurtosis is illustrated with well-known distributions, and aspects of its interpretation and misinterpretation are discussed. The role of kurtosis in testing univariate and multivariate normality; as a measure of departures from normality; in issues of robustness, outliers, and bimodality; in generalized tests and estimators, as well as limitations of and alternatives to the kurtosis measure $\beta_2$, are discussed.

It is typically noted in introductory statistics courses that distributions can be characterized in terms of central tendency, variability, and shape. With respect to shape, virtually every textbook defines and illustrates skewness. On the other hand, another aspect of shape, which is kurtosis, is either not discussed or, worse yet, is often described or illustrated incorrectly. Kurtosis is also frequently not reported in research articles, in spite of the fact that virtually every statistical package provides a measure of kurtosis.

... standard deviation. The normal distribution has a kurtosis of 3, and $\beta_2 - 3$ is often used so that the reference normal distribution has a kurtosis of zero ($\beta_2 - 3$ is sometimes denoted as $\gamma_2$). A sample counterpart to $\beta_2$ can be obtained by replacing the population moments with the sample moments, which gives

$$b_2 = \frac{\sum (X_i - \bar{X})^4 / n}{\left(\sum (X_i - \bar{X})^2 / n\right)^2}.$$
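The sample kurtosis described above, the fourth sample moment about the mean divided by the square of the second, is simple to compute directly. The following is a minimal sketch with an illustrative function name and a made-up data set; it uses the divisor $n$ in both moments, as in the sample counterpart of $\beta_2$.

```python
def sample_kurtosis_b2(xs):
    """b2 = m4 / m2^2, with m_k the k-th sample moment about the mean (divisor n)."""
    n = len(xs)
    xbar = sum(xs) / n
    m2 = sum((x - xbar) ** 2 for x in xs) / n
    m4 = sum((x - xbar) ** 4 for x in xs) / n
    return m4 / m2 ** 2

data = [1.0, 2.0, 3.0, 4.0, 5.0]  # hypothetical data, evenly spread
b2 = sample_kurtosis_b2(data)
excess = b2 - 3  # kurtosis measured relative to the normal reference value of 3
print(b2, excess)
```

For these five evenly spaced values the second moment is 2 and the fourth moment is 6.8, so $b_2 = 1.7$ and the value relative to the normal reference is negative, the light-tailed, flat case in the description above.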

On the Efficiency and Consistency of Likelihood Estimation in Multivariate

On the efficiency and consistency of likelihood estimation in multivariate conditionally heteroskedastic dynamic regression models∗

Gabriele Fiorentini, Università di Firenze and RCEA, Viale Morgagni 59, I-50134 Firenze, Italy <fi[email protected]fi.it>

Enrique Sentana, CEMFI, Casado del Alisal 5, E-28014 Madrid, Spain <sentana@cemfi.es>

Revised: October 2010

Abstract: We rank the efficiency of several likelihood-based parametric and semiparametric estimators of conditional mean and variance parameters in multivariate dynamic models with potentially asymmetric and leptokurtic strong white noise innovations. We study the elliptical case in detail, and show that Gaussian pseudo maximum likelihood estimators are inefficient except under normality. We provide consistency conditions for distributionally misspecified maximum likelihood estimators, and show that they coincide with the partial adaptivity conditions of semiparametric procedures. We propose Hausman tests that compare Gaussian pseudo maximum likelihood estimators with more efficient but less robust competitors. Finally, we provide finite sample results through Monte Carlo simulations.

Keywords: Adaptivity, Elliptical Distributions, Hausman tests, Semiparametric Estimators.

JEL: C13, C14, C12, C51, C52

∗We would like to thank Dante Amengual, Manuel Arellano, Nour Meddahi, Javier Mencía, Olivier Scaillet, Paolo Zaffaroni, participants at the European Meeting of the Econometric Society (Stockholm, 2003), the Symposium on Economic Analysis (Seville, 2003), the CAF Conference on Multivariate Modelling in Finance and Risk Management (Sandbjerg, 2006), the Second Italian Congress on Econometrics and Empirical Economics (Rimini, 2007), as well as audiences at AUEB, Bocconi, Cass Business School, CEMFI, CREST, EUI, Florence, NYU, RCEA, Roma La Sapienza and Queen Mary for useful comments and suggestions. Of course, the usual caveat applies.

The Probability Lifesaver: Order Statistics and the Median Theorem

The Probability Lifesaver: Order Statistics and the Median Theorem. Steven J. Miller, December 30, 2015.

Contents

- 1 Order Statistics and the Median Theorem
  - 1.1 Definition of the Median
  - 1.2 Order Statistics
  - 1.3 Examples of Order Statistics
  - 1.4 The Sample Distribution of the Median
  - 1.5 Technical bounds for proof of Median Theorem
  - 1.6 The Median of Normal Random Variables

Greetings again! In this supplemental chapter we develop the theory of order statistics in order to prove The Median Theorem. This is a beautiful result in its own right, but it is also extremely important as a substitute for the Central Limit Theorem, and allows us to say nontrivial things when the CLT is unavailable.

Chapter 1: Order Statistics and the Median Theorem

The Central Limit Theorem is one of the gems of probability. It's easy to use and its hypotheses are satisfied in a wealth of problems. Many courses build towards a proof of this beautiful and powerful result, as it truly is 'central' to the entire subject. Not to detract from the majesty of this wonderful result, however, what happens in those instances where it's unavailable? For example, one of the key assumptions that must be met is that our random variables need to have finite higher moments, or at the very least a finite variance. What if we were to consider sums of Cauchy random variables? Is there anything we can say? This is not just a question of theoretical interest, of mathematicians generalizing for the sake of generalization. The following example from economics highlights why this chapter is more than just of theoretical interest.

Minimum Rates of Approximate Sufficient Statistics

Minimum Rates of Approximate Sufficient Statistics

Masahito Hayashi, Fellow, IEEE, Vincent Y. F. Tan, Senior Member, IEEE

Abstract: Given a sufficient statistic for a parametric family of distributions, one can estimate the parameter without access to the data. However, the memory or code size for storing the sufficient statistic may nonetheless still be prohibitive. Indeed, for $n$ independent samples drawn from a $k$-nomial distribution with $d = k - 1$ degrees of freedom, the length of the code scales as $d \log n + O(1)$. In many applications, we may not have a useful notion of sufficient statistics (e.g., when the parametric family is not an exponential family) and we also may not need to reconstruct the generating distribution exactly. By adopting a Shannon-theoretic approach in which we allow a small error in estimating the generating distribution, we construct various approximate sufficient statistics and show that the code length can be reduced to $\frac{d}{2} \log n + O(1)$. We consider errors measured according to the relative entropy and variational distance criteria.

... when one is given $X$, then $Y$ is called a sufficient statistic relative to the family $\{P_{X|Z=z}\}_{z \in \mathcal{Z}}$. We may then write

$$P_{X|Z=z}(x) = \sum_{y \in \mathcal{Y}} P_{X|Y}(x|y)\, P_{Y|Z=z}(y), \quad \forall\, (x, z) \in \mathcal{X} \times \mathcal{Z} \qquad (1)$$

or more simply that $X - Y - Z$ forms a Markov chain in this order. Because $Y$ is a function of $X$, it is also true that $I(Z; X) = I(Z; Y)$. This intuitively means that the sufficient statistic $Y$ provides as much information about the parameter $Z$ as the original data $X$ does.

For concreteness in our discussions, we often (but not