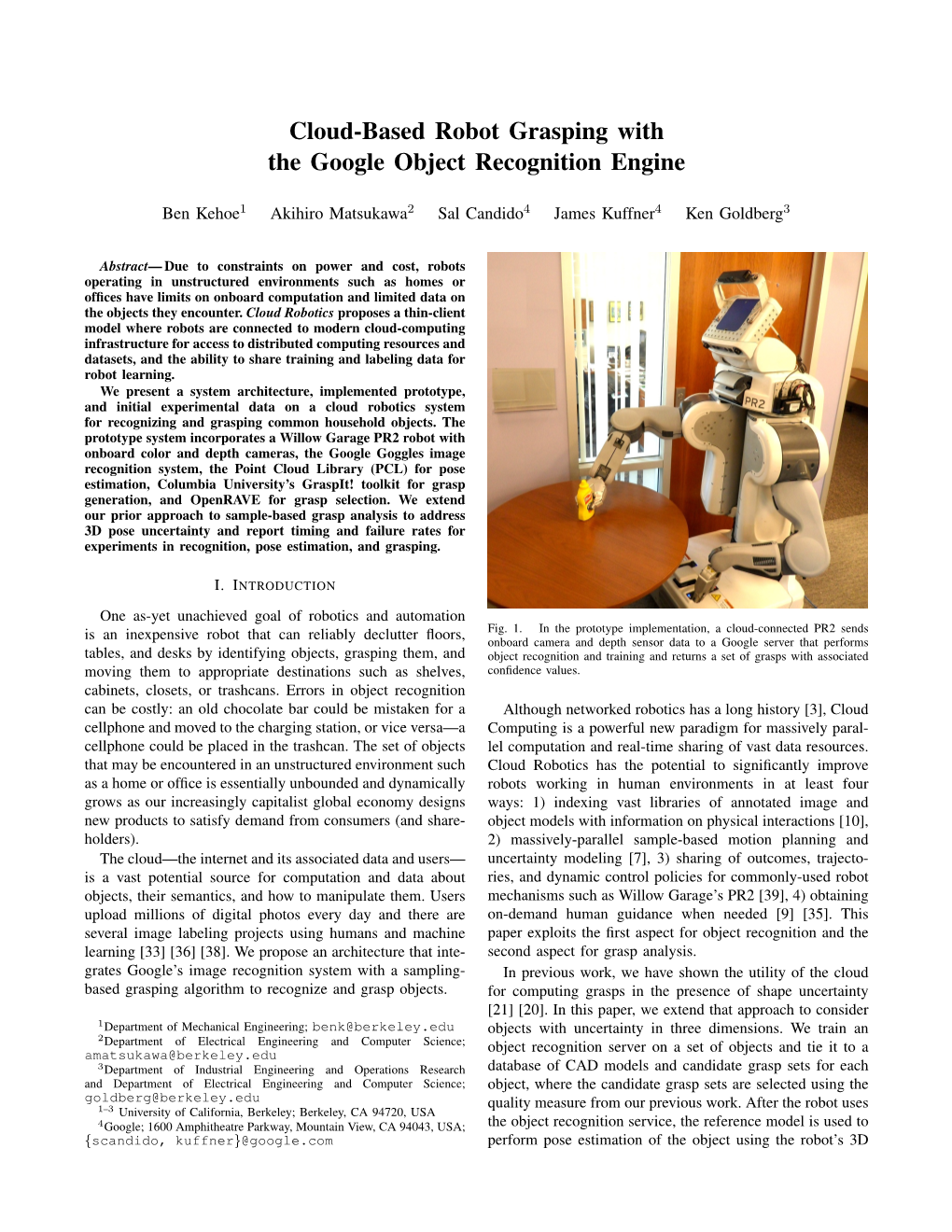

Cloud-Based Robot Grasping with the Google Object Recognition Engine

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Here Come the Robot Nurses () Rokid Air 4K AR Glasses Raise Over

Serving robot finds work at Victoria restaurant () Utopia VR - The Metaverse for Everyone () Utopia VR Promo Video () Facebook warned over ‘very small’ indicator LED on smart glasses, as EU DPAs flag privacy concerns () Google’s Former AI Ethics Chief Has a Plan to Rethink Big Tech () Introducing iPhone 13 Pro | Apple () Introducing Apple Watch Series 7 | Apple () Apple reveals Apple Watch Series 7, featuring a larger, more advanced display () Xiaomi shows off concept smart glasses with MicroLED display () Xiaomi Smart Glasses | Showcase | A display in front of your eyes () ‘Neurograins’ Could be the Next Brain-Computer Interfaces () Welcome back to the moment. With Ray-Ban x Facebook () Facebook's Ray-Ban Stories smart glasses: Cool or creepy? () Introducing WHOOP 4.0 - The Personalized Digital Fitness and Health Coach () Introducing WHOOP Body - The Future of Wearable Technology () The Future of Communication in the Metaverse () Making pancakes with Reachy through VR Teleoperation () Trump Camp Posts Fake Video Of Biden () More than 50 robots are working at Singapore's high-tech hospital () The manual for Facebook’s Project Aria AR glasses shows what it’s like to wear them () Tesla Bot explained () Tesla Bot Takes Tech Demos to Their Logical, Absurd Conclusion () Watch brain implant help man 'speak' for the first time in 15 years () An Artificial Intelligence Helped Write This Play. It May Contain Racism () Elon Musk REVEALS Tesla Bot () Meet Grace, a humanoid robot designed for healthcare () Horizon Workrooms - Remote Collaboration Reimagined () Atlas | Partners in Parkour () Page 1 of 165 Inside the lab: How does Atlas work? () Neural recording and stimulation using wireless networks of microimplants () The Metaverse is a Dystopian Nightmare. -

Converting an Internal Combustion Engine Vehicle to an Electric Vehicle

AC 2011-1048: CONVERTING AN INTERNAL COMBUSTION ENGINE VEHICLE TO AN ELECTRIC VEHICLE Ali Eydgahi, Eastern Michigan University Dr. Eydgahi is an Associate Dean of the College of Technology, Coordinator of PhD in Technology program, and Professor of Engineering Technology at the Eastern Michigan University. Since 1986 and prior to joining Eastern Michigan University, he has been with the State University of New York, Oak- land University, Wayne County Community College, Wayne State University, and University of Maryland Eastern Shore. Dr. Eydgahi has received a number of awards including the Dow outstanding Young Fac- ulty Award from American Society for Engineering Education in 1990, the Silver Medal for outstanding contribution from International Conference on Automation in 1995, UNESCO Short-term Fellowship in 1996, and three faculty merit awards from the State University of New York. He is a senior member of IEEE and SME, and a member of ASEE. He is currently serving as Secretary/Treasurer of the ECE Division of ASEE and has served as a regional and chapter chairman of ASEE, SME, and IEEE, as an ASEE Campus Representative, as a Faculty Advisor for National Society of Black Engineers Chapter, as a Counselor for IEEE Student Branch, and as a session chair and a member of scientific and international committees for many international conferences. Dr. Eydgahi has been an active reviewer for a number of IEEE and ASEE and other reputedly international journals and conferences. He has published more than hundred papers in refereed international and national journals and conference proceedings such as ASEE and IEEE. Mr. Edward Lee Long IV, University of Maryland, Eastern Shore Edward Lee Long IV graduated from he University of Maryland Eastern Shore in 2010, with a Bachelors of Science in Engineering. -

Android (Operating System) 1 Android (Operating System)

Android (operating system) 1 Android (operating system) Android Home screen displayed by Samsung Nexus S with Google running Android 2.3 "Gingerbread" Company / developer Google Inc., Open Handset Alliance [1] Programmed in C (core), C++ (some third-party libraries), Java (UI) Working state Current [2] Source model Free and open source software (3.0 is currently in closed development) Initial release 21 October 2008 Latest stable release Tablets: [3] 3.0.1 (Honeycomb) Phones: [3] 2.3.3 (Gingerbread) / 24 February 2011 [4] Supported platforms ARM, MIPS, Power, x86 Kernel type Monolithic, modified Linux kernel Default user interface Graphical [5] License Apache 2.0, Linux kernel patches are under GPL v2 Official website [www.android.com www.android.com] Android is a software stack for mobile devices that includes an operating system, middleware and key applications.[6] [7] Google Inc. purchased the initial developer of the software, Android Inc., in 2005.[8] Android's mobile operating system is based on a modified version of the Linux kernel. Google and other members of the Open Handset Alliance collaborated on Android's development and release.[9] [10] The Android Open Source Project (AOSP) is tasked with the maintenance and further development of Android.[11] The Android operating system is the world's best-selling Smartphone platform.[12] [13] Android has a large community of developers writing applications ("apps") that extend the functionality of the devices. There are currently over 150,000 apps available for Android.[14] [15] Android Market is the online app store run by Google, though apps can also be downloaded from third-party sites. -

Istep News 05-10

newsletter A publication from ifm efector featuring innovation steps in technology from around the world EDITORIAL TECHNOLOGY NEWS Dear Readers, Buckle your seatbelt. Augmented Reality This issue of i-Step Augmented Reality newsletter is designed to take you to a new Technology dimension – it’s called augmented reality (AR). The picture of the train to the right is not what it seems. Using your smartphone or tablet, take a moment to follow the three steps explained below the picture and watch what happens. AR will soon be infiltrating your everyday life. From the newspapers and magazines that you read to the buildings and restaurants that you walk by, AR bridges physical imagery The start up company Aurasma has developed the latest technology in with virtual reality to deliver content in augmented reality. To see what we’re talking about, follow these three steps a way that’s never been seen before. that explain how to get started and let the fun begin! Many of the images in this issue Step 1: Download the free Step 2: Scan this Step 3: Point your will come alive. Simply point your Aurasma Lite app QR code with any QR device’s camera at the device wherever your see the and to your smart phone reader on your device. complete photo above experience the fun of this new or tablet from the App This code connects you and be surprised! technology. or Android Stores. to the ifm channel. Enjoy the issue! There’s an adage that states “there’s is deemed old the minute the nothing older than yesterday’s news.” newspapers roll of the press. -

Physics 170 - Thermodynamic Lecture 40

Physics 170 - Thermodynamic Lecture 40 ! The second law of thermodynamic 1 The Second Law of Thermodynamics and Entropy There are several diferent forms of the second law of thermodynamics: ! 1. In a thermal cycle, heat energy cannot be completely transformed into mechanical work. ! 2. It is impossible to construct an operational perpetual-motion machine. ! 3. It’s impossible for any process to have as its sole result the transfer of heat from a cooler to a hotter body ! 4. Heat flows naturally from a hot object to a cold object; heat will not flow spontaneously from a cold object to a hot object. ! ! Heat Engines and Thermal Pumps A heat engine converts heat energy into work. According to the second law of thermodynamics, however, it cannot convert *all* of the heat energy supplied to it into work. Basic heat engine: hot reservoir, cold reservoir, and a machine to convert heat energy into work. Heat Engines and Thermal Pumps 4 Heat Engines and Thermal Pumps This is a simplified diagram of a heat engine, along with its thermal cycle. Heat Engines and Thermal Pumps An important quantity characterizing a heat engine is the net work it does when going through an entire cycle. Heat Engines and Thermal Pumps Heat Engines and Thermal Pumps Thermal efciency of a heat engine: ! ! ! ! ! ! From the first law, it follows: Heat Engines and Thermal Pumps Yet another restatement of the second law of thermodynamics: No cyclic heat engine can convert its heat input completely to work. Heat Engines and Thermal Pumps A thermal pump is the opposite of a heat engine: it transfers heat energy from a cold reservoir to a hot one. -

Internal and External Combustion Engine Classifications: Gasoline

Internal and External Combustion Engine Classifications: Gasoline and diesel engine classifications: A gasoline (Petrol) engine or spark ignition (SI) burns gasoline, and the fuel is metered into the intake manifold. Spark plug ignites the fuel. A diesel engine or (compression ignition Cl) bums diesel oil, and the fuel is injected right into the engine combustion chamber. When fuel is injected into the cylinder, it self ignites and bums. Preparation of fuel air mixture (gasoline engine): * High calorific value: (Benzene (Gasoline) 40000 kJ/kg, Diesel 45000 kJ/kg). * Air-fuel ratio: A chemically correct air-fuel ratio is called stoichiometric mixture. It is an ideal ratio of around 14.7:1 (14.7 parts of air to 1 part fuel by weight). Under steady-state engine condition, this ratio of air to fuel would assure that all of the fuel will blend with all of the air and be burned completely. A lean fuel mixture containing a lot of air compared to fuel, will give better fuel economy and fewer exhaust emissions (i.e. 17:1). A rich fuel mixture: with a larger percentage of fuel, improves engine power and cold engine starting (i.e. 8:1). However, it will increase emissions and fuel consumption. * Gasoline density = 737.22 kg/m3, air density (at 20o) = 1.2 kg/m3 The ratio 14.7 : 1 by weight equal to 14.7/1.2 : 1/737.22 = 12.25 : 0.0013564 The ratio is 9,030 : 1 by volume (one liter of gasoline needs 9.03 m3 of air to have complete burning). -

The 87 Best Android Apps of 2014 Source

The 87 Best Android Apps of 2014 Source: PCMAG Categories: Social Media Travel Productivity Health Fun Communication Security Tools Food Write Read Google Hangouts Google Hangouts Cost: Free Google has replaced Talk with Google Hangouts. It is a web- based and mobile video chat service. Translators and interpreters can easily host a video meeting with up to 12 participants. All participants will need a Google+ account. For those camera shy, it is simple turn off video to allow audio to stream through. Other features include text chat, share pictures and videos. Just drop your image or share your message in Hangouts chat box, and your clients receive it instantly. In iOS and Android Hangouts supports virtually every device and version. Another nice feature is the ability to use your front or rear-facing camera in your video chat at any time. Google Voice Google Voice Cost: Free Google Voice integrates seamlessly with your Android phone. Voice offers a single number that will ring through to as many devices as you wish. To protect your privacy, use the spam and blocking filters. You can also use Google Voice make cheap international calls. Voice also can send and receive free text from any device, even the desktop PC. Viber Viber Cost: Free Viber is a mobile app that allows you to make phone calls and send text messages to all other Viber users for free. Viber is available over WiFi or 3G. Sound quality is excellent. From your Android, you can easily transfer a voice call to the Viber PC app and keep talking. -

Building Android Apps with HTML, CSS, and Javascript

SECOND EDITION Building Android Apps with HTML, CSS, and JavaScript wnload from Wow! eBook <www.wowebook.com> o D Jonathan Stark with Brian Jepson Beijing • Cambridge • Farnham • Köln • Sebastopol • Tokyo Building Android Apps with HTML, CSS, and JavaScript, Second Edition by Jonathan Stark with Brian Jepson Copyright © 2012 Jonathan Stark. All rights reserved. Printed in the United States of America. Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472. O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://my.safaribooksonline.com). For more information, contact our corporate/institutional sales department: (800) 998-9938 or [email protected]. Editor: Brian Jepson Cover Designer: Karen Montgomery Production Editor: Kristen Borg Interior Designer: David Futato Proofreader: O’Reilly Production Services Illustrator: Robert Romano September 2010: First Edition. January 2012: Second Edition. Revision History for the Second Edition: 2012-01-10 First release See http://oreilly.com/catalog/errata.csp?isbn=9781449316419 for release details. Nutshell Handbook, the Nutshell Handbook logo, and the O’Reilly logo are registered trademarks of O’Reilly Media, Inc. Building Android Apps with HTML, CSS, and JavaScript, the image of a maleo, and related trade dress are trademarks of O’Reilly Media, Inc. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and O’Reilly Media, Inc., was aware of a trademark claim, the designations have been printed in caps or initial caps. While every precaution has been taken in the preparation of this book, the publisher and authors assume no responsibility for errors or omissions, or for damages resulting from the use of the information con- tained herein. -

Robosuite: a Modular Simulation Framework and Benchmark for Robot Learning

robosuite: A Modular Simulation Framework and Benchmark for Robot Learning Yuke Zhu Josiah Wong Ajay Mandlekar Roberto Mart´ın-Mart´ın robosuite.ai Abstract robosuite is a simulation framework for robot learning powered by the MuJoCo physics engine. It offers a modular design for creating robotic tasks as well as a suite of benchmark environments for reproducible re- search. This paper discusses the key system modules and the benchmark environments of our new release robosuite v1.0. 1 Introduction We introduce robosuite, a modular simulation framework and benchmark for robot learning. This framework is powered by the MuJoCo physics engine [15], which performs fast physical simulation of contact dynamics. The overarching goal of this framework is to facilitate research and development of data-driven robotic algorithms and techniques. The development of this framework was initiated from the SURREAL project [3] on distributed reinforcement learning for robot manipulation, and is now part of the broader Advancing Robot In- telligence through Simulated Environments (ARISE) Initiative, with the aim of lowering the barriers of entry for cutting-edge research at the intersection of AI and Robotics. Data-driven algorithms [9], such as reinforcement learning [13,7] and imita- tion learning [12], provide a powerful and generic tool in robotics. These learning arXiv:2009.12293v1 [cs.RO] 25 Sep 2020 paradigms, fueled by new advances in deep learning, have achieved some excit- ing successes in a variety of robot control problems. Nonetheless, the challenges of reproducibility and the limited accessibility of robot hardware have impaired research progress [5]. In recent years, advances in physics-based simulations and graphics have led to a series of simulated platforms and toolkits [1, 14,8,2, 16] that have accelerated scientific progress on robotics and embodied AI. -

2(D) Citation Watch – Google Inc Towergatesoftware Towergatesoftware.Com 1 866 523 TWG8

2(d) Citation Watch – Google inc towergatesoftware towergatesoftware.com 1 866 523 TWG8 Firm/Corresp Owner (cited) Mark (cited) Mark (refused) Owner (refused) ANDREW ABRAMS Google Inc. G+ EXHIBIA SOCIAL SHOPPING F OR Exhibía OY 85394867 G+ ACCOUNT REQUIRED TO BID 86325474 Andrew Abrams Google Inc. GOOGLE CURRENTS THE GOOGLE HANDSHAKE Goodway Marketing Co. 85564666 85822092 Andrew Abrams Google Inc. GOOGLE TAKEOUT GOOGLEBEERS "Munsch, Jim" 85358126 86048063 Annabelle Danielvarda Google Inc. BROADCAST YOURSELF ORR TUBE BROADCAST MYSELF "Orr, Andrew M" 78802315 85206952 Annabelle Danielvarda Google Inc. BROADCAST YOURSELF WEBCASTYOURSELF Todd R Saunders 78802315 85213501 Annabelle Danielvarda Google Inc. YOUTUBE ORR TUBE BROADCAST MYSELF "Orr, Andrew M" 77588871 85206952 Annabelle Danielvarda Google Inc. YOUTUBE YOU PHOTO TUBE Jorge David Candido 77588871 85345360 Annabelle Danielvarda Google Inc. YOUTUBE YOUTOO SOCIAL TV "Youtoo Technologies, Llc" 77588871 85192965 Building 41 Google Inc. GMAIL GOT GMAIL? "Kuchlous, Ankur" 78398233 85112794 Building 41 Google Inc. GMAIL "VOG ART, KITE, SURF, SKATE, "Kruesi, Margaretta E." 78398233 LIFE GRETTA KRUESI WWW.GRETTAKRUESI.COM [email protected]" 85397168 "BUMP TECHNOLOGIES, INC." GOOGLE INC. BUMP PAY BUMPTOPAY Nexus Taxi Inc 85549958 86242487 1 Copyright 2015 TowerGate Software Inc 2(d) Citation Watch – Google inc towergatesoftware towergatesoftware.com 1 866 523 TWG8 Firm/Corresp Owner (cited) Mark (cited) Mark (refused) Owner (refused) "BUMP TECHNOLOGIES, INC." GOOGLE INC. BUMP BUMP.COM Bump Network 77701789 85287257 "BUMP TECHNOLOGIES, INC." GOOGLE INC. BUMP BUMPTOPAY Nexus Taxi Inc 77701789 86242487 Christine Hsieh Google Inc. GLASS GLASS "Border Stylo, Llc" 85661672 86063261 Christine Hsieh Google Inc. GOOGLE MIRROR MIRROR MIX "Digital Audio Labs, Inc." 85793517 85837648 Christine Hsieh Google Inc. -

Google Starts Visual Search for Mobile Devices and Realtime Search in the Internet

Communiqué de presse New Search Functions: Google starts visual search for mobile devices and realtime search in the internet Zurich, December 7, 2009 – Google was founded as a search company, and we continue to dedicate more time and energy to search than anything else we do. Today at an event in California, Google announced some innovative new additions to the search family: Google Goggles (Labs) is a new visual search application for Android devices that allows you to search with a picture instead of words. When you take a picture of an object with the camera on your phone, we attempt to recognize the item, and return relevant search results. Have you ever seen a photo of a beautiful place and wanted to know where it is? Or stumbled across something in the newspaper and wanted to learn more? Have you ever wanted more information about a landmark you’re visiting? Or wanted to store the contact details from a business card while you’re on the go? Goggles can help with all of these, and more. Google Goggles works by attempting to match portions of a picture against our corpus of images. When a match is found, we return search terms that are relevant to the matched image. Goggles currently recognizes tens of millions of objects, including places, famous works of art, and logos. For places, you don't even need to take a picture. Just open Google Goggles and hold your phone in front of the place you're interested in, then using the device’s GPS and compass, Goggles will recognise what that place is and display its name on the camera viewfinder. -

Toward Multi-Engine Machine Translation Sergei Nirenburg and Robert Frederlcing Center for Machine Translation Carnegie Mellon University Pittsburgh, PA 15213

Toward Multi-Engine Machine Translation Sergei Nirenburg and Robert Frederlcing Center for Machine Translation Carnegie Mellon University Pittsburgh, PA 15213 ABSTRACT • a knowledge-based MT (K.BMT) system, the mainline Pangloss Current MT systems, whatever translation method they at present engine[l]; employ, do not reach an optimum output on free text. Our hy- • an example-based MT (EBMT) system (see [2, 3]; the original pothesis for the experiment reported in this paper is that if an MT idea is due to Nagao[4]); and environment can use the best results from a variety of MT systems • a lexical transfer system, fortified with morphological analysis working simultaneously on the same text, the overallquality will im- and synthesis modules and relying on a number of databases prove. Using this novel approach to MT in the latest version of the - a machine-readable dictionary (the Collins Spanish/English), Pangloss MT project, we submit an input text to a battery of machine the lexicons used by the KBMT modules, a large set of user- translation systems (engines), coLlect their (possibly, incomplete) re- generated bilingual glossaries as well as a gazetteer and a List sults in a joint chaR-like data structure and select the overall best of proper and organization names. translation using a set of simple heuristics. This paper describes the simple mechanism we use for combining the findings of the various translation engines. The results (target language words and phrases) were recorded in a chart whose initial edges corresponded to words in the source language input. As a result of the operation of each of the MT 1.