End-To-End Deep Diagnosis of X-Ray Images

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

gVirtualXRay: Open-Source Library to Simulate Transmission X-Ray Images, Presented at IBFEM-4i 2018

gVirtualXRay: Open-source Library to Simulate Transmission X-ray Images. Presented at IBFEM-4i 2018. Franck P. Vidal, Lecturer in Visualisation, School of Computer Science and Electronic Engineering, Bangor University. Wed 5 Sep 2018. Outline: 1 Statement; 2 Background; 3 Simulation Model; 4 Validation; 5 Application examples; 6 Case study; 7 Conclusions. Parts of this work have been funded by: FP7 Career Integration Grant: Fly4PET: Fly Algorithm in PET Reconstruction for Radiotherapy Treatment Planning, http://fly4pet.fpvidal.net/; FP7 ICT Collaborative project: RASimAs: Regional Anaesthesia Simulator and Assistant, http://www.rasimas.eu/. The Titan Xp used for this research was donated by […]. Thanks to Dr Jean-Michel Létang, INSA de Lyon, for his support during the data acquisition. Rationale for this Research (in Medical VR): simulation of X-ray attenuation has been extensively studied in physics; different physically-based simulation codes are available; physically-based simulation is usually performed using Monte Carlo methods on CPU (often used in dosimetry for radiotherapy); computing an image requires a very long time; ray-tracing techniques are an alternative, but still relatively slow on CPU; there is a need for a fast open-source graphics processing unit (GPU) implementation. Timeline: N. Freud, "Modelling and simulation of X- and γ-ray imaging systems", PhD thesis, INSA de Lyon, France, 2003; F. P. Vidal, "Modelling the response of x-ray detectors and removing artefacts in 3D tomography", Master's thesis, INSA de Lyon, France, Sep.

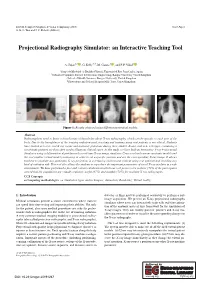

Projectional Radiography Simulator: an Interactive Teaching Tool

EG UK Computer Graphics & Visual Computing (2019), Short Paper. G. K. L. Tam and J. C. Roberts (Editors). Projectional Radiography Simulator: an Interactive Teaching Tool. A. Sujar1,2, G. Kelly3,4, M. García1, and F. P. Vidal2. 1Grupo de Modelado y Realidad Virtual, Universidad Rey Juan Carlos, Spain; 2School of Computer Science & Electronic Engineering, Bangor University, United Kingdom; 3School of Health Sciences, Bangor University, United Kingdom; 4Shrewsbury and Telford Hospital NHS Trust, United Kingdom. Figure 1: Results obtained using different anatomical models. Abstract: Radiographers need a broad range of knowledge about X-ray radiography, which can be specific to each part of the body. Due to the harmfulness of the ionising radiation used, teaching and training using real patients is not ethical. Students have limited access to real X-ray rooms and anatomic phantoms during their studies. Books, and now web apps, containing a set of static pictures are then often used to illustrate clinical cases. In this study, we have built an Interactive X-ray Projectional Simulator using a deformation algorithm with a real-time X-ray image simulator. Users can load various anatomic models, and the tool enables virtual model positioning in order to set a specific position and see the corresponding X-ray image. It allows teachers to simulate any particular X-ray projection in a lecturing environment without using real patients and avoiding any kind of radiation risk. This tool also allows the students to reproduce the important parameters of a real X-ray machine in a safe environment. We have performed a face and content validation in which our tool proves to be realistic (72% of the participants agreed that the simulations are visually realistic), useful (67%) and suitable (78%) for teaching X-ray radiography.

Radiologists: Threatened by a Veritable Identity Crisis?

Current Trends in Clinical & Medical Imaging, ISSN: 2573-2609. Opinion. Curr Trends Clin Med Imaging, Volume 2 Issue 1, September 2017. Copyright © All rights are reserved by Werner Albert Golder. DOI: 10.19080/CTCMI.2017.02.555578. Radiologists: Threatened by a Veritable Identity Crisis? Werner Albert Golder*, Association D'Imagerie Medicale, France. Submission: September 11, 2017; Published: September 18, 2017. *Corresponding author: Werner Albert Golder, MD PhD, Professor of Radiology, 23, Rue de l'Oriflamme, F-84000 Avignon, France. Introduction: Radiologists have always found it harder than representatives of other disciplines to ensure that their patients perceive and recognize them as true physicians rather than medical technicians. They are too strongly and too unilaterally associated with the machines they operate and with their products; in many cases, they are also too far removed from the pain and anxiety that prompted the patients to see a physician. Many radiologists have come to terms with this situation, accepting their role as representatives of a paramedical science more or less uncomplainingly and trying to make the best of things. […] Unlike the conventional radiograph, the digital radiograph is no longer a definitive, unique document; this product can no longer claim unrestricted copyright. Although radiologists obtain the dataset, they have no influence over what is done with it and what is made of it. The radiographic representation they have chosen is only one of many alternative ways of presenting the dataset optically. This limitation applies both to projectional radiography and diagnostic cross-sectional imaging. Whoever receives the raw data of an examination with the data pack can use the integrated

Patient Dose Audit in Computed Tomography at Cancer Institute of Guyana

Journal of Medical Diagnostic Methods, ISSN: 2168-9784. Research Article. Patient Dose Audit in Computed Tomography at Cancer Institute of Guyana. Ramzee Small1, Petal P Surujpaul2*, and Sayan Chakraborty3. 1Medical Imaging Technologist, University of Guyana, Guyana; 2Medical Physics, Georgetown Public Hospital Cooperation (GPHC), University of Guyana, Guyana. Abstract. Objective: This study is the first investigation of a computed tomography (CT) unit in Guyana, at the Cancer Institute of Guyana (CIG), aimed at performing an audit of the radiation dose estimated by the GE LightSpeed QXi CT unit for common computed tomography examinations. Method: A RaySafe X2 CT calibration detector was used to obtain measurements for common CT examinations (head, neck, chest, abdomen, pelvis, upper extremity and lower extremity) done in free air as the control (36 data points) and also with patients (35 data points). Patient measurements were limited to the head, chest, abdomen and pelvis CT examinations. Exposure/electro-technical parameters and dose metrics (CTDIvol, DLP) were recorded, and the k-coefficient conversion factor established by the National Radiology Protection Board (NRPB) was used to calculate the effective dose associated with each examination. Results: The results indicated that the CT unit overestimated the dose for patient measurements and underestimated the dose for measurements taken in air, with the exception of the head protocol, which showed overestimation with the patient measurements. Both over- and underestimation were documented for the neck protocol. Comparison of the estimated doses with published data shows that techniques and radiation dose vary across institutions for similar examinations and that the protocols reported by CIG documented an overall higher effective dose.

gVirtualXRay: Virtual X-Ray Imaging Library on GPU

gVirtualXRay: Virtual X-Ray Imaging Library on GPU. Aaron Sujar, Andreas Meuleman, Pierre-Frédéric Villard, Marcos Garcia, Franck Vidal. To cite this version: Aaron Sujar, Andreas Meuleman, Pierre-Frédéric Villard, Marcos Garcia, Franck Vidal. gVirtualXRay: Virtual X-Ray Imaging Library on GPU. Tao Ruan Wan and Franck Vidal. Computer Graphics and Visual Computing, Sep 2017, Manchester, United Kingdom. The Eurographics Association, pp.61-68, 2017, Computer Graphics and Visual Computing <https://diglib.eg.org/handle/10.2312/2631686>. <10.2312/cgvc.20171279>. <hal-01588532>. HAL Id: hal-01588532, https://hal.archives-ouvertes.fr/hal-01588532. Submitted on 15 Sep 2017. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Distributed under a Creative Commons CC BY - Attribution 4.0 International License. EG UK Computer Graphics & Visual Computing (2017), Tao Ruan Wan and Franck Vidal (Editors). A. Sujar1,2,3, A. Meuleman3,4, P.-F. Villard5,6,7, M. García1,2, and F. P. Vidal3. 1 URJC Universidad Rey Juan Carlos, España; 2 GMRV Grupo de Modelado y Realidad Virtual; 3 Bangor University, United Kingdom; 4 Institut National des Sciences Appliquées de Rouen, France; 5 Universite de Lorraine, LORIA, UMR 7503, Vandoeuvre-les-Nancy F-54506, France; 6 Inria, Villers-les-Nancy F-54600, France; 7 CNRS, LORIA, UMR 7503, Vandoeuvre-les-Nancy F-54506, France. Abstract: We present an open-source library called gVirtualXRay to simulate realistic X-ray images in real time.

A Porcine Model

Journal of Forensic Radiology and Imaging 2 (2014) 20–24. Technical note. The impact of analogue and digital radiography for the identification of occult post-mortem rib fractures in neonates: A porcine model. Jonathan P. McNulty a,*, Niall P. Burke a, Natalie A. Pelletier b, Tania Grgurich b, Robert B. Lombardo c, William F. Hennessy b, Gerald J. Conlogue b,c. a Diagnostic Imaging, Health Sciences Centre, School of Medicine and Medical Science, University College Dublin, Belfield, Dublin 4, Ireland; b Diagnostic Imaging, Quinnipiac University, Hamden, CT, USA; c Bioanthropology Research Institute, Quinnipiac University, Hamden, CT, USA. Article history: Received 8 July 2013; Received in revised form 19 August 2013; Accepted 9 September 2013; Available online 16 September 2013. Keywords: Post-mortem imaging; Forensic radiology. Abstract. Objectives: Conventional radiography remains a valuable tool in forensic imaging, particularly where resources are limited. However, employing radiography to document occult fractures in infants less than 1 year old can be challenging. In order to clearly visualise these subtle fractures, several technical factors must be taken into consideration. This study will explore and validate a range of radiographic approaches to such forensic cases. Materials and methods: This study compares three imaging systems: a standard radiographic unit, a mammographic unit and an X-ray cabinet unit. All images were recorded using mammographic film or a digital computed radiography (CR) system, using varying exposure factors and a foetal pig with a post-mortem fracture of the right third rib.

Sudan Academy of Science Atomic Energy Council

SUDAN ACADEMY OF SCIENCE, ATOMIC ENERGY COUNCIL. ASSESSMENT OF PATIENT RADIATION DOSES DURING ROUTINE DIAGNOSTIC RADIOGRAPHY EXAMINATIONS. A THESIS SUBMITTED FOR THE REQUIREMENTS OF MASTER DEGREE IN RADIATION PROTECTION. By: Asim Karam Aldden Adam Abas Adam, B.Sc. in Physics (honors). Supervisor: Dr. Abdelmoneim Adam Mohamed Sulieman. November 2015. Dedication: To those whom I have always loved: my parents, my brothers and sisters, my wife. Acknowledgment: I deeply thank Dr. Abdelmoneim Adam Mohamed for his support and guidance until this work was accomplished. My thanks go to the staff technologists at Khartoum Teaching Hospital, Fedail Hospital, Enaze Center in Bahary and the National Ribat University Hospital. My thanks extend to everyone who helped me in different ways to make this work possible. Finally, I would like to sincerely thank my brother Almobarak for his help and encouragement. Contents. Chapter One: Introduction: 1.1 Introduction; 1.2 Source of ionizing radiation; 1.2.1 Medical radiation; 1.3 Radiation Risks from Medical X-rays; 1.4 Biological effect; 1.5 Stochastic effect; 1.6 Quality assurance; 1.7 Problem of the study; 1.8 Objectives of the study; 1.9 Significance of the Study. Chapter Two: Theoretical Background: 2.1 X-ray machine components; 2.1.1 The X-ray tube; 2.2 Properties of X-rays; 2.3 Production of X-rays; 2.4 The interaction of X-rays with matter; 2.6 The design of the X-ray room; 2.7 X-ray images; 2.8 Radiation dosimetry; 2.9 Units; 2.11 Conventional radiography; 2.13 Principles of radiation

Artificial Intelligence in Diagnostic Imaging: Impact on the Radiography Profession

Artificial intelligence in diagnostic imaging: impact on the radiography profession. Item Type: Article. Authors: Hardy, Maryann L.; Harvey, H. Citation: Hardy M and Harvey H (2020) Artificial intelligence in diagnostic imaging: impact on the radiography profession. British Journal of Radiology. 93(1108): 20190840. Rights: © 2020 The Authors. Published by the British Institute of Radiology. Reproduced in accordance with the publisher's self-archiving policy. Download date: 24/09/2021 16:11:34. Link to Item: http://hdl.handle.net/10454/17732. BJR, https://doi.org/10.1259/bjr.20190840. Received: 30 September 2019; Revised: 29 November 2019; Accepted: 04 December 2019. REVIEW ARTICLE. 1MARYANN HARDY, PhD, MSc, BSc(Hons), DCR(R) and 2HUGH HARVEY, MBBS BSc(Hons) FRCR MD(Res). 1University of Bradford, Bradford, England; 2Hardian Health, Haywards Heath, UK. Address correspondence to: Professor Maryann Hardy, E-mail: M.L.Hardy1@bradford.ac.uk. ABSTRACT: The arrival of artificially intelligent systems into the domain of medical imaging has focused attention and sparked much debate on the role and responsibilities of the radiologist. However, discussion about the impact of such technology on the radiographer role is lacking. This paper discusses the potential impact of artificial intelligence (AI) on the radiography profession by assessing current workflow and cross-mapping potential areas of AI automation such as procedure planning, image acquisition and processing.

How Do We Measure Dose and Estimate Risk?

Invited Paper. How do we measure dose and estimate risk? Christoph Hoeschen*a, Dieter Regulla a, Helmut Schlattl a, Nina Petoussi-Henss a, Wei Bo Li a, Maria Zankl a. a Department of Medical Radiation Physics and Diagnostics, Helmholtz Zentrum München, Ingolstädter Landstr. 1, 85764 Neuherberg, Germany. ABSTRACT: Radiation exposure due to medical imaging is a topic of emerging importance. In Europe this topic has been dealt with for a long time; in other countries it is becoming more and more important and has attracted public interest in recent years. This is mainly because the average dose per person in developed countries is increasing rapidly, since three-dimensional imaging is becoming more and more available and useful for diagnosis. This paper introduces the most common dose quantities used in medical radiation exposure characterization, and discusses the usual ways of determining such quantities as well as some considerations on how these values are linked to radiation risk estimation. For this last aspect the paper will refer to the linear non-threshold theory for an imaging application. Keywords: dose quantities, risk estimates, dose measurements, simulation tools. 1. INTRODUCTION: Projectional radiography and, even more, three-dimensional (3D) medical imaging technologies like CT are of great importance for the diagnosis of diseases and therefore for the patient. The digital X-ray imaging technologies and the possibilities of fast and large-volume data acquisition in multislice CT, and more and more also in nuclear medical imaging technologies like SPECT or PET, have changed clinical practice to a large extent.

High Resolution MRI for Quantitative Assessment of Inferior Alveolar Nerve Impairment in Course of Mandible Fractures

Scientific Reports (www.nature.com/scientificreports), open access. High resolution MRI for quantitative assessment of inferior alveolar nerve impairment in course of mandible fractures: an imaging feasibility study. Egon Burian1*, Nico Sollmann1, Lucas M. Ritschl2, Benjamin Palla3, Lisa Maier1, Claus Zimmer1, Florian Probst4, Andreas Fichter2, Michael Miloro3 & Monika Probst1. The purpose of this study was to evaluate a magnetic resonance imaging (MRI) protocol for direct visualization of the inferior alveolar nerve in the setting of mandibular fractures. Fifteen patients suffering from unilateral mandible fractures involving the inferior alveolar nerve (15 affected IAN and 15 unaffected IAN from contralateral side) were examined on a 3 T scanner (Elition, Philips Healthcare, Best, the Netherlands) and compared with 15 healthy volunteers (30 IAN in total). The sequence protocol consisted of a 3D STIR, 3D DESS and 3D T1 FFE sequence. Apparent nerve-muscle contrast-to-noise ratio (aNMCNR), apparent signal-to-noise ratio (aSNR), nerve diameter and fracture dislocation were evaluated by two radiologists and correlated with nerve impairment. Furthermore, dislocation as depicted by MRI was compared to computed tomography (CT) images. Patients with clinically evident nerve impairment showed a significant increase of aNMCNR, aSNR and nerve diameter compared to healthy controls and to the contralateral side (p < 0.05). Furthermore, the T1 FFE sequence allowed dislocation depiction comparable to CT. This prospective study provides a rapid imaging protocol using the 3D STIR and 3D T1 FFE sequence that can directly assess both mandible fractures and IAN damage. In patients with hypoesthesia following mandibular fractures, increased aNMCNR, aSNR and nerve diameter on MRI may help identify patients with a risk of prolonged or permanent hypoesthesia at an early time.

WO 2011/003902 A2

(12) INTERNATIONAL APPLICATION PUBLISHED UNDER THE PATENT COOPERATION TREATY (PCT)
(19) World Intellectual Property Organization, International Bureau
(10) International Publication Number: WO 2011/003902 A2
(43) International Publication Date: 13 January 2011 (13.01.2011)
(51) International Patent Classification: A61K 49/00 (2006.01)
(21) International Application Number: PCT/EP2010/059631
(22) International Filing Date: 6 July 2010 (06.07.2010)
(25) Filing Language: English
(26) Publication Language: English
(30) Priority Data: 10 2009 032 189.6, 7 July 2009 (07.07.2009), DE
(72) Inventor; and (71) Applicant: BARTLING, Soenke [DE/DE]; Bussemergasse 20, 69117 Heidelberg (DE).
(81) Designated States (unless otherwise indicated, for every kind of national protection available): AE, AG, AL, AM, AO, AT, AU, AZ, BA, BB, BG, BH, BR, BW, BY, BZ, CA, CH, CL, CN, CO, CR, CU, CZ, DE, DK, DM, DO, DZ, EC, EE, EG, ES, FI, GB, GD, GE, GH, GM, GT, HN, HR, HU, ID, IL, IN, IS, JP, KE, KG, KM, KN, KP, KR, KZ, LA, LC, LK, LR, LS, LT, LU, LY, MA, MD, ME, MG, MK, MN, MW, MX, MY, MZ, NA, NG, NI, NO, NZ, OM, PE, PG, PH, PL, PT, RO, RS, RU, SC, SD, SE, SG, SK, SL, SM, ST, SV, SY, TH, TJ, TM, TN, TR, TT, TZ, UA, UG, US, UZ, VC, VN, ZA, ZM, ZW.
(84) Designated States (unless otherwise indicated, for every kind of regional protection available): ARIPO (BW, GH, GM, KE, LR, LS, MW, MZ, NA, SD, SL, SZ, TZ, UG, ZM, ZW), Eurasian (AM, AZ, BY, KG, KZ, MD, RU, TJ,