Touch Sensitive User Interface Module for ARM Cortex-M3

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Cinos-Lg-Digital-Signage-Brochure.Pdf

Archived resources. For further resources and documentation please visit us: www.cinos.net. 2020 GLOBAL LG DIGITAL SIGNAGE: Commercial Displays and Solutions. * All images used are for illustrative purposes only. www.lg.com/global/business/information-display, www.youtube.com/c/LGECommercialDisplay, www.facebook.com/LGInformationDisplay, www.linkedin.com/company/lginformationdisplay, twitter.com/LG_ID_HQ. Download the LG C-Display+ mobile app from the Google Play Store. LG may make changes to specifications and product descriptions without notice. Copyright © 2020 LG Electronics Inc. All rights reserved. “LG Life’s Good” is a registered trademark of LG Corp. The names of products and brands mentioned here may be the trademarks of their respective owners. Shape Immersive Moments. Share Innovative Experience. Believe your business should be more impactful and iconic. LG Business Solution is here to realize the true potential of your business. Display all your boundless ideas on digital signage and watch the technology of LG Business Solutions shape them into immersive moments. Make your ideas more outstanding through reality and vividness beyond your imagination and interactivity that allows people to engage. All immersive moments combine to create an innovative experience for your business. CONTENTS: LG Digital Signage Introductions: LED, OLED, High Brightness, Touch, Video Wall, Stretch, UHD, FHD; webOS Smart Signage Platform; SuperSign Solution; Extended Care. LED: Various Designs for Customer’s Demands, Offers Great Visual Effects Through High Durability. LG boasts unprecedented visual performance and stability by expanding its industry-leading display technology to LED signage. LG also has a variety of lineups to offer customized solutions in the indoor/outdoor environment. -

IDW ’09: The 16th International Display Workshops, Final Program

IDW ’09: THE 16TH INTERNATIONAL DISPLAY WORKSHOPS. Workshops on: LC Science and Technologies (LCT); Active Matrix Displays (AMD); FPD Manufacturing, Materials and Components (FMC); Plasma Displays (PDP); EL Displays and Phosphors (PH); Field Emission Display and CRT (FED); Organic LED Displays (OLED); 3D/Hyper-Realistic Displays and Systems (3D); Applied Vision and Human Factors (VHF); Projection and Large-Area Displays, and Their Components (LAD); Electronic Paper (EP); MEMS for Future Displays and Related Electron Devices (MEMS); Display Electronic Systems (DES). Topical Sessions on: Flexible Displays (FLX); Input Technologies (INP). Final Program. World Convention Center Summit, Miyazaki, Japan, December 9 (Wed) – 11 (Fri), 2009. IDW ’09 Secretariat: c/o Bilingual Group Ltd., 3-3-6 Kudan Minami, Chiyoda-ku, Tokyo 102-0074, Japan. IDW ’09 FINAL PROGRAM CONTENTS: Program Highlights; General Information; Hotel and Travel Information; Plenary Sessions (Wednesday, December 9): IDW ’09 Opening, IDW ’09 Keynote Addresses, IDW ’09 Invited Addresses; Workshop on LC Science -

Information Display Magazine Jan/Feb 2016 Vol. 32, No. 1

SOCIETY FOR INFORMATION DISPLAY. Information Display, January/February 2016, Vol. 32, No. 1. ON THE COVER: The growth in digital signage remains significant for the foreseeable future. Increasing market demand, which is being met with an aggressive push to develop new products, is enabling improvements in all current technologies, especially those that are LED based. Many new applications are emerging based on these new innovations. The ever-widening availability of content is also driving the need for more displays. Cover Design: Jodi Buckley. Contents: Editorial: Sign of the Times, by Stephen P. Atwood. Industry News, by Jenny Donelan. Guest Editorial: Amorphous? Again??, by John F. Wager. Frontline Technology: Elemental Evolution of Digital-Signage Components. The strong market growth of digital signage over the past 10 years will be outshone by the expanded performance and applications made possible through recent display and system advances. By Gary Feather. Frontline Technology: New Directions for Digital Signage and Public Displays. Part one of our two-part series on the market dynamics of digital-signage applications for commercial (or public) displays looks at the growth of the industry and the technologies that are helping shape that growth into the future. By Todd Fender. Frontline Technology: Oxide TFTs: A Progress Report. For flat-panel-display backplane applications, oxide-TFT technology is the new kid on the block, recently conceived and in its early stages of commercialization. -

Sony Corporate and Education Solutions Range Guide, February 2018

Corporate and Education solutions range guide, February 2018. www.pro.sony.eu. Contents: The Sony Solution; Service & Support; Education and Business Projectors; Professional Displays; Visual Imaging Cameras; Visual Simulation and Visual Entertainment. The Sony Solution: When it comes to professional AV technology, Sony provides much more than just great products - we create solutions. Complete solutions that make visual communications and knowledge sharing even smarter and more efficient. We empower all kinds of organisations with advanced audio-visual tools that help them go further. Solutions designed for every industry and sector, from schools to universities, small business to big business, retail to automotive and more. Solutions like our Laser Light Source Projectors that deliver powerful images with virtually no maintenance. Our Collaboration solution that takes active learning and sharing material to new levels. Our BRAVIA Professional Displays that enable dynamic digital signage. And our desktop and portable projectors that provide uncompromised quality with low ownership costs for businesses and education. Whatever size the organisation, whatever the requirement, we have the perfect solution. And always with our full support. For full product features and specifications please visit pro.sony.eu. Green management settings: Schedule on and off times to save energy when devices aren’t required, for example at evenings or weekends. Complete device management solution: Managing, scheduling and monitoring content that’s displayed on all your networked screens and projectors can be a challenge for any organisation. TEOS Manage can be controlled by just one person, reducing the need for extra staff and giving a single point of contact for -

Electronic Displays Technical Report

California Energy Commission, DOCKETED 12-AAER-2A, TN 72475, JAN 08 2014. Electronic Displays Technical Report - Engineering and Cost Analysis. In Support of the Codes and Standards Enhancement (CASE) Initiative For PY 2013: Title 20 Standards Development. Supplemental to CASE Report submitted on July 29, 2013. Docket #12-AAER-2A. January 8, 2014. Prepared for: Pacific Gas & Electric Company, Southern California Edison, San Diego Gas and Electric, Southern California Gas Company. Prepared by: Clancy Donnelly, Katherine Dayem, and Brendan Trimboli, Ecova. This report was prepared by the California Statewide Utility Codes and Standards Program and funded by the California utility customers under the auspices of the California Public Utilities Commission. Copyright 2014 Pacific Gas and Electric Company, Southern California Edison, Southern California Gas Company, San Diego Gas & Electric. All rights reserved, except that this document may be used, copied, and distributed without modification. Neither PG&E, SCE, SoCalGas, SDG&E, nor any of its employees makes any warranty, express or implied; or assumes any legal liability or responsibility for the accuracy, completeness or usefulness of any data, information, method, product, policy or process disclosed in this document; or represents that its use will not infringe any privately-owned rights including, but not limited to, patents, trademarks or copyrights. Table of Contents: 1 EXECUTIVE SUMMARY -

Mini-LED Vs OLED in Apple Products: Why You Should Be Excited!

Mini-LED vs OLED in Apple products: Why you should be excited! Technology is evolving, and so are the tools we use to interact with the world. Displays are an integral part of any device. And while OLED displays may be the best display around, this Mini-LED vs OLED comparison pits the underdog against the industry dominator. A growing number of reports suggest that Apple is planning to adopt a new Mini-LED display for future iPads and MacBooks. It got me thinking… Why isn’t Apple planning on using OLED instead? There’s a simple answer. Producing OLED displays isn’t cheap for bigger screens like the ones on the MacBook and the iPad. Plus, there are additional manufacturing complexities that deter Apple from using OLED in devices with a larger display. But since OLED is a hard choice in this case, there is an alternative that the Cupertino giant can use in its MacBook and iPad that closely compares to it. Here’s how Mini-LED stacks up against OLED in this extensive, in-depth comparison between the two display technologies. I’ll also explain why it’s better for Apple to use Mini-LED in MacBooks and iPads later on in this article. Let’s get to it! OLED has been around for some time now. Apple first used the display technology in its Apple Watch back in 2015. Then, in 2017, it came to iPhones with the iPhone X. Apple even uses an OLED display in its higher-end MacBook Pro models, in the form of a slim rectangular strip meant to replace regular function keys, known as the Touch Bar. -

Display Comparison: OLED Vs. IPS on Notebooks Why OLED?

Display Comparison: OLED vs. IPS on Notebooks. Welcome to the dark side. OLED displays: undeniably one of the greatest revolutions in the realm of display technology since the first LCD monitor. They lack a backlight, display perfect black and feature vivid colors as well as incredibly low response times. For the first time, the technology has made its way into notebooks. Till Schönborn, Tanja Hinum (translated by Bernie Pechlaner), 07/13/2016. For the original German article, see here. The technology isn't exactly new: OLED displays are based on light-emitting organic diodes and have been used for smartphones, tablets and TVs for a few years now. So far, notebooks have been the exception, which has technical reasons as well as financial ones. That's changing, however, as several notebook manufacturers - Lenovo, Alienware, and HP among them - have announced OLED notebooks for 2016. -

Citations Listed by Award Date

CITATIONS LISTED BY AWARD DATE

KEY: KFB - Karl Ferdinand Braun Prize; RJ - Jan Rajchman Prize; LBW - Lewis and Beatrice Winner Award; JG - Johann Gutenberg Prize; OTTO - Otto Schade Prize; Slottow - Slottow-Owaki Prize; SPREC - Special Recognition Award; Fellow - SID Fellow; FRD - Frances Rice Darne Memorial Award

| First Name | Last Name | Award | Year | Award Citation |
|---|---|---|---|---|
| Ruth | Davis | Fellow | 1963 | Fellow |
| James H. | Howard | Fellow | 1963 | Fellow |
| Anthony | Debons | Fellow | 1964 | Fellow |
| Rudolph L. | Kuehn | Fellow | 1965 | Fellow |
| Edith | Bardain | Fellow | 1966 | Fellow |
| William | Bethke | Fellow | 1966 | Fellow |
| Carlo | Crocetti | Fellow | 1966 | Fellow |
| Frances | Darne | Fellow | 1966 | Fellow |
| Harold | Luxenberg | Fellow | 1966 | Fellow |
| Petro | Vlahos | Fellow | 1966 | Fellow |
| William | Aiken | Fellow | 1967 | Fellow |
| Sid | Deutsch | Fellow | 1967 | Fellow |
| George | Dorion | Fellow | 1967 | Fellow |
| Solomon | Sherr | Fellow | 1967 | Fellow |
| Fordyce | Brown | Fellow | 1968 | Fellow |
| Robert | Carpenter | Fellow | 1968 | Fellow |
| Phillip | Damon | Fellow | 1968 | Fellow |
| Carl | Machover | Fellow | 1969 | Fellow |
| James | Redman | Fellow | 1969 | Fellow |
| Lou | Seeberger | Fellow | 1969 | Fellow |
| Leo | Beiser | Fellow | 1970 | Fellow |
| Nobuo John | Koda | Fellow | 1970 | Fellow |
| Bernard | Lechner | Fellow | 1970 | Fellow |
| Harry | Poole | Fellow | 1970 | Fellow |
| Bernard | Lechner | FRD | 1971 | Frances Rice Darne Memorial Award |
| Benjamin | Kazan | Fellow | 1971 | Fellow |
| Harold | Law | Fellow | 1971 | Fellow |
| Pierce | Siglin | Fellow | 1972 | Fellow |
| Malcolm | Ritchie | SPREC | 1972 | Special Recognition Award |
| Solomon | Sherr | SPREC | 1972 | Special Recognition Award |
| H. Gene | Slottow | FRD | 1973 | Frances Rice Darne Memorial Award |
| Irving | Reingold | Fellow | 1973 | Fellow |
| Norman | Lehrer | FRD | 1974 | Frances Rice Darne Memorial - |

Comparing Smartphone Displays Smartphone Displays Are Getting Sharper, Clearer, and More Scratch Resistant

Polarizing Plate and Liquid Crystal Display Device Comprising the Same

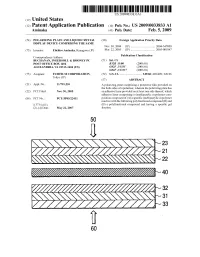

US 2009/0033833 A1. (19) United States. (12) Patent Application Publication, Aminaka. (10) Pub. No.: US 2009/0033833 A1. (43) Pub. Date: Feb. 5, 2009. (54) POLARIZING PLATE AND LIQUID CRYSTAL DISPLAY DEVICE COMPRISING THE SAME. (75) Inventor: Eiichiro Aminaka, Kanagawa (JP). Correspondence Address: BUCHANAN, INGERSOLL & ROONEY PC, POST OFFICE BOX 1404, ALEXANDRIA, VA 22313-1404 (US). (73) Assignee: FUJIFILM CORPORATION, Tokyo (JP). (21) Appl. No.: 11/791,226. (22) PCT Filed: Nov. 30, 2005. (86) PCT No.: PCT/JP05/22411; § 371 (c)(1), (2), (4) Date: May 22, 2007. (30) Foreign Application Priority Data: Nov. 30, 2004 (JP) 2004-347003; Mar. 22, 2005 (JP) 2005-081847. Publication Classification: (51) Int. Cl. B32B 33/00 (2006.01), G02F 1/1335 (2006.01), G02F 1/13357 (2006.01). (52) U.S. Cl. 349/68; 428/409; 349/96. (57) ABSTRACT: A polarizing plate comprising a protective film provided on the both sides of a polarizer, wherein the polarizing plate has an adhesive layer provided on at least one side thereof, which adhesive layer comprising a (meth)acrylic copolymer composition composed of (A) a specific (meth)acrylic copolymer reactive with the following polyfunctional compound (B) and (B) a polyfunctional compound and having a specific gel fraction. [Drawing sheets: FIG. 1, FIG. 2, FIG. 3] -

Does Panel Type Matter for LCD Monitors? a Study Examining the Effects of S-IPS, S-PVA, and TN Panels in Video Gaming and Movie Viewing

Does Panel Type Matter for LCD Monitors? A Study Examining the Effects of S-IPS, S-PVA, and TN Panels in Video Gaming and Movie Viewing. Ki Joon Kim (1) and S. Shyam Sundar (1, 2). (1) Department of Interaction Science, Sungkyunkwan University, Seoul, Korea 110-745. (2) Media Effects Research Laboratory, College of Communications, Pennsylvania State University, University Park, PA 16802. Abstract. As computer-based devices become the primary media via which users view movies and play interactive games, display technologies (e.g., LCD monitors) have focused increasingly on quality of video fidelity, with much debate surrounding the relative efficacy of different panel types of LCD monitors. A 3 (S-IPS panel vs. S-PVA panel vs. TN panel) x 2 (game vs. movie) between-subjects experiment was conducted to examine the effects of LCD panel type in facilitating regular viewing as well as enhanced interactive TV experiences. Data from the experiment showed that LCD panel and stimulus type as well as computer literacy were important factors affecting users’ viewing and interaction experiences. Limitations and implications for theory and ongoing research are discussed. Keywords: LCD panel, response rate, contrast ratio, viewing angle, computer literacy. 1 Introduction. Choosing an LCD monitor that best fits a user’s needs and preferences has become relatively difficult in recent years; a number of factors such as screen-size, brand reputation, price, and technical specifications (e.g., response rate, contrast ratio, and viewing angle) are offered as considerations when purchasing a monitor. Although screen-size has been perhaps the most dominant factor affecting consumers’ choice since the debut of LCD monitors, recent marketing emphasis on LCD panel types such as S-IPS, S-PVA, and TN has raised the question whether users should factor panel type into their decision-making. -

Digital Signage Information Display and Solutions

DIGITAL SIGNAGE INFORMATION DISPLAY AND SOLUTIONS, 2017 Spring Edition. Contents: 1. HIGHLIGHT. 2. LG SIGNAGE INTRODUCTIONS: OLED, ULTRA STRETCH, ULTRA HD, VIDEO-WALL, STANDARD SIGNAGE, TRANSPARENT, HIGH BRIGHTNESS, INTERACTIVE, SUPERSIGN TV, CONTENT PLAYER. 3. SPECIFICATIONS. LG STRENGTH IN DIGITAL SIGNAGE: HIGHLIGHTS. LEADING TECHNOLOGY: Take an exciting leap into the latest technologies and change the way that you reach and inform your customers. TRULY STATE OF THE ART, LG OLED SIGNAGE: LG OLED is a new display technology that overcomes the limits of current displays in terms of picture quality and design. Perfect Black: Pure black with self-lighting pixels. Perfect Color: Accurate, stable color reproduction. Perfect Viewing Angle: No color distortion from any viewing angle. Perfect Design: Future displays with free form factors. Key LG OLED technologies, OLED vs. LED/LCD: [diagram: OLED (no backlight unit) vs. LED/LCD (panel, color filter, backlight unit)]. 86” 58:9 ULTRA STRETCH: New experience with Ultra HD based on its unique 58:9 ratio. 58:9 Extended Wide Format (16:9 vs. 58:9). THE WORLD BEST CLASS VIDEO-WALL: World’s narrowest bezel* display delivers stunning high picture quality in a virtually seamless video wall. Borderless Design: 0.9 mm (Even Bezel); Bezel to Bezel: 1.8 mm. *Nemko (Norges Elektriske Materiellkontroll) Verification Letter Order No. 305449: This verification letter is issued for LG Electronics Co., Ltd. to confirm that LG has successfully met below BtB and AtA size after verified testing.