ETSI TR 102 479 V1.1.1 (2006-02) Technical Report

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

TS 102 261 V1.1.1 (2003-09) Technical Specification

ETSI TS 102 261 V1.1.1 (2003-09) Technical Specification Open Network Services and Architecture (ONSA); Abstract architecture and reference points definition; Mapping of functional architectures and requirements for NGN 2 ETSI TS 102 261 V1.1.1 (2003-09) Reference DTS/SPAN-140006 Keywords architecture, configuration, functional, internet, network, protocol, telephony, VoIP ETSI 650 Route des Lucioles F-06921 Sophia Antipolis Cedex - FRANCE Tel.: +33 4 92 94 42 00 Fax: +33 4 93 65 47 16 Siret N° 348 623 562 00017 - NAF 742 C Association à but non lucratif enregistrée à la Sous-Préfecture de Grasse (06) N° 7803/88 Important notice Individual copies of the present document can be downloaded from: http://www.etsi.org The present document may be made available in more than one electronic version or in print. In any case of existing or perceived difference in contents between such versions, the reference version is the Portable Document Format (PDF). In case of dispute, the reference shall be the printing on ETSI printers of the PDF version kept on a specific network drive within ETSI Secretariat. Users of the present document should be aware that the document may be subject to revision or change of status. Information on the current status of this and other ETSI documents is available at http://portal.etsi.org/tb/status/status.asp If you find errors in the present document, send your comment to: [email protected] Copyright Notification No part may be reproduced except as authorized by written permission. The copyright and the foregoing restriction extend to reproduction in all media. -

Etsi En 303 645 V2.1.1 (2020-06)

ETSI EN 303 645 V2.1.1 (2020-06) EUROPEAN STANDARD CYBER; Cyber Security for Consumer Internet of Things: Baseline Requirements 2 ETSI EN 303 645 V2.1.1 (2020-06) Reference REN/CYBER-0048 Keywords cybersecurity, IoT, privacy ETSI 650 Route des Lucioles F-06921 Sophia Antipolis Cedex - FRANCE Tel.: +33 4 92 94 42 00 Fax: +33 4 93 65 47 16 Siret N° 348 623 562 00017 - NAF 742 C Association à but non lucratif enregistrée à la Sous-Préfecture de Grasse (06) N° 7803/88 Important notice The present document can be downloaded from: http://www.etsi.org/standards-search The present document may be made available in electronic versions and/or in print. The content of any electronic and/or print versions of the present document shall not be modified without the prior written authorization of ETSI. In case of any existing or perceived difference in contents between such versions and/or in print, the prevailing version of an ETSI deliverable is the one made publicly available in PDF format at www.etsi.org/deliver. Users of the present document should be aware that the document may be subject to revision or change of status. Information on the current status of this and other ETSI documents is available at https://portal.etsi.org/TB/ETSIDeliverableStatus.aspx If you find errors in the present document, please send your comment to one of the following services: https://portal.etsi.org/People/CommiteeSupportStaff.aspx Copyright Notification No part may be reproduced or utilized in any form or by any means, electronic or mechanical, including photocopying and microfilm except as authorized by written permission of ETSI. -

Back to the Future: GSM

JULY 2021 JULY THE INTERVIEW Stephen Temple GSM story 30 years later. p.4-5 TECH HIGHLIGHTS A Security Token for GSM. p.20-21 IN THE SPOTLIGHT Back to the future: GSM. p.13-16 BACK TO THE FUTURE: GSM Editorial The Interview Stephen Temple, GSM story and its legacy 30 years later. P4/5 Meet the New Standards People This edition pays P6/7 tribute to the big New Member bang that GSM Interview brought about in Tommi Flink, CEO of Magister Solution. ICT. P8/9 CCSA Interview Zemin Yang, On 1 July 1991 the former Finnish prime within the GSM committee, reminds us Former Secretary General minister Harri Holkeri made the world’s of the SMS invention, yet another form of of CCSA. first GSM call, calling the deputy mayor communication GSM enabled. of the city of Tampere. That was 30 years P10/11 In the Spotlight section, I outline why ago. without GSM, we would probably not This “back to the future” July edition pays be talking about 5G or 6G as we do In the Spotlight tribute to the big bang that GSM brought today and how this mobile system in ICT. Of course, GSM was one of the set the foundations of the current Back to the future: GSM first success stories of ETSI and one of international standardization principles P13-16 the reasons why we were set up in the and its ecosystem. Zemin Yang, the first place, but more importantly it was former Secretary General of China going to benefit the world’s population, Communications Standards Association Tech Highlights facilitating global access to mobile (CCSA), granted us an exclusive communications. -

WELCOME to the WORLD of ETSI an Overview of the European Telecommunication Standards Institute

WELCOME TO THE WORLD OF ETSI An overview of the European Telecommunication Standards Institute © ETSI 2016. All rights reserved © ETSI 2016. All rights reserved European roots, global outreach ETSI is a world-leading standards developing organization for Information and Communication Technologies (ICT) Founded initially to serve European needs, ETSI has become highly- respected as a producer of technical standards for worldwide use © ETSI 2016. All rights reserved Products & services Technical specifications and standards with global application Support to industry and European regulation Specification & testing methodologies Interoperability testing © ETSI 2016. All rights reserved Membership Over 800 companies, big and small, from 66 countries on 5 continents Manufacturers, network operators, service and content providers, national administrations, ministries, universities, research bodies, consultancies, user organizations A powerful and dynamic mix of skills, resources and ambitions © ETSI 2016. All rights reserved Independence Independent of all other organizations and structures Respected for neutrality and trustworthiness Esteemed for our world-leading Intellectual Property Rights (IPR) Policy © ETSI 2016. All rights reserved Collaboration Strategic collaboration with numerous global and regional standards-making organizations and industry groupings Formally recognized as a European Standards Organization, with a global perspective Contributing technical standards to support regulation Defining radio frequency requirements for -

Mobile Edge Computing a Key Technology Towards 5G

ETSI White Paper No. 11 Mobile Edge Computing A key technology towards 5G First edition – September 2015 ISBN No. 979-10-92620-08-5 Authors: Yun Chao Hu, Milan Patel, Dario Sabella, Nurit Sprecher and Valerie Young ETSI (European Telecommunications Standards Institute) 06921 Sophia Antipolis CEDEX, France Tel +33 4 92 94 42 00 [email protected] www.etsi.org About the authors Yun Chao Hu Contributor, Huawei, Vice Chair ETSI MEC ISG, Chair MEC IEG Working Group Milan Patel Contributor, Huawei Dario Sabella Contributor, Telecom Italia; Vice-Chair MEC IEG Working Group Nurit Sprecher Contributor, Nokia; Chair ETSI MEC ISG Valerie Young Contributor, Intel Mobile Edge Computing - a key technology towards 5G 2 Contents About the authors 2 Contents 3 Introduction 4 Market Drivers 5 Business Value 6 Mobile Edge Computing Service Scenarios 7 General 7 Augmented Reality 8 Intelligent Video Acceleration 9 Connected Cars 9 Internet of Things Gateway 11 Deployment Scenarios 11 ETSI Industry Specification Group on Mobile Edge Computing 12 Proofs of Concept 13 Conclusions 14 References 15 Mobile Edge Computing - a key technology towards 5G 3 Introduction Mobile Edge Computing (MEC) is a new technology which is currently being standardized in an ETSI Industry Specification Group (ISG) of the same name. Mobile Edge Computing provides an IT service environment and cloud-computing capabilities at the edge of the mobile network, within the Radio Access Network (RAN) and in close proximity to mobile subscribers. The aim is to reduce latency, ensure highly efficient network operation and service delivery, and offer an improved user experience. Mobile Edge Computing is a natural development in the evolution of mobile base stations and the convergence of IT and telecommunications networking. -

GSM 05.01 TECHNICAL May 1996 SPECIFICATION Version 5.0.0

GSM GSM 05.01 TECHNICAL May 1996 SPECIFICATION Version 5.0.0 Source: ETSI TC-SMG Reference: TS/SMG-020501Q ICS: 33.060.50 Key words: Digital cellular telecommunications system, Global System for Mobile communications (GSM) Digital cellular telecommunications system (Phase 2+); Physical layer on the radio path; General description (GSM 05.01) ETSI European Telecommunications Standards Institute ETSI Secretariat Postal address: F-06921 Sophia Antipolis CEDEX - FRANCE Office address: 650 Route des Lucioles - Sophia Antipolis - Valbonne - FRANCE X.400: c=fr, a=atlas, p=etsi, s=secretariat - Internet: [email protected] * Tel.: +33 92 94 42 00 - Fax: +33 93 65 47 16 Copyright Notification: No part may be reproduced except as authorized by written permission. The copyright and the foregoing restriction extend to reproduction in all media. © European Telecommunications Standards Institute 1996. All rights reserved. Page 2 GSM 05.01 Version 5.0.0: May 1996 Whilst every care has been taken in the preparation and publication of this document, errors in content, typographical or otherwise, may occur. If you have comments concerning its accuracy, please write to "ETSI Editing and Committee Support Dept." at the address shown on the title page. Page 3 GSM 05.01 Version 5.0.0: May 1996 Contents Foreword .......................................................................................................................................................5 1 Scope ..................................................................................................................................................7 -

Improving Mobile IP Handover Latency on End-To-End TCP in UMTS/WCDMA Networks

Improving Mobile IP Handover Latency on End-to-End TCP in UMTS/WCDMA Networks Chee Kong LAU A thesis submitted in fulfilment of the requirements for the degree of Master of Engineering (Research) in Electrical Engineering School of Electrical Engineering and Telecommunications The University of New South Wales March, 2006 CERTIFICATE OF ORIGINALITY I hereby declare that this submission is my own work and to the best of my knowledge it contains no materials previously published or written by another person, nor material which to a substantial extent has been accepted for the award of any other degree or diploma at UNSW or any other educational institution, except where due acknowledgement is made in the thesis. Any contribution made to the research by others, with whom I have worked at UNSW or elsewhere, is explicitly acknowledged in the thesis. I also declare that the intellectual content of this thesis is the product of my own work, except to the extent that assistance from others in the project's design and conception or in style, presentation and linguistic expression is acknowledged. (Signed)_________________________________ i Dedication To: My girl-friend (Jeslyn) for her love and caring, my parents for their endurance, and to my friends and colleagues for their understanding; because while doing this thesis project I did not manage to accompany them. And Lord Buddha for His great wisdom and compassion, and showing me the actual path to liberation. For this, I take refuge in the Buddha, the Dharma, and the Sangha. ii Acknowledgement There are too many people for their dedications and contributions to make this thesis work a reality; without whom some of the contents of this thesis would not have been realised. -

ITU-T Security Initiatives

International Telecommunication Union ITU-T Network Security Initiatives Mike Harrop Rapporteur SG17 Q4 ETSI Security Workshop January 2007 Overview of Presentation ITU-T o Show the context of ITU-T security standards activities o Highlight some of key areas of focus o Report on some of the results being achieved ITU-T Context of ITU-T security standards work High Level Security Drivers ITU-T o ITU Plenipotentiary Conference (PP-02) ¾ Intensify efforts on security o World Telecommunications Standardization Assembly (WTSA-04) ¾ Security robustness of protocols ¾ Combating/Countering spam o World Summit on the Information Society (WSIS- 05) ¾ Cyber security ITU-T Organizational Structure ITU-T WORLDWORLD T TEELLEECOMMUNICATIOCOMMUNICATIONN Workshops, STANDARDIZATIONSTANDARDIZATION ASSEMBL ASSEMBLYY Seminars, TETELLEECOMMUNICATIONCOMMUNICATION Symposia, … STANDARDIZATIONSTANDARDIZATION ADVISORYADVISORY GROUP GROUP IPR ad hoc STUDY GROUP STUDY GROUP STUDY GROUP STUDY GROUP STUDY GROUP STUDY GROUP WORKING WORKING WORKING PARTY PARTY PARTY Focus Groups Q Q Q Q Joint Rapporteurs Groups ITU-T Study Groups ITU-T work is divided up between Study Groups (SGs). ITU-T o SG 2: Operational aspects of service provision, networks and performance o SG 4: Telecommunication management o SG 5: Protection against electromagnetic environment effects o SG 6 Outside Plant and related indoor installations o SG 9 Integrated broadband cable networks and television and sound transmission o SG 11 Signaling requirements and protocols o SG 12 Performance and quality of service o SG 13 Next Generation Networks o SG 15: Optical and other transport networks o SG 16: Multimedia services, systems and terminals o SG 17: Security, languages and telecommunication software o SG 19: Mobile Telecommunications Networks Note: SG17 has overall security responsibility but almost all SGs have work with security implications and requirements. -

TR 101 112 V3.2.0 (1998-04) Technical Report

TR 101 112 V3.2.0 (1998-04) Technical Report Universal Mobile Telecommunications System (UMTS); Selection procedures for the choice of radio transmission technologies of the UMTS (UMTS 30.03 version 3.2.0) Universal Mobile Telecommunications System European Telecommunications Standards Institute UMTS 30.03 version 3.2.0 2 TR 101 112 V3.2.0 (1998-04) Reference RTR/SMG-023003UR1 (aao01ic4.PDF) Keywords ETSI, SMG-5, Selection of radio transmission technology, radio interface, UMTS ETSI Secretariat Postal address F-06921 Sophia Antipolis Cedex - FRANCE Office address 650 Route des Lucioles - Sophia Antipolis Valbonne - FRANCE Tel.: +33 4 92 94 42 00 Fax: +33 4 93 65 47 16 Siret N° 348 623 562 00017 - NAF 742 C Association à but non lucratif enregistrée à la Sous-Préfecture de Grasse (06) N° 7803/88 Internet [email protected] http://www.etsi.fr http://www.etsi.org Copyright Notification No part may be reproduced except as authorized by written permission. The copyright and the foregoing restriction extend to reproduction in all media. © European Telecommunications Standards Institute 1998. All rights reserved. UMTS 30.03 version 3.2.0 3 TR 101 112 V3.2.0 (1998-04) Contents Intellectual Property Rights................................................................................................................................5 Foreword ............................................................................................................................................................5 Introduction ........................................................................................................................................................6 -

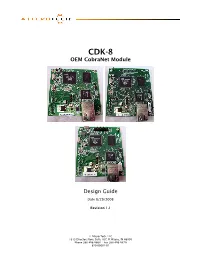

OEM Cobranet Module Design Guide

CDK-8 OEM CobraNet Module Design Guide Date 8/29/2008 Revision 1.1 © Attero Tech, LLC 1315 Directors Row, Suite 107, Ft Wayne, IN 46808 Phone 260-496-9668 • Fax 260-496-9879 620-00001-01 CDK-8 Design Guide Contents 1 – Overview .....................................................................................................................................................................................................................................2 1.1 – Notes on Modules .................................................................................................................................................... 2 2 – Digital Audio Interface Connectivity...........................................................................................................................................................................3 2.1 – Pin Descriptions ....................................................................................................................................................... 3 2.1.1 - Audio clocks ..................................................................................................................................................... 3 2.1.2 – Digital audio..................................................................................................................................................... 3 2.1.3 – Serial bridge ..................................................................................................................................................... 4 2.1.4 – Control............................................................................................................................................................ -

Public Address System Network Design Considerations

AtlasIED APPLICATION NOTE Public Address System Network Design Considerations Background AtlasIED provides network based Public Address Systems (PAS) that are deployed on a wide variety of networks at end user facilities worldwide. As such, a primary factor, directly impacting the reliability of the PAS, is a properly configured, reliable, well-performing network on which the PAS resides/functions. AtlasIED relies solely upon the end user’s network owner/manager for the design, provision, configuration and maintenance of the network, in a manner that enables proper PAS functionability/functionality. Should the network on which the PAS resides be improperly designed, configured, maintained, malfunctions or undergoes changes or modifications, impacts to the reliability, functionality or stability of the PAS can be expected, resulting in system anomalies that are outside the control of AtlasIED. In such instances, AtlasIED can be a resource to, and support the end user’s network owner/manager in diagnosing the problems and restoring the PAS to a fully functioning and reliable state. However, for network related issues, AtlasIED would look to the end user to recover the costs associated with such activities. While AtlasIED should not be expected to actually design a facility’s network, nor make formal recommendations on specific network equipment to use, this application note provides factors to consider – best practices – when designing a network for public address equipment, along with some wisdom and possible pitfalls that have been gleaned from past experiences in deploying large scale systems. This application note is divided into the following sections: n Local Network – The network that typically hosts one announcement controller and its peripherals. -

Aperformance Analysis for Umts Packet Switched

International Journal of Next-Generation Networks (IJNGN), Vol.2, No.1, M arch 2010 A PERFORMANCE ANALYSIS FOR UMTS PACKET SWITCHED NETWORK BASED ON MULTIVARIATE KPIS Ye Ouyang and M. Hosein Fallah, Ph.D., P.E. Howe School of Technology Management Stevens Institute of Technology, Hoboken, NJ, USA 07030 [email protected] [email protected] ABSTRACT Mobile data services are penetrating mobile markets rapidly. The mobile industry relies heavily on data service to replace the traditional voice services with the evolution of the wireless technology and market. A reliable packet service network is critical to the mobile operators to maintain their core competence in data service market. Furthermore, mobile operators need to develop effective operational models to manage the varying mix of voice, data and video traffic on a single network. Application of statistical models could prove to be an effective approach. This paper first introduces the architecture of Universal Mobile Telecommunications System (UMTS) packet switched (PS) network and then applies multivariate statistical analysis to Key Performance Indicators (KPI) monitored from network entities in UMTS PS network to guide the long term capacity planning for the network. The approach proposed in this paper could be helpful to mobile operators in operating and maintaining their 3G packet switched networks for the long run. KEYWORDS UMTS; Packet Switch; Multidimensional Scaling; Network Operations, Correspondence Analysis; GGSN; SGSN; Correlation Analysis. 1. INTRODUCTION Packet switched domain of 3G UMTS network serves all data related services for the mobile subscribers. Nowadays people have a certain expectation for their experience of mobile data services that the mobile wireless environment has not fully met since the speed at which they can access their packet switching services has been limited.