Week 3 Tradeoffs Choosing Codeword Lengths the Kraft-Mcmillan Inequalities Imply That to Make Some Codewords Shorter, We Will Have to Make Others Longer

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Compilers & Translator Writing Systems

Compilers & Translators Compilers & Translator Writing Systems Prof. R. Eigenmann ECE573, Fall 2005 http://www.ece.purdue.edu/~eigenman/ECE573 ECE573, Fall 2005 1 Compilers are Translators Fortran Machine code C Virtual machine code C++ Transformed source code Java translate Augmented source Text processing language code Low-level commands Command Language Semantic components Natural language ECE573, Fall 2005 2 ECE573, Fall 2005, R. Eigenmann 1 Compilers & Translators Compilers are Increasingly Important Specification languages Increasingly high level user interfaces for ↑ specifying a computer problem/solution High-level languages ↑ Assembly languages The compiler is the translator between these two diverging ends Non-pipelined processors Pipelined processors Increasingly complex machines Speculative processors Worldwide “Grid” ECE573, Fall 2005 3 Assembly code and Assemblers assembly machine Compiler code Assembler code Assemblers are often used at the compiler back-end. Assemblers are low-level translators. They are machine-specific, and perform mostly 1:1 translation between mnemonics and machine code, except: – symbolic names for storage locations • program locations (branch, subroutine calls) • variable names – macros ECE573, Fall 2005 4 ECE573, Fall 2005, R. Eigenmann 2 Compilers & Translators Interpreters “Execute” the source language directly. Interpreters directly produce the result of a computation, whereas compilers produce executable code that can produce this result. Each language construct executes by invoking a subroutine of the interpreter, rather than a machine instruction. Examples of interpreters? ECE573, Fall 2005 5 Properties of Interpreters “execution” is immediate elaborate error checking is possible bookkeeping is possible. E.g. for garbage collection can change program on-the-fly. E.g., switch libraries, dynamic change of data types machine independence. -

Chapter 2 Basics of Scanning And

Chapter 2 Basics of Scanning and Conventional Programming in Java In this chapter, we will introduce you to an initial set of Java features, the equivalent of which you should have seen in your CS-1 class; the separation of problem, representation, algorithm and program – four concepts you have probably seen in your CS-1 class; style rules with which you are probably familiar, and scanning - a general class of problems we see in both computer science and other fields. Each chapter is associated with an animating recorded PowerPoint presentation and a YouTube video created from the presentation. It is meant to be a transcript of the associated presentation that contains little graphics and thus can be read even on a small device. You should refer to the associated material if you feel the need for a different instruction medium. Also associated with each chapter is hyperlinked code examples presented here. References to previously presented code modules are links that can be traversed to remind you of the details. The resources for this chapter are: PowerPoint Presentation YouTube Video Code Examples Algorithms and Representation Four concepts we explicitly or implicitly encounter while programming are problems, representations, algorithms and programs. Programs, of course, are instructions executed by the computer. Problems are what we try to solve when we write programs. Usually we do not go directly from problems to programs. Two intermediate steps are creating algorithms and identifying representations. Algorithms are sequences of steps to solve problems. So are programs. Thus, all programs are algorithms but the reverse is not true. -

Scripting: Higher- Level Programming for the 21St Century

. John K. Ousterhout Sun Microsystems Laboratories Scripting: Higher- Cybersquare Level Programming for the 21st Century Increases in computer speed and changes in the application mix are making scripting languages more and more important for the applications of the future. Scripting languages differ from system programming languages in that they are designed for “gluing” applications together. They use typeless approaches to achieve a higher level of programming and more rapid application development than system programming languages. or the past 15 years, a fundamental change has been ated with system programming languages and glued Foccurring in the way people write computer programs. together with scripting languages. However, several The change is a transition from system programming recent trends, such as faster machines, better script- languages such as C or C++ to scripting languages such ing languages, the increasing importance of graphical as Perl or Tcl. Although many people are participat- user interfaces (GUIs) and component architectures, ing in the change, few realize that the change is occur- and the growth of the Internet, have greatly expanded ring and even fewer know why it is happening. This the applicability of scripting languages. These trends article explains why scripting languages will handle will continue over the next decade, with more and many of the programming tasks in the next century more new applications written entirely in scripting better than system programming languages. languages and system programming -

Information Content and Error Analysis

43 INFORMATION CONTENT AND ERROR ANALYSIS Clive D Rodgers Atmospheric, Oceanic and Planetary Physics University of Oxford ESA Advanced Atmospheric Training Course September 15th – 20th, 2008 44 INFORMATION CONTENT OF A MEASUREMENT Information in a general qualitative sense: Conceptually, what does y tell you about x? We need to answer this to determine if a conceptual instrument design actually works • to optimise designs • Use the linear problem for simplicity to illustrate the ideas. y = Kx + ! 45 SHANNON INFORMATION The Shannon information content of a measurement of x is the change in the entropy of the • probability density function describing our knowledge of x. Entropy is defined by: • S P = P (x) log(P (x)/M (x))dx { } − Z M(x) is a measure function. We will take it to be constant. Compare this with the statistical mechanics definition of entropy: • S = k p ln p − i i i X P (x)dx corresponds to pi. 1/M (x) is a kind of scale for dx. The Shannon information content of a measurement is the change in entropy between the p.d.f. • before, P (x), and the p.d.f. after, P (x y), the measurement: | H = S P (x) S P (x y) { }− { | } What does this all mean? 46 ENTROPY OF A BOXCAR PDF Consider a uniform p.d.f in one dimension, constant in (0,a): P (x)=1/a 0 <x<a and zero outside.The entropy is given by a 1 1 S = ln dx = ln a − a a Z0 „ « Similarly, the entropy of any constant pdf in a finite volume V of arbitrary shape is: 1 1 S = ln dv = ln V − V V ZV „ « i.e the entropy is the log of the volume of state space occupied by the p.d.f. -

Chapter 4 Information Theory

Chapter Information Theory Intro duction This lecture covers entropy joint entropy mutual information and minimum descrip tion length See the texts by Cover and Mackay for a more comprehensive treatment Measures of Information Information on a computer is represented by binary bit strings Decimal numb ers can b e represented using the following enco ding The p osition of the binary digit 3 2 1 0 Bit Bit Bit Bit Decimal Table Binary encoding indicates its decimal equivalent such that if there are N bits the ith bit represents N i the decimal numb er Bit is referred to as the most signicant bit and bit N as the least signicant bit To enco de M dierent messages requires log M bits 2 Signal Pro cessing Course WD Penny April Entropy The table b elow shows the probability of o ccurrence px to two decimal places of i selected letters x in the English alphab et These statistics were taken from Mackays i b o ok on Information Theory The table also shows the information content of a x px hx i i i a e j q t z Table Probability and Information content of letters letter hx log i px i which is a measure of surprise if we had to guess what a randomly chosen letter of the English alphab et was going to b e wed say it was an A E T or other frequently o ccuring letter If it turned out to b e a Z wed b e surprised The letter E is so common that it is unusual to nd a sentence without one An exception is the page novel Gadsby by Ernest Vincent Wright in which -

Shannon Entropy and Kolmogorov Complexity

Information and Computation: Shannon Entropy and Kolmogorov Complexity Satyadev Nandakumar Department of Computer Science. IIT Kanpur October 19, 2016 This measures the average uncertainty of X in terms of the number of bits. Shannon Entropy Definition Let X be a random variable taking finitely many values, and P be its probability distribution. The Shannon Entropy of X is X 1 H(X ) = p(i) log : 2 p(i) i2X Shannon Entropy Definition Let X be a random variable taking finitely many values, and P be its probability distribution. The Shannon Entropy of X is X 1 H(X ) = p(i) log : 2 p(i) i2X This measures the average uncertainty of X in terms of the number of bits. The Triad Figure: Claude Shannon Figure: A. N. Kolmogorov Figure: Alan Turing Just Electrical Engineering \Shannon's contribution to pure mathematics was denied immediate recognition. I can recall now that even at the International Mathematical Congress, Amsterdam, 1954, my American colleagues in probability seemed rather doubtful about my allegedly exaggerated interest in Shannon's work, as they believed it consisted more of techniques than of mathematics itself. However, Shannon did not provide rigorous mathematical justification of the complicated cases and left it all to his followers. Still his mathematical intuition is amazingly correct." A. N. Kolmogorov, as quoted in [Shi89]. Kolmogorov and Entropy Kolmogorov's later work was fundamentally influenced by Shannon's. 1 Foundations: Kolmogorov Complexity - using the theory of algorithms to give a combinatorial interpretation of Shannon Entropy. 2 Analogy: Kolmogorov-Sinai Entropy, the only finitely-observable isomorphism-invariant property of dynamical systems. -

Understanding Shannon's Entropy Metric for Information

Understanding Shannon's Entropy metric for Information Sriram Vajapeyam [email protected] 24 March 2014 1. Overview Shannon's metric of "Entropy" of information is a foundational concept of information theory [1, 2]. Here is an intuitive way of understanding, remembering, and/or reconstructing Shannon's Entropy metric for information. Conceptually, information can be thought of as being stored in or transmitted as variables that can take on different values. A variable can be thought of as a unit of storage that can take on, at different times, one of several different specified values, following some process for taking on those values. Informally, we get information from a variable by looking at its value, just as we get information from an email by reading its contents. In the case of the variable, the information is about the process behind the variable. The entropy of a variable is the "amount of information" contained in the variable. This amount is determined not just by the number of different values the variable can take on, just as the information in an email is quantified not just by the number of words in the email or the different possible words in the language of the email. Informally, the amount of information in an email is proportional to the amount of “surprise” its reading causes. For example, if an email is simply a repeat of an earlier email, then it is not informative at all. On the other hand, if say the email reveals the outcome of a cliff-hanger election, then it is highly informative. -

The Future of DNA Data Storage the Future of DNA Data Storage

The Future of DNA Data Storage The Future of DNA Data Storage September 2018 A POTOMAC INSTITUTE FOR POLICY STUDIES REPORT AC INST M IT O U T B T The Future O E P F O G S R IE of DNA P D O U Data LICY ST Storage September 2018 NOTICE: This report is a product of the Potomac Institute for Policy Studies. The conclusions of this report are our own, and do not necessarily represent the views of our sponsors or participants. Many thanks to the Potomac Institute staff and experts who reviewed and provided comments on this report. © 2018 Potomac Institute for Policy Studies Cover image: Alex Taliesen POTOMAC INSTITUTE FOR POLICY STUDIES 901 North Stuart St., Suite 1200 | Arlington, VA 22203 | 703-525-0770 | www.potomacinstitute.org CONTENTS EXECUTIVE SUMMARY 4 Findings 5 BACKGROUND 7 Data Storage Crisis 7 DNA as a Data Storage Medium 9 Advantages 10 History 11 CURRENT STATE OF DNA DATA STORAGE 13 Technology of DNA Data Storage 13 Writing Data to DNA 13 Reading Data from DNA 18 Key Players in DNA Data Storage 20 Academia 20 Research Consortium 21 Industry 21 Start-ups 21 Government 22 FORECAST OF DNA DATA STORAGE 23 DNA Synthesis Cost Forecast 23 Forecast for DNA Data Storage Tech Advancement 28 Increasing Data Storage Density in DNA 29 Advanced Coding Schemes 29 DNA Sequencing Methods 30 DNA Data Retrieval 31 CONCLUSIONS 32 ENDNOTES 33 Executive Summary The demand for digital data storage is currently has been developed to support applications in outpacing the world’s storage capabilities, and the life sciences industry and not for data storage the gap is widening as the amount of digital purposes. -

How Do You Know Your Search Algorithm and Code Are Correct?

Proceedings of the Seventh Annual Symposium on Combinatorial Search (SoCS 2014) How Do You Know Your Search Algorithm and Code Are Correct? Richard E. Korf Computer Science Department University of California, Los Angeles Los Angeles, CA 90095 [email protected] Abstract Is a Given Solution Correct? Algorithm design and implementation are notoriously The first question to ask of a search algorithm is whether the error-prone. As researchers, it is incumbent upon us to candidate solutions it returns are valid solutions. The algo- maximize the probability that our algorithms, their im- rithm should output each solution, and a separate program plementations, and the results we report are correct. In should check its correctness. For any problem in NP, check- this position paper, I argue that the main technique for ing candidate solutions can be done in polynomial time. doing this is confirmation of results from multiple in- dependent sources, and provide a number of concrete Is a Given Solution Optimal? suggestions for how to achieve this in the context of combinatorial search algorithms. Next we consider whether the solutions returned are opti- mal. In most cases, there are multiple very different algo- rithms that compute optimal solutions, starting with sim- Introduction and Overview ple brute-force algorithms, and progressing through increas- Combinatorial search results can be theoretical or experi- ingly complex and more efficient algorithms. Thus, one can mental. Theoretical results often consist of correctness, com- compare the solution costs returned by the different algo- pleteness, the quality of solutions returned, and asymptotic rithms, which should all be the same. -

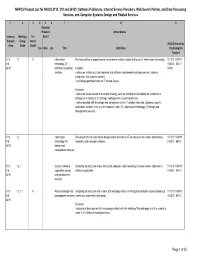

NAPCS Product List for NAICS 5112, 518 and 54151: Software

NAPCS Product List for NAICS 5112, 518 and 54151: Software Publishers, Internet Service Providers, Web Search Portals, and Data Processing Services, and Computer Systems Design and Related Services 1 2 3 456 7 8 9 National Product United States Industry Working Tri- Detail Subject Group lateral NAICS Industries Area Code Detail Can Méx US Title Definition Producing the Product 5112 1.1 X Information Providing advice or expert opinion on technical matters related to the use of information technology. 511210 518111 518 technology (IT) 518210 54151 54151 technical consulting Includes: 54161 services • advice on matters such as hardware and software requirements and procurement, systems integration, and systems security. • providing expert testimony on IT related issues. Excludes: • advice on issues related to business strategy, such as advising on developing an e-commerce strategy, is in product 2.3, Strategic management consulting services. • advice bundled with the design and development of an IT solution (web site, database, specific application, network, etc.) is in the products under 1.2, Information technology (IT) design and development services. 5112 1.2 Information Providing technical expertise to design and/or develop an IT solution such as custom applications, 511210 518111 518 technology (IT) networks, and computer systems. 518210 54151 54151 design and development services 5112 1.2.1 Custom software Designing the structure and/or writing the computer code necessary to create and/or implement a 511210 518111 518 application design software application. 518210 54151 54151 and development services 5112 1.2.1.1 X Web site design and Designing the structure and content of a web page and/or of writing the computer code necessary to 511210 518111 518 development services create and implement a web page. -

Information, Entropy, and the Motivation for Source Codes

MIT 6.02 DRAFT Lecture Notes Last update: September 13, 2012 CHAPTER 2 Information, Entropy, and the Motivation for Source Codes The theory of information developed by Claude Shannon (MIT SM ’37 & PhD ’40) in the late 1940s is one of the most impactful ideas of the past century, and has changed the theory and practice of many fields of technology. The development of communication systems and networks has benefited greatly from Shannon’s work. In this chapter, we will first develop the intution behind information and formally define it as a mathematical quantity, then connect it to a related property of data sources, entropy. These notions guide us to efficiently compress a data source before communicating (or storing) it, and recovering the original data without distortion at the receiver. A key under lying idea here is coding, or more precisely, source coding, which takes each “symbol” being produced by any source of data and associates it with a codeword, while achieving several desirable properties. (A message may be thought of as a sequence of symbols in some al phabet.) This mapping between input symbols and codewords is called a code. Our focus will be on lossless source coding techniques, where the recipient of any uncorrupted mes sage can recover the original message exactly (we deal with corrupted messages in later chapters). ⌅ 2.1 Information and Entropy One of Shannon’s brilliant insights, building on earlier work by Hartley, was to realize that regardless of the application and the semantics of the messages involved, a general definition of information is possible. -

Media Theory and Semiotics: Key Terms and Concepts Binary

Media Theory and Semiotics: Key Terms and Concepts Binary structures and semiotic square of oppositions Many systems of meaning are based on binary structures (masculine/ feminine; black/white; natural/artificial), two contrary conceptual categories that also entail or presuppose each other. Semiotic interpretation involves exposing the culturally arbitrary nature of this binary opposition and describing the deeper consequences of this structure throughout a culture. On the semiotic square and logical square of oppositions. Code A code is a learned rule for linking signs to their meanings. The term is used in various ways in media studies and semiotics. In communication studies, a message is often described as being "encoded" from the sender and then "decoded" by the receiver. The encoding process works on multiple levels. For semiotics, a code is the framework, a learned a shared conceptual connection at work in all uses of signs (language, visual). An easy example is seeing the kinds and levels of language use in anyone's language group. "English" is a convenient fiction for all the kinds of actual versions of the language. We have formal, edited, written English (which no one speaks), colloquial, everyday, regional English (regions in the US, UK, and around the world); social contexts for styles and specialized vocabularies (work, office, sports, home); ethnic group usage hybrids, and various kinds of slang (in-group, class-based, group-based, etc.). Moving among all these is called "code-switching." We know what they mean if we belong to the learned, rule-governed, shared-code group using one of these kinds and styles of language.