Unit 7 Using Available Corpora

Recommended publications

Student Research Workshop Associated with RANLP 2011, Pages 1–8, Hissar, Bulgaria, 13 September 2011

RANLPStud 2011: Proceedings of the Student Research Workshop associated with The 8th International Conference on Recent Advances in Natural Language Processing (RANLP 2011), 13 September 2011, Hissar, Bulgaria. ISBN 978-954-452-016-8. Designed and printed by INCOMA Ltd., Shoumen, Bulgaria. Preface: The Recent Advances in Natural Language Processing (RANLP) conference, already in its eighth year and ranked among the most influential NLP conferences, has always been a meeting venue for scientists coming from all over the world. Since 2009, we have given the younger and less experienced members of the NLP community an arena in which to share their results with an international audience. For this reason, following the first successful and highly competitive Student Research Workshop associated with the conference RANLP 2009, we are pleased to announce the second edition of the workshop, which is held during the main RANLP 2011 conference days on 13 September 2011. The aim of the workshop is to provide an excellent opportunity for students at all levels (Bachelor, Master, and Ph.D.) to present their work in progress or completed projects to an international research audience and receive feedback from senior researchers. We have received 31 high-quality submissions, among which 6 papers have been accepted as regular oral papers, and 18 as posters. Each submission has been reviewed by -

MASC: the Manually Annotated Sub-Corpus of American English

MASC: The Manually Annotated Sub-Corpus of American English. Nancy Ide*, Collin Baker**, Christiane Fellbaum†, Charles Fillmore**, Rebecca Passonneau†† (*Vassar College, Poughkeepsie, New York, USA; **International Computer Science Institute, Berkeley, California, USA; †Princeton University, Princeton, New Jersey, USA; ††Columbia University, New York, New York, USA). Abstract: To answer the critical need for sharable, reusable annotated resources with rich linguistic annotations, we are developing a Manually Annotated Sub-Corpus (MASC) including texts from diverse genres and manual annotations or manually-validated annotations for multiple levels, including WordNet senses and FrameNet frames and frame elements, both of which have become significant resources in the international computational linguistics community. To derive maximal benefit from the semantic information provided by these resources, the MASC will also include manually-validated shallow parses and named entities, which will enable linking WordNet senses and FrameNet frames within the same sentences into more complex semantic structures and, because named entities will often be the role fillers of FrameNet frames, enrich the semantic and pragmatic information derivable from the sub-corpus. All MASC annotations will be published with detailed inter-annotator agreement measures. The MASC and its annotations will be freely downloadable from the ANC website, thus providing -

Lexical Ambiguity • Syntactic Ambiguity • Semantic Ambiguity • Pragmatic Ambiguity

Welcome to the course! Introduction to Natural Language Processing (NLP) Professors: Marta Gatius Vila, Horacio Rodríguez Hontoria Hours per week: 2h theory + 1h laboratory Web page: http://www.cs.upc.edu/~gatius/engpln2017.html Main goal: Understand the fundamental concepts of NLP • Most well-known techniques and theories • Most relevant existing resources • Most relevant applications Content 1. Introduction to Language Processing 2. Applications 3. Language models 4. Morphology and lexicons 5. Syntactic processing 6. Semantic and pragmatic processing 7. Generation Assessment • Exams: mid-term exam (November); end-of-term exam (final exams period, all the course contents) • Development of 2 programs (groups of two or three students) Course grade = maximum(midterm exam * 0.15 + final exam * 0.45, final exam * 0.6) + assignments * 0.4 Related (or the same) disciplines: • Computational Linguistics, CL • Natural Language Processing, NLP • Linguistic Engineering, LE • Human Language Technology, HLT Linguistic Engineering (LE) • LE consists of the application of linguistic knowledge to the development of computer systems able to recognize, understand, interpret and generate human language in all its forms. • LE includes: • Formal models (representations of knowledge of language at the different levels) • Theories and algorithms • Techniques and tools • Resources (Lingware) • Applications Linguistic knowledge levels – Phonetics and phonology. Language models – Morphology: Meaningful components of words. Lexicon doors is plural – Syntax: Structural relationships between words. -

From CHILDES to Talkbank

From CHILDES to TalkBank. Brian MacWhinney, Carnegie Mellon University. MacWhinney, B. (2001). New developments in CHILDES. In A. Do, L. Domínguez & A. Johansen (Eds.), BUCLD 25: Proceedings of the 25th annual Boston University Conference on Language Development (pp. 458-468). Somerville, MA: Cascadilla. A similar article appeared as: MacWhinney, B. (2001). From CHILDES to TalkBank. In M. Almgren, A. Barreña, M. Ezeizaberrena, I. Idiazabal & B. MacWhinney (Eds.), Research on Child Language Acquisition (pp. 17-34). Somerville, MA: Cascadilla. Recent years have seen a phenomenal growth in computer power and connectivity. The computer on the desktop of the average academic researcher now has the power of room-size supercomputers of the 1980s. Using the Internet, we can connect in seconds to the other side of the world and transfer huge amounts of text, programs, audio and video. Our computers are equipped with programs that allow us to view, link, and modify this material without even having to think about programming. Nearly all of the major journals are now available in electronic form and the very nature of journals and publication is undergoing radical change. These new trends have led to dramatic advances in the methodology of science and engineering. However, the social and behavioral sciences have not shared fully in these advances. In large part, this is because the data used in the social sciences are not well-structured patterns of DNA sequences or atomic collisions in super colliders. Much of our data is based on the messy, ill-structured behaviors of humans as they participate in social interactions. Categorizing and coding these behaviors is an enormous task in itself. -

The General Regionally Annotated Corpus of Ukrainian (GRAC, uacorpus.org): Architecture and Functionality

The General Regionally Annotated Corpus of Ukrainian (GRAC, uacorpus.org): Architecture and Functionality. Maria Shvedova [ORCID 0000-0002-0759-1689], Kyiv National Linguistic University. Abstract. The paper presents the General Regionally Annotated Corpus of Ukrainian, which is publicly available (GRAC: uacorpus.org), searchable online and counts more than 400 million tokens, representing most genres of written texts. It also features regional annotation, i.e. about 50 percent of the texts are attributed with regard to the different regions of Ukraine or countries of the diaspora. If the author is known, the text is linked to their home region(s). The journalistic texts are annotated with regard to the place where the edition is published. This feature distinguishes the GRAC from the majority of general linguistic corpora. Keywords: Ukrainian language, corpus, diachronic evolution, regional variation. 1 Introduction Currently many major national languages have large universal corpora, known as “reference” corpora (cf. das Deutsche Referenzkorpus – DeReKo) or “national” corpora (a label dating back ultimately to the British National Corpus). These corpora are large, representative of different genres of written language, have a certain depth of (usually morphological and metatextual) annotation and can be used for many different linguistic purposes. The Ukrainian language lacks a publicly available linguistic corpus. Still, there is a need for a corpus in present-day linguistics. Researchers independently compile different corpora of Ukrainian for separate research purposes, with different sizes and functionality. As the community lacks a universal tool, a researcher may build their own corpus according to their needs. -

Conference Abstracts

EIGHTH INTERNATIONAL CONFERENCE ON LANGUAGE RESOURCES AND EVALUATION (LREC 2012). Held under the Patronage of Ms Neelie Kroes, Vice-President of the European Commission, Digital Agenda Commissioner. MAY 23-24-25, 2012, ISTANBUL LÜTFI KIRDAR CONVENTION & EXHIBITION CENTRE, ISTANBUL, TURKEY. CONFERENCE ABSTRACTS. Editors: Nicoletta Calzolari (Conference Chair), Khalid Choukri, Thierry Declerck, Mehmet Uğur Doğan, Bente Maegaard, Joseph Mariani, Asuncion Moreno, Jan Odijk, Stelios Piperidis. Assistant Editors: Hélène Mazo, Sara Goggi, Olivier Hamon. © ELRA – European Language Resources Association. All rights reserved. Title: LREC 2012 Conference Abstracts. Distributed by: ELRA – European Language Resources Association, 55-57, rue Brillat Savarin, 75013 Paris, France. Tel.: +33 1 43 13 33 33. Fax: +33 1 43 13 33 30. www.elra.info and www.elda.org. Copyright by the European Language Resources Association. ISBN 978-2-9517408-7-7, EAN 9782951740877. All rights reserved. No part of this book may be reproduced in any form without the prior permission of the European Language Resources Association. Introduction of the Conference Chair, Nicoletta Calzolari: I wish first to express to Ms Neelie Kroes, Vice-President of the European Commission, Digital Agenda Commissioner, the gratitude of the Program Committee and of all LREC participants for her Distinguished Patronage of LREC 2012. Even if every time I feel we have reached the top, this 8th LREC is continuing the tradition of breaking previous records: this edition we received 1013 submissions and have accepted 697 papers, after reviewing by the impressive number of 715 colleagues. -

Gold Standard Annotations for Preposition and Verb Sense With

Gold Standard Annotations for Preposition and Verb Sense with Semantic Role Labels in Adult-Child Interactions. Lori Moon (University of Illinois at Urbana-Champaign), Christos Christodoulopoulos (Amazon Research), Cynthia Fisher (University of Illinois at Urbana-Champaign), Sandra Franco (Intelligent Medical Objects, Northbrook, IL USA), Dan Roth (University of Pennsylvania). Abstract: This paper describes the augmentation of an existing corpus of child-directed speech. The resulting corpus is a gold-standard labeled corpus for supervised learning of semantic role labels in adult-child dialogues. Semantic role labeling (SRL) models assign semantic roles to sentence constituents, thus indicating who has done what to whom (and in what way). The current corpus is derived from the Adam files in the Brown corpus (Brown, 1973) of the CHILDES corpora, and augments the partial annotation described in Connor et al. (2010). It provides labels for both semantic arguments of verbs and semantic arguments of prepositions. The semantic role labels and senses of verbs follow Propbank guidelines (Kingsbury and Palmer, 2002; Gildea and Palmer, 2002; Palmer et al., 2005) and those for prepositions follow Srikumar and Roth (2011). The corpus was annotated by two annotators. Inter-annotator agreement is given separately for prepositions and verbs, and for adult speech and child speech. Overall, across child and adult samples, including verbs and prepositions, the κ score for sense is 72.6, for the number of semantic-role-bearing arguments, the κ score is 77.4, for identical semantic role labels on a given argument, the κ score is 91.1, for the span of semantic role labels, and the κ for agreement is 93.9. -

A Massively Parallel Corpus: the Bible in 100 Languages

Lang Resources & Evaluation DOI 10.1007/s10579-014-9287-y ORIGINAL PAPER A massively parallel corpus: the Bible in 100 languages Christos Christodouloupoulos • Mark Steedman © The Author(s) 2014. This article is published with open access at Springerlink.com Abstract We describe the creation of a massively parallel corpus based on 100 translations of the Bible. We discuss some of the difficulties in acquiring and processing the raw material as well as the potential of the Bible as a corpus for natural language processing. Finally we present a statistical analysis of the corpora collected and a detailed comparison between the English translation and other English corpora. Keywords Parallel corpus · Multilingual corpus · Comparative corpus linguistics 1 Introduction Parallel corpora are a valuable resource for linguistic research and natural language processing (NLP) applications. One of the main uses of the latter kind is as training material for statistical machine translation (SMT), where large amounts of aligned data are standardly used to learn word alignment models between the lexica of two languages (for example, in the GIZA++ system of Och and Ney 2003). Another interesting use of parallel corpora in NLP is projected learning of linguistic structure. In this approach, supervised data from a resource-rich language is used to guide the unsupervised learning algorithm in a target language. Although there are some techniques that do not require parallel texts (e.g. Cohen et al. 2011), the most successful models use sentence-aligned corpora (Yarowsky and Ngai 2001; Das and Petrov 2011). C. Christodouloupoulos (✉) Department of Computer Science, UIUC, 201 N. -

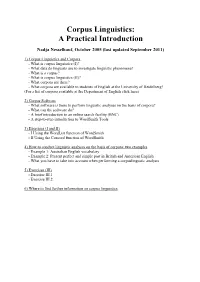

Corpus Linguistics: a Practical Introduction

Corpus Linguistics: A Practical Introduction Nadja Nesselhauf, October 2005 (last updated September 2011) 1) Corpus Linguistics and Corpora - What is corpus linguistics (I)? - What data do linguists use to investigate linguistic phenomena? - What is a corpus? - What is corpus linguistics (II)? - What corpora are there? - What corpora are available to students of English at the University of Heidelberg? (For a list of corpora available at the Department of English click here) 2) Corpus Software - What software is there to perform linguistic analyses on the basis of corpora? - What can the software do? - A brief introduction to an online search facility (BNC) - A step-by-step introduction to WordSmith Tools 3) Exercises (I and II) - I Using the WordList function of WordSmith - II Using the Concord function of WordSmith 4) How to conduct linguistic analyses on the basis of corpora: two examples - Example 1: Australian English vocabulary - Example 2: Present perfect and simple past in British and American English - What you have to take into account when performing a corpus-linguistic analysis 5) Exercises (III) - Exercise III.1 - Exercise III.2 6) Where to find further information on corpus linguistics 1) Corpus Linguistics and Corpora What is corpus linguistics (I)? Corpus linguistics is a method of carrying out linguistic analyses. As it can be used for the investigation of many kinds of linguistic questions and as it has been shown to have the potential to yield highly interesting, fundamental, and often surprising new insights about language, it has become one of the most widespread methods of linguistic investigation in recent years. -

Background and Context for CLASP

Background and Context for CLASP Nancy Ide, Vassar College The Situation Standards efforts have been on-going for over 20 years Interest and activity mainly in Europe in 90’s and early 2000’s Text Encoding Initiative (TEI) – 1987 Still ongoing, used mainly by humanities EAGLES/ISLE Developed standards for morpho-syntax, syntax, sub-categorization, etc. (links on CLASP wiki) Corpus Encoding Standard (now XCES - http://www.xces.org) Main Aspects • Harmonization of formats for linguistic data and annotations • Harmonization of descriptors in linguistic annotation • These two are often mixed, but need to deal with them separately (see CLASP wiki) Formats: The Past 20 Years 1987 TEI Myriad of formats 1994 MULTEXT, CES ~1996 XML 2000 ISO TC37 SC4 2001 LAF model introduced now LAF/GrAF, ISO standards Myriad of formats Actually… • Things are better now • XML use • Moves toward common models, especially in Europe • US community seeing the need for interoperability • Emergence of common processing platforms (GATE, UIMA) with underlying common models Resources 1990 • WordNet gains ground as a “standard” LR • Penn Treebank, Wall Street Journal Corpus World Wide Web • British National Corpus • EuroWordNet XML • Comlex • FrameNet • American National Corpus Semantic Web • Global WordNet • More FrameNets • SUMO • VerbNet • PropBank, NomBank • MASC present NLP software 1994 • MULTEXT > LT tools, LT XML 1995 • GATE (Sheffield) 1996 1998 • Alembic Workbench • ATLAS (NIST) 2003 • What happened to this? 200? • Callisto • UIMA Now: GATE -

Informatics 1: Data & Analysis

Informatics 1: Data & Analysis Lecture 12: Corpora Ian Stark School of Informatics The University of Edinburgh Friday 27 February 2015 Semester 2 Week 6 http://www.inf.ed.ac.uk/teaching/courses/inf1/da Student Survey Final Day: ESES, the Edinburgh Student Experience Survey http://www.ed.ac.uk/students/surveys Please log on to MyEd before 1 March to complete the survey. Help guide what we do at the University of Edinburgh, improving your future experience here and that of the students to follow. Ian Stark Inf1-DA / Lecture 12 2015-02-27 Lecture Plan XML: We start with technologies for modelling and querying semistructured data. Semistructured Data: Trees and XML; Schemas for structuring XML; Navigating and querying XML with XPath. Corpora: One particular kind of semistructured data is large bodies of written or spoken text: each one a corpus, plural corpora. Corpora: What they are and how to build them; Applications: corpus analysis and data extraction. Homework: Tutorial Exercises. Tutorial 5 exercises went online earlier this week. In these you use the xmllint command-line tool to check XML validity and run your own XPath queries. Reading: T. McEnery and A. Wilson. Corpus Linguistics. Second edition, Edinburgh University Press, 2001. Chapter 2: What is a corpus and what is in it? (§2.2.2 optional) Photocopied handout, also available from the ITO. Remote Access to DICE: Much coursework can be done on your own machines, but sometimes it’s important to be able to connect to and use DICE systems. -

A New Venture in Corpus-Based Lexicography: Towards a Dictionary of Academic English

A New Venture in Corpus-Based Lexicography: Towards a Dictionary of Academic English. Iztok Kosem and Ramesh Krishnamurthy. 1. Introduction This paper asserts the increasing importance of academic English in an increasingly Anglophone world, and looks at the differences between academic English and general English, especially in terms of vocabulary. The creation of wordlists has played an important role in trying to establish the academic English lexicon, but these wordlists are not based on appropriate data, or are implemented inappropriately. There is as yet no adequate dictionary of academic English, and this paper reports on new efforts at Aston University to create a suitable corpus on which such a dictionary could be based. 2. Academic English The increasing percentage of academic texts published in English (Swales, 1990; Graddol, 1997; Cargill and O’Connor, 2006) and the increasing numbers of students (both native and non-native speakers of English) at universities where English is the language of instruction (Graddol, 2006) testify to the important role of academic English. At the same time, research has shown that there is a significant difference between academic English and general English. The research has focussed mainly on vocabulary: the lexical differences between academic English and general English have been thoroughly discussed by scholars (Coxhead and Nation, 2001; Nation, 2001, 1990; Coxhead, 2000; Schmitt, 2000; Nation and Waring, 1997; Xue and Nation, 1984), and Coxhead and Nation (2001: 254–56) list the following four distinguishing features of academic vocabulary: “1. Academic vocabulary is common to a wide range of academic texts, and generally not so common in non-academic texts.