Math 304 (Spring 2010)


Recommended publications


The Multishift QR Algorithm. Part I: Maintaining Well-Focused Shifts and Level 3 Performance

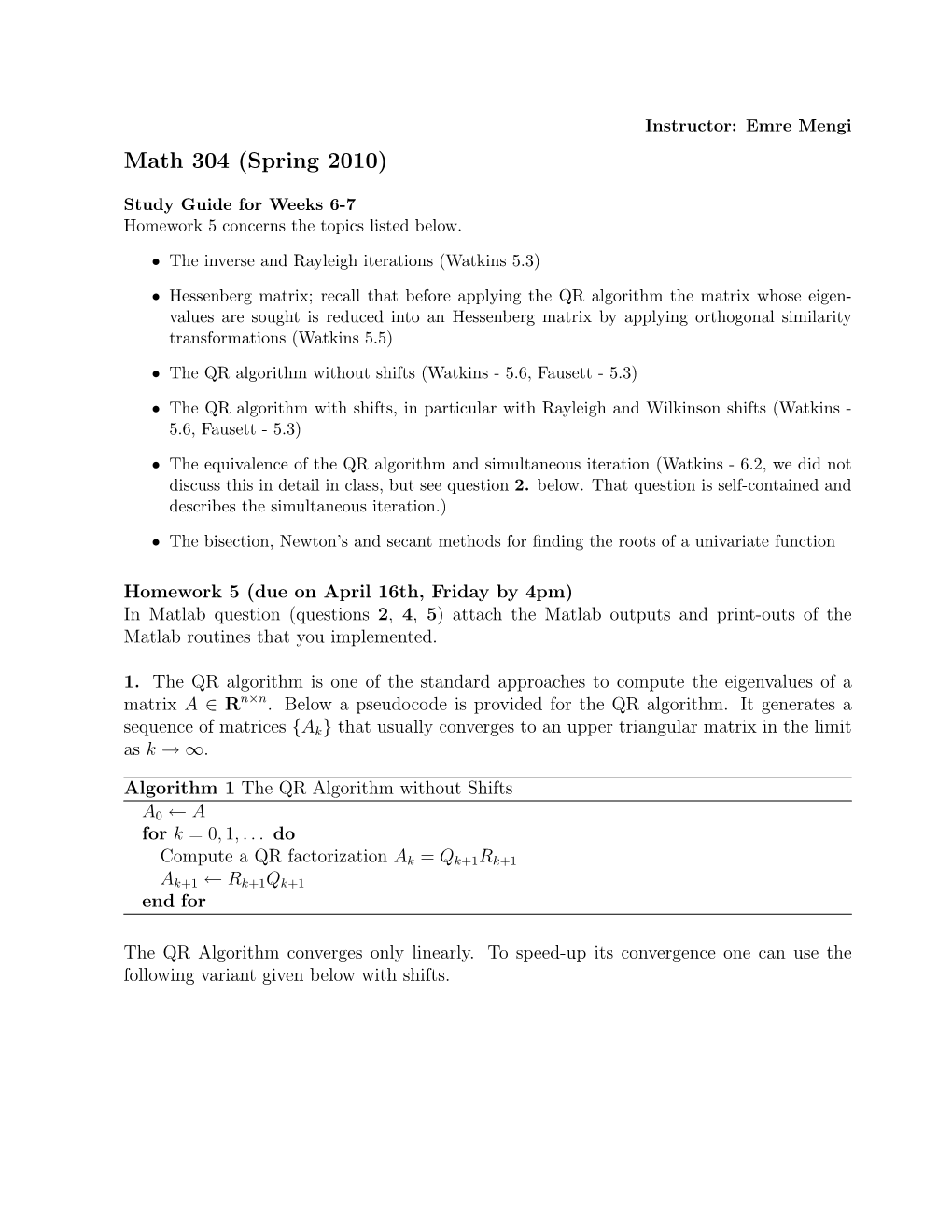

SIAM J. Matrix Anal. Appl., Vol. 23, No. 4, pp. 929–947. © 2002 Society for Industrial and Applied Mathematics. Karen Braman, Ralph Byers, and Roy Mathias.

Abstract. This paper presents a small-bulge multishift variation of the multishift QR algorithm that avoids the phenomenon of shift blurring, which retards convergence and limits the number of simultaneous shifts. It replaces the large diagonal bulge in the multishift QR sweep with a chain of many small bulges. The small-bulge multishift QR sweep admits nearly any number of simultaneous shifts (even hundreds) without adverse effects on the convergence rate. With enough simultaneous shifts, the small-bulge multishift QR algorithm takes advantage of the level 3 BLAS, which is a special advantage for computers with advanced architectures.

Key words. QR algorithm, implicit shifts, level 3 BLAS, eigenvalues, eigenvectors. AMS subject classifications. 65F15, 15A18. PII. S0895479801384573.

1. Introduction. This paper presents a small-bulge multishift variation of the multishift QR algorithm [4] that avoids the phenomenon of shift blurring, which retards convergence and limits the number of simultaneous shifts that can be used effectively. The small-bulge multishift QR algorithm replaces the large diagonal bulge in the multishift QR sweep with a chain of many small bulges. The small-bulge multishift QR sweep admits nearly any number of simultaneous shifts (even hundreds) without adverse effects on the convergence rate. It takes advantage of the level 3 BLAS by organizing nearly all the arithmetic work into matrix-matrix multiplies. This is particularly efficient on most modern computers and especially efficient on computers with advanced architectures.

The SVD Algorithm

Jim Lambers, CME 335, Spring Quarter 2010-11, Lecture 6 Notes: The SVD Algorithm.

Let $A$ be an $m \times n$ matrix. The Singular Value Decomposition (SVD) of $A$,

$$A = U \Sigma V^T,$$

where $U$ is $m \times m$ and orthogonal, $V$ is $n \times n$ and orthogonal, and $\Sigma$ is an $m \times n$ diagonal matrix with nonnegative diagonal entries

$$\sigma_1 \ge \sigma_2 \ge \cdots \ge \sigma_p, \quad p = \min\{m, n\},$$

known as the singular values of $A$, is an extremely useful decomposition that yields much information about $A$, including its range, null space, rank, and 2-norm condition number. We now discuss a practical algorithm for computing the SVD of $A$, due to Golub and Kahan.

Let $U$ and $V$ have column partitions

$$U = \begin{bmatrix} u_1 & \cdots & u_m \end{bmatrix}, \quad V = \begin{bmatrix} v_1 & \cdots & v_n \end{bmatrix}.$$

From the relations

$$A v_j = \sigma_j u_j, \quad A^T u_j = \sigma_j v_j, \quad j = 1, \ldots, p,$$

it follows that

$$A^T A v_j = \sigma_j^2 v_j.$$

That is, the squares of the singular values are the eigenvalues of $A^T A$, which is a symmetric matrix. It follows that one approach to computing the SVD of $A$ is to apply the symmetric QR algorithm to $A^T A$ to obtain a decomposition $A^T A = V \Sigma^T \Sigma V^T$. Then, the relations $A v_j = \sigma_j u_j$, $j = 1, \ldots, p$, can be used in conjunction with the QR factorization with column pivoting to obtain $U$. However, this approach is not the most practical, because of the expense and loss of information incurred from computing $A^T A$.

Instead, we can implicitly apply the symmetric QR algorithm to $A^T A$. As the first step of the symmetric QR algorithm is to use Householder reflections to reduce the matrix to tridiagonal form, we can use Householder reflections to instead reduce $A$ to upper bidiagonal form

$$\begin{bmatrix} d_1 & f_1 & & \\ & d_2 & f_2 & \\ & & \ddots & \ddots \end{bmatrix} \ \ldots$$
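The relation between the singular values of $A$ and the eigenvalues of $A^T A$ stated in these notes, and the "loss of information" from forming $A^T A$, can be checked numerically. The following is a minimal NumPy sketch, not part of the lecture notes; the matrix size, the random seed, and the variable names are assumptions chosen for illustration.

```python
import numpy as np

# Hypothetical test matrix: any full-rank m x n matrix would do.
rng = np.random.default_rng(0)
A = rng.standard_normal((6, 4))

# Singular values computed directly from A (returned in descending order).
sigma = np.linalg.svd(A, compute_uv=False)

# Eigenvalues of the symmetric matrix A^T A; eigvalsh returns them in
# ascending order, so reverse to match the ordering of sigma.
lam = np.linalg.eigvalsh(A.T @ A)[::-1]

# The eigenvalues of A^T A are the squared singular values of A.
squares_match = np.allclose(lam, sigma**2)

# One way to see the loss of information: forming A^T A squares the
# 2-norm condition number.
cond_A = np.linalg.cond(A)
cond_AtA = np.linalg.cond(A.T @ A)
cond_squared = np.isclose(cond_AtA, cond_A**2, rtol=1e-6)
```

For a badly conditioned $A$ the squared condition number means that small singular values can be lost entirely in floating point, which is the practical motivation for working with $A$ itself rather than $A^T A$.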

Communication-Optimal Parallel and Sequential QR and LU Factorizations

James Demmel, Laura Grigori, Mark Frederick Hoemmen, and Julien Langou. Electrical Engineering and Computer Sciences, University of California at Berkeley. Technical Report No. UCB/EECS-2008-89, http://www.eecs.berkeley.edu/Pubs/TechRpts/2008/EECS-2008-89.html, August 4, 2008. Copyright © 2008, by the author(s). All rights reserved.

Abstract. We present parallel and sequential dense QR factorization algorithms that are both optimal (up to polylogarithmic factors) in the amount of communication they perform, and just as stable as Householder QR. Our first algorithm, Tall Skinny QR (TSQR), factors $m \times n$ matrices in a one-dimensional (1-D) block cyclic row layout, and is optimized for $m \gg n$. Our second algorithm, CAQR (Communication-Avoiding QR), factors general rectangular matrices distributed in a two-dimensional block cyclic layout. It invokes TSQR for each block column factorization. The new algorithms are superior in both theory and practice. We have extended known lower bounds on communication for sequential and parallel matrix multiplication to provide latency lower bounds, and show these bounds apply to the LU and QR decompositions.
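The idea behind TSQR can be illustrated on a single machine: factor row blocks independently, keep only their small R factors, and factor the stacked R factors once more. The sketch below is an illustration under stated assumptions, not the report's algorithm: it uses contiguous row blocks instead of a block cyclic layout, a flat one-level reduction instead of a reduction tree, and the function name `tsqr_r` and block count are hypothetical.

```python
import numpy as np

def tsqr_r(A, num_blocks=4):
    """Return the R factor of a tall-skinny A via a one-level TSQR-style reduction."""
    # Split the rows into contiguous blocks (a stand-in for a 1-D row layout).
    blocks = np.array_split(A, num_blocks, axis=0)
    # Local step: each block is factored independently; only its R is kept.
    local_rs = [np.linalg.qr(block, mode="r") for block in blocks]
    # Reduction step: stack the small R factors and factor the stack once more.
    return np.linalg.qr(np.vstack(local_rs), mode="r")

rng = np.random.default_rng(1)
A = rng.standard_normal((1000, 5))   # m >> n
R_tsqr = tsqr_r(A)
R_ref = np.linalg.qr(A, mode="r")

# R is unique only up to the sign of each row, so compare absolute values.
same_r = np.allclose(np.abs(R_tsqr), np.abs(R_ref))
```

Only the $n \times n$ R factors move between the two steps, which is why the communication volume is small when $m \gg n$.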

Massively Parallel Poisson and QR Factorization Solvers

Computers Math. Applic. Vol. 31, No. 4/5, pp. 19-26, 1996. Pergamon. Copyright © 1996 Elsevier Science Ltd. Printed in Great Britain. All rights reserved. 0898-1221/96 $15.00 + 0.00. 0898-1221(95)00212-X.

M. LUCKÁ, Institute for Control Theory and Robotics, Slovak Academy of Sciences, Dúbravská cesta 9, 842 37 Bratislava, Slovak Republic, utrrluck@savba.sk. M. VAJTERSIC, Institute of Informatics, Slovak Academy of Sciences, Dúbravská cesta 9, 840 00 Bratislava, P.O. Box 56, Slovak Republic, kaifmava@savba.sk. E. VIKTORINOVA, Institute for Control Theory and Robotics, Slovak Academy of Sciences, Dúbravská cesta 9, 842 37 Bratislava, Slovak Republic, utrrevka@savba.sk.

Abstract. The paper brings a massively parallel Poisson solver for rectangle domain and parallel algorithms for computation of QR factorization of a dense matrix $A$ by means of Householder reflections and Givens rotations. The computer model under consideration is a SIMD mesh-connected toroidal $n \times n$ processor array. The Dirichlet problem is replaced by its finite-difference analog on an $M \times N$ ($M + 1$, $N$ are powers of two) grid. The algorithm is composed of parallel fast sine transform and cyclic odd-even reduction blocks and runs in a fully parallel fashion. Its computational complexity is $O(MN \log L / n^2)$, where $L = \max(M + 1, N)$. A parallel proposal of QR factorization by the Householder method zeros all subdiagonal elements in each column and updates all elements of the given submatrix in parallel. For the second method with Givens rotations, the parallel scheme of Sameh and Kuck was chosen where the disjoint rotations can be computed simultaneously.
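To make the Givens-rotation approach concrete, here is a minimal sequential sketch in NumPy. It is not the paper's parallel scheme: rotations are applied one at a time, whereas the Sameh and Kuck ordering mentioned in the abstract groups rotations on disjoint row pairs so they can run simultaneously. The function name `givens_qr` and the test matrix are assumptions for illustration.

```python
import numpy as np

def givens_qr(A):
    """QR factorization by Givens rotations, zeroing subdiagonal entries column by column."""
    R = np.array(A, dtype=float)
    m, n = R.shape
    Q = np.eye(m)
    for j in range(n):
        # Work upward from the bottom row: each rotation combines rows
        # i - 1 and i so that R[i, j] becomes zero.
        for i in range(m - 1, j, -1):
            a, b = R[i - 1, j], R[i, j]
            r = np.hypot(a, b)
            if r == 0.0:
                continue  # both entries are already zero
            c, s = a / r, b / r
            G = np.array([[c, s], [-s, c]])
            # Rotate the two rows of R and accumulate the transpose into Q,
            # which keeps the product Q @ R equal to A.
            R[[i - 1, i], :] = G @ R[[i - 1, i], :]
            Q[:, [i - 1, i]] = Q[:, [i - 1, i]] @ G.T
    return Q, R

rng = np.random.default_rng(4)
A = rng.standard_normal((5, 3))
Q, R = givens_qr(A)
```

Each rotation touches only two rows, so rotations acting on disjoint row pairs are independent; that independence is what a parallel ordering exploits.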

QR Decomposition on GPUs

Andrew Kerr, Dan Campbell, Mark Richards. Georgia Institute of Technology, Georgia Tech Research Institute. {andrew.kerr, dan.campbell}@gtri.gatech.edu, [email protected]

ABSTRACT. QR decomposition is a computationally intensive linear algebra operation that factors a matrix A into the product of a unitary matrix Q and upper triangular matrix R. Adaptive systems commonly employ QR decomposition to solve overdetermined least squares problems. Performance of QR decomposition is typically the crucial factor limiting problem sizes.

Graphics Processing Units (GPUs) are high-performance processors capable of executing hundreds of floating point operations in parallel. As commodity accelerators for 3D graphics, GPUs offer tremendous computational performance at relatively low costs. While GPUs are favorable to applications with much inherent parallelism requiring coarse-grain synchronization between processors, methods for efficiently utilizing GPUs for algorithms computing QR decomposition …

… LU, and QR decomposition, however, typically require fine-grain synchronization between processors and contain short serial routines as well as massively parallel operations. Achieving good utilization on a GPU requires a careful implementation informed by detailed understanding of the performance characteristics of the underlying architecture.

In this paper, we focus on QR decomposition in particular and discuss the suitability of several algorithms for implementation on GPUs. Then, we provide a detailed discussion and analysis of how blocked Householder reflections may be used to implement QR on CUDA-compatible GPUs supported by performance measurements. Our real-valued QR implementation achieves more than 10x speedup over the native QR algorithm in MATLAB and over 4x speedup beyond the Intel Math Kernel Library executing on a multi-core CPU, all in single-precision floating-point.
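The use of QR decomposition for overdetermined least squares problems, mentioned in the abstract, can be sketched on the CPU with NumPy. This is an illustration only and says nothing about the paper's GPU implementation; the problem size and variable names are assumptions.

```python
import numpy as np

rng = np.random.default_rng(2)
A = rng.standard_normal((50, 3))   # overdetermined: 50 equations, 3 unknowns
b = rng.standard_normal(50)

# Reduced QR: Q is 50 x 3 with orthonormal columns, R is 3 x 3 upper triangular.
Q, R = np.linalg.qr(A)

# Minimizing ||A x - b||_2 reduces to the small triangular system R x = Q^T b.
# np.linalg.solve is a general solver and does not exploit the triangular
# structure; it is used here only to keep the sketch dependency-free.
x_qr = np.linalg.solve(R, Q.T @ b)

# Cross-check against NumPy's built-in least squares solver.
x_ref = np.linalg.lstsq(A, b, rcond=None)[0]
agree = np.allclose(x_qr, x_ref)
```

Because $Q$ has orthonormal columns, this route avoids forming $A^T A$ and the conditioning penalty that comes with the normal equations.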

QR Factorization for the CELL Processor

QR Factorization for the CELL Processor – LAPACK Working Note 201. Jakub Kurzak, Department of Electrical Engineering and Computer Science, University of Tennessee. Jack Dongarra, Department of Electrical Engineering and Computer Science, University of Tennessee; Computer Science and Mathematics Division, Oak Ridge National Laboratory; School of Mathematics & School of Computer Science, University of Manchester.

ABSTRACT. The QR factorization is one of the most important operations in dense linear algebra, offering a numerically stable method for solving linear systems of equations including overdetermined and underdetermined systems. Classic implementation of the QR factorization suffers from performance limitations due to the use of matrix-vector type operations in the phase of panel factorization. These limitations can be remedied by using the idea of updating of QR factorization, rendering an algorithm, which is much more scalable and much more suitable for implementation on a multi-core processor. It is demonstrated how the potential of the CELL processor …

… ScaLAPACK [4] are good examples. These implementations work on square or rectangular submatrices in their inner loops, where operations are encapsulated in calls to Basic Linear Algebra Subroutines (BLAS) [5], with emphasis on expressing the computation as level 3 BLAS (matrix-matrix type) operations.

The fork-and-join parallelization model of these libraries has been identified as the main obstacle for achieving scalable performance on new processor architectures. The arrival of multi-core chips increased the demand for new algorithms, exposing much more thread-level parallelism of much finer granularity.

This paper presents an implementation of the QR factorization based on the idea of updating the QR factorization.

A Fast and Stable Parallel QR Algorithm for Symmetric Tridiagonal Matrices

Ilan Bar-On, Department of Computer Science, Technion, Haifa 32000, Israel, and Bruno Codenotti*, IEI-CNR, Via S. Maria 46, 56100 Pisa, Italy. Submitted by Daniel Hershkowitz. Linear Algebra and Its Applications 220:63-95 (1995). © Elsevier Science Inc., 1995, 655 Avenue of the Americas, New York, NY 10010. 0024-3795/95/$9.50. SSDI 0024-3795(93)00360-C.

*Partially supported by ESPRIT B.R.A. III of the EC under contract n. 9072 (project CEPPCOM).

ABSTRACT. We present a new, fast, and practical parallel algorithm for computing a few eigenvalues of a symmetric tridiagonal matrix by the explicit QR method. We present a new divide and conquer parallel algorithm which is fast and numerically stable. The algorithm is work efficient and of low communication overhead, and it can be used to solve very large problems infeasible by sequential methods.

1. INTRODUCTION. Very large band linear systems arise frequently in computational science, directly from finite difference and finite element schemes, and indirectly from applying the symmetric block Lanczos algorithm to sparse systems, or the symmetric block Householder transformation to dense systems. In this paper we present a new divide and conquer parallel algorithm for computing a few eigenvalues of a symmetric tridiagonal matrix by the explicit QR method. Our algorithm is fast and numerically stable. Moreover, the algorithm is work efficient, with low communication overhead, and it can be used in practice to solve very large problems on massively parallel systems, problems infeasible on sequential machines.

QR Factorization for the Cell Broadband Engine

Scientific Programming 17 (2009) 31–42. DOI 10.3233/SPR-2009-0268. IOS Press.

Jakub Kurzak (a,∗) and Jack Dongarra (a,b,c). (a) Department of Electrical Engineering and Computer Science, University of Tennessee, Knoxville, TN, USA. (b) Computer Science and Mathematics Division, Oak Ridge National Laboratory, Oak Ridge, TN, USA. (c) School of Mathematics and School of Computer Science, University of Manchester, Manchester, UK.

Abstract. The QR factorization is one of the most important operations in dense linear algebra, offering a numerically stable method for solving linear systems of equations including overdetermined and underdetermined systems. Modern implementations of the QR factorization, such as the one in the LAPACK library, suffer from performance limitations due to the use of matrix–vector type operations in the phase of panel factorization. These limitations can be remedied by using the idea of updating of QR factorization, rendering an algorithm, which is much more scalable and much more suitable for implementation on a multi-core processor. It is demonstrated how the potential of the cell broadband engine can be utilized to the fullest by employing the new algorithmic approach and successfully exploiting the capabilities of the chip in terms of single instruction multiple data parallelism, instruction level parallelism and thread-level parallelism.

Keywords: Cell broadband engine, multi-core, numerical algorithms, linear algebra, matrix factorization.

1. Introduction. State of the art, numerical linear algebra software utilizes block algorithms in order to exploit the memory …

… size of private memory associated with each computational core. Section 2 provides a brief discussion of related work.

The QR Algorithm Revisited

SIAM Review, Vol. 50, No. 1, pp. 133–145. © 2008 Society for Industrial and Applied Mathematics. David S. Watkins.

Abstract. The QR algorithm is still one of the most important methods for computing eigenvalues and eigenvectors of matrices. Most discussions of the QR algorithm begin with a very basic version and move by steps toward the versions of the algorithm that are actually used. This paper outlines a pedagogical path that leads directly to the implicit multishift QR algorithms that are used in practice, bypassing the basic QR algorithm completely.

Key words. eigenvalue, eigenvector, QR algorithm, Hessenberg form. AMS subject classifications. 65F15, 15A18. DOI. 10.1137/060659454.

1. Introduction. For many years the QR algorithm [7], [8], [9], [25] has been our most important tool for computing eigenvalues and eigenvectors of matrices. In recent years other algorithms have arisen [1], [4], [5], [6], [11], [12] that may supplant QR in the arena of symmetric eigenvalue problems. However, for the general, nonsymmetric eigenvalue problem, QR is still king. Moreover, the QR algorithm is a key auxiliary routine used by most algorithms for computing eigenpairs of large sparse matrices, for example, the implicitly restarted Arnoldi process [10], [13], [14] and the Krylov–Schur algorithm [15]. Early in my career I wrote an expository paper entitled “Understanding the QR algorithm,” which was published in SIAM Review in 1982 [16]. It was not my first publication, but it was my first paper in numerical linear algebra, and it marked a turning point in my career: I’ve been stuck on eigenvalue problems ever since.
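For orientation, the "very basic version" that the abstract says most expositions start from (and that the paper itself bypasses) is the unshifted iteration: factor $A_k = Q_k R_k$, then form $A_{k+1} = R_k Q_k$. The sketch below is a minimal NumPy illustration of that basic iteration only, not of the implicit multishift algorithms the paper develops; the function name, iteration count, and test matrix are assumptions.

```python
import numpy as np

def basic_qr_iteration(A, num_iters=200):
    """Unshifted QR iteration: factor A_k = Q_k R_k, then set A_{k+1} = R_k Q_k."""
    Ak = np.array(A, dtype=float)
    for _ in range(num_iters):
        Q, R = np.linalg.qr(Ak)
        Ak = R @ Q  # similar to the previous iterate, since R Q = Q^T (Q R) Q
    return Ak

# Hypothetical symmetric test matrix with eigenvalues 4, 2, 1 by construction.
rng = np.random.default_rng(3)
V, _ = np.linalg.qr(rng.standard_normal((3, 3)))
A = V @ np.diag([4.0, 2.0, 1.0]) @ V.T

Ak = basic_qr_iteration(A)
eigs = np.sort(np.diag(Ak))   # the diagonal of the limit holds the eigenvalues
```

Convergence of the off-diagonal entries is governed by ratios of neighbouring eigenvalue magnitudes, which is why practical codes first reduce to Hessenberg form and use shifts.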

A Nonlinear QR Algorithm for Banded Nonlinear Eigenvalue Problems

C. Kristopher Garrett, Computational Physics and Methods, Los Alamos National Laboratory. Zhaojun Bai, Department of Computer Science and Department of Mathematics, University of California, Davis. Ren-Cang Li, Department of Mathematics, University of Texas at Arlington.

A variation of Kublanovskaya’s nonlinear QR method for solving banded nonlinear eigenvalue problems is presented in this article. The new method is iterative and specifically designed for problems too large to use dense linear algebra techniques. For the unstructurally banded nonlinear eigenvalue problem, a new data structure is used for storing the matrices to keep memory and computational costs low. In addition, an algorithm is presented for computing several nearby nonlinear eigenvalues to already-computed ones. Finally, numerical examples are given to show the efficacy of the new methods, and the source code has been made publicly available.

CCS Concepts: Mathematics of computing → Solvers; Computations on matrices.

Additional Key Words and Phrases: Nonlinear eigenvalue problem, banded, Kublanovskaya.

ACM Reference Format: C. Kristopher Garrett, Zhaojun Bai, and Ren-Cang Li. 2016. A nonlinear QR algorithm for banded nonlinear eigenvalue problems. ACM Trans. Math. Softw. 43, 1, Article 4 (August 2016), 19 pages. DOI: http://dx.doi.org/10.1145/2870628

1. INTRODUCTION. Given an $n \times n$ (complex) matrix-valued function $H(\lambda)$, the associated nonlinear eigenvalue problem (NLEVP) is to determine nonzero $n$-vectors $x$ and $y$ and a scalar $\mu$ such that

$$H(\mu) x = 0, \quad y^* H(\mu) = 0. \tag{1.1}$$

When this equation holds, we call $\mu$ a nonlinear eigenvalue, $x$ an associated right eigenvector (or simply eigenvector), and $y$ an associated left eigenvector.

LAPACK Working Note #216: A Novel Parallel QR Algorithm for Hybrid Distributed Memory HPC Systems

R. Granat¹, Bo Kågström¹, D. Kressner².

Abstract. A novel variant of the parallel QR algorithm for solving dense nonsymmetric eigenvalue problems on hybrid distributed high performance computing (HPC) systems is presented. For this purpose, we introduce the concept of multi-window bulge chain chasing and parallelize aggressive early deflation. The multi-window approach ensures that most computations when chasing chains of bulges are performed in level 3 BLAS operations, while the aim of aggressive early deflation is to speed up the convergence of the QR algorithm. Mixed MPI-OpenMP coding techniques are utilized for porting the codes to distributed memory platforms with multithreaded nodes, such as multicore processors. Numerous numerical experiments confirm the superior performance of our parallel QR algorithm in comparison with the existing ScaLAPACK code, leading to an implementation that is one to two orders of magnitude faster for sufficiently large problems, including a number of examples from applications.

Keywords: Eigenvalue problem, nonsymmetric QR algorithm, multishift, bulge chasing, parallel computations, level 3 performance, aggressive early deflation, parallel algorithms, hybrid distributed memory systems.

1 Introduction. Computing the eigenvalues of a matrix $A \in \mathbb{R}^{n \times n}$ is at the very heart of numerical linear algebra, with applications coming from a broad range of science and engineering. With the increased complexity of mathematical models and availability of HPC systems, there is a growing demand to solve large-scale eigenvalue problems. Iterative eigensolvers, such as Krylov subspace or Jacobi-Davidson methods [8], have been developed with the aim of addressing such large-scale problems.

An Implementation of the MRRR Algorithm on a Data-Parallel Coprocessor

Christian Lessig.

Abstract. The Algorithm of Multiple Relatively Robust Representations (MRRR) is one of the most efficient and most accurate solvers for the symmetric tridiagonal eigenvalue problem. We present an implementation of the MRRR algorithm on a data-parallel coprocessor using the CUDA programming environment. We obtain up to 50-fold speedups over LAPACK’s MRRR implementation and demonstrate that the algorithm can be mapped efficiently onto a data-parallel architecture. The accuracy of the obtained results is currently inferior to LAPACK’s but we believe that the problem can be overcome in the near future.

1 Introduction. The eigenvalue problem $A u_i = \lambda_i u_i$ for a real symmetric matrix $A \in \mathbb{R}^{n \times n}$ with eigenvalues $\lambda_i \in \mathbb{R}$ and (right) eigenvectors $u_i \in \mathbb{R}^n$ is important in many disciplines. This led to the development of a variety of algorithms for the symmetric eigenvalue problem [33, 51]. The efficacy of these eigensolvers depends on the required computational resources and the accuracy of the obtained results. With the advent of mainstream parallel architectures such as multi-core CPUs and data-parallel coprocessors, amenability to parallelization is also becoming an important property. Many eigensolvers are, however, difficult to parallelize. Notable exceptions are the Divide and Conquer algorithm [14] and the Algorithm of Multiple Relatively Robust Representations (MRRR) [21], for which efficient parallel implementations exist. Both algorithms additionally provide highly accurate results [19]. The computational cost of the Divide and Conquer algorithm, at $O(n^3)$, is however significantly higher than the $O(n^2)$ operations required by the MRRR algorithm.