Railroad Operational Safety

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Federal Railroad Administration Office of Safety Headquarters Assigned Accident Investigation Report HQ-2006-88

Federal Railroad Administration Office of Safety Headquarters Assigned Accident Investigation Report HQ-2006-88 Union Pacific Midas, CA November 9, 2006 Note that 49 U.S.C. §20903 provides that no part of an accident or incident report made by the Secretary of Transportation/Federal Railroad Administration under 49 U.S.C. §20902 may be used in a civil action for damages resulting from a matter mentioned in the report. DEPARTMENT OF TRANSPORTATION FEDERAL RAILROAD ADMINISTRATION FRA FACTUAL RAILROAD ACCIDENT REPORT FRA File # HQ-2006-88 1. Name of Railroad Operating Train #1: Union Pacific RR Co. [UP]; 1a. Alphabetic Code: UP; 1b. Railroad Accident/Incident No.: 1106RS011. 2. Name of Railroad Operating Train #2: N/A; 2a. Alphabetic Code: N/A; 2b. Railroad Accident/Incident No.: N/A. 3. Name of Railroad Responsible for Track Maintenance: Union Pacific RR Co. [UP]; 3a. Alphabetic Code: UP; 3b. Railroad Accident/Incident No.: 1106RS011. 4. U.S. DOT_AAR Grade Crossing Identification Number. 5. Date of Accident/Incident (Month Day Year): 11 09 2006. 6. Time of Accident/Incident: 11:02: AM PM. 7. Type of Accident/Incident (single entry in code box): 1. Derailment; 2. Head on collision; 3. Rear end collision; 4. Side collision; 5. Raking collision; 6. Broken Train collision; 7. Hwy-rail crossing; 8. RR grade crossing; 9. Obstruction; 10. Explosion-detonation; 11. Fire/violent rupture; 12. Other impacts; 13. Other (describe in narrative). Code: 01. 8. Cars Carrying HAZMAT: 0. 9. HAZMAT Cars Damaged/Derailed: 0. 10. Cars Releasing HAZMAT: 0. 11. People Evacuated: 0. 12. Division: Roseville. 13. Nearest City/Town 14.

Prices and Costs in the Railway Sector

ÉCOLE POLYTECHNIQUE FÉDÉRALE DE LAUSANNE ENAC - INTER PRICES AND COSTS IN THE RAILWAY SECTOR J.P. Baumgartner Professor January 2001 EPFL - École Polytechnique Fédérale de Lausanne LITEP - Laboratoire d'Intermodalité des Transports et de Planification Bâtiment de Génie civil CH - 1015 Lausanne Tel.: + 41 21 693 24 79 Fax: + 41 21 693 50 60 E-mail: [email protected] URL: http://litep.epfl.ch TABLE OF CONTENTS: 1. FOREWORD; 2. PRELIMINARY REMARKS; 2.1 The railway equipment market; 2.2 Figures and scenarios; 3. INFRASTRUCTURES AND FIXED EQUIPMENT; 3.1 Linear infrastructures and equipment; 3.1.1 Studies; 3.1.2 Land and rights; 3.1.2.1 Investments; 3.1.3 Infrastructure; 3.1.3.1 Investments; 3.1.3.2 Economic life; 3.1.3.3 Maintenance costs; 3.1.4 Track; 3.1.4.1 Investment; 3.1.4.2 Economic life of a main track; 3.1.4.3 Track maintenance costs; 3.1.5 Fixed equipment for electric traction; 3.1.5.1 Investments; 3.1.5.2 Economic life; 3.1.5.3 Maintenance costs; 3.1.6 Signalling; 3.1.6.1 Investments; 3.1.6.2 Economic life; 3.1.6.3 Maintenance costs; 3.2 Spot fixed equipment; 3.2.1 Investments; 3.2.1.1 Points, switches, turnouts, crossings; 3.2.1.2 Stations; 3.2.1.3 Service and light repair facilities; 3.2.1.4 Maintenance and heavy repair shops for rolling stock; 3.2.1.5 Central shops for the maintenance of fixed equipment; 3.2.2 Economic life; 3.2.3 Maintenance costs; 4.

Rulebook for Link Light Rail

RULEBOOK FOR LINK LIGHT RAIL EFFECTIVE MARCH 31, 2018 CONTENTS: SAFETY; INTRODUCTION; ABBREVIATIONS; DEFINITIONS; SECTION 1 OPERATIONS DEPARTMENT GENERAL RULES; 1.1 APPLICABILITY OF RULEBOOK; 1.2 POSSESSION OF OPERATING RULEBOOK; 1.3 RUN CARDS; 1.4 REQUIRED ITEMS; 1.5 KNOWLEDGE OF RULES, PROCEDURES, TRAIN ORDERS, SPECIAL INSTRUCTIONS, DIRECTIVES, AND NOTICES

Records of Wolverton Carriage and Wagon Works

Records of Wolverton Carriage and Wagon Works A cataloguing project made possible by the Friends of the National Railway Museum Trustees of the National Museum of Science & Industry Contents: 1. Description of Entire Archive: WOLV (fonds level description): Administrative/Biographical History; Archival history; Scope & content; System of arrangement; Related units of description at the NRM; Related units of description held elsewhere; Useful Publications relating to this archive. 2. Description of Management Records: WOLV/1 (sub fonds level description) Includes links to content. 3. Description of Correspondence Records: WOLV/2 (sub fonds level description) Includes links to content. 4. Description of Design Records: WOLV/3 (sub fonds level description) (listed on separate PDF list) Includes links to content. 5. Description of Production Records: WOLV/4 (sub fonds level description) Includes links to content. 6. Description of Workshop Records: WOLV/5 (sub fonds level description) Includes links to content. 1. Description of entire archive (fonds level description) Title: Records of Wolverton Carriage and Wagon Works. Fonds reference code: GB 0756 WOLV. Dates: 1831-1993. Extent & Medium of the unit of description: 87 drawing rolls, fourteen large archive boxes, two large bundles, one wooden box containing glass slides, 309 standard archive boxes. Name of creators: Wolverton Carriage and Wagon Works. Administrative/Biographical History: Origin, progress, development. Wolverton Carriage and Wagon Works is located on the northern boundary of Milton Keynes. It was established in 1838 for the construction and repair of locomotives for the London and Birmingham Railway. In 1846 the London and Birmingham Railway joined with the Grand Junction Railway to become the London North Western Railway (LNWR).

Disaster Management of India

DISASTER MANAGEMENT IN INDIA DISASTER MANAGEMENT 2011 This book has been prepared under the GoI-UNDP Disaster Risk Reduction Programme (2009-2012) Ministry of Home Affairs, Government of India ACKNOWLEDGEMENT The perception of disaster and its management has changed following the enactment of the Disaster Management Act, 2005. The definition of disaster is now all-encompassing: it includes not only events emanating from natural and man-made causes, but also events caused by accident or negligence. There was a long-felt need to capture information about all such events occurring across sectors, and about the efforts made to mitigate them in the country, and to collate it in one place in a global perspective. This book is an effort towards realising this thought. The book in its present format is the outcome of in-house compilation and analysis of information relating to disasters and their management gathered from different sources (domestic as well as the UN and other such agencies). All three Directors in the Disaster Management Division, namely Shri J.P. Misra, Shri Dev Kumar and Shri Sanjay Agarwal, have contributed inputs to this book relating to their sectors. Support extended by Prof. Santosh Kumar, Shri R.K. Mall, former faculty, and Shri Arun Sahdeo from NIDM has been very valuable in preparing an overview of the book. This book would have been impossible without the active support, suggestions and inputs of Dr. J. Radhakrishnan, Assistant Country Director (DM Unit), UNDP, New Delhi and the members of the UNDP Disaster Management Team including Shri Arvind Sinha, Consultant, UNDP.

Railroad Horn Systems Research

REPORT DOCUMENTATION PAGE Form Approved OMB No. 0704-0188 Public reporting burden for this collection of information is estimated to average 1 hour per response, including the time for reviewing instructions, searching existing data sources, gathering and maintaining the data needed, and completing and reviewing the collection of information. Send comments regarding this burden estimate or any other aspect of this collection of information, including suggestions for reducing this burden, to Washington Headquarters Services, Directorate for Information Operations and Reports, 1215 Jefferson Davis Highway, Suite 1204, Arlington, VA 22202-4302, and to the Office of Management and Budget, Paperwork Reduction Project (0704-0188), Washington, DC 20503. 1. AGENCY USE ONLY (Leave blank). 2. REPORT DATE: January 1999. 3. REPORT TYPE & DATES COVERED: Final Report, January 1992 - December 1995. 4. TITLE AND SUBTITLE: Railroad Horn Systems Research. 5. FUNDING NUMBERS: RR997/R9069. 6. AUTHOR(S): Amanda S. Rapoza, Thomas G. Raslear,* and Edward J. Rickley. 7. PERFORMING ORGANIZATION NAME(S) AND ADDRESS(ES): U.S. Department of Transportation, Research and Special Programs Administration. 8. PERFORMING ORGANIZATION REPORT NUMBER: DOT-VNTSC-FRA-98-2. 9. SPONSORING/MONITORING AGENCY NAME(S) AND ADDRESS(ES): *U.S. Department of Transportation, Federal Railroad Administration, Office of Research and Development, Equipment and Operating Practices Research Division, Washington, DC 20590. 10. SPONSORING OR MONITORING AGENCY REPORT NUMBER: DOT/FRA/ORD-99/10. 11. SUPPLEMENTARY NOTES: Safety of Highway-Railroad Grade Crossings Series. 12a. DISTRIBUTION/AVAILABILITY: This document is available to the public through the National Technical Information Service, Springfield, VA 22161. 12b. DISTRIBUTION CODE. 13. ABSTRACT (Maximum 200 words) The U.S.

Federal Railroad Administration Office Of

FEDERAL RAILROAD ADMINISTRATION OFFICE OF RAILROAD SAFETY HIGHWAY-RAIL GRADE CROSSING and TRESPASS PREVENTION: COMPLIANCE, PROCEDURES, AND POLICIES PROGRAMS MANUAL October 2019 Contents: Chapter 1 – General: Introduction; Purpose; Program Goals; Personal Safety; On-the-Job Training (OJT) Program; Railroad Crossing Safety and Trespass Prevention Resources. Chapter 2 – Personnel: HRGX&TP Headquarters (HQ) Division Staff (RRS-23); HRGX&TP Staff Director; HRGX&TP HQ Transportation Analyst (Railroad Trespassing)

WSK Commuter Rail Study

Oregon Department of Transportation – Rail Division Oregon Rail Study Appendix I Wilsonville to Salem Commuter Rail Assessment Prepared by: Parsons Brinckerhoff Team: Parsons Brinckerhoff, Simpson Consulting, Sorin Garber Consulting Group, Tangent Services, Wilbur Smith and Associates April 2010 Table of Contents: EXECUTIVE SUMMARY; INTRODUCTION; WHAT IS COMMUTER RAIL?; GLOSSARY OF TERMS; STUDY AREA; WES COMMUTER RAIL; OTHER PASSENGER RAIL SERVICES IN THE CORRIDOR; OUTREACH WITH RAILROADS: PNWR AND BNSF; PORTLAND & WESTERN RAILROAD; BNSF RAILWAY COMPANY; ROUTE CHARACTERISTICS

Rail Safety in Context 2019/20: A Summary of Health and Safety Performance, Operational Learning and Risk Reduction Activities on Britain’s Railway

A Better, Safer Railway Rail Safety in Context 2019/20 A summary of health and safety performance, operational learning and risk reduction activities on Britain’s railway. 1 Introduction Black swans. Sometimes something happens that you didn’t see coming. Mostly things happen that could have been spotted a mile off. To some, the Clapham collision of 1988 was one such out-of-the-blue event. But when the resulting inquiry delved deeper, a series of similar wrong-side failures was soon revealed. The same could, and doubtless will, be said of the Covid-19 pandemic, at least as far as Britain is concerned. It was seen in China, it came to Italy, it was inevitable that it would come to our islands…because there are no islands in our hyper-connected world. It is too early to see the full effects of the Covid-19 lockdown, as it came into effect on 23 March, just 8 days before the data cut-off for this suite of reports. The data summarised below represents a railway in full effect, with trendlines looking the way we’ve come to expect. The lockdown will distort that picture for 2020/21, so RSSB will be conducting a deep dive on the changes it has brought about, what rail did well and should keep doing after it ends, and the lessons that need to be taken forward. RSSB will also keep on monitoring. It’s how you spot a black swan coming, after all… Headlines 2019/20 • 31 people died as a result of accidents in 2019/20; 499 received major injuries – both represent a decrease on recent years.

Transportation Research Circular E-C085

TRANSPORTATION RESEARCH Number E-C085 January 2006 Railroad Operational Safety Status and Research Needs TRANSPORTATION RESEARCH BOARD 2005 EXECUTIVE COMMITTEE OFFICERS Chair: John R. Njord, Executive Director, Utah Department of Transportation, Salt Lake City Vice Chair: Michael D. Meyer, Professor, School of Civil and Environmental Engineering, Georgia Institute of Technology, Atlanta Division Chair for NRC Oversight: C. Michael Walton, Ernest H. Cockrell Centennial Chair in Engineering, University of Texas, Austin Executive Director: Robert E. Skinner, Jr., Transportation Research Board TRANSPORTATION RESEARCH BOARD 2005 TECHNICAL ACTIVITIES COUNCIL Chair: Neil J. Pedersen, State Highway Administrator, Maryland State Highway Administration, Baltimore Technical Activities Director: Mark R. Norman, Transportation Research Board Christopher P. L. Barkan, Associate Professor and Director, Railroad Engineering, University of Illinois at Urbana–Champaign, Rail Group Chair Christina S. Casgar, Office of the Secretary of Transportation, Office of Intermodalism, Washington, D.C., Freight Systems Group Chair Larry L. Daggett, Vice President/Engineer, Waterway Simulation Technology, Inc., Vicksburg, Mississippi, Marine Group Chair Brelend C. Gowan, Deputy Chief Counsel, California Department of Transportation, Sacramento, Legal Resources Group Chair Robert C. Johns, Director, Center for Transportation Studies, University of Minnesota, Minneapolis, Policy and Organization Group Chair Patricia V. McLaughlin, Principal, Moore Iacofano Golstman, Inc., Pasadena, California, Public Transportation Group Chair Marcy S. Schwartz, Senior Vice President, CH2M HILL, Portland, Oregon, Planning and Environment Group Chair Agam N. Sinha, Vice President, MITRE Corporation, McLean, Virginia, Aviation Group Chair Leland D. Smithson, AASHTO SICOP Coordinator, Iowa Department of Transportation, Ames, Operations and Maintenance Group Chair L. David Suits, Albany, New York, Design and Construction Group Chair Barry M.

Section 10 Locomotive and Rolling Stock Data

General Instruction Pages Locomotive and Rolling Stock Data SECTION 10 LOCOMOTIVE AND ROLLING STOCK DATA Contents: 3801 Limited Eveleigh - Locomotives; 3801 Limited Eveleigh - Passenger Rolling Stock; 3801 Limited Eveleigh - Freight Rolling Stock; Australian Traction Corporation - Locomotives; Australian Traction Corporation - Freight Rolling Stock; Australian Railway Historical Society A.C.T. Division – Locomotives; Australian Railway Historical Society A.C.T. Division – Rail Motors; Australian Railway Historical Society A.C.T. Division – Passenger Rolling Stock; Australian Railway Historical Society A.C.T. Division – Freight Rolling Stock; Australian Rail Track Corporation Ltd - Special Purpose Rolling Stock

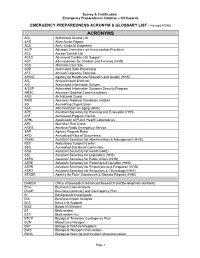

ACRONYM & GLOSSARY LIST - Revised 9/2008

Survey & Certification Emergency Preparedness Initiative – All Hazards EMERGENCY PREPAREDNESS ACRONYM & GLOSSARY LIST - Revised 9/2008 ACRONYMS: AAL: Authorized Access List; AAR: After-Action Report; ACE: Army Corps of Engineers; ACIP: Advisory Committee on Immunization Practices; ACL: Access Control List; ACLS: Advanced Cardiac Life Support; ACF: Administration for Children and Families (HHS); ACS: Alternate Care Site; ADP: Automated Data Processing; AFE: Annual Frequency Estimate; AHRQ: Agency for Healthcare Research and Quality (HHS); AIE: Annual Impact Estimate; AIS: Automated Information System; AISSP: Automated Information Systems Security Program; AMSC: American Satellite Communications; ANG: Air National Guard; ANSI: American National Standards Institute; AO: Accrediting Organization; AoA: Administration on Aging (HHS); APE: Assistant Secretary for Planning and Evaluation (HHS); APF: Authorized Program Facility; APHL: Association of Public Health Laboratories; ARC: American Red Cross; ARES: Amateur Radio Emergency Service; ARF: Agency Request Form; ARO: Annualized Rate of Occurrence; ASAM: Assistant Secretary for Administration & Management (HHS); ASC: Ambulatory Surgical Center; ASC: Accredited Standards Committee; ASH: Assistant Secretary for Health (HHS); ASL: Assistant Secretary for Legislation (HHS); ASPA: Assistant Secretary for Public Affairs (HHS); ASPE: Assistant Secretary for Planning & Evaluation (HHS); ASPR: Assistant Secretary for Preparedness & Response (HHS); ASRT: Assistant Secretary for Resources & Technology (HHS); ATSDR: Agency for Toxic Substances & Disease Registry (HHS); BARDA