10-Millisecond Computing

Recommended publications

HOW FAST IS RF? by RON HRANAC

Originally appeared in the September 2008 issue of Communications Technology. Maybe a better question is, "What's the speed of an electromagnetic signal in a vacuum?" After all, what we call RF refers to signals in the lower frequency or longer wavelength part of the electromagnetic spectrum. Light is an electromagnetic signal, too, existing in the higher frequency or shorter wavelength part of the electromagnetic spectrum. One way to look at it is to assume that RF is nothing more than really, really low frequency light, or that light is really, really high frequency RF. As you might imagine, the numbers involved in describing RF and light can be very large or very small. For more on this, see my June 2007 CT column, "Big Numbers, Small Numbers" (www.cable360.net/ct/operations/techtalk/23783.html). According to the National Institute of Standards and Technology, the speed of light in a vacuum, c₀, is 299,792,458 meters per second (http://physics.nist.gov/cgi-bin/cuu/Value?c). The designation c₀ is one that NIST uses to reference the speed of light in a vacuum, while c is occasionally used to reference the speed of light in some other medium. Most of the time the subscript zero is dropped, with c being a generic designation for the speed of light. Let's put c₀ in terms that may be more familiar. You probably recall from junior high or high school science class that the speed of light is 186,000 miles per second.
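As a quick check of the figures above, the short Python sketch below converts c₀ from meters per second to miles per second (using the exact international mile of 1,609.344 m) and computes the one-way delay of a signal over a given distance in vacuum. The function names are illustrative and do not come from the column.

```python
C0_M_PER_S = 299_792_458      # speed of light in vacuum, m/s (exact SI value)
METERS_PER_MILE = 1_609.344   # international mile, m (exact)


def c0_miles_per_second() -> float:
    """Return c0 expressed in miles per second."""
    return C0_M_PER_S / METERS_PER_MILE


def vacuum_delay_seconds(distance_m: float) -> float:
    """One-way propagation delay of an electromagnetic signal in vacuum."""
    return distance_m / C0_M_PER_S


if __name__ == "__main__":
    print(f"c0 = {c0_miles_per_second():,.0f} miles per second")
    print(f"1 km in vacuum takes {vacuum_delay_seconds(1_000) * 1e6:.3f} microseconds")
```

The exact conversion gives about 186,282 miles per second, which is where the familiar rounded figure of 186,000 comes from; each kilometer of vacuum path adds roughly 3.34 µs.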

Low Latency – How Low Can You Go?

White paper. Low latency has always been an important consideration in telecom networks for voice, video, and data, but recent changes in applications within many industry sectors have brought low latency right to the forefront of the industry. The finance industry, and algorithmic trading ("algo-trading") in particular, is a commonly quoted example. Here latency is critical; to quote Information Week magazine, "A 1-millisecond advantage in trading applications can be worth $100 million a year to a major brokerage firm." This drives a huge focus on all aspects of latency, including the communications systems between the brokerage firm and the exchange. However, while the finance industry is spending a lot of money on low-latency services between key locations such as New York and Chicago or London and Frankfurt, this is actually only a small part of the wider telecom industry. Many other industries, such as cloud computing and video services, are also now driving lower and lower latency in their networks. Also, as mobile operators start to roll out 5G services, latency in the xHaul mobile transport network, especially the demanding fronthaul domain, becomes increasingly important for meeting the stringent 5G requirements of the new class of ultra-reliable low-latency services. This white paper addresses the drivers behind the recent rush to low-latency solutions and networks and considers how network operators can remove as much latency as possible from their networks as they race toward zero latency.
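Because distance sets a hard floor on network latency, a first-order estimate of the one-way delay over a fiber route is distance × group index ÷ c₀. The Python sketch below assumes a group index of about 1.47 (a typical value for single-mode fiber, used here as an assumption) and a hypothetical 1,200 km route; real routes are longer than the straight-line distance between cities and add equipment delay, so treat the numbers as illustrative only.

```python
C0_M_PER_S = 299_792_458  # speed of light in vacuum, m/s


def fiber_delay_ms(route_km: float, group_index: float = 1.47) -> float:
    """One-way propagation delay in milliseconds over a fiber route.

    group_index is an assumed typical value for single-mode fiber;
    equipment, regeneration, and queuing delays are ignored.
    """
    return route_km * 1_000 * group_index / C0_M_PER_S * 1_000


def vacuum_delay_ms(route_km: float) -> float:
    """Delay over the same distance at c0 (group index of 1.0)."""
    return fiber_delay_ms(route_km, group_index=1.0)


if __name__ == "__main__":
    route_km = 1_200  # hypothetical route length, not a measured value
    fiber = fiber_delay_ms(route_km)
    vacuum = vacuum_delay_ms(route_km)
    print(f"fiber:  {fiber:.3f} ms one way")
    print(f"vacuum: {vacuum:.3f} ms one way (difference {fiber - vacuum:.3f} ms)")
```

At roughly 4.9 µs per kilometer of fiber, shortening a route by even a few hundred kilometers recovers a measurable fraction of a millisecond, which is why route length gets so much attention when a millisecond carries the value quoted above.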

Timestamp Precision

Kevin Bross, 15 April 2016, IEEE 1904 Access Networks Working Group, San Jose, CA USA.

Timestamp Packets: The orderInfo field can be used as a 32-bit timestamp down to ¼ ns granularity: 30 bits of nanoseconds (spanning about 1 s) plus 2 bits of quarter-nanoseconds. Two main uses of the timestamp:
– Indicating the start or end time of a flow
– Indicating the presentation time of packets for flows with non-constant data rates

Presentation Time: To reduce bandwidth during idle periods, some protocols have variable rates.
– Fronthaul may be variable, even if the rate to the radio unit itself is constant
Presentation time allows RoE to handle variable data rates:
– Data may experience jitter in the network
– An egress buffer compensates for network jitter
– The presentation time is when the data is to exit the RoE node
• Jitter cleaners ensure data comes out cleanly, and on the right bit period

Jitter vs. Synchronization: Synchronization requirements for LTE are only down to ~±65 ns accuracy.
– Each RoE node may be off from TAI by up to 65 ns (or more in some circumstances)
– Starting and ending a stream may be off by this amount
…but jitter from packet to packet must be much tighter:
– RoE nodes should be able to output data at precise relative times if a timestamp is used for a given packet
– Relative bit time within a flow is important
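The 32-bit orderInfo timestamp described above, read here as 30 bits of nanoseconds plus 2 bits of quarter-nanoseconds, can be sketched as plain integer arithmetic. The bit placement (nanoseconds in the upper 30 bits) is an assumption read from the slide, and the helper names are hypothetical rather than taken from the RoE specification. Because the field wraps every 2^30 ns (about 1.07 s), differences are computed modulo 2^32.

```python
MASK32 = (1 << 32) - 1
WRAP_NS = 1 << 30  # the 30-bit nanosecond count wraps every ~1.074 s


def pack_timestamp(ns: int, quarter_ns: int = 0) -> int:
    """Pack a time (nanoseconds plus 0-3 quarter-ns) into a 32-bit field.

    Assumed layout: upper 30 bits = nanoseconds modulo 2**30,
    lower 2 bits = quarter-nanoseconds.
    """
    if not 0 <= quarter_ns <= 3:
        raise ValueError("quarter_ns must be in 0..3")
    return ((ns << 2) | quarter_ns) & MASK32


def unpack_timestamp(field: int) -> tuple[int, int]:
    """Return (nanoseconds, quarter_ns) from a 32-bit field."""
    return (field & MASK32) >> 2, field & 0b11


def elapsed_quarter_ns(earlier: int, later: int) -> int:
    """Wraparound-safe difference, valid for intervals shorter than ~1.07 s."""
    return (later - earlier) & MASK32
```

For example, a packet stamped just before the wrap, `pack_timestamp(WRAP_NS - 1, 3)`, and one stamped exactly at the wrap point are correctly reported as one quarter-nanosecond apart, even though the second field value is numerically smaller.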

Discovery of Frequency-Sweeping Millisecond Solar Radio Bursts Near 1400 MHz with a Small Interferometer, Physics Department Senior Honors Thesis 2006, Eric R. Evarts

Eric R. Evarts, Brandeis University. Abstract: While testing the Small Radio Telescope Interferometer at Haystack Observatory, we noticed occasional strong fringes on the Sun. Upon closer study of this activity, I find that each significant burst corresponds to an X-ray solar flare and to data observed by RSTN. At very short integrations (512 µs), most bursts disappear in the noise, but a few still show very strong fringes. Studying these <25 events at 512 µs integration reveals 2 types of burst: one with frequency structure and one without. Bursts with frequency structure have a brightness temperature of ~10¹³ K, while bursts without frequency structure have a brightness temperature of ~10⁸ K. These bursts are studied at higher frequency resolution than in any previous solar study, revealing a very distinct structure that is not currently understood.

Microsecond and Millisecond Dynamics in the Photosynthetic Protein LHCSR1 Observed by Single-Molecule Correlation Spectroscopy

Toru Kondo (a), Jesse B. Gordon (a), Alberta Pinnola (b, c), Luca Dall'Osto (b), Roberto Bassi (b), and Gabriela S. Schlau-Cohen (a). (a) Department of Chemistry, Massachusetts Institute of Technology, Cambridge, MA 02139; (b) Department of Biotechnology, University of Verona, 37134 Verona, Italy; and (c) Department of Biology and Biotechnology, University of Pavia, 27100 Pavia, Italy. Edited by Catherine J. Murphy, University of Illinois at Urbana–Champaign, Urbana, IL, and approved April 11, 2019 (received for review December 13, 2018).

Biological systems are subjected to continuous environmental fluctuations, and therefore, flexibility in the structure and function of their protein building blocks is essential for survival. Protein dynamics are often local conformational changes, which allows multiple dynamical processes to occur simultaneously and rapidly in individual proteins. Experiments often average over these dynamics and their multiplicity, preventing identification of the molecular origin and impact on biological function. Green plants survive under high light by quenching excess energy, and Light-Harvesting Complex Stress Related 1 (LHCSR1) is the …

… (5–9) or intensity correlation function analysis (10). CPF analysis bins the photon data, obscuring fast dynamics. In contrast, intensity correlation function analysis characterizes fluctuations in the photon arrival rate, accessing dynamics down to microseconds. However, the fluorescence lifetime is a powerful indicator of conformation for chromoproteins and for lifetime-based FRET measurements, yet it is ignored in intensity-based analyses. 2D fluorescence lifetime correlation (2D-FLC) analysis was recently introduced as a method to both resolve fast dynamics and use fluorescence lifetime information (11, 12).
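To make the intensity-correlation idea concrete, the sketch below bins photon arrival timestamps (assumed to be integer nanoseconds, as a time-tagging counter might report them) into fine time bins and computes the normalized autocorrelation G(τ) = ⟨I(t)I(t+τ)⟩/⟨I⟩² − 1 for small lags. This is a generic textbook estimator with hypothetical function names, not the 2D-FLC analysis or the authors' code; a positive G at some lag indicates correlated intensity fluctuations on that timescale.

```python
from typing import List, Sequence


def bin_photons(arrival_ns: Sequence[int], bin_ns: int, duration_ns: int) -> List[int]:
    """Count photons per time bin; arrivals outside [0, duration_ns) are ignored."""
    n_bins = duration_ns // bin_ns
    counts = [0] * n_bins
    for t in arrival_ns:
        i = t // bin_ns
        if 0 <= i < n_bins:
            counts[i] += 1
    return counts


def intensity_autocorrelation(counts: Sequence[int], max_lag: int) -> List[float]:
    """Normalized G(lag) = <I(t) I(t+lag)> / <I>**2 - 1 for lag = 1..max_lag bins."""
    n = len(counts)
    if n == 0 or max_lag >= n:
        raise ValueError("need more bins than max_lag")
    mean = sum(counts) / n
    if mean == 0:
        raise ValueError("no photons in the record")
    result = []
    for lag in range(1, max_lag + 1):
        pairs = n - lag
        product = sum(counts[i] * counts[i + lag] for i in range(pairs))
        result.append(product / pairs / (mean * mean) - 1.0)
    return result
```

For example, a signal that alternates on and off every bin gives G = −1 at a lag of one bin (the intensity is never high in two adjacent bins) and G = +1 at a lag of two bins. Finer bins reach shorter timescales at the cost of fewer photons per bin.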

Challenges Using Linux as a Real-Time Operating System

Michael M. Madden, NASA Langley Research Center, Hampton, VA, 23681. Human-in-the-loop (HITL) simulation groups at NASA and the Air Force Research Lab have been using Linux as a real-time operating system (RTOS) for over a decade. More recently, SpaceX has revealed that it is using Linux as an RTOS for its Falcon launch vehicles and Dragon capsules. As Linux makes its way from ground facilities to flight-critical systems, it is necessary to recognize that the real-time capabilities in Linux are cobbled onto a kernel architecture designed for general-purpose computing. The Linux kernel contains numerous design decisions that favor throughput over determinism and latency. These decisions often require workarounds in the application or customization of the kernel to restore a high probability that Linux will achieve deadlines.

I. Introduction

The human-in-the-loop (HITL) simulation groups at the NASA Langley Research Center and the Air Force Research Lab have used Linux as a real-time operating system (RTOS) for over a decade [1, 2]. More recently, SpaceX has revealed that it is using Linux as an RTOS for its Falcon launch vehicles and Dragon capsules [3]. Reference 2 examined an early version of the Linux kernel for real-time applications and, using black-box testing of jitter, found it suitable when paired with an external hardware interval timer.
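The black box testing of jitter mentioned above can be approximated in user space by a periodic loop that sleeps until absolute deadlines and records how late each wakeup is, which is the idea behind tools such as cyclictest. The Python sketch below is only illustrative: interpreter overhead and the lack of real-time scheduling (no SCHED_FIFO priority, no memory locking) mean its numbers will be far worse than those of a C harness, and the 1 ms period is an arbitrary assumption.

```python
import statistics
import time


def measure_wakeup_jitter(period_ns: int = 1_000_000, iterations: int = 200) -> dict:
    """Sleep until successive absolute deadlines and record wakeup lateness."""
    lateness_ns = []
    deadline = time.monotonic_ns() + period_ns
    for _ in range(iterations):
        remaining = deadline - time.monotonic_ns()
        if remaining > 0:
            time.sleep(remaining / 1e9)
        # Positive values mean the loop woke up after its deadline.
        lateness_ns.append(time.monotonic_ns() - deadline)
        # Deadlines advance on a fixed grid, so late wakeups do not shift later ones.
        deadline += period_ns
    return {
        "min_ns": min(lateness_ns),
        "max_ns": max(lateness_ns),
        "mean_ns": statistics.fmean(lateness_ns),
        "stdev_ns": statistics.pstdev(lateness_ns),
    }


if __name__ == "__main__":
    print(measure_wakeup_jitter())
```

For deadline-driven work, the maximum lateness matters more than the mean: a general-purpose kernel can show a good average while occasional outliers still miss deadlines.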

The Effect of Long Timescale Gas Dynamics on Femtosecond Filamentation

Y.-H. Cheng, J. K. Wahlstrand, N. Jhajj, and H. M. Milchberg, Institute for Research in Electronics and Applied Physics, University of Maryland, College Park, Maryland 20742, USA. Abstract: Femtosecond laser pulses filamenting in various gases are shown to generate long-lived quasi-stationary cylindrical depressions or 'holes' in the gas density. For our experimental conditions, these holes range up to several hundred microns in diameter with gas density depressions up to ~20%. The holes decay by thermal diffusion on millisecond timescales. We show that high repetition rate filamentation and supercontinuum generation can be strongly affected by these holes, which should also affect all other experiments employing intense high repetition rate laser pulses interacting with gases. ©2013 Optical Society of America. OCIS codes: (190.5530) Pulse propagation and temporal solitons; (350.6830) Thermal lensing; (320.6629) Supercontinuum generation; (260.5950) Self-focusing.
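A rough order-of-magnitude check on the millisecond decay: for a thermal disturbance of radius w, the diffusion time is on the order of w²/(4D), where D is the thermal diffusivity of the gas. The sketch below assumes D ≈ 2.2 × 10⁻⁵ m²/s (a textbook figure for room-temperature air) and compares the result with the pulse spacing at a given repetition rate. It is a back-of-envelope estimate with hypothetical helper names, not the model used in the paper.

```python
D_AIR_M2_PER_S = 2.2e-5  # assumed thermal diffusivity of air near room temperature


def diffusion_time_s(radius_m: float, diffusivity: float = D_AIR_M2_PER_S) -> float:
    """Order-of-magnitude thermal diffusion time, w**2 / (4 D)."""
    return radius_m ** 2 / (4.0 * diffusivity)


def pulses_during_decay(radius_m: float, rep_rate_hz: float) -> float:
    """Number of laser pulses that arrive while a density hole of this radius decays."""
    return diffusion_time_s(radius_m) * rep_rate_hz


if __name__ == "__main__":
    for diameter_um in (100, 300, 600):
        r = diameter_um * 1e-6 / 2
        print(f"{diameter_um:>4} um hole: ~{diffusion_time_s(r) * 1e3:.2f} ms, "
              f"{pulses_during_decay(r, 1_000):.2f} pulses at 1 kHz")
```

Under these assumptions a 300 µm hole decays in roughly 0.26 ms and a 600 µm hole in about 1 ms, comparable to the 1 ms spacing of a 1 kHz pulse train, which is consistent with the abstract's point that high-repetition-rate experiments can see the residual holes.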

Evolution and Practice: Low-Latency Distributed Applications in Finance

DISTRIBUTED COMPUTING. The finance industry has unique demands for low-latency distributed systems. Andrew Brook. Virtually all systems have some requirements for latency, defined here as the time required for a system to respond to input. (Non-halting computations exist, but they have few practical applications.) Latency requirements appear in problem domains as diverse as aircraft flight controls (http://copter.ardupilot.com/), voice communications (http://queue.acm.org/detail.cfm?id=1028895), multiplayer gaming (http://queue.acm.org/detail.cfm?id=971591), online advertising (http://acuityads.com/real-time-bidding/), and scientific experiments (http://home.web.cern.ch/about/accelerators/cern-neutrinos-gran-sasso). Distributed systems, in which computation occurs on multiple networked computers that communicate and coordinate their actions by passing messages, present special latency considerations. In recent years the automation of financial trading has driven requirements for distributed systems with challenging latency requirements (often measured in microseconds or even nanoseconds; see table 1) and global geographic distribution. Automated trading provides a window into the engineering challenges of ever-shrinking latency requirements, which may be useful to software engineers in other fields. This article focuses on applications where latency (as opposed to throughput, efficiency, or some other metric) is one of the primary design considerations. Phrased differently, "low-latency systems" are those for which latency is the main measure of success and is usually the toughest constraint to design around. The article presents examples of low-latency systems that illustrate the external factors that drive latency and then discusses some practical engineering approaches to building systems that operate at low latency.
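Since latency is defined here as the time a system takes to respond to input, a natural first step is to time many request-response iterations and examine the distribution rather than a single average. The sketch below uses Python's `time.perf_counter_ns` and a nearest-rank percentile; `handle_input` is a hypothetical stand-in for the system under test, and reporting p50, p99, and max is an assumed convention, not a metric prescribed by the article.

```python
import math
import time
from typing import Callable, Sequence


def percentile(samples: Sequence[int], p: float) -> int:
    """Nearest-rank percentile (0 < p <= 100) of a non-empty sample set."""
    if not samples or not 0 < p <= 100:
        raise ValueError("need samples and 0 < p <= 100")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


def measure_latency_ns(handle_input: Callable[[int], object], iterations: int = 10_000) -> dict:
    """Time each call of handle_input and summarize the latency distribution."""
    samples = []
    for i in range(iterations):
        start = time.perf_counter_ns()
        handle_input(i)
        samples.append(time.perf_counter_ns() - start)
    return {"p50": percentile(samples, 50), "p99": percentile(samples, 99), "max": max(samples)}


if __name__ == "__main__":
    print(measure_latency_ns(lambda x: x * x))  # hypothetical trivial handler
```

Tail percentiles are reported because, when latency is the main measure of success, the occasional slow response is usually what breaks a requirement, and an average hides it.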

TIME 1. Introduction 2. Time Scales

1. Introduction
The TCS requires access to time in various forms and accuracies. This document briefly explains the various time scales, illustrates the means of obtaining the time, and discusses the TCS implementation.

2. Time scales
International Atomic Time (TAI) is a man-made laboratory timescale: a statistical timescale based on a large number of atomic clocks. Its unit is the SI second, which is defined by a hyperfine transition frequency of the cesium-133 atom.

Coordinated Universal Time (UTC) – UTC is the basis of civil timekeeping. It uses the SI second, an atomic unit, as its fundamental unit, and it is kept in step with the Earth's rotation (to within 0.9 s of UT1, described below). However, the rate of the Earth's rotation is not uniform with respect to atomic time. To compensate, a leap second is added, usually at the end of June or December, about every 18 months. The Earth is also divided into standard time zones, and each zone's time differs from UTC by an integral number of hours (parts of Canada and Australia differ by n + 0.5 hours). Your local time is UTC adjusted by your time zone offset.

Universal Time (UT1) – Universal Time, or more specifically UT1, is counted from 0 hours at midnight, with the mean solar day as its unit of duration, defined to be as uniform as possible despite variations in the rotation of the Earth. It is observed as the diurnal motion of stars or extraterrestrial radio sources. It is continuous (no leap seconds), but has a variable rate due to the Earth's non-uniform rotational period.
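Between leap seconds, the relationships above reduce to fixed offsets. The sketch below assumes TAI − UTC = 37 s, the value in effect since 1 January 2017; a production TCS would load the full leap-second table rather than hard-code one value, and Python's `datetime` cannot represent a 23:59:60 leap second at all. Half-hour zone offsets, such as those in parts of Canada and Australia, are handled by passing a fractional hour count. The function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Assumption: TAI - UTC = 37 s, valid from 2017-01-01 until the next leap second.
TAI_MINUS_UTC = timedelta(seconds=37)


def tai_to_utc(tai: datetime) -> datetime:
    """Convert a naive datetime holding TAI to a timezone-aware UTC datetime."""
    return (tai - TAI_MINUS_UTC).replace(tzinfo=timezone.utc)


def utc_to_local(utc: datetime, offset_hours: float) -> datetime:
    """Apply a fixed zone offset; half-hour offsets such as 9.5 or -3.5 are allowed."""
    return utc.astimezone(timezone(timedelta(hours=offset_hours)))
```

For example, `utc_to_local(datetime(2024, 1, 1, tzinfo=timezone.utc), -3.5)` yields 20:30 on 31 December 2023. Fixed offsets ignore daylight saving time, which a real implementation would take from a time zone database.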

Millisecond Pulsar Rivals Best Atomic Clock Stability

41st Annual Frequency Control Symposium, 1987. MILLISECOND PULSAR RIVALS BEST ATOMIC CLOCK STABILITY, by David W. Allan, Time and Frequency Division, National Bureau of Standards, 325 Broadway, Boulder, CO 80303.

Abstract: The measurement time residuals between the millisecond pulsar PSR 1937+21 and atomic time have been significantly reduced. Analysis of data for the most recent 865-day period indicates a fractional frequency stability (square root of the modified Allan variance) of less than 2 × … for integration times of about 1/3 year. The reasons for the improved stability will be discussed; these are a result of the combined efforts of several individuals. Analysis of the measurements taken in two frequency bands revealed a random walk behavior for dispersion along the 12,000 to 15,000 light year path from the pulsar to the earth. This random walk accumulates to about 1,000 nanoseconds (ns) over 265 days.

… pulsar the drift rate is exceedingly constant; i.e., no second derivative of the period has been observed.[4] The very steady slowing down of the pulsar is believed to be caused by the pulsar radiating electromagnetic and gravitational waves.[5] Time comparisons on the millisecond pulsar require the best of measurement systems and metrology techniques. This pulsar is estimated to be about 12,000 to 15,000 light years away. The basic elements in the measurement link between the millisecond pulsar and the atomic clock are the dispersion and scintillation due to the interstellar medium, the computation of the ephemeris of the earth in barycentric coordinates, the relativistic transformations because of the dynamics and gravitational potentials of the atomic clocks …
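The stability figure quoted in the abstract is the square root of the modified Allan variance. The sketch below implements the standard estimator from phase (time-residual) data x_i sampled every τ0: Mod σ²(τ) = Σ_j [Σ_i (x_{i+2n} − 2x_{i+n} + x_i)]² / (2n²τ²(N − 3n + 1)), with τ = nτ0, j running over the N − 3n + 1 starting points and i over n adjacent samples. The function names are hypothetical, and the code only makes the statistic concrete; it does not reproduce the paper's analysis.

```python
import math
from typing import Sequence


def mod_allan_variance(x: Sequence[float], tau0: float, n: int) -> float:
    """Modified Allan variance at tau = n * tau0 from phase data x (in seconds)."""
    count = len(x) - 3 * n + 1  # number of overlapping averaging windows
    if n < 1 or count < 1:
        raise ValueError("need n >= 1 and at least 3n phase samples")
    tau = n * tau0
    total = 0.0
    for j in range(count):
        # Average n second differences before squaring; this is what
        # distinguishes the modified estimator from the ordinary Allan variance.
        inner = sum(x[i + 2 * n] - 2 * x[i + n] + x[i] for i in range(j, j + n))
        total += inner * inner
    return total / (2 * n * n * tau * tau * count)


def mod_allan_deviation(x: Sequence[float], tau0: float, n: int) -> float:
    """Square root of the modified Allan variance (the 'Mod sigma_y' statistic)."""
    return math.sqrt(mod_allan_variance(x, tau0, n))
```

A constant frequency offset makes the phase residuals grow linearly, so every second difference vanishes and the statistic is zero; only changes in frequency, such as the random-walk dispersion described above, contribute.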

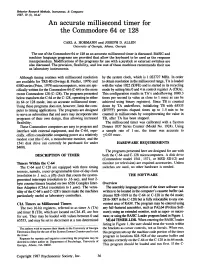

An Accurate Millisecond Timer for the Commodore 64 or 128

Behavior Research Methods, Instruments, & Computers, 1987, 19(1), 36–41. An accurate millisecond timer for the Commodore 64 or 128. CARL A. HORMANN and JOSEPH D. ALLEN, University of Georgia, Athens, Georgia. The use of the Commodore 64 or 128 as an accurate millisecond timer is discussed. BASIC and machine language programs are provided that allow the keyboard to be used as the response manipulandum. Modifications of the programs for use with a joystick or external switches are also discussed. The precision, flexibility, and low cost of these machines recommend their use as laboratory instruments.

Although timing routines with millisecond resolution are available for TRS-80 (Owings & Fiedler, 1979) and 6500 series (Price, 1979) microcomputers, none are specifically written for the Commodore 64 (C-64) or the more recent Commodore 128 (C-128). The programs presented below transform the C-64 or the C-128, operating in either its 64 or 128 mode, into an accurate millisecond timer. Using these programs does not, however, limit the computer to timing applications. The programs are designed to serve as subroutines that end users may incorporate into programs of their own design, thus allowing increased …

… by the system clock, which is 1.022727 MHz. In order to obtain resolution in the millisecond range, TA is loaded with the value 1022 ($3FE) and is started in its recycling mode by setting bits 0 and 4 in control register A (CRA). This configuration results in TA's underflowing 1000.3 times per second (a value as close to 1 msec as can be achieved using binary registers). Since TB is counted down by TA underflows, initializing TB with 65535 ($FFFF) permits elapsed times up to 1.5 min to be counted in milliseconds by complementing the value in TB, after TA has been stopped.
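The readout step described above is simple arithmetic: because TB starts at $FFFF and counts down once per TA underflow, complementing its 16-bit value gives the number of underflows elapsed. The Python sketch below models only that arithmetic (the article's own programs are in BASIC and machine language); the function names are hypothetical, and the TA underflow period is left as a parameter rather than derived from the latch value.

```python
TB_START = 0xFFFF  # TB is initialized to 65535 ($FFFF), as in the article


def elapsed_ticks(tb_value: int) -> int:
    """Number of TA underflows counted since TB started at $FFFF.

    Complementing the 16-bit TB value (XOR with $FFFF) equals 65535 - TB,
    i.e. how far TB has counted down.
    """
    if not 0 <= tb_value <= 0xFFFF:
        raise ValueError("TB is a 16-bit register")
    return tb_value ^ TB_START


def elapsed_seconds(tb_value: int, underflow_period_s: float) -> float:
    """Convert ticks to seconds given the (measured or assumed) TA underflow period."""
    return elapsed_ticks(tb_value) * underflow_period_s
```

For example, if TB reads 64301 when TA is stopped, `elapsed_ticks(64301)` returns 1234 underflows, or about 1.234 s at a nominal 1 ms per underflow.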

Time, Delays, and Deferred Work

Chapter 7

At this point, we know the basics of how to write a full-featured char module. Real-world drivers, however, need to do more than implement the operations that control a device; they have to deal with issues such as timing, memory management, hardware access, and more. Fortunately, the kernel exports a number of facilities to ease the task of the driver writer. In the next few chapters, we'll describe some of the kernel resources you can use. This chapter leads the way by describing how timing issues are addressed. Dealing with time involves the following tasks, in order of increasing complexity:
• Measuring time lapses and comparing times
• Knowing the current time
• Delaying operation for a specified amount of time
• Scheduling asynchronous functions to happen at a later time

Measuring Time Lapses

The kernel keeps track of the flow of time by means of timer interrupts. Interrupts are covered in detail in Chapter 10. Timer interrupts are generated by the system's timing hardware at regular intervals; this interval is programmed at boot time by the kernel according to the value of HZ, which is an architecture-dependent value defined in <linux/param.h> or a subplatform file included by it. Default values in the distributed kernel source range from 50 to 1200 ticks per second on real hardware, down to 24 for software simulators. Most platforms run at 100 or 1000 interrupts per second; the popular x86 PC defaults to 1000, although it used to be 100 in previous versions (up to and including 2.4).
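Tick-based timekeeping like this has two practical consequences for driver code: tick counts must be converted using HZ, and comparisons must survive counter wraparound. The Python sketch below models both on a hypothetical 32-bit counter. `ticks_to_ms` is an illustrative helper (integer division, rounding down), and `time_after` mimics the idea behind the kernel's `time_after(a, b)` macro, which tests whether the signed difference b − a is negative. It is a model for explanation, not kernel code.

```python
HZ = 1000  # assumed tick rate; the text notes x86 defaults to 1000 (100 up to 2.4)


def ticks_to_ms(ticks: int, hz: int = HZ) -> int:
    """Convert a tick count to milliseconds (integer division, rounds down)."""
    return ticks * 1000 // hz


def time_after(a: int, b: int, bits: int = 32) -> bool:
    """True if tick count a is later than b, tolerating counter wraparound.

    Computes (b - a) modulo 2**bits and treats it as a signed value:
    it is negative exactly when the top bit is set.
    """
    mask = (1 << bits) - 1
    diff = (b - a) & mask
    return diff >= 1 << (bits - 1)
```

A naive `a > b` comparison gives the wrong answer right after the counter wraps: with `a = 2` (just past the wrap) and `b = 0xFFFFFFF0` (just before it), `time_after(a, b)` still correctly reports that `a` is later.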