Computing and Predicting Winning Hands in the Trick-Taking Game of Klaverjas

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Examining Different Strategies for the Card Game Sueca

Universiteit Leiden Opleiding Informatica & Economie Examining different strategies for the card game Sueca Name: Vanessa Lopes Carreiro Date: July 13, 2016 1st supervisor: Walter Kosters 2nd supervisor: Rudy van Vliet BACHELOR THESIS Leiden Institute of Advanced Computer Science (LIACS) Leiden University Niels Bohrweg 1 2333 CA Leiden The Netherlands Examining different strategies for the card game Sueca Vanessa Lopes Carreiro Abstract Sueca is a point-trick card game with trumps popular in Portugal, Brazil and Angola. There has not been done any research into Sueca. In this thesis we will study the card game into detail and examine different playing strategies, one of them being basic Monte-Carlo Tree Search. The purpose is to see what strategies can be used to play the card game best. It turns out that the basic Monte-Carlo strategy plays best when both team members play that strategy. i ii Acknowledgements I would like to thank my supervisor Walter Kosters for brainstorming with me about the research and support but also for the conversations about life. It was a pleasure working with him. I am also grateful for Rudy van Vliet for being my second reader and taking time to read this thesis and providing feedback. iii iv Contents Abstract i Acknowledgements iii 1 Introduction 1 2 The game 2 2.1 The deck and players . 2 2.2 The deal . 3 2.3 Theplay ................................................... 3 2.4 Scoring . 4 3 Similar card games 5 3.1 Klaverjas . 5 3.2 Bridge . 6 4 How to win Sueca 7 4.1 Leading the first trick . -

American Skat : Or, the Game of Skat Defined : a Descriptive and Comprehensive Guide on the Rules and Plays of This Interesting

'JTV American Skat, OR, THE GAME OF SKAT DEFINED. BY J. CHARLES EICHHORN A descriptive and comprehensive Guide on the Rules and Plays of if. is interesting game, including table, definition of phrases, finesses, and a full treatise on Skat as played to-day. CHICAGO BREWER. BARSE & CO. Copyrighted, January 21, 1898 EICHHORN By J. CHARLES SKAT, with its many interesting features and plays, has now gained such a firm foothold in America, that my present edition in the English language on this great game of cards, will not alone serve as a guide for beginners, but will be a complete compendium on this absorbing game. It is just a decade since my first book was pub- lished. During the past 10 years, the writer has visited, and been in personal touch with almost all the leading authorities on the game of Skat in Amer- ica as well as in Europe, besides having been contin- uously a director of the National Skat League, as well as a committeeman on rules. In pointing out the features of the game, in giving the rules, defining the plays, tables etc., I shall be as concise as possible, using no complicated or lengthy remarks, but in short and comprehensive manner, give all the points and information required. The game of Skat as played today according to the National Skat League values and rulings, with the addition of Grand Guckser and Passt Nicht as variations, is as well balanced a game, as the leading authorities who have given the same both thorough study and consideration, can make. -

The Game of Whist

THE GAME OF WHIST “WHIST” comes from an English word meaning silent and attentive. Whist is a trick-based card game similar to Euchre and Hearts. Originally developed in eighteenth-century English social circles, it quickly became one of the most popular games in the American colonies and early United States. LAWS OF THE GAME Whist is played with 4 people in pairs of 2. Cut the deck: the player who draws a high card is the dealer! The dealer deals each player 13 cards. The last card (to the dealer) is dealt face up, indicating the trump suit. The player to the dealer’s left leads the first “trick” with any card they choose. “A Pig in a Poke, Whist, Whist” Hand-coloured etching Aces are high. Twos are low. by James Gillray, 1788. National Portrait Gallery, London Moving clockwise, each player plays a card on that trick. If they can, players must follow the suit of the card led or they may play a trump card. Players with no card of that suit can play any card, including a trump card. The player with the highest card of the led suit or the highest trump card wins the trick and places the 4 cards to their side. TRICKS The winning player leads the next trick. When all of the cards have been played, for total of 12 tricks, each pair earns 1 point for every trick they won in excess of 6. For example, a player who wins 8 tricks would earn 2 points. A trick penalty is given to anyone who fails to follow suit when they have that suit in hand. -

Table of Contents

TABLE OF CONTENTS System Requirements . 3 Installation . 3. Introduction . 5 Signing In . 6 HOYLE® PLAYER SERVICES Making a Face . 8 Starting a Game . 11 Placing a Bet . .11 Bankrolls, Credit Cards, Loans . 12 HOYLE® ROYAL SUITE . 13. HOYLE® PLAYER REWARDS . 14. Trophy Case . 15 Customizing HOYLE® CASINO GAMES Environment . 15. Themes . 16. Playing Cards . 17. Playing Games in Full Screen . 17 Setting Game Rules and Options . 17 Changing Player Setting . 18 Talking Face Creator . 19 HOYLE® Computer Players . 19. Tournament Play . 22. Short cut Keys . 23 Viewing Bet Results and Statistics . 23 Game Help . 24 Quitting the Games . 25 Blackjack . 25. Blackjack Variations . 36. Video Blackjack . 42 1 HOYLE® Card Games 2009 Bridge . 44. SYSTEM REQUIREMENTS Canasta . 50. Windows® XP (Home & Pro) SP3/Vista SP1¹, Catch The Ten . 57 Pentium® IV 2 .4 GHz processor or faster, Crazy Eights . 58. 512 MB (1 GB RAM for Vista), Cribbage . 60. 1024x768 16 bit color display, Euchre . 63 64MB VRAM (Intel GMA chipsets supported), 3 GB Hard Disk Space, Gin Rummy . 66. DVD-ROM drive, Hearts . 69. 33 .6 Kbps modem or faster and internet service provider Knockout Whist . 70 account required for internet access . Broadband internet service Memory Match . 71. recommended .² Minnesota Whist . 73. Macintosh® Old Maid . 74. OS X 10 .4 .10-10 .5 .4 Pinochle . 75. Intel Core Solo processor or better, Pitch . 81 1 .5 GHz or higher processor, Poker . 84. 512 MB RAM, 64MB VRAM (Intel GMA chipsets supported), Video Poker . 86 3 GB hard drive space, President . 96 DVD-ROM drive, Rummy 500 . 97. 33 .6 Kbps modem or faster and internet service provider Skat . -

Whist Points and How to Make Them

We inuBt speak by the card, or equivocation will undo us, —Hamlet wtii5T • mm And How to Make Them By C. R. KEILEY G V »1f-TMB OONTINBNTIkL OLUMj NBWYOH 1277 F'RIOE 2 5 Cknxs K^7 PUBLISHED BY C. M. BRIGHT SYRACUSE, N. Y. 1895 WHIST POINTS And How to ^Make Them ^W0 GUL ^ COPYRIGHT, 1895 C. M. BRIGHT INTRODUCTORY. This little book presupposes some knowledge of "whist on the part of the reader, and is intended to contain suggestions rather than fiats. There is nothing new in it. The compiler has simply placed before the reader as concisely as possible some of the points of whist which he thinks are of the most importance in the development of a good game. He hopes it may be of assistance to the learner in- stead of befogging him, as a more pretentious effort might do. There is a reason for every good play in whist. If the manner of making the play is given, the reason for it will occur to every one who can ever hope to become a whist player. WHIST POINTS. REGULAR LEADS. Until you have mastered all the salient points of the game, and are blessed with a partner possessing equal intelligence and information, you will do well to follow the general rule:—Open your hand by leading your longest suit. There are certain well established leads from combinations of high cards, which it would be well for every student of the game to learn. They are easy to remember, and are of great value in giving^ information regarding numerical and trick taking strength simultaneously, ACE. -

The Penguin Book of Card Games

PENGUIN BOOKS The Penguin Book of Card Games A former language-teacher and technical journalist, David Parlett began freelancing in 1975 as a games inventor and author of books on games, a field in which he has built up an impressive international reputation. He is an accredited consultant on gaming terminology to the Oxford English Dictionary and regularly advises on the staging of card games in films and television productions. His many books include The Oxford History of Board Games, The Oxford History of Card Games, The Penguin Book of Word Games, The Penguin Book of Card Games and the The Penguin Book of Patience. His board game Hare and Tortoise has been in print since 1974, was the first ever winner of the prestigious German Game of the Year Award in 1979, and has recently appeared in a new edition. His website at http://www.davpar.com is a rich source of information about games and other interests. David Parlett is a native of south London, where he still resides with his wife Barbara. The Penguin Book of Card Games David Parlett PENGUIN BOOKS PENGUIN BOOKS Published by the Penguin Group Penguin Books Ltd, 80 Strand, London WC2R 0RL, England Penguin Group (USA) Inc., 375 Hudson Street, New York, New York 10014, USA Penguin Group (Canada), 90 Eglinton Avenue East, Suite 700, Toronto, Ontario, Canada M4P 2Y3 (a division of Pearson Penguin Canada Inc.) Penguin Ireland, 25 St Stephen’s Green, Dublin 2, Ireland (a division of Penguin Books Ltd) Penguin Group (Australia) Ltd, 250 Camberwell Road, Camberwell, Victoria 3124, Australia -

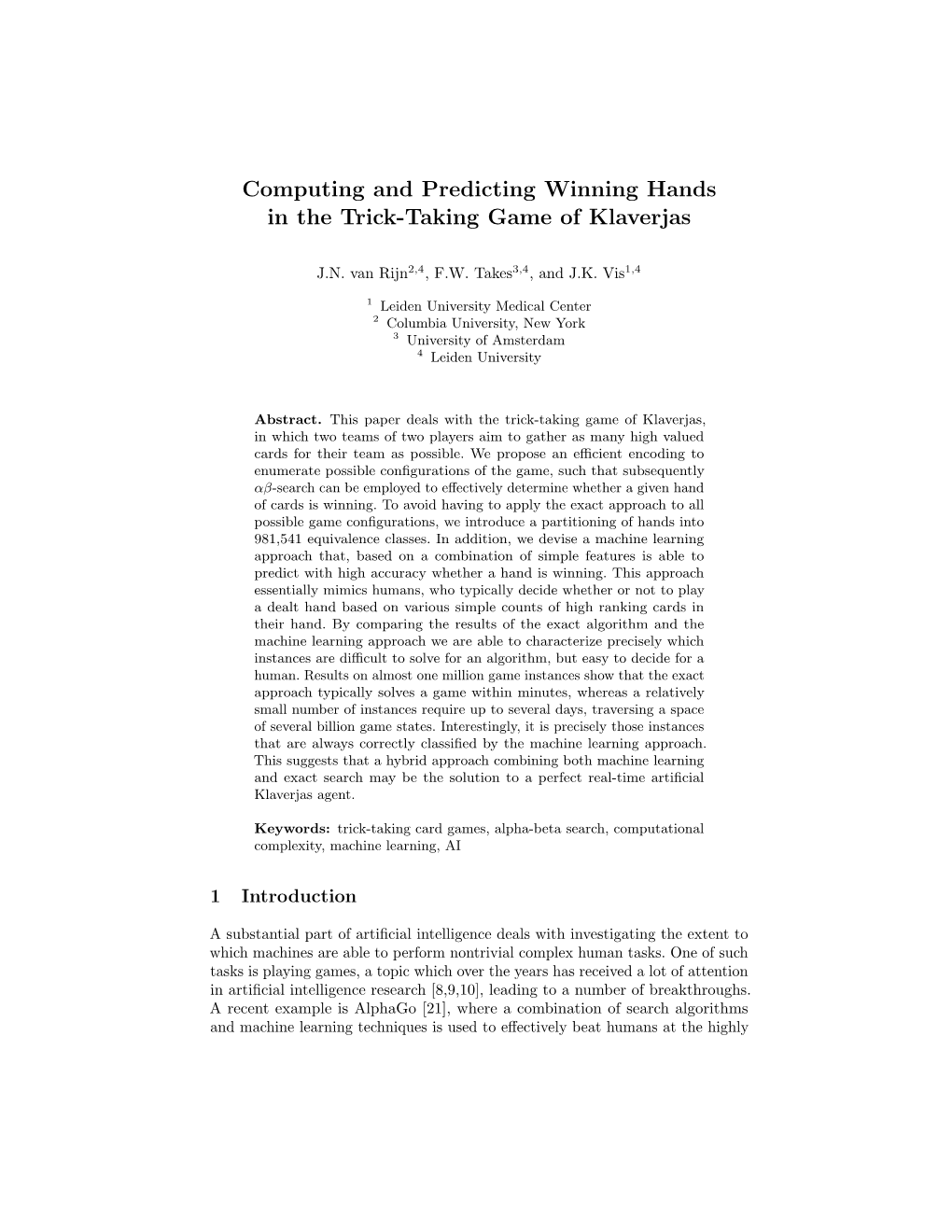

Computing and Predicting Winning Hands in the Trick-Taking Game of Klaverjas

Computing and Predicting Winning Hands in the Trick-Taking Game of Klaverjas Jan N. van Rijn2;4, Frank W. Takes3;4, and Jonathan K. Vis1;4 1 Leiden University Medical Center 2 Columbia University, New York 3 University of Amsterdam 4 Leiden University Abstract. This paper deals with the trick-taking game of Klaverjas, in which two teams of two players aim to gather as many high valued cards for their team as possible. We propose an efficient encoding to enumerate possible configurations of the game, such that subsequently αβ-search can be employed to effectively determine whether a given hand of cards is winning. To avoid having to apply the exact approach to all possible game configurations, we introduce a partitioning of hands into 981;541 equivalence classes. In addition, we devise a machine learning approach that, based on a combination of simple features is able to predict with high accuracy whether a hand is winning. This approach essentially mimics humans, who typically decide whether or not to play a dealt hand based on various simple counts of high ranking cards in their hand. By comparing the results of the exact algorithm and the machine learning approach we are able to characterize precisely which instances are difficult to solve for an algorithm, but easy to decide for a human. Results on almost one million game instances show that the exact approach typically solves a game within minutes, whereas a relatively small number of instances require up to several days, traversing a space of several billion game states. Interestingly, it is precisely those instances that are always correctly classified by the machine learning approach. -

Red Book of Contract Bridge

The RED BOOK of CONTRACT BRIDGE A DIGEST OF ALL THE POPULAR SYSTEMS E. J. TOBIN RED BOOK of CONTRACT BRIDGE By FRANK E. BOURGET and E. J. TOBIN I A Digest of The One-Over-One Approach-Forcing (“Plastic Valuation”) Official and Variations INCLUDING Changes in Laws—New Scoring Rules—Play of the Cards AND A Recommended Common Sense Method “Sound Principles of Contract Bridge” Approved by the Western Bridge Association albert?whitman £7-' CO. CHICAGO 1933 &VlZ%z Copyright, 1933 by Albert Whitman & Co. Printed in U. S. A. ©CIA 67155 NOV 15 1933 PREFACE THE authors of this digest of the generally accepted methods of Contract Bridge have made an exhaustive study of the Approach- Forcing, the Official, and the One-Over-One Systems, and recog¬ nize many of the sound principles advanced by their proponents. While the Approach-Forcing contains some of the principles of the One-Over-One, it differs in many ways with the method known strictly as the One-Over-One, as advanced by Messrs. Sims, Reith or Mrs, Kerwin. We feel that many of the millions of players who have adopted the Approach-Forcing method as advanced by Mr. and Mrs. Culbertson may be prone to change their bidding methods and strategy to conform with the new One-Over-One idea which is being fused with that system, as they will find that, by the proper application of the original Approach- Forcing System, that method of Contract will be entirely satisfactory. We believe that the One-Over-One, by Mr. Sims and adopted by Mrs. -

Pinochle-Rules.Pdf

Pinochle From Wikipedia, the free encyclopedia Pinochle (sometimes pinocle, or penuchle) is a trick-taking Pinochle game typically for two, three or four players and played with a 48 card deck. Derived from the card game bezique, players score points by trick-taking and also by forming combinations of cards into melds. It is thus considered part of a "trick-and- meld" category which also includes a cousin, belote. Each hand is played in three phases: bidding, melds, and tricks. In some areas of the United States, such as Oklahoma and Texas, thumb wrestling is often referred to as "pinochle". [citation needed] The two games, however, are not related. The jack of diamonds and the queen of spades are Contents the "pinochle" meld of pinochle. 1 History Type Trick-taking 2 The deck Players 4 in partnerships or 3 3 Dealing individually, variants exist for 2- 4 The auction 6 or 8 players 5 Passing cards 6 Melding Skills required Strategy 7 Playing tricks Social skills 8 Scoring tricks Teamwork 9 Game variations 9.1 Two-handed Pinochle Card counting 9.2 Three-handed Pinochle Cards 48 (double 24 card deck) or 80 9.3 Cutthroat Pinochle (quadruple 20 card deck) 9.4 Four-handed Pinochle 9.5 Five-handed and larger Pinochle Deck Anglo-American 9.6 Check Pinochle 9.7 Double-deck Pinochle Play Clockwise 9.8 Racehorse Pinochle Card rank A 10 K Q J 9 9.9 Double-deck Pinochle for eight players (highest to 10 See also lowest) 11 References 12 External links Playing time 1 to 5 hours Random chance Medium History Related games Pinochle derives from the game bezique. -

History of the Modern Point Count System in Large Part from Frank Hacker’S Article from Table Talk in January 2013

History of the Modern Point Count System In large part from Frank Hacker’s article from Table Talk in January 2013 Contract bridge (as opposed to auction bridge) was invented in the late 1920s. During the first 40 years of contract bridge: Ely Culbertson (1891 – 1955) and Charles Goren (1901 -1991) were the major contributors to hand evaluation. It’s of interest to note that Culbertson died in Brattleboro, Vermont. Ely Culbertson did not use points to evaluate hands. Instead he used honor tricks. Goren and Culbertson overlapped somewhat. Charles Goren popularized the point count with his 1949 book “Point Count Bidding in Contract Bridge.” This book sold 3 million copies and went through 12 reprintings in its first 5 years. Charles Goren does not deserve the credit for introducing or developing the point count. Bryant McCampbell introduced the 4-3-2-1 point count in 1915, not for auction bridge, but for auction pitch. Bryant McCampbell was also an expert on auction bridge and he published a book on auction bridge in 1916. This book is still in print and available from Amazon. Bryant Mc Campbell was only 50 years old when he died at 103 East Eighty-fourth Street in NY City on May 6 1927 after an illness of more than two years. He was born on January 2, 1877 in St Louis? to Amos Goodwin Mc Campbell and Sarah Leavelle Bryant. He leaves a wife, Irene Johnson Gibson of Little Rock, Ark. They were married on October 16, 1915. Bryant was a retired Textile Goods Merchant and was in business with his younger brother, Leavelle who was born on May 28, 1879. -

Het Vrije Volk : Democratisch-Socialistisch Dagblad

Maandag. 12 April 1954 ; Negende Jaargang Nummer 2717 UITG. N.V. Dfc ARBEIDERSPERS, DIRECTIB H. t. KUILENBURG en C f. d. WAERDEN PRIJS 50 et. pel week, / 6.50 pei kwartaal LOSSE NUMMERS 10 Ct. GIKO «55333 BUREAU JACOj. PERKSTR —9, ROTTERDAM Het VolkVrije TEL. 111030 -' REDAt'T'E 28693, 11394». 84412 Democratisch-socialistisch dagblad HOOFDREDACTEUR: K. VOSKUIL ... ADVERTENTIES: 28446, 28.113, 28:102- Grote ommezwaai BijBelgische verkiezingen SOCIALISTISCHE PARTIJ IS Marie Besnard DE GROTE : komt vrij WINNAAR Vrienden en verwanten van Marie Besnard, de' 57-jarige Franse weduwe die beschuldigd was van vele gifmoor- den, hebben de borgsom van 12.000 Belgische verkiezingen gulden bijeengebracht, die voor haar voorlopige vrijlating ■ was geëist. Katholieken verliezen Waarschijnlijk zal mevrouw Bes- nard, die bijna vijf jaar in de gevan- genis heeft gezeten, vandaag of mor- gen op vrije voeten worden gesteld. Een felle brand brak uit aan boord van het Duitse ms. Tanga in. de Merwehaven. Zes uur lang was. de de meerderheid bezig, eer Rotterdamse brandweer \. Brandstichter in actie duidelijk werd, dat het schip niet ten onze onder zou gaan. Oorzaak: een haven- (Van Brusselse correspondent) arbeider zocht met een. brandende DE SOCIALISTISCHE PARTIJ is als de aansteker naar, een verloren tabaks- % doos '■*. .Ï".'-S."s voorschijn getreden uit de algemene verkiezingen van Zondag bij Eindhoven; vijf ■ in België. De Katholieke Volkspartij heeft haar meerderheid ' ' verloren en is met ongeveer tien pet. achteruitgegaan; de Kenya trekt socialisten wonnen een groot deel van dit katholieke verlies bos- en heidebranden aanbod aan en gingen bovendien strijken met het verlies der communisten. (Van een onzer,verslaggevers) De socialistische stemmenwinst zal tussen détwaalf en vijftien VIJF BOS- EN HEIDEBRANDEN hebben dit weekeind in de driehoek pet. -

A Sampling of Card Games

A Sampling of Card Games Todd W. Neller Introduction • Classifications of Card Games • A small, diverse, simple sample of card games using the standard (“French”) 52-card deck: – Trick-Taking: Oh Hell! – Shedding: President – Collecting: Gin Rummy – Patience/Solitaire: Double Freecell Card Game Classifications • Classification of card games is difficult, but grouping by objective/mechanism clarifies similarities and differences. • Best references: – http://www.pagat.com/ by John McLeod (1800+ games) – “The Penguin Book of Card Games” by David Parlett (250+) Parlett’s Classification • Trick-Taking (or Trick-Avoiding) Games: – Plain-Trick Games: aim for maximum tricks or ≥/= bid tricks • E.g. Bridge, Whist, Solo Whist, Euchre, Hearts*, Piquet – Point-Trick Games: aim for maximum points from cards in won tricks • E.g. Pitch, Skat, Pinochle, Klabberjass, Tarot games *While hearts is more properly a point-trick game, many in its family have plain-trick scoring elements. Piquet is another fusion of scoring involving both tricks and cards. Parlett’s Classification (cont.) • Card-Taking Games – Catch-and-collect Games (e.g. GOPS), Fishing Games (e.g. Scopa) • Adding-Up Games (e.g. Cribbage) • Shedding Games – First-one-out wins (Stops (e.g. Newmarket), Eights (e.g. Crazy 8’s, Uno), Eleusis, Climbing (e.g. President), last-one-in loses (e.g. Durak) • Collecting Games – Forming sets (“melds”) for discarding/going out (e.g. Gin Rummy) or for scoring (e.g. Canasta) • Ordering Games, i.e. Competitive Patience/Solitaire – e.g. Racing Demon (a.k.a. Race/Double Canfield), Poker Squares • Vying Games – Claiming (implicitly via bets) that you have the best hand (e.g.