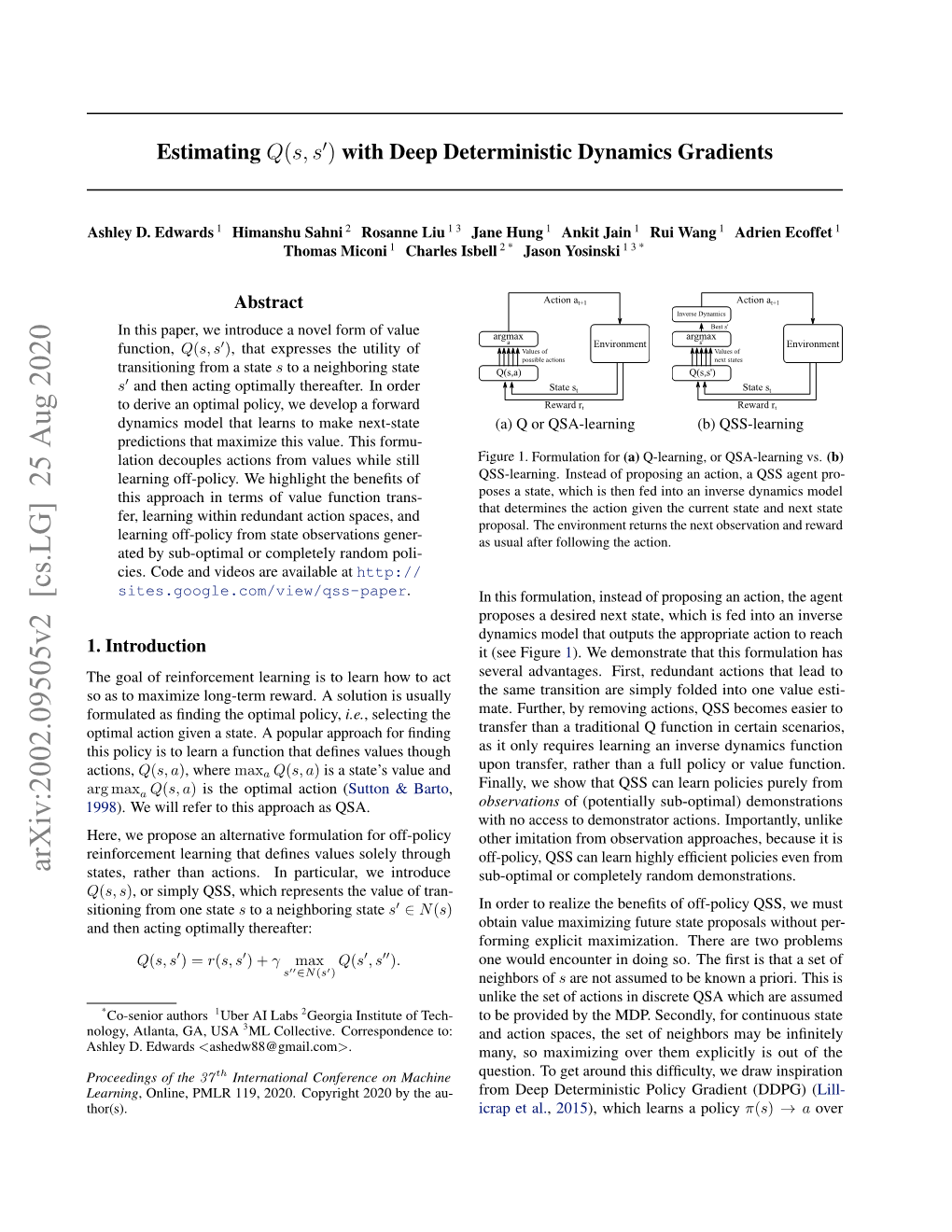

Estimating Q(S,S') with Deep Deterministic Dynamics Gradients

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

The Origin of the Peculiarities of the Vietnamese Alphabet André-Georges Haudricourt

The origin of the peculiarities of the Vietnamese alphabet André-Georges Haudricourt To cite this version: André-Georges Haudricourt. The origin of the peculiarities of the Vietnamese alphabet. Mon-Khmer Studies, 2010, 39, pp.89-104. halshs-00918824v2 HAL Id: halshs-00918824 https://halshs.archives-ouvertes.fr/halshs-00918824v2 Submitted on 17 Dec 2013 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. Published in Mon-Khmer Studies 39. 89–104 (2010). The origin of the peculiarities of the Vietnamese alphabet by André-Georges Haudricourt Translated by Alexis Michaud, LACITO-CNRS, France Originally published as: L’origine des particularités de l’alphabet vietnamien, Dân Việt Nam 3:61-68, 1949. Translator’s foreword André-Georges Haudricourt’s contribution to Southeast Asian studies is internationally acknowledged, witness the Haudricourt Festschrift (Suriya, Thomas and Suwilai 1985). However, many of Haudricourt’s works are not yet available to the English-reading public. A volume of the most important papers by André-Georges Haudricourt, translated by an international team of specialists, is currently in preparation. Its aim is to share with the English- speaking academic community Haudricourt’s seminal publications, many of which address issues in Southeast Asian languages, linguistics and social anthropology. -

21Ia'b;Q'c/Ablc (C/A)(C/B).__(C)(C/Ab)

ON THE q-ANALOG OF KUMMER’$ THEOREM AND APPLICATIONS GEORGE E. ANDREWS 1. Introduction. The q-analogs for Gauss’s summation of 2Fl[a, b; c; 1] and Saalschutz’s summation of 3F2[a, b, -n; c, a b c n 1; 1] are well known, namely, E. Heine [8; p. 107, Equation (6)] showed that (1.1) 21Ia’ b;q’c/ablc (c/a)(c/b).__(c)(c/ab) where - - and (a). (a; q), (1 -a)(1 -aq) (1 -aq-a), (a) (a; q),(R) lim,. (a),. (See also [12; p. 97, Equation (3.3.2.2)].) F. H. Jackson [9; p. 145] showed that b, q-"; q, (c/a),,(c/b). (c),,(c/ab), a2ia, c, abq/cq ql The q-analog of Dixon’s summation of 3F[a, b, c; 1 a b, 1 -[- a c; 1] was more difficult to find, and indeed only a partial analog is true; namely, W. N. Bailey [5] and F. tI. Jackson [10; p. 167, Equation (2)] proved that if a where n is a positive integer, then b, c; q, qa[ bc | (b/a),(c/a)(qa)(bca-). (1.3) 34 | ba-)-(ai (a- qa) bca-1), b,c There are three other well-known summations for the .F1 series, namely, Kummer’s theorem [12; p. 243, Equation (III. 5)] r(1%a-- b) (1.4) 2F[a, b; 1 a- b;--1] r(1 Gauss’s second theorem [12; p. 243, Equation III. 6)] Received December 23, 1972. The author ws partiMly supported by NtioaM Science Foundation Grat GP-23774. -

Proposal for Generation Panel for Latin Script Label Generation Ruleset for the Root Zone

Generation Panel for Latin Script Label Generation Ruleset for the Root Zone Proposal for Generation Panel for Latin Script Label Generation Ruleset for the Root Zone Table of Contents 1. General Information 2 1.1 Use of Latin Script characters in domain names 3 1.2 Target Script for the Proposed Generation Panel 4 1.2.1 Diacritics 5 1.3 Countries with significant user communities using Latin script 6 2. Proposed Initial Composition of the Panel and Relationship with Past Work or Working Groups 7 3. Work Plan 13 3.1 Suggested Timeline with Significant Milestones 13 3.2 Sources for funding travel and logistics 16 3.3 Need for ICANN provided advisors 17 4. References 17 1 Generation Panel for Latin Script Label Generation Ruleset for the Root Zone 1. General Information The Latin script1 or Roman script is a major writing system of the world today, and the most widely used in terms of number of languages and number of speakers, with circa 70% of the world’s readers and writers making use of this script2 (Wikipedia). Historically, it is derived from the Greek alphabet, as is the Cyrillic script. The Greek alphabet is in turn derived from the Phoenician alphabet which dates to the mid-11th century BC and is itself based on older scripts. This explains why Latin, Cyrillic and Greek share some letters, which may become relevant to the ruleset in the form of cross-script variants. The Latin alphabet itself originated in Italy in the 7th Century BC. The original alphabet contained 21 upper case only letters: A, B, C, D, E, F, Z, H, I, K, L, M, N, O, P, Q, R, S, T, V and X. -

Defining Z in Q 75

Annals of Mathematics 183 (2016), 73{93 http://dx.doi.org/10.4007/annals.2016.183.1.2 Defining Z in Q By Jochen Koenigsmann Abstract We show that Z is definable in Q by a universal first-order formula in the language of rings. We also present an 89-formula for Z in Q with just one universal quantifier. We exhibit new diophantine subsets of Q like the complement of the image of the norm map under a quadratic extension, and we give an elementary proof for the fact that the set of nonsquares is diophantine. 1. Z is universally definable in Q Hilbert's 10th problem was to find a general algorithm for deciding, given n any n and any polynomial f 2 Z[x1; : : : ; xn], whether or not f has a zero in Z . Building on earlier work by Martin Davis, Hilary Putnam and Julia Robinson, Yuri Matiyasevich proved in 1970 that there can be no such algorithm. In particular, the existential first-order theory Th9(Z) of Z (in the language of rings Lring := f+; ·; 0; 1g) is undecidable. Hilbert's 10th problem over Q, i.e., the question whether Th9(Q) is decidable, is still open. If one had an existential (or diophantine) definition of Z in Q (i.e., a definition by an existential first-order Lring-formula), then Th9(Z) would be interpretable in Th9(Q), and the answer would, by Matiyasevich's Theorem, again be no. But it is still open whether Z is existentially definable in Q. The earliest first-order definition of Z in Q, which is due to Julia Robinson ([Rob49]), can be expressed by an 898-formula of the shape φ(t): 8x18x29y1 · · · 9y78z1 · · · 8z6 f(t; x1; x2; y1; : : : ; y7; z1; : : : ; z6) = 0 for some f 2 Z[t; x1; x2; y1; : : : ; y7; z1; : : : ; z6]; i.e., for any t 2 Q, t 2 Z if and only if φ(t) holds in Q: The research on this paper started while the author enjoyed the hospitality of the Max- Planck-Institut Bonn. -

Special Characters in Aletheia

Special Characters in Aletheia Last Change: 28 May 2014 The following table comprises all special characters which are currently available through the virtual keyboard integrated in Aletheia. The virtual keyboard aids re-keying of historical documents containing characters that are mostly unsupported in other text editing tools (see Figure 1). Figure 1: Text input dialogue with virtual keyboard in Aletheia 1.2 Due to technical reasons, like font definition, Aletheia uses only precomposed characters. If required for other applications the mapping between a precomposed character and the corresponding decomposed character sequence is given in the table as well. When writing to files Aletheia encodes all characters in UTF-8 (variable-length multi-byte representation). Key: Glyph – the icon displayed in the virtual keyboard. Unicode – the actual Unicode character; can be copied and pasted into other applications. Please note that special characters might not be displayed properly if there is no corresponding font installed for the target application. Hex – the hexadecimal code point for the Unicode character Decimal – the decimal code point for the Unicode character Description – a short description of the special character Origin – where this character has been defined Base – the base character of the special character (if applicable), used for sorting Combining Character – combining character(s) to modify the base character (if applicable) Pre-composed Character Decomposed Character (used in Aletheia) (only for reference) Combining Glyph -

The Role of Gut Microbiota in an Ischemic Stroke

International Journal of Molecular Sciences Review The Role of Gut Microbiota in an Ischemic Stroke Ryszard Pluta 1,* , Sławomir Januszewski 1 and Stanisław J. Czuczwar 2 1 Laboratory of Ischemic and Neurodegenerative Brain Research, Mossakowski Medical Research Institute, Polish Academy of Sciences, 02-106 Warsaw, Poland; [email protected] 2 Department of Pathophysiology, Medical University of Lublin, 20-090 Lublin, Poland; [email protected] * Correspondence: [email protected]; Tel.: +48-22-6086-540 Abstract: The intestinal microbiome, the largest reservoir of microorganisms in the human body, plays an important role in neurological development and aging as well as in brain disorders such as an ischemic stroke. Increasing knowledge about mediators and triggered pathways has contributed to a better understanding of the interaction between the gut-brain axis and the brain-gut axis. Intestinal bacteria produce neuroactive compounds and can modulate neuronal function, which affects behavior after an ischemic stroke. In addition, intestinal microorganisms affect host metabolism and immune status, which in turn affects the neuronal network in the ischemic brain. Here we discuss the latest results of animal and human research on two-way communication along the gut-brain axis in an ischemic stroke. Moreover, several reports have revealed the impact of an ischemic stroke on gut dysfunction and intestinal dysbiosis, highlighting the delicate play between the brain, intestines and microbiome after this acute brain injury. Despite our growing knowledge of intestinal microflora in shaping brain health, host metabolism, the immune system and disease progression, its therapeutic options in an ischemic stroke have not yet been fully utilized. -

Q: a Super-Quick, Articulation-Invariant Stroke-Gesture Recognizer for Low-Resource Devices

$Q: A Super-Quick, Articulation-Invariant Stroke-Gesture Recognizer for Low-Resource Devices Radu-Daniel Vatavu Lisa Anthony Jacob O. Wobbrock MintViz Lab MANSiD Center Department of CISE Information School DUB Group University Stefan cel Mare of Suceava University of Florida University of Washington Suceava 720229, Romania Gainesville, FL 32611, USA Seattle, WA 98195, USA [email protected] [email protected]fl.edu [email protected] ABSTRACT We introduce $Q, a super-quick, articulation-invariant point- cloud stroke-gesture recognizer for mobile, wearable, and embedded devices with low computing resources. $Q ran up to 142× faster than its predecessor $P in our benchmark eval- uations on several mobile CPUs, and executed in less than 3% of $P’s computations without any accuracy loss. In our most extreme evaluation demanding over 99% user-independent recognition accuracy, $P required 9.4s to run a single classifi- cation, while $Q completed in just 191ms (a 49× speed-up) on a Cortex-A7, one of the most widespread CPUs on the mobile market. $Q was even faster on a low-end 600-MHz processor, on which it executed in only 0.7% of $P’s computa- Figure 1. Classification times for $P and $Q (left) on five CPU architec- tures common for today’s mobile, wearable, and embedded platforms tions (a 142× speed-up), reducing classification time from two for a set of 16 distinct stroke-gestures with user-independent accuracy re- minutes to less than one second. $Q is the next major step for quirements of >99%. Speed-ups of 46× on average and up to 142× over the “$-family” of gesture recognizers: articulation-invariant, $P [50] make $Q very fast and suitable for next generation miniaturized extremely fast, accurate, and implementable on top of $P with low-resource devices, like the Gear Fit smartwatch shown at right. -

As Structure Group. Denote by Ci Thechern Olasses Of

110 MATHEMATICS: F. HIRZEBRUCH PROC. X. A. S. the Institute for Advanced Study Electronic Computer, and Mr. E. Hovmoller who analyzed the charts used in the forecasts. * This work was sponsored jointly by O.N.R. and the Geophysics Researah Directorate of the Air Force Cambridge Research Center, under contract N6-ori-139 with O.N.R. It was presented at a joint meeting of the American Meteorological Society and the Fluid Dynamics Section of the American Physical Society in Washington, D. C., May 1953. 1 Charney, J. G., and Phillips, N. A., "Numerical Integration of the Quasi-geostrophic Equations for Barotropic and Simple Baroclinic Flows," J. Meteorol., 10, No. 2, 71-99 (1953). 2Charney, J. G., "On the Scale of Atmospheric Motions," Geofye. Publikcasjoner, 0810, 17, No. 2, 17 pp. (1948). 8 This iteration method is essentially the extrapolated Liebmann method. See Frankel, S. P., "Convergence Rates of Iterative-Treatments of Partial Differential Equations," Math. Tables and Other Aids to Computation, 4, No. 30, 65-75 (1950).; and Young, D., "Iterative Methods for Solving Partial Difference Equations of Elliptic Type" (1951). Seminar given at Numerical Analysis Seminar of Ballistic Research Laboratories,-Aberdeen Proving Ground, Md. (based on Ph.D. thesis by author, Harvard University, Cambridge, Mass., 1950). ARITHMETIC GENERA AND THE THEOREM OF RIEMANN-ROCH FOR ALGEBRAIC VARIETIES BY FRIEDRICH HIRZEBRUCH THE INSTITUTE FOR ADVANCED STUDY Communicated by S. Lefschetz, December 21, 1963 Introduction.-In a preceding note' we posed the problem whether the arithmetic genus II(V.) of a (non-singular) algebraic variety2 coincides with the Todd genus T(V.). -

Continuous Stroke Printing

Children’s Rehabilitation Services CONTINUOUS STROKE PRINTING The more times a beginning writer has to lift a pencil, the harder it becomes to make a legible letter. Every pencil lift creates another opportunity to make a mistake. Fewer strokes means fewer steps to remember in forming each letter and decreases directionality confusion. It also increases the chances the student will develop the correct “motor memory” for the letter formation. The development of motor “memories” for the patterns used in printing is what helps the student’s printing to become fast and automatic, without sacrificing legibility. Continuous stroke printing may also help eliminate letter reversals. As the student is not lifting his/her pencil, there is less opportunity for confusion around which side of the “ball” to place the “stick” on (e.g., ‘b’ vs. ‘d’). Likewise, as the student does not have to pause and think about where to place each connecting stroke, the speed and flow of printing often improves. Which Letters Are Non-Lifting? Uppercase lifting letters include: A, E, F, H, I, J, K, Q, T, X, Y Uppercase non-lifting letters include: B, C, D, G, L, M, N, O, P, R, S, U, V, W, Z Lowercase lifting letters include: f, i, j, k, t, x, y Lowercase non-lifting letters include: a, b, c, d, e, g, h, l, m, n, o, p, q, r, s, u, v, w, z Please see the following page for a stroke directionality chart. Tips to Make Teaching Easier Continuous stroke Encourage the student to begin all uppercase letters from printing helps: the top and lowercase letters from the middle or top, as . -

Fonts for Latin Paleography

FONTS FOR LATIN PALEOGRAPHY Capitalis elegans, capitalis rustica, uncialis, semiuncialis, antiqua cursiva romana, merovingia, insularis majuscula, insularis minuscula, visigothica, beneventana, carolina minuscula, gothica rotunda, gothica textura prescissa, gothica textura quadrata, gothica cursiva, gothica bastarda, humanistica. User's manual 5th edition 2 January 2017 Juan-José Marcos [email protected] Professor of Classics. Plasencia. (Cáceres). Spain. Designer of fonts for ancient scripts and linguistics ALPHABETUM Unicode font http://guindo.pntic.mec.es/jmag0042/alphabet.html PALEOGRAPHIC fonts http://guindo.pntic.mec.es/jmag0042/palefont.html TABLE OF CONTENTS CHAPTER Page Table of contents 2 Introduction 3 Epigraphy and Paleography 3 The Roman majuscule book-hand 4 Square Capitals ( capitalis elegans ) 5 Rustic Capitals ( capitalis rustica ) 8 Uncial script ( uncialis ) 10 Old Roman cursive ( antiqua cursiva romana ) 13 New Roman cursive ( nova cursiva romana ) 16 Half-uncial or Semi-uncial (semiuncialis ) 19 Post-Roman scripts or national hands 22 Germanic script ( scriptura germanica ) 23 Merovingian minuscule ( merovingia , luxoviensis minuscula ) 24 Visigothic minuscule ( visigothica ) 27 Lombardic and Beneventan scripts ( beneventana ) 30 Insular scripts 33 Insular Half-uncial or Insular majuscule ( insularis majuscula ) 33 Insular minuscule or pointed hand ( insularis minuscula ) 38 Caroline minuscule ( carolingia minuscula ) 45 Gothic script ( gothica prescissa , quadrata , rotunda , cursiva , bastarda ) 51 Humanist writing ( humanistica antiqua ) 77 Epilogue 80 Bibliography and resources in the internet 81 Price of the paleographic set of fonts 82 Paleographic fonts for Latin script 2 Juan-José Marcos: [email protected] INTRODUCTION The following pages will give you short descriptions and visual examples of Latin lettering which can be imitated through my package of "Paleographic fonts", closely based on historical models, and specifically designed to reproduce digitally the main Latin handwritings used from the 3 rd to the 15 th century. -

Stability Testing of Biotechnological/Biological Products

European Medicines Agency July 1996 CPMP/ICH/138/95 ICH Topic Q 5 C Quality of Biotechnological Products: Stability Testing of Biotechnological/Biological Products Step 5 NOTE FOR GUIDANCE ON QUALITY OF BIOTECHNOLO-GICAL PRODUCTS: STABILITY TESTING OF BIOTECHNOLOGICAL/BIOLOGICAL PRODUCTS (CPMP/ICH/138/95) TRANSMISSION TO CPMP December 1995 FINAL APPROVAL BY CPMP December 1995 PROPOSED DATE FOR COMING INTO OPERATION July 1996 7 Westferry Circus, Canary Wharf, London, E14 4HB, UK Tel. (44-20) 74 18 85 75 Fax (44-20) 75 23 70 40 E-mail: [email protected] http://www.emea.eu.int EMEA 2006 Reproduction and/or distribution of this document is authorised for non commercial purposes only provided the EMEA is acknowledged QUALITY OF BIOTECHNOLOGICAL PRODUCTS: STABILITY TESTING OF BIOTECHNOLOGICAL/BIOLOGICAL PRODUCTS Annex to the ICH Harmonised Tripartite Guideline for the Stability Testing of New Drug Substances and Products 1. PREAMBLE The guidance stated in the ICH Harmonised Tripartite Guideline ‘Stability Testing of New Drug Substances and Products’ (27 October 1993) applies in general to biotechnological/biological products. However, biotechnological/biological products do have distinguishing characteristics to which consideration should be given in any well-defined testing program designed to confirm their stability during the intended storage period. For such products, in which the active components are typically proteins and/or polypeptides, maintenance of molecular conformation and, hence of biological activity, is dependent on noncovalent as well as covalent forces. The products are particularly sensitive to environmental factors such as temperature changes, oxidation, light, ionic content, and shear. In order to ensure maintenance of biological activity and to avoid degradation, stringent conditions for their storage are usually necessary. -

MPS-450A-Q-KIT 450W Pulse Start Metal Halide

MPS-450A-Q-KIT 450W PULSE START METAL HALIDE PRODUCT FEATURES • ANSI Designation: M144 • Capacitor Voltage Rating: 400 V • Line Voltage: 120/208/240/277 • Capacitor Temperature Rating: 90ºC • Regulation: 10% • Winding Materials: Cu/Cu • Frequency: 60 Hz • Lamp Starter: XG-04 • Recommended Fuse (A):10/8/5/5 • Agency Certification: RU, cRU • Circuit Type: CWA • Maximum Distance to Lamp: N/A • Insulation Class: 180ºC • 2 Year Warranty • Capacitor Value: 26.5 MF LAMP TYPE: 450W MH-PS INPUT VOLTAGE: Quad Tap (120/208/240/277) CIRCUIT TYPE: CWA ELECTRICAL SPECIFICATIONS LINE VOLT POWER LINE CURRENT INPUT OPEN CIRCUIT MINIMUM UL TEMP WIRING (V) FACTOR OPERATING OPEN START POWER (W) VOLTAGE (V) START TEMP CODE DIAGRAM 120 High 4.41 4.20 4.79 505 313 -30ºC C H3 208 High 2.56 2.49 1.93 505 313 -30ºC C H3 240 High 2.20 2.12 1.71 505 313 -30ºC C H3 277 High 1.93 1.87 1.49 505 313 -30ºC C H3 WIRING DIAGRAMS DIAGRAM H3 BALLAST/TRANSFORMER CAP ALL UNUSED WIRES 277V LAMP LAMP LINE HOT 277V 240V SOCKET BLACK 208V PIN (BLACK) 120V X3 240V COM X3 X1 LINE NEUTRAL LAMP SOCKET 208V WHITE SHELL (WHITE) COM IGNITOR X1 LAMP X3 120V CAP CAP IGNITOR LAMP X2 COM CAP X2 LINE RESISTOR CAPACITOR IS CAP NOT OPTIONAL CAPACITOR COLORS SHOWN REPRESENT THE MOST COMMON GONFIGURATION. LAMP ON CWA MODELS COM HOWEVER, DEPENDING ON THE APPLICATION AND LOCAL CODE, COM SLIGHT DIFFERENCES MAY EXIST. Keystone Technologies, LLC • P.O. Box 246, Ambler PA 19002 • Phone (800) 464-2680 • Fax (215) 628-4412 • www.keystoneballast.com Specifications subject to change.