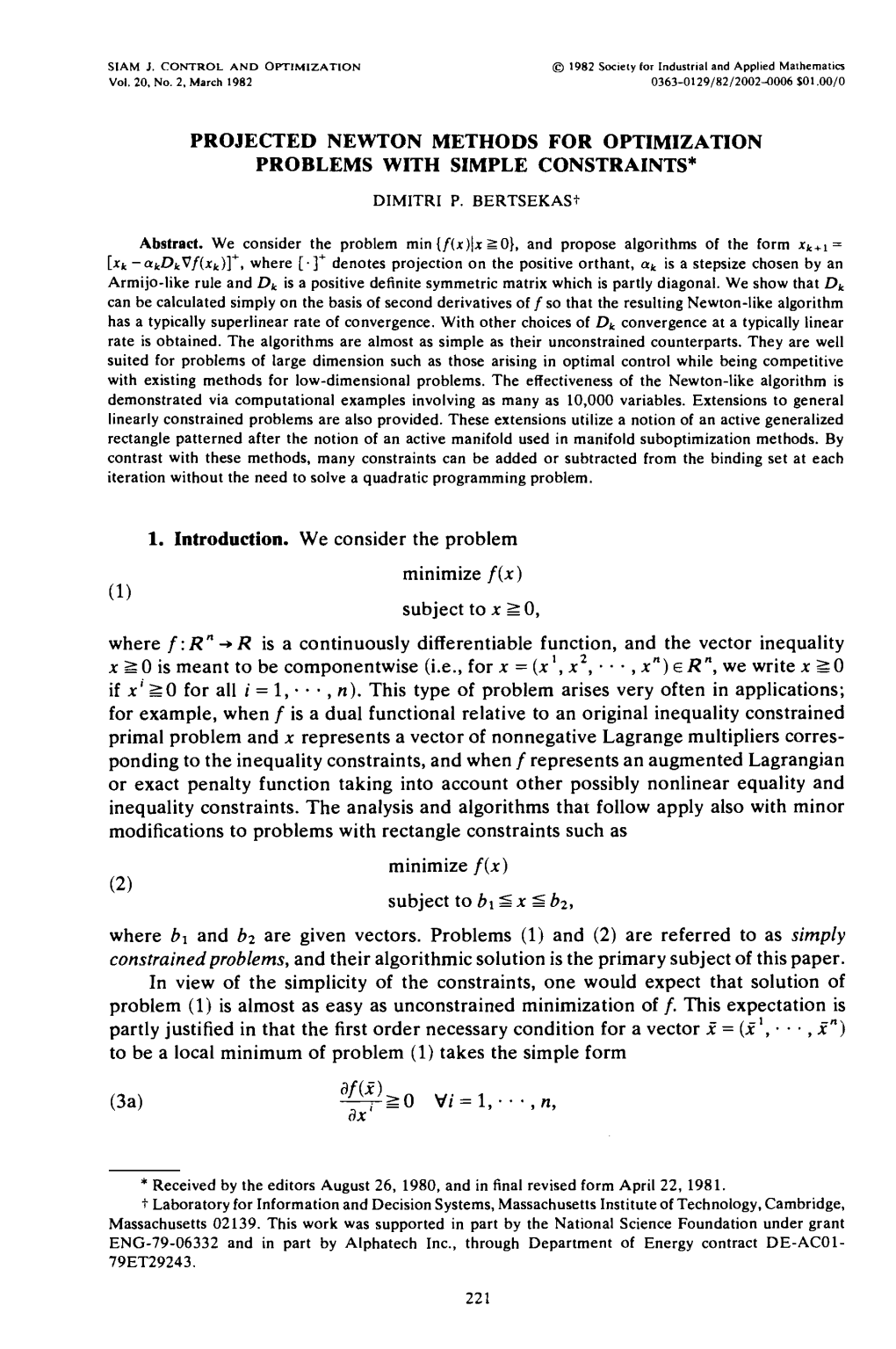

Projected Newton Methods for Optimization Problems with Simple Constraints*

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


War Memorials in Organizational Memory: a Case Study of the Bank of England

War memorials in organizational memory: a case study of the Bank of England. Article, Published Version. Creative Commons: Attribution-Noncommercial-No Derivative Works 4.0. Open Access. Newton, L. and Barnes, V. (2018) War memorials in organizational memory: a case study of the Bank of England. Management and Organizational History, 13 (4). pp. 309-333. ISSN 1744-9367 doi: https://doi.org/10.1080/17449359.2018.1534596 Available at http://centaur.reading.ac.uk/79070/ It is advisable to refer to the publisher’s version if you intend to cite from the work. Publisher: Taylor and Francis. All outputs in CentAUR are protected by Intellectual Property Rights law, including copyright law. Copyright and IPR is retained by the creators or other copyright holders. Terms and conditions for use of this material are defined in the End User Agreement. CentAUR: Central Archive at the University of Reading (www.reading.ac.uk/centaur). Management & Organizational History, ISSN: 1744-9359 (Print) 1744-9367 (Online). Journal homepage: https://www.tandfonline.com/loi/rmor20 © 2018 The Author(s).

Michael Faraday: Scientific Insights from the Burning of a Candle: A Presentation for the 3rd World Candle Congress

Michael Faraday: Scientific Insights from the Burning of a Candle. A presentation for The 3rd World Candle Congress by Carl W. Hudson, Ph.D., Wax Technical Advisor, Retired & Winemaker. 8-July-2010, M. Faraday Presentation by C.W. Hudson. Two-Part Presentation: 1. Michael Faraday: Scientist, Researcher, Lecturer, Inventor. 2. The Chemical History of a Candle: The Lectures, The Experiments, The Teachings & Learnings. Information Sources: • Michael Faraday – The Chemical History of a Candle 2002 (Dover, Mineola, NY) • J.G. Crowther - Men of Science 1936 (W.W. Norton & Co., NY, NY) • The Internet; Wikipedia. Michael Faraday (1791-1867): Monumental Scientist • J.G. Crowther called Faraday “The greatest physicist of the 19th century & the greatest of all experimental investigators of physical nature.” • Albert Einstein recognized Faraday’s importance by comparing his place in scientific history to that of Galileo • Einstein kept a photograph of Faraday alongside a painting of Sir Isaac Newton in his study. Faraday: a Prolific Reader & Writer • Born poor, Faraday adapted well to living by simple means & developed a strong work ethic • Completed 7 yr apprenticeship as a bookbinder & bookseller • Apprenticeship afforded opportunity to read extensively, especially books on science • In 1812 (20 yr old) Faraday attended lectures by Sir Humphry Davy at Royal Institution • Faraday later sent Davy 300-page book (!) based on notes taken during the lectures

The Old Pangbournian Record Volume 2

The Old Pangbournian Record Volume 2: Casualties in War 1917-2020. Collected and written by Robin Knight (56-61). The Old Pangbournian Society. First published in the UK 2020. The Old Pangbournian Society Copyright © 2020. The moral right of the Old Pangbournian Society to be identified as the compiler of this work is asserted in accordance with Section 77 of the Copyright, Design and Patents Act 1988. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means electronic, mechanical, photocopying, recording or otherwise without the prior consent of the Old Pangbournian Society in writing. All photographs are from personal collections or publicly-available free sources. Back Cover: © Julie Halford – Keeper of Roll of Honour Fleet Air Arm, RNAS Yeovilton. ISBN 978-095-6877-031. Papers used in this book are natural, renewable and recyclable products sourced from well-managed forests. Typeset in Adobe Garamond Pro, designed and produced by NP Design & Print Ltd, Wallingford, U.K. “Beloved by many. Death hides but it does not divide.”* (*from a headstone dedication to R.E.F. Howard (30-33)) Foreword: In a global and total war such as 1939-45, one in which our national survival was at stake, sacrifice became commonplace, almost routine. Today, notwithstanding Covid-19, the scale of losses endured in the World Wars of the 20th century is almost incomprehensible. Both were extremely impressive leaders, soldiers and human beings. For anyone associated with Pangbourne, this continued appetite and affinity for service is no

Or, If the Sine Functions Be Eliminated by Means of (11)

52 BINOMIAL THEOREM AND NEWTON'S MONUMENT. [Nov., or, if the sine functions be eliminated by means of (11), e <*i : <** : <xz = X^ptptf : K(P*ptf : A3(Pi#*) . (53) While (52) does not enable us to construct the point of least attraction, it furnishes a solution of the converse problem: to determine the ratios of the masses of three points so as to make the sum of their attractions on a point P within their triangle a minimum. If, in (50), we put n = 2 and a1 + a2 = 1, and hence p1 + p2 = 1, this equation can be regarded as that of a curve whose ordinate s represents the sum of the attractions exerted by the points e1 and e2 on the foot of the ordinate. This curve approaches asymptotically the perpendiculars erected on the vector (e1 - e2) at e1 and e2; and the point of minimum attraction corresponds to its lowest point. Similarly, in the case n = 3, the sum of the attractions exerted by the vertices of the triangle on any point within this triangle can be represented by the ordinate of a surface, erected at this point at right angles to the plane of the triangle. This suggestion may here suffice. 22. Concluding remark. Further results concerning generalizations of the problem of the minimum sum of distances are reserved for a future communication. WAS THE BINOMIAL THEOREM ENGRAVEN ON NEWTON'S MONUMENT? BY PROFESSOR FLORIAN CAJORI. Moritz Cantor, in a recently published part of his admirable work, Vorlesungen über Geschichte der Mathematik, speaks of the "Binomialreihe, welcher man 1727 bei Newtons Tode .

London in One Day Itinerary

Thursday's post for London Love was Part 1 of 2 of 'London in One Day', where I gave you a short list of essentials to make your day out run as smoothly and comfortably as possible, along with information to purchase your Tube and London Eye tickets. Now that we've got all that taken care of, we're off on a busy day filled with many of London's best landmarks and attractions. *Please note that all times listed on here are approximate and will be based on things like how fast you walk, possible train delays, or unexpected crowds. I've done my best to estimate these based on my experiences in London to show you as much as possible in one day (albeit a fairly long, but definitely enjoyable, day). (9:00 a.m. - 10:00 a.m.) WESTMINSTER & WHITEHALL ©2014 One Trip at a Time | www.onetripatatime.com | Yep, it's an early start, but you've got places to go and things to see! You won't be sorry you got up bright and early, especially when you exit Westminster Station and look up and there it is... Big Ben! Unarguably London's best known landmark and where our tour begins. Take some selfies or have your travel companions take your photo with Big Ben and then save them for later when you have free WiFi (at lunch) to post on all your social network sites. Don't worry that lunch is a few hours off because with time zone differences people back home probably aren't awake to see them yet anyway.

Insect Flight: from Newton's Law to Neurons

Insect Flight: From Newton’s Law to Neurons. Z. Jane Wang1,2. 1Department of Physics, Cornell University, Ithaca, New York 14853; email: [email protected] 2Sibley School of Mechanical and Aerospace Engineering, Cornell University, Ithaca, New York 14853. Annu. Rev. Condens. Matter Phys. 2016. 7:281–300. The Annual Review of Condensed Matter Physics is online at conmatphys.annualreviews.org. This article’s doi: 10.1146/annurev-conmatphys-031113-133853. Copyright © 2016 by Annual Reviews. All rights reserved. Keywords: physics of behavior, animal locomotion, aerodynamics, flight stability and control, flight reflex, neural feedback control, halteres, motor neurons, steering muscles. Abstract: Why do animals move the way they do? Bacteria, insects, birds, and fish share with us the necessity to move so as to live. Although each organism follows its own evolutionary course, it also obeys a set of common laws. At the very least, the movement of animals, like that of planets, is governed by Newton’s law: All things fall. On Earth, most things fall in air or water, and their motions are thus subject to the laws of hydrodynamics. Through trial and error, animals have found ways to interact with fluid so they can float, drift, swim, sail, glide, soar, and fly.

August 2012 1

The Heritage Gazette of the Trent Valley, Volume 17, number 2, August 2012. ISSN 1206-4394

- Editor’s Corner (Elwood Jones)
- The Curious Case of the Stolen Body in the Trunk (Colum M. Diamond)
- Hazelbrae Barnardo Home Memorial: Listings 1892-1896 (John Sayers)
- Ups and Downs: the Barnardo magazine (Gail Corbett)
- Calling All Friends of Little Lake Cemetery (Shelagh Neck)
- Peterborough County Council Assists Trent Valley Archives’ County Photographs (Elwood Jones)
- More Good News for Trent Valley Archives
- Backwoods Canada: Catharine Parr Traill’s early impressions of the Trent Valley (Elwood Jones)
- The Summer of 1832: the Traills encounter backwoods Peterborough
- Samuel Strickland’s First Wife (Gordon A. Young)
- Greetings from Thunder Bay (Diane Robnik)
- Then and Now: The east side of Water Street Looking North from North of Charlotte (Ron Briegel)
- Pioneer Peterboro Plants Used to Harness Water Power to Machines (Peterborough Examiner, 1945)
- News, Views and Reviews: Strickland Lumber picture; Upcoming Events at Trent Valley Archives; New Horizons Band Concert; Down Memory Lane
- The National Archival Scene (Elwood Jones): The national archival scene, Elwood Jones; Archivists’ On To Ottawa Trek; Saving Tangible Records from the Past, Jim Burant; A mouldering paper monument to breakthroughs in Bureaucracy, Stephanie Nolen, Toronto Globe and Mail, 26 June 2012
- The Art and Science of Heraldry: or what the heck is that thing hanging on the wall? (David Rumball)
- Trent Valley Archives’ Guitar Raffle

Overview of Section H

Overview of Section H. Tracing a little of the History of the Parish Then and Now. Full overview references (Section H, by page): 283, Overview of section H; 284-286, Glimpses of the changing church and parish surroundings; 287-297, The Church Schools; 298-304, The ‘Barnardo connection’ with the parishes; 305-312, Views in and around the parish, both then and now. Then and Now … from 1858 to 2008. [Figure labels: 1720, After 1770, 1745, 1831, 1882, 1914, 1952, 2000] The Church Schools: In this brief look back over the two parishes and churches and the changes they have seen, mention must be made of the church schools. The work of the church has never been seen as just concerning itself with spiritual wellbeing but with the growth of the whole person, in particular through a rounded education of high standards. Both parishes of St. Paul and St. Luke built a church school early on, soon after the churches were built. St. Paul’s School: The commemorative booklet published in 1908 to mark the 50th Anniversary of the consecration of the first St. Paul’s, Bow Common recorded the origins of the church school. Churches took their schools very seriously, and church schools are still seen as desirable places in which to place one’s children; parents still make great efforts to find a place for their children in a church school. When I arrived at Bow Common it then had the smallest church electoral roll in the diocese. Growth came, however, through parents attending church for their children to qualify for admission to our church school and for the ‘church letter’ to be signed by the Vicar! However, many of these parents stayed and grew in commitment and became the new core for the church alongside those who had been there from the beginning.

Large Print Guide

Large Print Guide. You can download this document from www.manchesterartgallery.org. While principally a fashion magazine, Vogue has never been just that. Since its first issue in 1916, it has assumed a central role on the cultural stage with a history spanning the most inventive decades in fashion and taste, and in the arts and society. It has reflected events shaping the nation and the world, while setting the agenda for style and fashion. Tracing the work of era-defining photographers, models, writers and designers, this exhibition moves through time from the most recent versions of Vogue back to the beginning of it all... Vogue 100: A Century of Style has been organised by the National Portrait Gallery, London in collaboration with British Vogue as part of the magazine’s centenary celebrations. 24 June – 30 October. Free entrance. A free audio guide is available at: bit.ly/vogue100audio. The publication Vogue 100: A Century of Style and a selection of Vogue inspired merchandise is available in the Gallery Shop on the ground floor. Photography is not permitted in this exhibition. Entrance wall: ‘Mighty Aphrodite’, Kate Moss by Mert Alas and Marcus Piggott, June 2012. For Vogue’s Olympics issue, Versace’s body-sculpting superwoman suit demanded ‘an epic pose and a spotlight’. Archival C-type print. Courtesy of Mert Alas and Marcus Piggott. FILM ROOM. Drawn from the following films: THE FUTURE OF FASHION Alexa Chung dir. Jim Demuth, September 2015 OUCH! THAT’S BIG Anna Ewers HEAT WAVE Damaris Goddrie and Frederikke Sofie dir.

Lessons from Faraday, Maxwell, Kepler and Newton

Science Education International Vol. 26, Issue 1, 2015, 3-23. Personal Beliefs as Key Drivers in Identifying and Solving Seminal Problems: Lessons from Faraday, Maxwell, Kepler and Newton. I. S. CALEON*, MA. G. LOPEZ WUI†, MA. H. P. REGAYA‡ ABSTRACT: The movement towards the use of the history of science and problem-based approaches in teaching serves as the impetus for this paper. This treatise aims to present and examine episodes in the lives of prominent scientists that can be used as resources by teachers in relation to enhancing students’ interest in learning, fostering skills about problem solving and developing scientific habits of mind. The paper aims to describe the nature and basis of the personal beliefs, both religious and philosophical, of four prominent scientists: Faraday, Maxwell, Kepler and Newton. Patterns of how these scientists set the stage for a fruitful research endeavor within the context of an ill-structured problem situation are examined and how their personal beliefs directed their problem-solving trajectories was elaborated. The analysis of these key seminal works provide evidence that rationality and religion need not necessarily lie on opposite fences: both can serve as useful resources to facilitate the fruition of notable scientific discoveries. KEY WORDS: history of science; science and religion; personal beliefs; problem solving; Faraday; Maxwell; Kepler; Newton. INTRODUCTION: Currently, there has been a movement towards the utilization of historical perspectives in science teaching (Irwin, 2000; Seker, 2012; Solbes & Traver, 2003). At the same time, contemporary science education reform efforts have suggested that teachers utilize problem-based instructional approaches (Faria, Freire, Galvão, Reis & Baptista, 2012; Sadeh & Zion, 2009; Wilson, Taylor, Kowalski, & Carlson, 2010).

Illinois Military Museums & Veterans Memorials

ILLINOIS. enjoyillinois.com. “It is for us the living, rather, to be dedicated here to the unfinished work which they who fought here have thus far nobly advanced.” Abraham Lincoln. Illinois State Veterans Memorials are located in Oak Ridge Cemetery in Springfield. The Middle East Conflicts Wall Memorial is situated along the Illinois River in Marseilles. Images (clockwise from top left): World War II Illinois Veterans Memorial, Illinois Vietnam Veterans Memorial (Vietnam Veterans Annual Vigil), World War I Illinois Veterans Memorial, Lincoln Tomb State Historic Site (Illinois Department of Natural Resources), Illinois Korean War Memorial, Middle East Conflicts Wall Memorial, Lincoln Tomb State Historic Site (Illinois Office of Tourism), Illinois Purple Heart Memorial. Every effort was made to ensure the accuracy of information in this guide. Please call ahead to verify or visit enjoyillinois.com for the most up-to-date information. This project was partially funded by a grant from the Illinois Department of Commerce and Economic Opportunity/Office of Tourism. 12/2019 10,000. Illinois is proud to be home to more than 725,000 veterans and three active military bases. We are forever indebted to Illinois’ service members and their families for their courage and sacrifice. What’s Inside: Honoring Veterans (annual events for veterans and for celebrating veterans); Honor Flight Network (connecting veterans with their memorials); Historic Forts (experience history up close at recreated forts and historic sites); Remembering the Fallen (national and state cemeteries provide solemn places for reflection); Veterans Memorials (cities and towns across the state honor veterans through memorials, monuments, and equipment displays).

Names / Regiments / Service Details on Hoole & Newton World War II Memorial

Names / Regiments / service details on Hoole & Newton World War II Memorial

| NAME | FIRST NAME(S) | DATE | AGE | REGIMENT / SERVICE | RANK | NUMBER | BURIED / COMMEMORATED |
|---|---|---|---|---|---|---|---|
| ALLMAN | STANLEY ARTHUR | 25/05/1940 | 19 | 1 BN ROYAL WELSH FUSILIERS | FUSILIER | 4196017 | ABBEVILLE COMMUNAL, FRANCE |
| ANWYL | JOHN LLOYD | 02/12/1942 | 33 | SS Serooskerk Netherlands MERCHANT NAVY | SECOND OFFICER | | TOWER HILL MEMORIAL, UK |
| BERRY | LESLIE | 12/07/1944 | 32 | 382 BTY 116 LT AA Rgt ROYAL ARTILLERY | GUNNER | 4205422 | ST MANVIEU CEMY CHEUX, FRANCE |
| BOYER | FREDERICK | 24/09/1944 | 23 | 2 BN ROYAL WARWICKSHIRE RGT | PRIVATE | 2091478 | MIERLO CEMY NETHERLANDS |
| BROCKLEY | DONALD CHARLES | 26/03/1942 | n/k | 61 SQN ROYAL AIR FORCE | SGT / WOPAG | 971199 | REICHSWALD FOREST, GERMANY |
| COOPER | S H | (information not yet found) | | | | | |
| DUTTON | FREDERICK | 17/02/1942 | 20 | 460 SQN ROYAL AUS AIR FORCE | SGT / WOPAG | 1006728 | OVERLEIGH CEMETERY CHESTER, UK |
| DUTTON | JAMES HENRY | 26/08/1943 | 23 | 196 Field Ambulance ROYAL ARMY MEDICAL CORPS | PRIVATE | 7394425 | KANCHANABURI, THAILAND |
| FEILD | THOMAS RICHARD | 30/11/1943 | 20 | 3 Survey Regiment ROYAL ARTILLERY | GUNNER | 14221718 | SANGRO RIVER CEMETERY ITALY |
| FISHER | FRANK | 21/12/1942 | 19 | 103 SQN ROYAL AIR FORCE | SGT FLIGHT ENGINEER | 577301 | MAUBEUGE CENTRE CEMETERY, FRANCE |
| FROST | HENRY SHELMERDINE | 05/03/1941 | 27 | CHESHIRE REGIMENT Att. 51 Commando | CAPTAIN | 51425 | KEREN WAR CEMETERY ERITREA |
| GANDER | ALFRED JOHN | 16/09/1941 | 42 | Cheshire Yeomanry ROYAL ARMOURED CORPS | WO2 SQMS | 547021 | DURBAN STELLAWOOD SOUTH AFRICA |
| GATLEY | STANLEY ELLIS | 18/01/1943 | 23 | ROYAL AIR FORCE Comms Flight RAF Iraq & Persia | LEADING AIR CRAFTMAN | 970215 | TEHRAN WAR CEMETERY IRAN |

30 SQN PHALERON