Common Internet File System (CIFS) Technical Reference Revision: 1.0

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Copy on Write Based File Systems Performance Analysis and Implementation

Copy On Write Based File Systems Performance Analysis And Implementation Sakis Kasampalis Kongens Lyngby 2010 IMM-MSC-2010-63 Technical University of Denmark Department Of Informatics Building 321, DK-2800 Kongens Lyngby, Denmark Phone +45 45253351, Fax +45 45882673 [email protected] www.imm.dtu.dk

Abstract

In this work I am focusing on Copy On Write based file systems. Copy On Write is used on modern file systems for providing (1) metadata and data consistency using transactional semantics, (2) cheap and instant backups using snapshots and clones. This thesis is divided into two main parts. The first part focuses on the design and performance of Copy On Write based file systems. Recent efforts aiming at creating a Copy On Write based file system are ZFS, Btrfs, ext3cow, Hammer, and LLFS. My work focuses only on ZFS and Btrfs, since they support the most advanced features. The main goals of ZFS and Btrfs are to offer a scalable, fault tolerant, and easy to administrate file system. I evaluate the performance and scalability of ZFS and Btrfs. The evaluation includes studying their design and testing their performance and scalability against a set of recommended file system benchmarks.

Most computers are already based on multi-core and multiple processor architectures. Because of that, the need for using concurrent programming models has increased. Transactions can be very helpful for supporting concurrent programming models, which ensure that system updates are consistent. Unfortunately, the majority of operating systems and file systems either do not support transactions at all, or they simply do not expose them to the users.

NTFS • Windows Reinstallation – Bypass ACL • Administrators Privilege – Bypass Ownership

Windows Encrypting File System
www.winitor.com 01 March 2010

Motivation
• Laptops are very integrated in enterprises…
• Stolen/lost computers loaded with confidential/business data
• Data Privacy Issues
• Offline Access – Bypass NTFS
• Windows reinstallation – Bypass ACL
• Administrators privilege – Bypass Ownership

Mechanism
• Principle
  • A random, unique, symmetric key encrypts the data
  • An asymmetric key encrypts the symmetric key used to encrypt the data
• Combination of two algorithms
  • Use their strengths
  • Minimize their weaknesses
• Results
  • Increased performance
  • Increased security
[Figure labels: Asymmetric, Symmetric, Data]

Characteristics
• Comfortable
  • Applying encryption is just a matter of assigning a file attribute
• Transparent
  • Integrated into the operating system
  • Transparent to (valid) users/applications
  [Figure labels: Application, Win32, Crypto Engine, NTFS, EFS, encrypted data]
• Flexible
  • Supported at different scopes
    • File, Directory, Drive (Vista?)
  • Files can be shared between any number of users
  • Files can be stored anywhere
    • local, remote, WebDav
  • Files can be offline
• Secure
  • Encryption and Decryption occur in kernel mode
  • Keys are never paged
  • Usage of standardized cryptography services

Availability
• At the GUI, the availability ...

The Linux Kernel Module Programming Guide

The Linux Kernel Module Programming Guide Peter Jay Salzman Michael Burian Ori Pomerantz Copyright © 2001 Peter Jay Salzman 2007−05−18 ver 2.6.4 The Linux Kernel Module Programming Guide is a free book; you may reproduce and/or modify it under the terms of the Open Software License, version 1.1. You can obtain a copy of this license at http://opensource.org/licenses/osl.php. This book is distributed in the hope it will be useful, but without any warranty, without even the implied warranty of merchantability or fitness for a particular purpose. The author encourages wide distribution of this book for personal or commercial use, provided the above copyright notice remains intact and the method adheres to the provisions of the Open Software License. In summary, you may copy and distribute this book free of charge or for a profit. No explicit permission is required from the author for reproduction of this book in any medium, physical or electronic. Derivative works and translations of this document must be placed under the Open Software License, and the original copyright notice must remain intact. If you have contributed new material to this book, you must make the material and source code available for your revisions. Please make revisions and updates available directly to the document maintainer, Peter Jay Salzman <[email protected]>. This will allow for the merging of updates and provide consistent revisions to the Linux community. If you publish or distribute this book commercially, donations, royalties, and/or printed copies are greatly appreciated by the author and the Linux Documentation Project (LDP). -

File Systems and Disk Layout I/O: the Big Picture

File Systems and Disk Layout

I/O: The Big Picture
[Figure: processor, cache, memory bus, I/O bridge, main memory, I/O bus; disk controllers, graphics controller, and network interface attached to disks, graphics, and network; interrupts flow back to the processor]

Rotational Media
[Figure: track, sector, arm, cylinder, platter, head]
Access time = seek time + rotational delay + transfer time
seek time = 5-15 milliseconds to move the disk arm and settle on a cylinder
rotational delay = 8 milliseconds for full rotation at 7200 RPM: average delay = 4 ms
transfer time = 1 millisecond for an 8KB block at 8 MB/s
Bandwidth utilization is less than 50% for any noncontiguous access at a block grain.

Disks and Drivers
Disk hardware and driver software provide basic facilities for nonvolatile secondary storage (block devices).
1. OS views the block devices as a collection of volumes. A logical volume may be a partition of a single disk or a concatenation of multiple physical disks (e.g., RAID).
2. OS accesses each volume as an array of fixed-size sectors. Identify sector (or block) by unique (volumeID, sector ID). Read/write operations DMA data to/from physical memory.
3. Device interrupts OS on I/O completion. ISR wakes up process, updates internal records, etc.

Using Disk Storage
Typical operating systems use disks in three different ways:
1. System calls allow user programs to access a “raw” disk. Unix: special device file identifies volume directly. Any process that can open the device file can read or write any specific sector in the disk volume.
2. OS uses disk as backing storage for virtual memory. OS manages volume transparently as an “overflow area” for VM contents that do not “fit” in physical memory.
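The access-time formula quoted in this excerpt (seek time + rotational delay + transfer time) can be checked with a few lines of arithmetic. The following is a minimal sketch, not part of the original slides: the function names are hypothetical, and it assumes 8 KB means 8192 bytes and 8 MB/s means 8 * 1024 * 1024 bytes per second.

```python
def rotation_time_ms(rpm):
    """Time for one full platter rotation, in milliseconds."""
    return 60_000.0 / rpm


def access_time_ms(seek_ms, rpm, block_bytes, bytes_per_second):
    """Return (total access time, transfer time), both in milliseconds.

    Access time = seek time + rotational delay + transfer time, where the
    average rotational delay is taken as half of one rotation.
    """
    rotational_delay = rotation_time_ms(rpm) / 2
    transfer = block_bytes / bytes_per_second * 1000.0
    return seek_ms + rotational_delay + transfer, transfer


def bandwidth_utilization(seek_ms, rpm, block_bytes, bytes_per_second):
    """Fraction of the access spent actually transferring data."""
    total, transfer = access_time_ms(seek_ms, rpm, block_bytes, bytes_per_second)
    return transfer / total


if __name__ == "__main__":
    # Figures from the excerpt: 5-15 ms seek, 7200 RPM, 8 KB block, 8 MB/s.
    for seek in (5.0, 15.0):
        total, _ = access_time_ms(seek, 7200, 8192, 8 * 1024 * 1024)
        util = bandwidth_utilization(seek, 7200, 8192, 8 * 1024 * 1024)
        print(f"seek={seek} ms: access={total:.2f} ms, utilization={util:.1%}")
```

Under these assumptions a single noncontiguous 8 KB access spends only about 5 to 10 percent of its time transferring data, which is consistent with the excerpt's statement that utilization stays below 50%.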

Active@ UNDELETE Users Guide

Active@ UNDELETE Users Guide

Contents
Legal Statement
Active@ UNDELETE Overview
Getting Started with Active@ UNDELETE
Active@ UNDELETE Views And Windows
Recovery Explorer View
Logical Drive Scan Result View
Physical Device Scan View
Search Results View
Application Log
Welcome View
Using Active@ UNDELETE Overview
Recover deleted Files and Folders
Scan a Volume (Logical Drive) for deleted files

File Manager Manual

FileManager Operations Guide for Unisys MCP Systems Release 9.069W November 2017

Copyright

This document is protected by Federal Copyright Law. It may not be reproduced, transcribed, copied, or duplicated by any means to or from any media, magnetic or otherwise without the express written permission of DYNAMIC SOLUTIONS INTERNATIONAL, INC. It is believed that the information contained in this manual is accurate and reliable, and much care has been taken in its preparation. However, no responsibility, financial or otherwise, can be accepted for any consequence arising out of the use of this material. THERE ARE NO WARRANTIES WHICH EXTEND BEYOND THE PROGRAM SPECIFICATION.

Correspondence regarding this document should be addressed to: Dynamic Solutions International, Inc. Product Development Group 373 Inverness Parkway Suite 110, Englewood, Colorado 80112 (800)641-5215 or (303)754-2000 Technical Support Hot-Line (800)332-9020 E-Mail: [email protected]

Contents
OVERVIEW
FILEMANAGER CONSIDERATIONS
FileManager File Tracking
File Recovery

SoftNAS Deployment Guide for High-Performance SQL Storage

SoftNAS Deployment Guide for High-Performance SQL Storage

Introduction

SoftNAS cloud NAS systems are based on an innovative, memory-centric storage architecture that delivers unparalleled NAS performance, efficiency, and value. They incorporate a hybrid disk storage technology that tailors the usage of data disks, log solid-state cache drives (SSDs), and read cache SSDs to the data share's specific needs. Additional features include variable storage record size, data compression, and multiple connectivity options. As a Cloud NAS solution, SoftNAS cloud NAS systems provide an excellent base for Microsoft Windows Server deployments by providing iSCSI or Fibre Channel block storage for Microsoft SQL Server, and network file system (NFS) or server message block (SMB) file storage for Microsoft Windows client access. This document covers the best practices to follow when deploying Microsoft SQL Server on a SoftNAS cloud NAS system. The intended audience is storage administrators and Microsoft SQL Server database administrators.

Maintaining High Availability

As with any business-critical application, high availability is a crucial design criterion to be considered when deploying a Microsoft SQL Server installation. Microsoft SQL Server 2016 can be installed on local and/or shared file systems, and SoftNAS cloud NAS systems can satisfy both of these options. Local file systems (from the Microsoft Windows Server perspective) are hosted as block volumes: iSCSI and/or Fibre-Channel-connected LUNs and file systems as SMB and/or NFS volumes. High availability starts with the network connectivity supporting the storage and server interconnectivity. Any design for the storage infrastructure should avoid single points of failure. Because many white papers and publications cover storage-area networking and network-attached storage resilience, those topics are not covered in detail in this paper.

DeviceLock® DLP 8.3 User Manual

DeviceLock® DLP 8.3 User Manual © 1996-2020 DeviceLock, Inc. All Rights Reserved. Information in this document is subject to change without notice. No part of this document may be reproduced or transmitted in any form or by any means for any purpose other than the purchaser’s personal use without the prior written permission of DeviceLock, Inc.

Trademarks

DeviceLock and the DeviceLock logo are registered trademarks of DeviceLock, Inc. All other product names, service marks, and trademarks mentioned herein are trademarks of their respective owners.

DeviceLock DLP - User Manual Software version: 8.3 Updated: March 2020

Contents
About This Manual
Conventions
DeviceLock Overview
General Information
Managed Access Control
DeviceLock Service for Mac
DeviceLock Content Security Server
How Search Server Works
ContentLock and NetworkLock
ContentLock and NetworkLock Licensing
Basic Security Rules
Installing DeviceLock
System Requirements
Deploying DeviceLock Service for Windows
Interactive Installation
Unattended Installation
Installation via Microsoft Systems Management Server
Installation via DeviceLock Management Console
Installation via DeviceLock Enterprise Manager
Installation via Group Policy
Installation via DeviceLock Enterprise Server
Deploying DeviceLock Service for Mac
Interactive Installation
Command Line Utility
Unattended Installation
Installing Management Consoles
Installing DeviceLock Enterprise Server
Installation Steps
Installing and Accessing DeviceLock WebConsole
Prepare for Installation
Install the DeviceLock WebConsole
Access the DeviceLock WebConsole
Installing DeviceLock Content Security Server
Prepare to Install
Start Installation
Perform Configuration and Complete Installation
DeviceLock Consoles and Tools

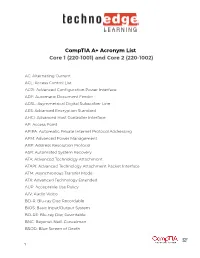

CompTIA A+ Acronym List Core 1 (220-1001) and Core 2 (220-1002)

CompTIA A+ Acronym List Core 1 (220-1001) and Core 2 (220-1002)

AC: Alternating Current
ACL: Access Control List
ACPI: Advanced Configuration Power Interface
ADF: Automatic Document Feeder
ADSL: Asymmetrical Digital Subscriber Line
AES: Advanced Encryption Standard
AHCI: Advanced Host Controller Interface
AP: Access Point
APIPA: Automatic Private Internet Protocol Addressing
APM: Advanced Power Management
ARP: Address Resolution Protocol
ASR: Automated System Recovery
ATA: Advanced Technology Attachment
ATAPI: Advanced Technology Attachment Packet Interface
ATM: Asynchronous Transfer Mode
ATX: Advanced Technology Extended
AUP: Acceptable Use Policy
A/V: Audio Video
BD-R: Blu-ray Disc Recordable
BIOS: Basic Input/Output System
BD-RE: Blu-ray Disc Rewritable
BNC: Bayonet-Neill-Concelman
BSOD: Blue Screen of Death
BYOD: Bring Your Own Device
CAD: Computer-Aided Design
CAPTCHA: Completely Automated Public Turing test to tell Computers and Humans Apart
CD: Compact Disc
CD-ROM: Compact Disc-Read-Only Memory
CD-RW: Compact Disc-Rewritable
CDFS: Compact Disc File System
CERT: Computer Emergency Response Team
CFS: Central File System, Common File System, or Command File System
CGA: Computer Graphics and Applications
CIDR: Classless Inter-Domain Routing
CIFS: Common Internet File System
CMOS: Complementary Metal-Oxide Semiconductor
CNR: Communications and Networking Riser
COMx: Communication port (x = port number)
CPU: Central Processing Unit
CRT: Cathode-Ray Tube
DaaS: Data as a Service
DAC: Discretionary Access Control
DB-25: Serial Communications ...

Your Performance Task Summary Explanation

Lab Report: 11.2.5 Manage Files

Your Performance
Your Score: 0 of 3 (0%)
Pass Status: Not Passed
Elapsed Time: 6 seconds
Required Score: 100%

Task Summary
Actions you were required to perform:
• Compress the D:\Graphics folder
  • Set the Compressed attribute
  • Apply the changes to all folders and files
• Hide the D:\Finances folder
• Set Read-only on files
  • Set read-only on 2017report.xlsx
  • Set read-only on 2018report.xlsx
  • Do not set read-only for the 2019report.xlsx file

Explanation
In this lab, your task is to complete the following:
• Compress the D:\Graphics folder and all of its contents.
• Hide the D:\Finances folder.
• Make the following files Read-only:
  • D:\Finances\2017report.xlsx
  • D:\Finances\2018report.xlsx

Complete this lab as follows:
1. Compress a folder as follows:
   a. From the taskbar, open File Explorer.
   b. Maximize the window for easier viewing.
   c. In the left pane, expand This PC.
   d. Select Data (D:).
   e. Right-click Graphics and select Properties.
   f. On the General tab, select Advanced.
   g. Select Compress contents to save disk space.
   h. Click OK.
   i. Click OK.
   j. Make sure Apply changes to this folder, subfolders and files is selected.
   k. Click OK.
2. Hide a folder as follows:
   a. Right-click Finances and select Properties.
   b. Select Hidden.
   c. Click OK.
3. Set files to Read-only as follows:
   a. Double-click Finances to view its contents.
   b. Right-click 2017report.xlsx and select Properties.
   c. Select Read-only.
   d. Click OK.
   e. ...
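The read-only part of this lab can also be done from a script instead of the File Explorer dialog. The following is a rough sketch only, not part of the lab: the helper names are hypothetical, and it relies on the standard `os.chmod` call, which on Windows toggles the Read-only attribute and on Unix-like systems clears the write-permission bits. The Compressed and Hidden attributes are not covered here.

```python
import os
import stat

# File names taken from the lab text; 2019report.xlsx is deliberately absent.
READ_ONLY_FILES = ("2017report.xlsx", "2018report.xlsx")


def set_read_only(path):
    """Clear every write-permission bit on the given file."""
    mode = stat.S_IMODE(os.stat(path).st_mode)
    os.chmod(path, mode & ~(stat.S_IWUSR | stat.S_IWGRP | stat.S_IWOTH))


def is_read_only(path):
    """True when the owner write bit is not set on the file."""
    return not (os.stat(path).st_mode & stat.S_IWUSR)


def apply_read_only_task(folder):
    """Mark only the files named by the lab as read-only."""
    for name in READ_ONLY_FILES:
        set_read_only(os.path.join(folder, name))


if __name__ == "__main__":
    # Folder path from the lab; adjust it when trying this elsewhere.
    apply_read_only_task(r"D:\Finances")
```

Checking the mode bits with `is_read_only` rather than attempting a write keeps the check meaningful even for privileged accounts, which can often write to files regardless of their permission bits.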

The Linux Device File-System

The Linux Device File-System Richard Gooch EMC Corporation [email protected]

Abstract

The Device File-System (devfs) provides a powerful new device management mechanism for Linux. Unlike other existing and proposed device management schemes, it is powerful, flexible, scalable and efficient.

It is an alternative to conventional disc-based character and block special devices. Kernel device drivers can register devices by name rather than device numbers, and these device entries will appear in the file-system automatically.

Devfs provides an immediate benefit to system administrators, as it implements a device naming scheme which is more convenient for large systems (providing a topology-based name-space) and small systems (via a device-class based name-space) alike.

Device driver authors can benefit from devfs by ...

1 Introduction

All Unix systems provide access to hardware via device drivers. These drivers need to provide entry points for user-space applications and system tools to access the hardware. Following the “everything is a file” philosophy of Unix, these entry points are exposed in the file name-space, and are called “device special files” or “device nodes”.

This paper discusses how these device nodes are created and managed in conventional Unix systems and the limitations this scheme imposes. An alternative mechanism is then presented.

1.1 Device numbers

Conventional Unix systems have the concept of a “device number”. Each instance of a driver and hardware component is assigned a unique device number. Within the kernel, this device number is used to refer to the hardware and driver instance.
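To make the notion of a device number concrete, the sketch below reads the number stored in a device node and splits it into its conventional major part (identifying the driver) and minor part (identifying the instance). This is an illustration under stated assumptions rather than anything taken from the paper: the major/minor split is general Unix convention, the helper names are hypothetical, and `os.major`, `os.minor`, and `os.makedev` are only available on Unix-like platforms.

```python
import os
import stat


def split_device_number(dev):
    """Split a raw device number into (major, minor)."""
    return os.major(dev), os.minor(dev)


def describe_device_node(path):
    """Describe the device node at path, e.g. /dev/null on Linux."""
    info = os.stat(path)
    if stat.S_ISCHR(info.st_mode):
        kind = "character"
    elif stat.S_ISBLK(info.st_mode):
        kind = "block"
    else:
        raise ValueError(f"{path} is not a device node")
    # st_rdev holds the device number the node refers to.
    major, minor = split_device_number(info.st_rdev)
    return f"{path}: {kind} device, major {major}, minor {minor}"


if __name__ == "__main__":
    print(describe_device_node("/dev/null"))
```

A conventional device node carries nothing but this number and a type, so the kernel locates the driver by number alone. That contrasts with the name-based registration the abstract describes for devfs.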

High Velocity Kernel File Systems with Bento

High Velocity Kernel File Systems with Bento Samantha Miller, Kaiyuan Zhang, Mengqi Chen, and Ryan Jennings, University of Washington; Ang Chen, Rice University; Danyang Zhuo, Duke University; Thomas Anderson, University of Washington https://www.usenix.org/conference/fast21/presentation/miller

This paper is included in the Proceedings of the 19th USENIX Conference on File and Storage Technologies. February 23–25, 2021 978-1-939133-20-5 Open access to the Proceedings of the 19th USENIX Conference on File and Storage Technologies is sponsored by USENIX.

Abstract

High development velocity is critical for modern systems. This is especially true for Linux file systems which are seeing increased pressure from new storage devices and new demands on storage systems. However, high velocity Linux kernel development is challenging due to the ease of introducing bugs, the difficulty of testing and debugging, and the lack of support for redeployment without service disruption. Existing approaches to high-velocity development of file systems for ...

... kernel-level debuggers and kernel testing frameworks makes this worse. The restricted and different kernel programming environment also limits the number of trained developers. Finally, upgrading a kernel module requires either rebooting the machine or restarting the relevant module, either way rendering the machine unavailable during the upgrade. In the cloud setting, this forces kernel upgrades to be batched to meet cloud-level availability goals.

Slow development cycles are a particular problem for file systems.