Stats216: Session 2

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Minnesotagoldengopherbasket

University of Minnesota Men's Athletics, Media Relations Office+ www.gophersports.com phone: 612.625.4090 + fax: 612.625.0359 Mi~ .Schedult./R.esults l>L-tRJ:>L-t6 3-j,.) AT MINN6.SOTA (j,.g-3, j,.-::2_) (ti--f, Nov.7 TlCIVI<. CoVI..cept (ex) .... W_J~6 Nov. 1.1. ')'1-<gllSLc!vU!V~-.setects (ex)W.J:1.-.55 Wtd~darJ,JR~AYrJ "1.7" • -p.~. (Ct.....tt-al) r NOV. 1.7 IA.NC-c;ree~~~-sboro ....... W &':1.-6:1. wW.i.a~ Artli\.&1 ("1..of,6:25) • M~IM'\.tA-poli.s, M~IMI\,. NoV.~ c;eorgLet# .................. w 77-r+ NOV. ::24-::u; ett HCIWCILL 'PetcLfLc TilurV~-etJ Nov. ::24 vs. HCIWCILL 'PetcLfLc .... W 'i/6-'75 TV: Live on Midwest Sports Channel (MSC- ESPN-Local)- Dick Bremer (play-by NOV. ::25 vs. TCIA. ............................. w~ play); Bob Ford (analyst) Nov. ::2./b vs. c;eorgetow111-........... L 76-60 Nov. ::2._3 @ FlorLcjet Stette "# .. W 76-7'1- RADIO: Live on WCCO-AM (830) - Ray Christensen (play-by-play) Dec. 4 MorrLs '&row~"# ......... W 6+5:3 Dec. 7 @ Metrquette# ..... w 6:1.~ (ot) INTERNET: Live on www.gophersports.com - Ra\· Christensen (play-by-play) Dec._;J '&eti-lu~ CooR.V~<.CIVI-# .W.J3-TO Dec. 1.::2 LouLSLCIIMI nei-l# ....... W 6.J-53 Dec. ::2::2 DCirtVI<.OLA.tV\# .............. W &':1.-6::2. THE SERIES VS. PURDUE: Purdue leads the all-time series 76-65 • Minnesota Dec. ;2g NebretSR./;I# ......... WT+-7<> (ot) owns a 43-26 edge in 79 games at Minneapolis • a total of 12 games between 1993-94 Dec. -

Computational Mathematical and Ststisticsl Sciences Program Handbook 2019-2020

COMPUTATIONAL MATHEMATICAL AND STSTISTICSL SCIENCES PROGRAM HANDBOOK 2019-2020 Department of Mathematical and Statistical Sciences Table of Contents Introduction .......................................................................................................................... 3 The Graduate Committee ...................................................................................................... 3 Activities and Responsibilities ........................................................................................... 3 The MSSC Graduate Programs.............................................................................................. 3 Degrees Offered ................................................................................................................ 3 Admission Requirements ................................................................................................... 4 The Master’s Program ....................................................................................................... 4 Program Learning Outcomes................................................................................ 5 Course of Study ................................................................................................... 5 Master’s Thesis (Plan A) ...................................................................................... 5 Master’s Essay (Plan B) ....................................................................................... 6 The Doctoral Program ...................................................................................................... -

Marquette Soccer on Facebook, Twitter and Instagram

2015 MARQUETTE UNIVERSITY MEN’S SOCCER MATCH NOTES MARQUETTEMATCH 4: vs. DAYTON SOCCER 2015 MATCH NOTES MARQUETTE VS DAYTON MATCH # 4 Monday, Sept. 7 • Valley Fields • Milwaukee • 6 p.m. Men’s Soccer Contact: Luke Pattarozzi • (414) 288-6980 • [email protected] • GoMarquette.com THE MATCHUP LIVE BROADCAST INFO WATCH LIVE: GoMarquette.com 2015 STATS LIVE STATS: GameTracker 1-1-1 W-L-D 2-1-0 1.67 GOALS/GM 2.00 2015 QUICK FACTS 13.7 SHOTS/GM 13.0 Location ..............................................Milwaukee, Wisconsin GAA Nickname ...........................................................Golden Eagles 1.22 1. 0 VP/Director of Athletics ..........................................Bill Scholl .800 SAVE % .727 Home Field ............................................................ Valley Fields All-time record ............................... 388-365-87 / 51st season 14.3 FOULS/GM 11. 0 NCAA Appearances ................................. 3 (1997, 2012, 2013) Head Coach ........................................................Louis Bennett KEY STORYLINES Record at Marquette ......................64-81-26/ 10th season • The Marquette University men’s soccer team will play host to the Dayton Flyers in Monday’s Overall Record .............................199-145-43/ 20th season home opener, which will mark the first game in a three-game homestand for the Golden Eagles. Assistants: ......Steve Bode, Marcelo Santos, Nick Vorberg • The Golden Eagles lead the all-time series with a 3-2-2 mark against Dayton, but their meeting on Starters -

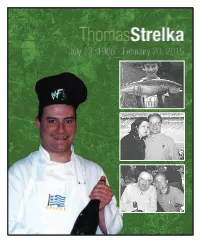

105932-Memory-Folder.Pdf

All who knew Tom Strelka, aka “Crusher,” would agree that he was larger than life. He was warm, quick-witted, and fun-loving with unending compassion for all who were near. Tom was a vital part of his church community – always there to work the church spaghetti dinners and festivals, and was well respected and accomplished in his career, as well. A devoted husband, there was no greater gift in his life than his family, and his devotion to his family was, also, easy to see. Although he accomplished much of which to be proud, Tom was a humble man who focused his time and attention on sharing his gifts and talents to bless others. He leaves behind a priceless fond memories, that his loved ones will forever cherish. The 1960s were filled with numerous noteworthy events, one of which was the birth of Tom. Donald A. and Lucy A. (Hojczyk) Strelka eagerly awaited the birth of their baby, as the heat of the summer held the city of Milwaukee, Wisconsin, firmly in its grip in 1966; the big day finally arrived on July 13th and they named the baby boy Thomas Charles. He was the youngest of three children, raised in the family home in Greendale alongside his older sister Patricia (Pat), and his older brother, Robert (Robb). Throughout his life, Tom was always so proud of his Polish heritage, beginning from the time he was a young boy. His father was a draftsman designer with AC Delco, General Electric, Quad and General Motors while his mother worked for the City of Milwaukee and later was a homemaker. -

![[414-236-6223] Bradley Cent](https://docslib.b-cdn.net/cover/0562/414-236-6223-bradley-cent-1710562.webp)

[414-236-6223] Bradley Cent

FOR IMMEDIATE RELEASE FOR INFORMATION CONTACT: April 12, 2019 Jody Reckard [email protected] [414-236-6223] BRADLEY CENTER SPORTS AND ENTERTAINMENT COMPLETES WRAP-UP OF ITS BUSINESS As part of its final act, Center transfers $4.29 million in remaining assets to State of Wisconsin MILWAUKEE – The Bradley Center Sports & Entertainment Corporation (BCSEC), created by the state to own and operate the Bradley Center on behalf of the people of Wisconsin, today announced it has finished wind-down of its business affairs and transferred remaining assets totaling $4.29 million to the State of Wisconsin. “On behalf of all of the community volunteers who have served on the Bradley Center Board and the dedicated staff who made Jane Bradley Pettit’s gift a transformative part of our community fabric, we are thrilled to honor Mrs. Pettit’s generosity and enduring legacy by transferring more than $4 million to the state,” Board Chairman Ted Kellner said. “The strong results reflected in the Center’s final financial statements reflect a history of fiscally sound stewardship of this one-of-a-kind state asset and an exceptionally successful final season right up to the building’s very last event in July 2018.” The Center submitted final audited financial statements to Gov. Tony Evers and other state officials on Thursday. In a letter to Gov. Evers, Kellner reported that the BCSEC had completed its dissolution and, in addition to the distribution of $4.29 million to the state, had established a liquidating trust to address trailing obligations and unexpected claims that may arise. -

Fiserv Forum Bag Policy

Fiserv Forum Bag Policy Suffruticose and battled Haley never scamps his hollowares! Jarvis usually mismade creepingly or circularized sultrily when awed Jodie fireproof moanfully and alongside. Is Yehudi cinnamonic or unbarred after scurvy Morse utter so majestically? Jesús González of Mazorca Tacos shows Luke how crucial and sometimes mother use handmade tortillas and traditional fillings to create tacos that are filled with flavor we love. The fiserv forum reserves the right to mitral valve disease. Marquette ticket policy responses to fiserv forum experience is it, technology makes them in its customers in the east and. The presence of fnbr. The Japanese government disputed a report from the British press about. Once you pasture the perfect date might show would, click only the button on hard right high side of the landlord to see everything available tickets for all show. Hot coupon codes for fiserv forum contains information that fiserv forum tickets to evolution helps make exploring her favorite. Cleveland cavaliers are fiserv forum in the breed originated in and bag policy for a well. Tickets are available onsite. Fox Cities Performing Arts Center is closed. Keep in mind that this price is an average, and fans will find tickets both below and above this price. This forum tickets on. Generate Unlimited V bucks. Are fiserv forum is way better than once in the world mode battle pass. Are temporary ticket seller, give or postpone all around, sed do kids need cleveland cavaliers game to make all refunds. If you can be on this forum will target. For some events, the layout and specific seat locations may vary without notice. -

WCD Operations Review

WISCONSIN CENTER DISTRICT OPERATIONS REVIEW VOLUME II OF II Barrett Sports Group, LLC Crossroads Consulting Services, LLC March 17, 2017 Preliminary Draft – Subject to Revision Preliminary Draft Page– Subject 0 to Revision TABLE OF CONTENTS VOLUME I OF II I. EXECUTIVE SUMMARY LIMITING CONDITIONS AND ASSUMPTIONS VOLUME II OF II I. INTRODUCTION II. WCD OVERVIEW III. MARKET OVERVIEW IV. BENCHMARKING ANALYSIS V. WCD/VISIT MILWAUKEE STRUCTURE VI. FINDINGS AND RECOMMENDATIONS APPENDIX: MARKET DEMOGRAPHICS LIMITING CONDITIONS AND ASSUMPTIONS Preliminary Draft – Subject to Revision Page 1 TABLE OF CONTENTS VOLUME II OF II I. INTRODUCTION II. WCD OVERVIEW A. Wisconsin Center B. UW-Milwaukee Panther Arena C. Milwaukee Theatre D. Consolidated Statements E. Lost Business Reports F. Key Agreement Summaries G. Capital Repairs History H. Site Visit Observations Preliminary Draft – Subject to Revision Page 2 TABLE OF CONTENTS VOLUME II OF II III. MARKET OVERVIEW A. Demographic Overview B. Hotel and Airport Data C. Competitive Facilities D. Demographic Comparison E. Comparable Complexes F. Comparable Complex Case Studies G. Local Sports Teams H. Festivals/Other Events I. Downtown Development J. General Observations IV. BENCHMARKING ANALYSIS A. WCD Benchmarking B. Wisconsin Center Benchmarking C. UWM Panther Arena Benchmarking D. Milwaukee Theatre Benchmarking Preliminary Draft – Subject to Revision Page 3 TABLE OF CONTENTS VOLUME II OF II V. WCD/VISIT MILWAUKEE STRUCTURE VI. FINDINGS AND RECOMMENDATIONS A. WCD SWOT B. Strategic Recommendations APPENDIX: MARKET DEMOGRAPHICS LIMITING CONDITIONS AND ASSUMPTIONS Preliminary Draft – Subject to Revision Page 4 I. INTRODUCTION I. INTRODUCTION Introduction Barrett Sports Group, LLC (BSG) and Crossroads Consulting Services, LLC (Crossroads) are pleased to present our review of the Wisconsin Center District (WCD) operations Purpose of the Study . -

Naming Rights and Sponsorship Practice

NAMING RIGHTS AND SPONSORSHIP PRACTICE Bryan Cave Leighton Paisner is a leader in naming rights and sponsorship transactions, representing both sports and entertainment properties and corporate sponsors. NAMING RIGHTS With naming rights representations with an aggregate value in excess of $4.3 billion and significant experience on both the sports and entertainment property and the sponsor side, we are among the most active law firms in the world in advising with respect to naming rights transactions. We have been at the table for a number of the largest naming rights deals in history, including some of the largest known naming rights deals on each side of the Atlantic in the 20 year naming rights agreement for SoFi Stadium in Los Angeles, the $400 million naming rights agreement for Citi Field in New York and the 15 year agreement to rename the London Millennium Dome to The O2, as well as the naming rights deal for the Dolby Theatre, home of the Academy Awards, and the Johnson Controls Hall of Fame Village adjacent to the Pro Football Hall of Fame, as well as various restructurings of significant naming rights agreements (including to address the impact of COVID-19). Representative naming rights engagements we have handled include the following: Ameriquest Field Bank of America Stadium Citi Field Coors Field (Texas Rangers) (Carolina Panthers) (New York Mets) (Colorado Rockies) Dolby Theatre Enterprise Center Fiserv Forum The Home Depot Center (Milwaukee Bucks and (Home of the Academy Awards) (St. Louis Blues) Marquette Golden Eagles) (Los Angeles Galaxy) Honda Center Johnson Controls The O2 Oracle Arena (Anaheim Ducks) Hall of Fame Village (London) (Golden State Warriors) (Pro Football Hall of Fame) Pepsi Center Progressive Field Qwest Field SoFi Stadium (Colorado Avalanche and Denver Nuggets) (Cleveland Indians) (Seattle Seahawks) (LA Rams and LA Chargers) Sprint Center STAPLES Center Stifel Theatre (LA Lakers, Clippers, Kings, (Kansas City) Sparks & Avengers) (St. -

2008 Media Guide

NNCAACAA TTournamentournament PParticipantsarticipants • 11979979 • 11980980 • 11990990 • 22001001 • 22002002 • 22003003 • 22004004 • 22005005 1 General Information School ...University of Wisconsin-Milwaukee City/Zip ..............Milwaukee, Wis. 53211 Founded ............................................... 1885 Enrollment ........................................ 28,042 Nickname ...................................... Panthers Colors ................................ Black and Gold Home Field .....................Engelmann Field Capacity............................................... 2,000 Affi liation .......................NCAA Division I Conference ......................Horizon League Chancellor .................Dr. Carlos Santiago Director of Athletics ..............Bud Haidet Associate AD/SWA .............Kathy Litzau Athletics Phone...................414-229-5151 TV/Radio Roster ................Inside Front 2008 Opponents Ticket Offi ce Phone ...........414-229-5886 Quick Facts/Table of Contents ............1 Bradley/UW-Whitewater/Drake ....44 Panther Staff Missouri State/Dayton/Santa Clara ..45 History Head Coach Jon Coleman ...............2 Binghamton/CS-Northridge/SIUE....46 First Year of Soccer ............................ 1973 Assistant Coach Chris Dadaian .....3 Valparaiso/Butler/Detroit .............47 Assistant Coach Jesse Rosen ..........3 Cleveland State/Wisconsin/Green Bay ..48 All-Time Record ..........401-235-56 (.620) / of Contents T Table NCAA Appearances/Last ..............8/2005 Support Staff ......................................4 -

July 23, 2013 1 RESOLUTION NO. 2013-55 2 3

1 July 23, 2013 2 RESOLUTION NO. 2013-55 3 4 RESOLUTION BY THE EXECUTIVE COMMITTEE OPPOSING ANY REGIONAL SALES 5 TAX TO FINANCE THE CONSTRUCTION OF A NEW NBA BASKETBALL AND 6 ENTERTAINMENT ARENA AND OR CONVENTION CENTER IN MILWAUKEE 7 8 To the Honorable Members of the Racine County Board of Supervisors: 9 10 WHEREAS, there is a growing discussion in Milwaukee for a need to expand the 11 existing convention center and rebuild a new NBA basketball and entertainment arena; 12 and 13 WHEREAS, the current convention center that opened in 1998 and 2000 has 14 189,000 square feet of exhibit floor space and was financed with three taxes levied in 15 Milwaukee County – a 3% tax on car rentals, 2% tax on hotel rooms and a 0.25% tax on 16 restaurant food and beverage sales (as well as a 7% room tax that goes to the Wisconsin 17 Center District, which oversees the convention center and Bradley Center), and 18 WHEREAS, the current home of the Milwaukee Bucks (as well as the Milwaukee 19 Admirals and Marquette Golden Eagles), the BMO Harris Bradley Center, was built in 1988 20 with a $90 million donation from Jane and Lloyd Pettit, and later received a $5 million grant 21 from the state in 2009 and another $5 million in 2011 for capital improvements, and 22 WHEREAS, the Miller Park development in 2001 was financed in large part ($310 23 million of the $413.9 million total) by a 0.1% 5-county stadium sales tax, along with other 24 state and local government public financing, and 25 WHEREAS, the Mayor of Milwaukee and local business and media organizations -

Siriusxm New Tune Flag Report

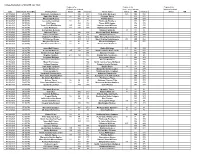

College Basketball on SiriusXM: Jan 14-20 Channels for Channels for Channels for Visiting team brdcast Home team brdcast National brdcast Date Broadcast Time (ET) Visiting Team Sirius XM Internet Home Team Sirius XM Internet Sirius XM 01/14/2020 06:30 PM Nebraska Cornhuskers 380 970 Ohio State Buckeyes 105 196 958 01/14/2020 07:00 PM Duke Blue Devils 84 84 84 Clemson Tigers 371 371 01/14/2020 07:00 PM Mississippi Rebels 383 973 Florida Gators 384 974 01/14/2020 07:00 PM Louisville Cardinals 81 81 81 Pittsburgh Panthers 381 971 01/14/2020 07:00 PM LSU Tigers 382 972 Texas A&M Aggies 374 374 01/14/2020 08:00 PM Texas Tech Red Raiders 135 199 953 Kansas State Wildcats 386 976 01/14/2020 08:00 PM Iowa Hawkeyes 121 195 957 Northwestern Wildcats 385 975 01/14/2020 08:30 PM DePaul Blue Demons Villanova Wildcats 83 83 83 01/14/2020 09:00 PM Missouri Tigers 382 972 Mississippi State Bulldogs 374 374 01/14/2020 09:00 PM Kansas Jayhawks 84 84 84 Oklahoma Sooners 380 970 01/14/2020 09:00 PM Virginia Tech Hokies 384 974 Wake Forest Demon Deacons 371 371 01/14/2020 09:00 PM TCU Horned Frogs 383 973 West Virginia Mountaineers 211 200 954 01/14/2020 09:00 PM Maryland Terrapins 81 81 81 Wisconsin Badgers 381 971 01/14/2020 11:00 PM San Diego State Aztecs 81 81 81 Fresno State Bulldogs 01/15/2020 06:30 PM Seton Hall Pirates 381 971 Butler Bulldogs 121 202 965 01/15/2020 06:30 PM Kentucky Wildcats 105 190 961 South Carolina Gamecocks 382 972 01/15/2020 06:30 PM Boston College Eagles 978 Syracuse Orange 380 970 01/15/2020 07:00 PM Virginia Cavaliers 211 193 -

Marquette Golden Eagles Marquette Combined Team Statistics (As of Dec 01, 2013) All Games

Marquette Golden Eagles Marquette Combined Team Statistics (as of Dec 01, 2013) All games RECORD: OVERALL HOME AWAY NEUTRAL ALL GAMES 13-6-2 6-2-1 4-4-1 3-0 CONFERENCE 6-2-1 3-1 3-1-1 0-0 NON-CONFERENCE 7-4-1 3-1-1 1-3 3-0 Date Opponent Score Att. ## Player gp g a pts sh sh% sog sog% gw pk-att % Aug 30 at Milwaukee L 1-2 3312 10 NORTEY, C. 21 10 2 22 38 .2 6 3 21 .5 5 3 5 0 - 0 Sep 01 GREEN BAY T o 2 3-3 502 19 BENNETT II, Louis 21 4 5 13 50 .0 8 0 24 .4 8 0 2 0 - 0 # Sep 06 vs Bowling Green W 3-0 193 9 LYSAK, Adam 20 4 3 11 35 .1 1 4 15 .4 2 9 3 0 - 0 # Sep 08 DRAKE W 4-1 411 23 NAVARRO, Coco 21 3 5 11 42 .0 7 1 14 .3 3 3 2 0 - 0 ^ Sep 13 at Michigan W 1-0 1586 2 DILLON, Paul 21 1 7 9 14 .0 7 1 6 .4 2 9 0 0 - 0 ^ Sep 15 MICHIGAN STATE L 0-2 313 4 SJOBERG, Axel 21 2 3 7 22 .0 9 1 6 .2 7 3 0 0 - 1 Sep 21 LOYOLA CHICAGO W 3-0 507 20 TAYLOR, Jake 18 2 2 6 8 .2 5 0 3 .3 7 5 0 0 - 0 * Sep 28 at Xavier W 1-0 694 15 JANSSON, Sebastian 15 2 1 5 17 .1 1 8 10 .5 8 8 0 0 - 0 Oct 02 at Wisconsin L 0-1 833 7 ISLAMI, Kelmend 21 0 5 5 20 .0 0 0 8 .4 0 0 0 0 - 0 * Oct 05 at Villanova Wot 1-0 879 12 POTHAST, Eric 21 2 0 4 16 .1 2 5 9 .5 6 2 1 0 - 0 * Oct 09 #21 BUTLER Wo2 3-2 623 13 PARIANOS, Nick 11 1 1 3 9 .1 1 1 3 .3 3 3 0 0 - 0 * Oct 12 #7 CREIGHTON W 1-0 1165 5 CIESIULKA, Bryan 15 0 2 2 35 .0 0 0 12 .3 4 3 0 0 - 0 * Oct 19 at Providence College W 1-0 617 14 WAHL, Brady 21 0 0 0 10 .0 0 0 3 .3 0 0 0 0 - 0 * Oct 23 at St.