Modeling User Emotion in Interactive Play Environments: A Fuzzy Physiological Approach

EXAMINING COMMITTEE: Torsten Moller, Chair

Recommended publications

Xbox Cheats Guide Page 1 10/05/2004 007 Agent Under Fire

Xbox Cheats Guide 007 Agent Under Fire Golden CH-6: Beat level 2 with 50,000 or more points Infinite missiles while in the car: Beat level 3 with 70,000 or more points Get MP model - poseidon guard: Get 130000 points and all 007 medallions for level 11 Get regenerative armor: Get 130000 points for level 11 Get golden bullets: Get 120000 points for level 10 Get golden armor: Get 110000 points for level 9 Get MP weapon - calypso: Get 100000 points and all 007 medallions for level 8 Get rapid fire: Get 100000 points for level 8 Get MP model - carrier guard: Get 130000 points and all 007 medallions for level 12 Get unlimited ammo for golden gun: Get 130000 points on level 12 Get MP weapon - Viper: Get 90000 points and all 007 medallions for level 6 Get MP model - Guard: Get 90000 points and all 007 medallions for level 5 MP modifier - full arsenal: Get 110000 points and all 007 medallions in level 9 Get golden clip: Get 90000 points for level 5 Get MP power up - Gravity boots: Get 70000 points and all 007 medallions for level 4 Get golden accuracy: Get 70000 points for level 4 Get MP model - Alpine Guard: Get 100000 points and all gold medallions for level 7 Get ( SWEET ) car Lotus Esprit: Get 100000 points for level 7 Get golden grenades: Get 90000 points for level 6 Get MP model Stealth Bond: Get 70000 points and all gold medallions for level 3 Get Golden Gun mode for (MP): Get 50000 points and all 007 medallions for level 2 Get rocket manor ( MP ): Get 50000 points and all gold 007 medallions on first level Hidden Room: On the level Bad Diplomacy get to the second floor and go right when you get off the lift. -

You Are Who You Play You Are Tekofsky, Shoshannah

Tilburg University You are who you play you are Tekofsky, Shoshannah Publication date: 2017 Document Version Publisher's PDF, also known as Version of record Link to publication in Tilburg University Research Portal Citation for published version (APA): Tekofsky, S. (2017). You are who you play you are: Modeling Player Traits from Video Game Behavior. [s.n.]. General rights Copyright and moral rights for the publications made accessible in the public portal are retained by the authors and/or other copyright owners and it is a condition of accessing publications that users recognise and abide by the legal requirements associated with these rights. • Users may download and print one copy of any publication from the public portal for the purpose of private study or research. • You may not further distribute the material or use it for any profit-making activity or commercial gain. • You may freely distribute the URL identifying the publication in the public portal. Take down policy If you believe that this document breaches copyright please contact us providing details, and we will remove access to the work immediately and investigate your claim. Download date: 26 Sep. 2021 Shoshannah Tekofsky YOU ARE WHO YOU PLAY YOU ARE Modelling Player Traits from Video Game Behavior Dissertation submitted to obtain the degree of doctor at Tilburg University, on the authority of the rector magnificus, prof.dr. E.H.L. Aarts, to be defended in public before a committee appointed by the doctorate board, in the auditorium of the University on Monday 19 June 2017 at 16:00, by Shoshannah Tekofsky, born in Wapenveld. -

Exploring Quantitative Methods for Evaluating Sports Games

Exploring Quantitative Methods for Evaluating Sports Games Veronica Zammitto, School of Interactive Arts and Technology, Simon Fraser University, 250 -13450 102 Avenue, Surrey, BC V3T 0A3 Canada. Magy Seif El-Nasr, School of Interactive Arts and Technology, Simon Fraser University, 250 -13450 102 Avenue, Surrey, BC V3T 0A3 Canada. Paul Newton, Electronic Arts Canada, 4330 Sanderson Way, Burnaby, BC V5G 4X1 Canada. Abstract The evaluation of Game User Experience is an emergent and important field in game and HCI research due to its direct impact on the multi-billion dollar interactive entertainment industry. Special attention has been given to the shooter genre. This paper focuses on evaluation of sports games. In particular, it presents an ongoing project that aims to assess the utility of triangulating several quantitative methods, including eye-tracking, psycho-physiological data and game telemetry. This research is an on-going collaboration between Simon Fraser University and Electronic Arts. Results of research including the triangulation of this data for the evaluation of the NBA Live 10 title and evaluation of these methods by EA’s production team will be discussed. Keywords User research, user experience, methodology, game evaluation, usability, psycho-physiology, sport games, telemetry, eye tracking, EMG, GSR, EKG, BVP ACM Classification Keywords H.5.2 Information Interfaces and Presentation: User Interfaces: Evaluation/methodology; K.8.0 Personal Computing: General: Games Copyright is held by the author/owner(s). CHI 2010, April 10–15, 2010, Atlanta, Georgia, USA. ACM 978-1-60558-930-5/10/04. General Terms collected during a play session. -

Sony Playstation 2

Sony PlayStation 2 Last Updated on September 28, 2021 Title Publisher Qty Box Man Comments .hack//G.U. Vol. 1//Rebirth Namco Bandai Games .hack//G.U. Vol. 1//Rebirth: Demo Namco Bandai Games .hack//G.U. Vol. 1//Rebirth: Special Edition Bandai Namco Games .hack//G.U. Vol. 2//Reminisce Namco Bandai Games .hack//G.U. Vol. 3//Redemption Namco Bandai Games .hack//Infection Part 1: Demo Bandai .hack//Infection Part 1 Bandai .hack//Mutation Part 2 Bandai .hack//Mutation Part 2: Trade Demo Bandai .hack//Mutation Part 2: Demo Bandai .hack//Outbreak Part 3: Demo Bandai .hack//Outbreak Part 3 Bandai .hack//Quarantine Part 4 Bandai .hack//Quarantine Part 4: Demo Bandai 007: Agent Under Fire Electronic Arts 007: Agent Under Fire: Greatest Hits Electronic Arts 007: Everything or Nothing Electronic Arts 007: Everything or Nothing: Greatest Hits Electronic Arts 007: Everything or Nothing: Demo Electronic Arts 007: Nightfire Electronic Arts 007: Nightfire: Greatest Hits Electronic Arts 007: Quantum of Solace Activision 18 Wheeler: American Pro Trucker Acclaim 187 Ride or Die Ubisoft 2002 FIFA World Cup Electronic Arts 2006 FIFA World Cup EA Sports 24: The Game 2K Games 25 to Life Eidos 4x4 Evolution Godgames 50 Cent: Bulletproof Vivendi Universal Games 50 Cent: Bulletproof: Greatest Hits Vivendi Universal Games 7 Wonders of the Ancient World MumboJumbo 989 Sports 2004 Disc: Demo 989 Sports 989 Sports Sampler 2003: Demo 989 Sports AC/DC Live: Rock Band Track Pack MTV Games Ace Combat 04: Shattered Skies Namco Ace Combat 04: Shattered Skies: Greatest Hits -

Roster Installation Instructions

Roster Installation Instructions (English)
No matter what game you have, you need the Toolbox.
File: Wasserlasser Toolbox, the Swiss Army knife for add-ons (Wasserlasser, 27 September 2020). Copy it into your NHL folder.
NHL09:
1. Copy and paste or unzip exhibition.exh to your My Documents\NHL09 folder. Say Yes to overwriting the file.
2. Copy and paste or unzip exhibition.exha.tdb to your My Documents\NHL09 folder. Say Yes to overwriting the file.
3. Copy and paste or unzip db.viv to your C:\Program Files\EA Sports\NHL09\db folder. Say Yes to overwriting the file.
4. Copy and paste or unzip db.bh to your C:\Program Files\EA Sports\NHL09\db folder. Say Yes to overwriting the file.
5. Copy and paste or unzip local.viv to your C:\Program Files\EA Sports\NHL09\fe folder. Say Yes to overwriting the file.
6. Run the Toolbox.
NHL08:
1. Copy and paste or unzip exhibition.exh to your My Documents\NHL08 folder. Say Yes to overwriting the file.
2. Copy and paste or unzip exhibition.exha.tdb to your My Documents\NHL08 folder. Say Yes to overwriting the file.
3. Copy and paste or unzip db.viv to your C:\Program Files\EA Sports\NHL08\db folder. Say Yes to overwriting the file.
4. Copy and paste or unzip db.bh to your C:\Program Files\EA Sports\NHL08\db folder. Say Yes to overwriting the file.
5. Copy and paste or unzip local.viv to your C:\Program Files\EA Sports\NHL08\fe folder. -

Nhlview NG User's Guide

Table of Contents
Part I Introduction
1 About NHLView NG
2 System Requirements
3 Installation
4 Getting Started
PS3
XBox 360
Part II User Interface
1 Main Window
2 Players Tab
Personal Data
Appearance -

Computerspielemuseum-567

Super-Stars (computerspielemuseum-535) Ponky II ( Demo) (computerspielemuseum-567) Kids Demo-CD Ubisoft (computerspielemuseum-570) Screen Savers Collection (computerspielemuseum-630) ISM 97 (computerspielemuseum-636) 1869 (computerspielemuseum-661) Bewag - Das Berliner Energie-Programm (computerspielemuseum-667) Kings Quest 8 (computerspielemuseum-860) Addy( Klasse1+2) Mathe (computerspielemuseum-863) Roland Garros - French Open 2000 (computerspielemuseum-867) Master Clips( Publishing Edition) (computerspielemuseum-868) Start Klar( 2.Klasse Rechnen) (computerspielemuseum-893) Print Artist 6 (computerspielemuseum-894) Sacred (computerspielemuseum-1704) Der Brockhaus 2001 (computerspielemuseum-1733) F 1 Championship Season 2000 (computerspielemuseum-1766) Elite Starfighter (computerspielemuseum-1854) Crazy Machines (computerspielemuseum-1855) Spacetanks (computerspielemuseum-1856) Casino Pack 1 (computerspielemuseum-1989) Computer Bild Spiele 8/2001 TOMB RAIDER 2 (computerspielemuseum-2175) Unreal II - The Awakening (computerspielemuseum-2355) The Elder Scrolls III: Morrowind - Collector's Edition (computerspielemuseum-2362) HD HIDDEN & DANGEROUS DELUXE (computerspielemuseum-2555) SHOGUN TOTAL WAR (computerspielemuseum-2561) ZANZARAH (computerspielemuseum-2566) Print-Pack : Formulare (computerspielemuseum-2601) Auftragsverwaltung : Fuer kleine und mittlere Betriebe (computerspielemuseum-2602) Alarm fuer Cobra 11 (computerspielemuseum-2632) Print Pack Tattoo (computerspielemuseum-2635) XDreamity : Get the Power to visualize your -

Gamecube Checklist.Docx

Multiple Regions Title Game Box Manual 1080° Avalanche - NA, PAL ⇫ ⇫ ⇫ 1080: Silver Storm - Japan 18 Wheeler: American Pro Trucker ⇫ ⇫ ⇫ 2002 FIFA World Cup ⇫ ⇫ ⇫ The Adventures of Jimmy Neutron Boy Genius: ⇫ ⇫ ⇫ Attack of the Twonkies The Adventures of Jimmy Neutron Boy Genius: Jet ⇫ ⇫ ⇫ Fusion Aggressive Inline ⇫ ⇫ ⇫ All-Star Baseball 2003 ⇫ ⇫ ⇫ Amazing Island ⇫ ⇫ ⇫ Kaijuu no Shima: Amazing Island - Japan Animal Crossing ⇫ ⇫ ⇫ Doubutsu no Mori Plus - Japan Animaniacs: The Great Edgar Hunt ⇫ ⇫ ⇫ ATV Quad Power Racing 2 ⇫ ⇫ ⇫ Auto Modellista ⇫ ⇫ ⇫ Auto Modellista: U.S.-tuned - Japan Avatar: The Last Airbender ⇫ ⇫ ⇫ Avatar: The Legend of Aang - Europe Bad Boys: Miami Takedown ⇫ ⇫ ⇫ Bad Boys II - PAL Baldur's Gate: Dark Alliance ⇫ ⇫ ⇫ Barbarian ⇫ ⇫ ⇫ Warrior Blade: Rastan vs. Barbarian - Japan Barnyard ⇫ ⇫ ⇫ Baten Kaitos: Eternal Wings and the Lost Ocean ⇫ ⇫ ⇫ Baten Kaitos: Owaranai Tsubasa to Ushinawareta Umi - Japan Baten Kaitos Origins ⇫ ⇫ ⇫ Baten Kaitos II: Hajimari no Tsubasa to Kamigami no Shishi - Japan Batman Begins ⇫ ⇫ ⇫ Batman: Dark Tomorrow ⇫ ⇫ ⇫ Batman: Rise of Sin Tzu ⇫ ⇫ ⇫ Batman Vengeance ⇫ ⇫ ⇫ Battalion Wars ⇫ ⇫ ⇫ Beach Spikers ⇫ ⇫ ⇫ Beyblade VForce: Super Tournament Battle ⇫ ⇫ ⇫ Bakuten Shoot Beyblade 2002: Nettou! Magne Tag Battle - Japan Beyond Good & Evil ⇫ ⇫ ⇫ Big Air Freestyle ⇫ ⇫ ⇫ Big Mutha Truckers ⇫ ⇫ ⇫ Billy Hatcher and the Giant Egg ⇫ ⇫ ⇫ Giant Egg: Billy Hatcher no Daibouken - Japan Bionicle ⇫ ⇫ ⇫ Black & Bruised ⇫ ⇫ ⇫ Blood Omen 2 ⇫ ⇫ ⇫ BloodRayne ⇫ ⇫ ⇫ Bloody Roar: Primal Fury ⇫ ⇫ ⇫ BMX XXX ⇫ ⇫ ⇫ Bomberman Generation ⇫ ⇫ ⇫ Bomberman Jetters ⇫ ⇫ ⇫ Bratz: Forever Diamondz ⇫ ⇫ ⇫ Bratz: Rock Angelz ⇫ ⇫ ⇫ Buffy the Vampire Slayer: Chaos Bleeds ⇫ ⇫ ⇫ Burnout ⇫ ⇫ ⇫ Burnout 2: Point of Impact ⇫ ⇫ ⇫ Bust-a-Move 3000 ⇫ ⇫ ⇫ Super Bust-a-Move All-Stars - PAL Super Puzzle Bobble All-Stars - Japan Call of Duty: Finest Hour ⇫ ⇫ ⇫ Call of Duty 2: Big Red One ⇫ ⇫ ⇫ Capcom vs. -

Nintendo Games

The Video Game Guy, Booths Corner Farmers Market - Garnet Valley, PA 19060 (302) 897-8115 www.thevideogameguy.com System Game Genre NES 720 Sports NES 1942 Other NES 10-Yard Fight Football NES 190 in 1 Cartridge Other NES 1943 the Battle of Midway Other NES 3-D WorldRunner Action & Adventure NES 76 in 1 Cartridge Other NES 8 Eyes Action & Adventure NES A Boy and His Blob Trouble on Blobolonia Action & Adventure NES Abadox Action & Adventure NES Action 52 Action & Adventure NES Addams Family Action & Adventure NES Addams Family Pugsley's Scavenger Hunt Action & Adventure NES Advanced Dungeons and Dragons Dragon Strike Strategy NES Advanced Dungeons and Dragons Heroes of the Lance Strategy NES Advanced Dungeons and Dragons Hillsfar Strategy NES Advanced Dungeons and Dragons Pool of Radiance Strategy NES Adventure Island Action & Adventure NES Adventure Island 3 Action & Adventure NES Adventure Island ii Action & Adventure NES Adventures in the Magic Kingdom Action & Adventure NES Adventures of Bayou Billy Action & Adventure NES Adventures of Dino Rikki Action & Adventure NES Adventures of Lolo Action & Adventure NES Adventures of Lolo 2 Action & Adventure NES Adventures of Lolo 3 Puzzle NES Adventures of Rocky and Bullwinkle and Friends Action & Adventure NES Adventures of Tom Sawyer Action & Adventure NES After Burner Other NES Air Fortress Other NES Airwolf Action & Adventure NES Al Unser Turbo Racing Racing NES Aladdin Action & Adventure NES Alfred Chicken Action & Adventure NES Alien 3 Action & Adventure NES Alien Syndrome Action & -

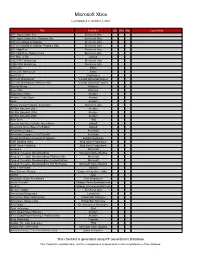

Microsoft Xbox

Microsoft Xbox Last Updated on October 2, 2021 Title Publisher Qty Box Man Comments 007: Agent Under Fire Electronic Arts 007: Agent Under Fire: Platinum Hits Electronic Arts 007: Everything or Nothing Electronic Arts 007: Everything or Nothing: Platinum Hits Electronic Arts 007: NightFire Electronic Arts 007: NightFire: Platinum Hits Electronic Arts 187 Ride or Die Ubisoft 2002 FIFA World Cup Electronic Arts 2006 FIFA World Cup Electronic Arts 25 to Life Eidos 25 to Life: Bonus CD Eidos 4x4 EVO 2 GodGames 50 Cent: Bulletproof Vivendi Universal Games 50 Cent: Bulletproof: Platinum Hits Vivendi Universal Games Advent Rising Majesco Aeon Flux Majesco Aggressive Inline Acclaim Airforce Delta Storm Konami Alias Acclaim Aliens Versus Predator: Extinction Electronic Arts All-Star Baseball 2003 Acclaim All-Star Baseball 2004 Acclaim All-Star Baseball 2005 Acclaim Alter Echo THQ America's Army: Rise of a Soldier: Special Edition Ubisoft America's Army: Rise of a Soldier Ubisoft American Chopper Activision American Chopper 2: Full Throttle Activision American McGee Presents Scrapland Enlight Interactive AMF Bowling 2004 Mud Duck Productions AMF Xtreme Bowling Mud Duck Productions Amped 2 Microsoft Amped: Freestyle Snowboarding Microsoft Game Studios Amped: Freestyle Snowboarding: Platinum Hits Microsoft Amped: Freestyle Snowboarding: Limited Edition Microsoft Amped: Freestyle Snowboarding: Not for Resale Microsoft Game Studios AND 1 Streetball UbiSoft Antz Extreme Racing Empire Interactive / Light... APEX Atari Aquaman: Battle For Atlantis TDK -

Madison Klarkowski Thesis

THE PSYCHOPHYSIOLOGICAL EVALUATION OF THE PLAYER EXPERIENCE Madison Klarkowski B. Games & Interactive Entertainment, Honours (IT). Written under the supervision of Assoc. Prof. Daniel Johnson & Assoc. Prof. Peta Wyeth Assoc. Prof. Simon Smith Submitted in fulfilment of the requirements for the degree of Doctor of Philosophy School of Electrical Engineering and Computer Science Science and Engineering Faculty Queensland University of Technology 2017 Keywords Challenge; Challenge‒skill balance; Electrocardiography; Electrodermal activity; Electroencephalography; Electromyography; Enjoyment; Flow; Physiology; Player experience; Psychophysiology; Self-determination theory; Video games Abstract As video games emerge as a leading form of entertainment, so too does the need for a comprehensive understanding of the player experience. Player experience research thus expands upon this understanding through the lens of psychological constructs such as flow, presence, challenge, competence and self-determination theory. The psychophysiological method presents one approach for evaluating this player experience. A variety of psychophysiological investigations of the player experience have been undertaken, and have contributed novel results that expand upon the understanding of physiological response to video game play. However, these assessments often feature small sample sizes (occasionally only comprising participants of a single gender), or are restricted to employing one or two psychophysiological measures. The value of a study with a large sample size and multiple psychophysiological and subjective measures was thus identified, and undertaken for this program of research. For this study, pilot testing was undertaken to confirm the suitability of the chosen psychophysiological measures, refine the game artefacts and identify the most appropriate subjective measures to use. -

Copy of Games11111111111.Xlsx

Name System Region Case Manual 3DO Interactive Sampler 4 3DO USA No No Alone in the Dark 2 3DO USA No No Battle Chess 3DO USA No No BladeForce 3DO USA No No Crime Patrol 3DO USA No No Dragon's Lair 3DO Japanese Yes Yes Fifa International Soccer 3DO USA No No Flying Nightmares 3DO USA No No Gex 3DO Japanese Yes Yes Gex 3DO USA Yes Yes Gex 3DO USA No No Panasonic Special CD-Rom 3DO Japanese Yes Yes Policenauts 3DO Japanese Yes Yes Psychic Detective 3DO USA No No Puzzle Bobble 3DO Japanese Yes Yes Quarantine 3DO USA No No Road Rash 3DO Japanese Yes Yes Samurai Showdown 3DO Japanese Yes Yes Shockwave 3DO USA No No Shockwave 2: Beyond the Gate 3DO USA No No Space Ace 3DO USA No No Star Fighter 3DO USA No No Street Fighter II 3DO Japanese Yes Yes Super Street Fighter II Turbo 3DO USA No No The Last Bounty Hunter 3DO USA No No Twisted: The Game Show 3DO USA No No VR Stalker 3DO USA No No Waialae Country Club 3DO USA No No Way of the Warrior 3DO USA No No Wing Commander III 3DO USA No No Air-Sea Battle Atari 2600 USA No No Arcade Pinball Atari 2600 USA No No Asteroids Atari 2600 USA No No Atlantis Atari 2600 USA No No Backgammon Atari 2600 USA No No Baseball Atari 2600 USA No No Basic Programming Atari 2600 USA No No Berzerk Atari 2600 USA No No Blackjack Atari 2600 USA No No Brain Games Atari 2600 USA No No Breakout Atari 2600 USA No No Breakout Atari 2600 USA No No Carnival Atari 2600 USA No No Centipede Atari 2600 USA No No Chase Atari 2600 USA No No Circus Atari Atari 2600 USA No No Codebreaker Atari 2600 USA No No Combat Atari 2600 USA No