Piz Daint and Its Ecosystem Sadaf Alam Chief Technology Officer Swiss National Supercomputing Centre November 16, 2017 CSCS in a Nutshell


Recommended publications

An Operational Perspective on a Hybrid and Heterogeneous Cray XC50 System

An Operational Perspective on a Hybrid and Heterogeneous Cray XC50 System

Sadaf Alam, Nicola Bianchi, Nicholas Cardo, Matteo Chesi, Miguel Gila, Stefano Gorini, Mark Klein, Colin McMurtrie, Marco Passerini, Carmelo Ponti, Fabio Verzelloni. CSCS – Swiss National Supercomputing Centre, Lugano, Switzerland. Email: {sadaf.alam, nicola.bianchi, nicholas.cardo, matteo.chesi, miguel.gila, stefano.gorini, mark.klein, colin.mcmurtrie, marco.passerini, carmelo.ponti, fabio.verzelloni}@cscs.ch

Abstract: The Swiss National Supercomputing Centre (CSCS) upgraded its flagship system called Piz Daint in Q4 2016 in order to support a wider range of services. The upgraded system is a heterogeneous Cray XC50 and XC40 system with Nvidia GPU accelerated (Pascal) devices as well as multi-core nodes with diverse memory configurations. Despite the state-of-the-art hardware and the design complexity, the system was built in a matter of weeks and was returned to fully operational service for CSCS user communities in less than two months, while at the same time providing significant improvements in energy efficiency. This paper focuses on the innovative features of the Piz Daint system that not only resulted in an adaptive, scalable and stable platform but also offers a very high level of operational robustness for a complex ecosystem.

[...] added depth provides the necessary space for full-sized PCI-e daughter cards to be used in the compute nodes. The use of a standard PCI-e interface was done to provide additional choice and allow the systems to evolve over time [1]. Figure 1 clearly shows the visible increase in length of 37 cm between an XC40 compute module (front) and an XC50 compute module (rear).

New CSC Computing Resources

New CSC computing resources

Atte Sillanpää, Nino Runeberg, CSC – IT Center for Science Ltd.

Outline: CSC at a glance; New Kajaani Data Centre; Finland’s new supercomputers – Sisu (Cray XC30) – Taito (HP cluster); CSC resources available for researchers.

CSC’s Services: Funet Services, Computing Services, Application Services, Data Services for Science and Culture, Information Management Services. Customers: Universities, Polytechnics, Ministries, Public sector, Research centers, Companies.

FUNET and Data services

FUNET
– Connections to all higher education institutions in Finland and for 37 state research institutes and other organizations
– Network Services and Light paths
– Network Security – Funet CERT
– eduroam – wireless network roaming
– Haka-identity Management
– Campus Support
– The NORDUnet network

Data services
– Digital Preservation and Data for Research: Data for Research (TTA), National Digital Library (KDK), international collaboration via EU projects (EUDAT, APARSEN, ODE, SIM4RDM)
– Database and information services: Paituli (GIS service); Nic.funet.fi (freely distributable files with FTP since 1990); CSC Stream; Database administration services
– Memory organizations (Finnish university and polytechnics libraries, Finnish National Audiovisual Archive, Finnish National Archives, Finnish National Gallery)

Current HPC System Environment

| Name | Louhi | Vuori |
|---|---|---|
| Type | Cray XT4/5 | HP Cluster |
| DOB | 2007 | 2010 |
| Nodes | 1864 | 304 |
| CPU Cores | 10864 | 3648 |
| Performance | ~110 TFlop/s | 34 TF |
| Total memory | ~11 TB | 5 TB |
| Interconnect | Cray SeaStar 3D Torus | QDR IB Fat tree |

A Scheduling Policy to Improve 10% of Communication Time in Parallel FFT

A scheduling policy to improve 10% of communication time in parallel FFT

Samar A. Aseeri (ECRC, KAUST, Thuwal, Saudi Arabia), Anando Gopal Chatterjee (CC, IIT Kanpur, Kanpur, India), Mahendra K. Verma (Dept. of Physics, IIT Kanpur, Kanpur, India), David E. Keyes (ECRC, KAUST, Thuwal, Saudi Arabia)

Abstract: The fast Fourier transform (FFT) has applications in almost every frequency related studies, e.g. in image and signal processing, and radio astronomy. It is also used to solve partial differential equations used in fluid flows, density functional theory, many-body theory, and others. Three-dimensional $N^3$ FFT has large time complexity $O(N^3 \log_2 N)$. Hence, parallel algorithms are made to compute such FFTs. Popular libraries perform slab division or pencil decomposition of $N^3$ data. None of the existing libraries have achieved perfect inverse scaling of time with cores ($T^{-1} \approx n$) because FFT requires all-to-all communication and clusters hitherto do not have physical all-to-all connections. With advances in switches and topologies, we now have Dragonfly topology, which physically connects various units in an all-to-all fashion.

A. FFT Algorithm

Forward Fourier transform is given by the following equation.

$$\hat{f}(\mathbf{k}) = \sum_{k_x, k_y, k_z} f(x, y, z)\, e^{-i k_x x}\, e^{-i k_y y}\, e^{-i k_z z} \qquad (1)$$

The beauty of this sum is that $k_x$, $k_y$, and $k_z$ can be summed independent of each other. These sums are done at different
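The separability noted in the excerpt above can be illustrated with a small, hedged sketch. It is not the paper's parallel algorithm: it uses a naive 1D discrete Fourier transform instead of an FFT, and it assumes the sum runs over grid points with discrete wavenumbers of the form 2πm/N. The function names (`dft_1d`, `dft_3d_direct`, `dft_3d_separable`, `max_abs_diff`) are hypothetical. The point is only that three passes of 1D transforms, one per axis, give the same result as the direct triple sum.

```python
import cmath


def dft_1d(values):
    """Naive 1D DFT: F[k] = sum_x f[x] * exp(-2*pi*i*k*x/N)."""
    n = len(values)
    return [
        sum(values[x] * cmath.exp(-2j * cmath.pi * k * x / n) for x in range(n))
        for k in range(n)
    ]


def dft_3d_direct(f):
    """Evaluate the triple sum directly for an N x N x N grid (O(N^6) work)."""
    n = len(f)
    out = [[[0j] * n for _ in range(n)] for _ in range(n)]
    for kx in range(n):
        for ky in range(n):
            for kz in range(n):
                total = 0j
                for x in range(n):
                    for y in range(n):
                        for z in range(n):
                            phase = -2j * cmath.pi * (kx * x + ky * y + kz * z) / n
                            total += f[x][y][z] * cmath.exp(phase)
                out[kx][ky][kz] = total
    return out


def dft_3d_separable(f):
    """Same transform computed as 1D DFTs along z, then y, then x."""
    n = len(f)
    g = [[[complex(f[x][y][z]) for z in range(n)] for y in range(n)] for x in range(n)]
    # Pass 1: transform every line along the z axis.
    g = [[dft_1d(g[x][y]) for y in range(n)] for x in range(n)]
    # Pass 2: transform every line along the y axis.
    for x in range(n):
        for z in range(n):
            line = dft_1d([g[x][y][z] for y in range(n)])
            for y in range(n):
                g[x][y][z] = line[y]
    # Pass 3: transform every line along the x axis.
    for y in range(n):
        for z in range(n):
            line = dft_1d([g[x][y][z] for x in range(n)])
            for x in range(n):
                g[x][y][z] = line[x]
    return g


def max_abs_diff(a, b):
    """Largest element-wise difference between two N x N x N grids."""
    n = len(a)
    return max(
        abs(a[i][j][k] - b[i][j][k])
        for i in range(n) for j in range(n) for k in range(n)
    )


if __name__ == "__main__":
    grid = [[[x + 2 * y + 3 * z for z in range(3)] for y in range(3)] for x in range(3)]
    print(max_abs_diff(dft_3d_direct(grid), dft_3d_separable(grid)) < 1e-9)
```

In a slab or pencil decomposition, each of these per-axis passes is what gets distributed across processes, with a data transpose between passes. That transpose is where the all-to-all communication mentioned in the abstract arises.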

View Annual Report

Fellow Shareholders, Headlined by strong growth in revenue and profitability, we had one of our best years ever in 2015, executing across each of our major focus areas and positioning our company for continued growth into the future. We achieved another year of record revenue, growing by nearly 30 percent compared to 2014. In fact, our revenue in 2015 was more than three times higher than just four years prior — driven by growth in both our addressable market and market share. Over the last five years, we have transformed from a company solely focused on the high-end of the supercomputing market — where we are now the clear market leader — to a company with multiple product lines serving multiple markets. We provide our customers with powerful computing, storage and analytics solutions that give them the tools to advance their businesses in ways never before possible. During 2015, we installed supercomputing and storage solutions at a number of customers around the world. In the U.S., we completed the first phase of the massive new “Trinity” system at Los Alamos National Laboratory. This Cray XC40 supercomputer with Sonexion storage serves as the National Nuclear Security Administration’s flagship supercomputer, supporting all three of the NNSA’s national laboratories. We installed the first petaflop supercomputer in India at the Indian Institute of Science. In Europe, we installed numerous XC and storage solutions, including a significant expansion of the existing XC40 supercomputer at the University of Stuttgart in Germany. This new system nearly doubles their computing capacity and is currently the fastest supercomputer in Germany. -

A New UK Service for Academic Research

EPCC news: the newsletter of EPCC, the supercomputing centre at the University of Edinburgh. Issue 74, Autumn 2013.

In this issue: Managing research data; HPC for business; Simulating soft materials; Energy efficiency in HPC; Intel Xeon Phi. Also in this issue: Dinosaurs!

ARCHER: A new UK service for academic research. ARCHER is a 1.56 Petaflop Cray XC30 supercomputer that will provide the next UK national HPC service for academic research.

Contents
3 PGAS programming: 7th International Conference
Profile: Meet the people at EPCC
4 New national HPC service: Introducing ARCHER
Big Data
7 Data preservation and infrastructure
9 HPC for industry: Making business better
11 Simulation: Better synthesised sounds

From the Directors

Autumn marks the start of the new ARCHER service: a 1.56 Petaflop Cray XC30 supercomputer that will provide the next UK national HPC service for academic research. We have been involved in running national HPC services for over 20 years and ARCHER will continue our tradition of supporting science in the UK and in Europe. Our engagement with supercomputing takes many forms - from racing dinosaurs at science festivals, to helping researchers get more from HPC by improving algorithms and creating new software tools. EPCC staff [...]

[...] generation of exascale supercomputers which will be many, many times more powerful than ARCHER. Big Data activities are also playing an increasingly important part in our academic and industry work. We have always prided ourselves on the diversity of our activities. This issue of EPCC News showcases just a fraction of them.

This Is Your Presentation Title

Introduction to GPU/Parallel Computing

Ioannis E. Venetis, University of Patras. www.prace-ri.eu

Introduction to High Performance Systems

Wait, what? Aren’t we here to talk about GPUs? And how to program them with CUDA? Yes, but we need to understand their place and their purpose in modern High Performance Systems. This will make it clear when it is beneficial to use them.

Top 500 (June 2017)

| Rank | Site | System | CPU Cores | Accel. Cores | Rmax (TFlop/s) | Rpeak (TFlop/s) | Power (kW) |
|---|---|---|---|---|---|---|---|
| 1 | National Supercomputing Center in Wuxi, China | Sunway TaihuLight - Sunway MPP, Sunway SW26010 260C 1.45GHz, Sunway (NRCPC) | 10,649,600 | - | 93,014.6 | 125,435.9 | 15,371 |
| 2 | National Super Computer Center in Guangzhou, China | Tianhe-2 (MilkyWay-2) - TH-IVB-FEP Cluster, Intel Xeon E5-2692 12C 2.200GHz, TH Express-2, Intel Xeon Phi 31S1P (NUDT) | 3,120,000 | 2,736,000 | 33,862.7 | 54,902.4 | 17,808 |
| 3 | Swiss National Supercomputing Centre (CSCS) | Piz Daint - Cray XC50, Xeon E5-2690v3 12C 2.6GHz, Aries interconnect, NVIDIA Tesla P100 (Cray Inc.) | 361,760 | 297,920 | 19,590.0 | 25,326.3 | 2,272 |
| 4 | DOE/SC/Oak Ridge National Laboratory, United States | Titan - Cray XK7, Opteron 6274 16C 2.200GHz, Cray Gemini interconnect, NVIDIA K20x (Cray Inc.) | 560,640 | 261,632 | 17,590.0 | 27,112.5 | 8,209 |
| 5 | DOE/NNSA/LLNL, United States | Sequoia - BlueGene/Q, Power BQC 16C 1.60 GHz, Custom (IBM) | 1,572,864 | - | 17,173.2 | 20,132.7 | 7,890 |

How do

Through the Years… When Did It All Begin?

Through the years… When did it all begin? 1974? 1978? 1963?

CDC 6600 – 1974: NERSC started service with the first Supercomputer…
● A well-used system - Serial Number 1
● On its last legs…
● Designed and built in Chippewa Falls
● Launch Date: 1963
● Load / Store Architecture
● First RISC Computer!
● First CRT Monitor
● Freon Cooled
● State-of-the-Art Remote Access at NERSC
● Via 4 acoustic modems, manually answered, capable of 10 characters/sec

50th Anniversary of the IBM / Cray Rivalry…
Last week, CDC had a press conference during which they officially announced their 6600 system. I understand that in the laboratory developing this system there are only 32 people, “including the janitor”… Contrasting this modest effort with our vast development activities, I fail to understand why we have lost our industry leadership position by letting someone else offer the world’s most powerful computer… T.J. Watson, August 28, 1963

2/6/14 Cray Higher-Ed Roundtable, July 22, 2013

CDC 7600 – 1975
● Delivered in September
● 36 Mflop Peak
● ~10 Mflop Sustained
● 10X sustained performance vs. the CDC 6600
● Fast memory + slower core memory
● Freon cooled (again)
Major Innovations
§ 65KW Memory
§ 36.4 MHz clock
§ Pipelined functional units

Cray-1 – 1978: NERSC transitions users to vector architectures
● Serial 6
● A fairly easy transition for application writers
● LTSS was converted to run on the Cray-1 and became known as CTSS (Cray Time Sharing System)
● Freon Cooled (again)
● 2nd Cray 1 added in 1981
Major Innovations
§ Vector Processing
§ Dependency

TECHNICAL GUIDELINES FOR APPLICANTS TO PRACE 17th CALL

TECHNICAL GUIDELINES FOR APPLICANTS TO PRACE 17th CALL (Tier-0)

The contributing sites and the corresponding computer systems for this call are:

| System | Architecture | Site (Country) | Core Hours (node hours) | Minimum request |
|---|---|---|---|---|
| Joliot Curie - SKL | Bull Sequana X1000 | GENCI@CEA (FR) | 134 million (2.8 million) | 15 million core hours |
| Joliot Curie - KNL | BULL Sequana X1000 | GENCI@CEA (FR) | 72 million (1.1 million) | 15 million core hours |
| Hazel Hen | Cray XC40 System | GCS@HLRS (DE) | 70 million (2.9 million) | 35 million core hours |
| JUWELS | Multicore cluster | GCS@JSC (DE) | 70 million (1.5 million) | 35 million core hours |
| Marconi-Broadwell | Lenovo System | CINECA (IT) | 36 million (1 million) | 15 million core hours |
| Marconi-KNL | Lenovo System | CINECA (IT) | 612 million (9 million) | 30 million core hours |
| MareNostrum | Lenovo System | BSC (ES) | 240 million (5 million) | 15 million core hours |
| Piz Daint | Cray XC50 System | CSCS (CH) | 510 million (7.5 million) | 68 million core hours; use of GPUs |
| SuperMUC / SuperMUC-NG | Lenovo NextScale / Lenovo ThinkSystem | GCS@LRZ (DE) | 105 million (3.8 million) | 35 million core hours |

The site selection is done together with the specification of the requested computing time by the two sections at the beginning of the online form. The applicant can choose one or several machines as execution system, as long as proper benchmarks and resource request justification are provided on each of the requested systems. The parameters are listed in tables. The first column describes the field in the web online form to be filled in by the applicant. The remaining columns specify the range limits for each system.

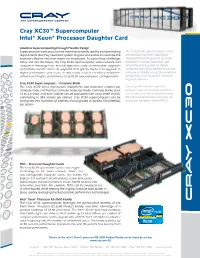

Cray XC30™ Supercomputer Intel® Xeon® Processor Daughter Card

Intel, Xeon, Aries and the Intel Logo are trademarks of Intel Corporation in the U.S. and/or other countries. All other trademarks mentioned herein are the properties of their respective owners. 20121024JRC 2012 Cray Inc. All rights reserved. Specifications are subject to change without notice. Cray is a registered trademark, Cray XC30, Cray Linux Environment, Cray SHMEM, and NodeKare are trademarks of Cray Inc.

Cray XC30™ Supercomputer Intel® Xeon® Processor Daughter Card

Adaptive Supercomputing through Flexible Design

Supercomputer users procure their machines to satisfy specific and demanding requirements. But they need their system to grow and evolve to maximize the machine’s lifetime and their return on investment. To solve these challenges today and into the future, the Cray XC30 supercomputer series network and compute technology have been designed to easily accommodate upgrades and enhancements. Users can augment their system “in-place” to upgrade to higher performance processors, or add coprocessor/accelerator components to build even higher performance Cray XC30 supercomputer configurations.

Cray XC30 Supercomputer: Compute Blade

The Cray XC30 series architecture implements two processor engines per compute node, and has four compute nodes per blade. Compute blades stack 16 to a chassis, and each cabinet can be populated with up to three chassis, culminating in 384 sockets per cabinet.

Sidebar: The Cray XC30 supercomputer series architecture has been specifically designed from the ground up to be adaptive. A holistic approach optimizes the entire system to deliver sustained real-world performance and extreme scalability across the collective integration of all hardware, networking and software. One key differentiator with this adaptive supercomputing platform is the flexible method of implementing
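The 384-socket figure quoted above follows directly from the packaging numbers in the same passage. The short sketch below reproduces that arithmetic. The constant and function names are hypothetical, and it assumes that each "processor engine" corresponds to one socket, which is how the excerpt's own total appears to be derived.

```python
# Packaging figures quoted in the excerpt; one processor engine is
# assumed to occupy one socket.
SOCKETS_PER_NODE = 2       # two processor engines per compute node
NODES_PER_BLADE = 4        # four compute nodes per blade
BLADES_PER_CHASSIS = 16    # blades stack 16 to a chassis
MAX_CHASSIS_PER_CABINET = 3


def sockets_per_cabinet(chassis: int = MAX_CHASSIS_PER_CABINET) -> int:
    """Return the socket count of a cabinet populated with `chassis` chassis."""
    if not 1 <= chassis <= MAX_CHASSIS_PER_CABINET:
        raise ValueError("a cabinet holds between 1 and 3 chassis")
    return SOCKETS_PER_NODE * NODES_PER_BLADE * BLADES_PER_CHASSIS * chassis


if __name__ == "__main__":
    print(sockets_per_cabinet())   # fully populated cabinet
    print(sockets_per_cabinet(1))  # single chassis
```

A fully populated cabinet gives 2 × 4 × 16 × 3 = 384 sockets, matching the excerpt; a single chassis holds 128.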

CRAY XC30 System User Training Workshop

CRAY XC30 System User Training Workshop, 2015/06/15

Preparation for the exercises: the complete set of exercise programs is located under /work/Samples/workshop2015. Please copy it to your own working directory under /work.

Agenda
13:30 - 13:45 Cray XC30 system overview (hardware, software)
13:45 - 14:00 Cray XC30 system site configuration (hardware, software)
14:00 - 14:10 Break
14:10 - 14:50 XC30 programming environment; exercise
14:50 - 15:00 Break
15:00 - 15:10 What is MPI?
15:10 - 15:50 A simple MPI program; exercise
15:50 - 16:00 Break
16:00 - 16:20 Explanation of the main MPI functions; exercise
16:20 - 16:50 Optimization by rewriting code; exercise
16:50 - 17:00 How to learn more advanced usage
17:00 - 17:30 Questions and answers

CRAY System Roadmap (XT~XC30): Cray XT3 “Red Storm” (2005), Cray XT4 (2006), Cray XT5h (2007), Cray XT5 (2007), Cray XE6 (2010), Cray XC30 (2012). Cray XT Infrastructure; XK System with GPU. XMT is based on XT3 infrastructure. XMT2 (fall 2011): larger memory, higher bandwidth, enhanced RAS, new performance features.

CRAY XC30 System configuration (1)
Initial configuration: 360 nodes (720 CPUs, 5760 cores), theoretical peak performance 119.8 TFLOPS, total main memory 22.5 TB.
From 2014/12/25: 360 nodes (720 CPUs, 8640 cores), theoretical peak performance 359.4 TFLOPS, total main memory 45 TB.
Diagram labels: front-end service node (login node), system management node, FC switch, secondary storage, magnetic disk unit, system disk, SMW, management terminal, System, SDB. Links: 4x QDR Infiniband, 8Gbps Fibre Channel, 1GbE or 10GbE, campus network.

System configuration (compute nodes)

| Item | E5-2670 nodes | E5-2690 V3 nodes |
|---|---|---|
| Number of nodes | 360 nodes (720 CPUs, 5760 cores) | 360 nodes (720 CPUs, 8640 cores) |
| Total theoretical performance | 119.8 TFLOPS | 359.4 TFLOPS |
| Main memory capacity | 22.5 TB | 47 TB |
| CPU | Intel Xeon E5-2670 2.6GHz 8core | Intel Xeon E5-2690 V3 2.6GHz 12core |
| CPUs per node | 2 | 2 |
| CPU theoretical performance | 166.4 GFLOPS | 499.2 GFLOPS |
| Node theoretical performance | 332.8 GFLOPS | 998.4 GFLOPS |
| Node main memory | 64GB (8GB DDR3-1600 ECC DIMM x8) | 128GB (16GB DDR4-2133 ECC DIMM x8) |
| Node memory bandwidth | 102.4GB/s | 136.4GB/s |

TOP500 (http://www.top500.org/): TOP500 periodically ranks the 500 fastest computer systems in the world
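The theoretical peak figures in the compute-node specification above can be cross-checked with the usual formula: clock rate × cores × floating-point operations per cycle. The sketch below does that under one labeled assumption: the per-core values of 8 and 16 double-precision operations per cycle are not stated in the excerpt and were chosen because they reproduce its numbers. The function and constant names are hypothetical.

```python
CPUS_PER_NODE = 2   # from the node specification
NODES = 360         # from the system configuration


def peak_gflops(clock_ghz: float, cores: int, flops_per_cycle: int) -> float:
    """Theoretical peak of one CPU in GFLOPS."""
    return clock_ghz * cores * flops_per_cycle


def system_summary(clock_ghz: float, cores: int, flops_per_cycle: int):
    """Return (CPU GFLOPS, node GFLOPS, system TFLOPS), rounded to 0.1."""
    cpu = peak_gflops(clock_ghz, cores, flops_per_cycle)
    node = cpu * CPUS_PER_NODE
    system_tflops = node * NODES / 1000.0
    return round(cpu, 1), round(node, 1), round(system_tflops, 1)


if __name__ == "__main__":
    # flops_per_cycle values are assumptions, not taken from the slides.
    print(system_summary(2.6, 8, 8))     # Xeon E5-2670, 8 cores
    print(system_summary(2.6, 12, 16))   # Xeon E5-2690 V3, 12 cores
```

With these inputs the results are 166.4 GFLOPS per CPU, 332.8 GFLOPS per node and 119.8 TFLOPS for the 8-core configuration, and 499.2 GFLOPS, 998.4 GFLOPS and 359.4 TFLOPS for the 12-core configuration, which agrees with the quoted figures.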

Cray XC40 Power Monitoring and Control for Knights Landing

Cray XC40 Power Monitoring and Control for Knights Landing

Steven J. Martin, David Rush (Cray Inc., Chippewa Falls, WI USA, {stevem,rushd}@cray.com); Matthew Kappel, Michael Sandstedt, Joshua Williams (Cray Inc., St. Paul, MN USA, {mkappel,msandste,jw}@cray.com)

Abstract: This paper details the Cray XC40 power monitoring and control capabilities for Intel Knights Landing (KNL) based systems. The Cray XC40 hardware blade design for Intel KNL processors is the first in the XC family to incorporate enhancements directly related to power monitoring feedback driven by customers and the HPC community. This paper focuses on power monitoring and control directly related to Cray blades with Intel KNL processors and the interfaces available to users, system administrators, and workload managers to access power management features.

Keywords: Power monitoring; power capping; RAPL; energy efficiency; power measurement; Cray XC40; Intel Knights Landing

I. INTRODUCTION

Cray has provided advanced power monitoring and control [...]

This paper is organized as follows: In section II, we detail blade-level Hardware Supervisory System (HSS) architecture changes introduced to enable improved telemetry gathering capabilities on Cray XC40 blades with Intel KNL processors. Section III provides information on updates to interfaces available for power monitoring and control that are new for Cray XC40 systems and the software released to support blades with Intel KNL processors. Section IV shows monitoring and control examples.

II. ENHANCED HSS BLADE-LEVEL MONITORING

The XC40 KNL blade incorporates a number of enhancements over previous XC blade types that enable the collection of power telemetry in greater detail and with improved accuracy.

HPC in Europe

HPC IN EUROPE
Organisation of public HPC resources

Context
• Focus on publicly-funded HPC resources provided primarily to enable scientific research and development at European universities and other publicly-funded research institutes
• These resources are also intended to benefit industrial / commercial users by:
  • facilitating access to HPC
  • providing HPC training
  • sponsoring academic-industrial collaborative projects to exchange expertise and accelerate efficient commercial exploitation of HPC
• Do not consider private sector HPC resources owned and managed internally by companies, e.g. in aerospace design & manufacturing, oil & gas exploration, fintech (financial technology), etc.

European HPC Infrastructure
• Structured provision of European HPC facilities:
  • Tier-0: European Centres (> petaflop machines)
  • Tier-1: National Centres
  • Tier-2: Regional/University Centres
• Tiers planned as part of an EU Research Infrastructure Roadmap
• This is coordinated through “PRACE” – http://prace-ri.eu

PRACE: Partnership foR Advanced Computing in Europe
• International non-profit association (HQ office in Brussels)
• Established in 2010 following ESFRI* roadmap to create a persistent pan-European Research Infrastructure (RI) of world-class supercomputers
• Mission: enable high-impact scientific discovery and engineering research and development across all disciplines to enhance European competitiveness for the benefit of society.
*European Strategy Forum on Research Infrastructures

Aims:
• Provide access to leading-edge computing and data management resources and services for large-scale scientific and engineering applications at the highest performance level
• Provide pan-European HPC education and training
• Strengthen the European users of HPC in industry

A Brief History of PRACE

PRACE Phases & Objectives
• Preparation and implementation of the PRACE RI was supported by a series of projects funded by the EU’s FP7 and Horizon 2020 funding programmes
• 530 M€ of funding for the period 2010-2015.