Practical AVR Microcontrollers Games, Gadgets, and Home Automation with the Microcontroller Used in the Arduino

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


What Are Your Best Computer Options for Teleworking?

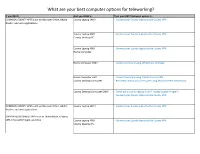

| If you NEED… | And you HAVE a… | Then your BEST telework option is… |
| --- | --- | --- |
| COMMON COUNTY APPS such as Microsoft Office, Adobe Reader, and web applications | County Laptop ONLY | Connect your County laptop to the County VPN |
| | County Laptop AND County Desktop PC | Connect your County laptop to the County VPN |
| | County Laptop AND Home Computer | Connect your County laptop to the County VPN |
| | Home Computer ONLY | Connect remotely using VDI (Virtual Desktop) |
| | Home Computer AND County Desktop Computer | Connect remotely using Dakota County VPN; remotely control your computer using Windows Remote Desktop |
| | County Desktop Computer ONLY | Check out a County laptop from IT Laptop Loaner Program; connect your County laptop to the County VPN |
| COMMON COUNTY APPS such as Microsoft Office, Adobe Reader, and web applications, and SUPPORTED BUSINESS APPS such as OneSolution, OnBase, SIRE, Microsoft Project, and Visio | County Laptop ONLY | Connect your County laptop to the County VPN |
| | County Laptop AND County Desktop PC | Connect your County laptop to the County VPN |
| | County Laptop AND Home Computer | Connect your County laptop to the County VPN |
| | Home Computer ONLY | Contact the County IT Help Desk at 651/438-4346 |
| | Home Computer AND County Desktop Computer | Connect remotely using Dakota County VPN; remotely control your computer using Windows Remote Desktop |
| | County Desktop Computer ONLY | Check out a County laptop from IT Laptop Loaner Program; connect your County laptop - |

Resource Guide: Workstation Productivity

Contents

- Beyond the Desktop: Workstation Productivity. Find out how to get the highest productivity possible from high performance applications that require more power than traditional desktop computers.
- Top IT Considerations for Mobile Workstations. Discover the top IT considerations for organizations that require mobile workstations designed to provide a balance of performance and portability.
- Maximum Management and Control with Virtualized Workstations. Explore the benefits of virtualization to ensure the greatest possible management and control of high performance workstations for the most demanding workloads and applications.

Copyright © MMIX, CBS Broadcasting Inc. All Rights Reserved. For more downloads and a free TechRepublic membership, please visit http://techrepublic.com.com/2001-6240-0.html

Beyond the Desktop: Workstation Productivity

Find out how to get the highest productivity possible from high performance applications that require more power than traditional desktop computers.

Desktop computers have come a long way in terms of value and performance for the average user, but more advanced applications now require even more in terms of processing, graphics, memory and storage. The following is a brief overview of the differences between standard desktops and workstations, as well as the implications for overall performance and productivity, as compiled from the editorial resources of CNET, TechRepublic and ZDNet.com.

Think Inside the Box

A lot of people tend to think of desktop computers and computer workstations as one and the same, but that isn't always the case in terms of power and performance. In fact, many of today's most demanding workloads and applications for industries such as engineering, entertainment, finance, manufacturing, and science actually require much higher functionality than what traditional desktops have to offer. -

Good Reasons to Use a Desktop Computer

Why would anyone possibly need a desktop? For most, it goes against all common sense to use a desktop because they're not as convenient and portable as a laptop, and a laptop does what you need it to do just fine. At the same time, the good ol' bulky, stationary desktop still has a place for many people. Here are good reasons why you might want to use a desktop computer.

You can upgrade the parts, which is cheaper than buying a new laptop. When a laptop starts to slow down, your only option is to buy a whole new one. Depending on what you need a computer for, a new laptop could cost thousands of dollars. That's because you have to buy every single part inside the laptop brand new: the processor, motherboard, RAM, hard drive, and everything else. But most of the parts in your old laptop are probably still fine, and it's usually only the processor (and the motherboard it sits on) that needs upgrading. With a desktop you can easily replace an aging processor (and motherboard) for around $300. That said, all-in-one desktops like the iMac can be a little difficult to upgrade.

You get more bang for your buck. Depending on the brand, desktops and laptops with similar specs can often cost the same. Yet the main differences between the two are the processor and the graphics processor. Most laptops use the smaller mobile version of a processor model, which often isn't quite as powerful as the full-size desktop version. -

Today Computers Are All Around Us. from Desktop Computers To

Today computers are all around us. From desktop computers to smartphones, they're changing the way that we live our lives. But have you ever asked yourself what exactly a computer is? A computer is an electronic device that manipulates information or data. The computer sees data as ones and zeros, but it knows how to combine them into more complex things such as a photograph, a movie, a website, a game and much more. Computers use a combination of hardware and software. Hardware is any physical part of the computer, which includes all of the internal components and also the external parts like the monitor and keyboard. Software is any set of instructions that tells the hardware what to do, such as a web browser, media player or word processor. Now, when most people say computer, they're talking about a personal computer. This can be a desktop computer or a laptop computer, which has basically the same capabilities but in a more portable package. Personal computers come in two main styles, PC and Mac. PCs are the most common type; many different companies make them, and they usually come with the Microsoft Windows operating system. Macs, or Macintosh computers, are all made by one company, Apple, and they come with the Mac OS X operating system. Computers come in many other shapes and sizes: mobile phones, tablets, game consoles and even TVs have built-in computers, although they may not do everything a desktop or laptop can. There is another type of computer that plays an important role in our lives: servers. -

Desktop Computer Replacement Program Date: May 22, 2019 From: Information Technology Department Presentation Steve Ely, I.T

CITY COUNCIL AGENDA REPORT

MEETING DATE: JUNE 4, 2019
ITEM NUMBER: CC-7
SUBJECT: DESKTOP COMPUTER REPLACEMENT PROGRAM
DATE: MAY 22, 2019
FROM: INFORMATION TECHNOLOGY DEPARTMENT
PRESENTATION BY: STEVE ELY, I.T. DIRECTOR
FOR FURTHER INFORMATION CONTACT: STEVE ELY, I.T. DIRECTOR, 714-754-4891

RECOMMENDATION: Staff recommends that the City Council:

1. Approve the Desktop Computer Replacement Program for the purchase of 435 desktop computers in three phases to replace the computers that have already reached their end-of-life stage.
2. Approve and authorize the Acting City Manager to execute a purchase order with Dell Marketing, LP. (Dell) in the amount of $166,266.27 for completion of the first phase of the Desktop Computer Replacement Program, which includes the purchase of 140 desktop computers.

BACKGROUND: The City purchased 435 desktop computers in 2013. These computers have long passed their life expectancy. Their warranty expired in 2016, which makes them prone to issues and causes staff to have to troubleshoot or make repairs as much as they can. In addition to dedicating time for repairs, parts may become obsolete or costly to obtain, resulting in the necessity to purchase new equipment. Desktop computers are the most heavily used pieces of equipment, and upgrading them will allow employees to be more efficient in their daily duties and provide the assurance that data and information will not be lost due to aging equipment.

ANALYSIS: Many department computers have exceeded their useful life and become inefficient in performing normal functions. Over time, these resources wear, age, and/or become obsolete, causing performance degradation, excessive support and repair activity, and loss of reliability. -

Connecting an LCD Projector to a Desktop Computer

INSTRUCTIONS

1. Disconnect the computer monitor cable from the back of the computer.
2. Connect the splitter to the monitor port of the computer.
3. Connect the computer monitor cable to one end of the splitter.
4. Connect one end of the long projector cable to the other end of the splitter.
5. Connect the other end of the long projector cable to the projector at the "computer in" (not "monitor out") port.
6. Make sure the projector's power cable is plugged into an electrical outlet.
7. All projectors have an on/off switch. Press once to power on the lamp. Press twice to power off the lamp. The fan will continue to run after the lamp is powered off. DO NOT UNPLUG THE PROJECTOR UNTIL THE FAN STOPS RUNNING!
8. Adjust the "feet" on the projector to get the correct height for the projection. Depending on the model of projector, "feet" might be on the bottom of any corner or in the middle front.

[Figure labels: Splitter Into Computer Monitor Port; Computer Monitor Cable; Long Cable to Projector; Projector's On/Off Switch (look varies depending on model; might be a different color); Long Projector Cable Into Projector; Projector's Power Cable]

CAUTION: Do not EVER look directly into the lamp of the projector or allow a student to do so. Permanent damage to vision can occur.

TROUBLESHOOTING

If there is no computer image projected:

1. Make sure computer is powered on and logged in.
2. Make sure the projector is powered on. -

Types of Computer

ICT IGCSE Theory – Revision Presentation
Chapter 1: Types and components of computer systems
1.4 Types of computer

• Describe the characteristics of a personal/desktop computer and its uses, both as a standalone and networked computer
• Describe the characteristics of a laptop computer and what it is used for, both as a standalone and networked computer
• Describe the characteristics of a tablet computer and what it is used for, including its ability to use wireless technology or 3G/4G technology
• Describe the computer characteristics of a smartphone and what it is used for in computing terms
• Describe the advantages and disadvantages of each type of computer

WWW.YAHMAD.CO.UK

Describe the characteristics of a personal/desktop computer and its uses, both as a standalone and networked computer

Desktop/Personal Computer
• Computers used to be huge and would sometimes take up the whole space of a room.
• In the 1980s (desktop/personal) computers were created and were small enough to fit on desks.
• These computers were cheap and affordable for the public.
• Desktop computers normally have a separate monitor, keyboard, mouse, speakers etc.

Standalone
• Can be used as a standalone computer in your home for personal or work use.
• Tends to be connected to its own printer and internet connection.

Networked
• Share resources
• Share files
• Shared drives
• Central storage
• Online gaming

Describe the characteristics of a laptop computer and what it is used for, both as a standalone and networked computer

Laptop Computer
• A laptop is a computer where all the components (monitor, keyboard etc.) are together on a single device. -

How to Set up Your UCSF Computer Workstation for a Home Or Remote Location

You must obtain approval from your supervisor prior to moving your desktop computer home, then open a ticket with IT to ensure your workstation is ready. You can do this by submitting the Move Computers Request form. (Select "Other" for the move-to location.)

Setting up a UCSF workstation at a remote location outside UCSF (e.g. a private home network) depends on the type of device, as each scenario is different, and the user experience also depends on the home network connection. Before moving equipment to a remote location, consider taking photographs of how things are connected so you can easily reconnect everything properly.

Requirements:

The UCSF workstation will need to have our Security Suite installed. If this is a UCSF computer, these requirements are already met.

- DDPE encrypted
- Big Fix
- Secure Connector
- Secure Pulse for VPN (can be installed from software.ucsf.edu)
- SEP Antivirus

The workstation will need the following components:

- Power cables
- Home router (for network)
- Network cable or WiFi USB adapter
- Mouse
- Keyboard
- Monitor or laptop screen
- Monitor cable, depending on the type of workstation

The user will need the following:

- VPN access
- Secure Pulse installed for DUO access
- Space to accommodate workstation/monitors
- Available power outlets, ideally with a surge protector to protect the device from electrical surges (with the ground)
- Means to connect to the Internet from a remote site (home), either via wireless or Ethernet hard-wired connection
- Users must sign in to the device with their AD credentials while on the UCSF network before taking it home

Setting up a monitor

1. -

Desktop Computer Hardware & Software

RARITAN VALLEY COMMUNITY COLLEGE
Course Outline
CISY 113 - Desktop Computer Hardware & Software

I. Basic Course Information

A. Course Number and Title: CISY 113 – Desktop Computer Hardware & Software
B. Date of Proposal: January 2007
C. Sponsoring Department: Computer Science (CS)
D. Semester Credit Hours: 3
E. Weekly Contact Hours: 4 (Lecture 2, Lab 2)
F. Prerequisite: None
G. Laboratory Fees: Yes

II. Catalog Description

(Prerequisites: None) This course provides an introduction to the infrastructure components of desktop computers and their application to business problems. A study of current hardware and operating environments is presented. The course also covers basic troubleshooting methods and systems administration tasks.

III. Statement of Course Need

To compete in today's job market, students need to understand the theory of operation of basic desktop computer hardware and operating environments beyond basic application software literacy. This course provides students with the foundational understanding necessary for the basic support of administrative, maintenance and troubleshooting tasks associated with Desktop Computer Support. Since Desktop Computers are commonplace both at home and in business, there is an increasing need for people who can assist in the support of hardware and software for individual needs and for small business environments.

IV. Place of Course in College Curriculum

A. Requirement – Required course in
   a. Information Systems & Technology A.S. Degree
   b. Information Systems & Technology A.A.S. Degree
   c. Desktop Support Certificate
   d. Web Developer Certificate Program
   Elective – Satisfies any Computer Science Elective at the 100 level
   Free Elective
B. Although this revised course has not been evaluated by NJTransfer yet, the previous version of this course only transferred to a handful of schools. -

Gateway Desktop Computer User's Manual

© 2013. All Rights Reserved.
Gateway Desktop Computer
Covers: Tower models
This revision: October 2013

Sign up for an Acer ID and enable Acer Remote Files

Open the Acer Portal from the Start screen to sign up for an Acer ID or sign in if you already have an Acer ID. There are three great reasons for you to get an Acer ID:

• Remotely access your PC from your other devices with our free Acer Remote Files app
• Get the latest offers and product information
• Register your device for warranty service

For more information please visit the AcerCloud website: www.acer.com/acercloud

Model number: _________________________________
Serial number: _________________________________
Date of purchase: ______________________________
Place of purchase: ______________________________

TABLE OF CONTENTS

First things first
  Your guides
  Basic care and tips for using your computer
    Turning your computer on and off
    Taking care of your computer
    Power cord information
    Cleaning and servicing
Recovery
  Creating a recovery backup
  Creating a drivers and applications backup
  Recovering your system
    Reinstalling drivers and applications
Memory card reader
  Connectivity options
Video and audio connectors
HDMI
Universal Serial Bus (USB)
Frequently asked questions
  Requesting service
  Tips and hints for using Windows 8.1
    How do I get to Start?
    What are "Charms?"
    Can I boot directly to the desktop?
    How do I jump between apps?
    How do I turn off my computer? -

Getting to Know Computers

Computer Basics

What is a Computer?

A computer is an electronic device that manipulates information, or "data." It has the ability to store, retrieve, and process data. You can use a computer to type documents, send email, and browse the internet. You can also use it to handle spreadsheets, accounting, database management, presentations, games, and more. Watch the video to learn about different types of computers.

Computers Simplified

For beginning computer users, the computer aisles at an electronics store can be quite a mystery, not to mention overwhelming. However, computers really aren't that mysterious. All types of computers consist of two basic parts:

Hardware is any part of your computer that has a physical structure, such as the computer monitor or keyboard.

Software is any set of instructions that tells the hardware what to do. It is what guides the hardware and tells it how to accomplish each task. Some examples of software are web browsers, games, and word processors such as Microsoft Word.

[Figures: a motherboard (hardware); Microsoft Word (software)]

Anything you buy for your computer can be classified as either hardware or software. Once you learn more about these items, computers are actually very straightforward. The first electronic computer, the Electronic Numerical Integrator and Computer (ENIAC), was developed in 1946. It took up 1,800 square feet and weighed 30 tons.

©1998-2013 Goodwill Community Foundation, Inc. All rights reserved.

What are the Different Types of Computers?

When most people hear the word "computer," they think of a personal computer such as a desktop or laptop computer. -

Zebra Workstation Connect Fact Sheet

FACT SHEET
WORKSTATION CONNECT

Turn Zebra mobile computers into mobile-driven workstations

Benefits
• Get more value out of your Zebra mobile devices
• Eliminate the need to purchase desktop computers without eliminating desktop computer functionality
• Simplify infrastructure, reducing management time and cost
• Give workers more functionality to better serve your customers and improve productivity

THE CHALLENGE: Sometimes mobile workers need a big-screen environment

Your workers depend on their Zebra mobile computers for the information access they need to perform everyday tasks. But when heavier data entry, navigation of information-dense applications, or simultaneous interaction with multiple applications is required, workers need the features of a desktop computer. Now, you can give them both with Zebra Workstation Connect.

THE SOLUTION: Turn your mobile computers into mobile-driven workstations

With this powerful value-add software solution, workers can easily turn your Zebra mobile computers into workstations, on demand. Applications on the mobile computer will appear on a large monitor, complete with a mouse, keyboard, headphones and any other peripherals required to enable the task at hand. Workers get a true workstation experience. Unlike screen mirroring, the application's user interface takes advantage of the additional monitor real estate, and users can work on different applications on the mobile device and desktop simultaneously, resize windows and open multiple apps in multiple windows. Plus, the ability to switch between mobile computer and mobile-driven workstation on the fly allows workers to choose the right mode for the moment.

How does it work?

Comprehensive data features
include the capture of a larger volume of barcodes, either on screen, printed on labels or printed in a catalog or on an inventory sheet.