Quantum Entropy and Its Applications to Quantum Communication and Statistical Physics

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Quantum Information, Entanglement and Entropy

QUANTUM INFORMATION, ENTANGLEMENT AND ENTROPY Siddharth Sharma

Abstract In this review I give an introduction to axiomatic Quantum Mechanics, Quantum Information and its measure, entropy. I also give an introduction to Entanglement and its measure, defined as the minimum of the relative quantum entropy of a density matrix of a Hilbert space with respect to all separable density matrices of the same Hilbert space. I also discuss geodesics in the space of density matrices, their orthogonality, and the Pythagorean theorem for density matrices.

Postulates of Quantum Mechanics The basic postulate of quantum mechanics is about the Hilbert space formalism:

• Postulate 0: Each quantum mechanical system has a complex Hilbert space ℍ associated to it. The set of all pure states is ℍ⁄∼ ≔ {⟦f⟧ | ⟦f⟧ ≔ {h : h ∼ f}}, where h ∼ f ⇔ ∃ z ∈ ℂ such that h = z f, and z is called the phase.
• Postulate 1: The physical states of a quantum mechanical system are described by statistical operators acting on the Hilbert space. A density matrix, or statistical operator, is a positive operator of trace 1 on the Hilbert space. Here an operator ρ is called positive if ⟨x, ρx⟩ ≥ 0 for all x ∈ ℍ, where ⟨⋅,⋅⟩ denotes the inner product.
• Postulate 2: The observables of a quantum mechanical system are described by self-adjoint operators acting on the Hilbert space. A self-adjoint operator A on a Hilbert space ℍ is a linear operator A: ℍ → ℍ which satisfies ⟨Ax, y⟩ = ⟨x, Ay⟩ for all x, y ∈ ℍ.

Lemma: The density matrices acting on a Hilbert space form a convex set whose extreme points are the pure states. Proof. Denote by Σ the set of density matrices.

1 Postulate (QM4): Quantum Measurements

Part IIC Lent term 2019-2020 QUANTUM INFORMATION & COMPUTATION Nilanjana Datta, DAMTP Cambridge 1 Postulate (QM4): Quantum measurements An isolated (closed) quantum system has a unitary evolution. However, when an experiment is done to find out the properties of the system, there is an interaction between the system and the experimentalists and their equipment (i.e., the external physical world). So the system is no longer closed and its evolution is not necessarily unitary. The following postulate provides a means of describing the effects of a measurement on a quantum-mechanical system. In classical physics the state of any given physical system can always in principle be fully determined by suitable measurements on a single copy of the system, while leaving the original state intact. In quantum theory the corresponding situation is bizarrely different: quantum measurements generally have only probabilistic outcomes, they are "invasive", generally unavoidably corrupting the input state, and they reveal only a rather small amount of information about the (now irrevocably corrupted) input state identity. Furthermore the (probabilistic) change of state in a quantum measurement is (unlike normal time evolution) not a unitary process. Here we outline the associated mathematical formalism, which is, at least, easy to apply. (QM4) Quantum measurements and the Born rule In Quantum Mechanics one measures an observable, i.e. a self-adjoint operator. Let A be an observable acting on the state space V of a quantum system. Since A is self-adjoint, its eigenvalues are real. Let its spectral decomposition be given by $A = \sum_n a_n P_n$, where $\{a_n\}$ denotes the set of eigenvalues of A and $P_n$ denotes the orthogonal projection onto the subspace of V spanned by eigenvectors of A corresponding to the eigenvalue $a_n$.
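As a small illustration of the formalism in this excerpt, the following sketch writes an observable as a sum of eigenvalues times orthogonal projections and computes outcome probabilities for a pure state. The excerpt is cut off before it states the Born rule, so the formula used here, probability of outcome n equal to ⟨ψ, P_n ψ⟩, is the standard textbook statement and not a quotation. The choice of observable (Pauli X on a qubit), the state, and all function names are assumptions made for the example.

```python
import math

# Assumed example: the Pauli X observable on a qubit, eigenvalues +1 and -1.
EIGENVALUES = [1.0, -1.0]
PROJECTORS = [
    [[0.5, 0.5], [0.5, 0.5]],      # projection onto the +1 eigenspace
    [[0.5, -0.5], [-0.5, 0.5]],    # projection onto the -1 eigenspace
]


def mat_vec(matrix, vector):
    """Multiply a real matrix (nested lists) by a real vector."""
    return [sum(row[j] * vector[j] for j in range(len(vector))) for row in matrix]


def inner(u, v):
    """Inner product of two real vectors."""
    return sum(a * b for a, b in zip(u, v))


def reconstruct_observable(eigenvalues, projectors):
    """Rebuild A as the sum over n of a_n * P_n."""
    dim = len(projectors[0])
    return [
        [sum(a * p[i][j] for a, p in zip(eigenvalues, projectors)) for j in range(dim)]
        for i in range(dim)
    ]


def outcome_probabilities(psi, projectors):
    """Standard Born rule for a normalised real state psi: p_n = <psi, P_n psi>."""
    return [inner(psi, mat_vec(p, psi)) for p in projectors]


theta = math.pi / 6                       # hypothetical state parameter
psi = [math.cos(theta), math.sin(theta)]  # normalised real qubit state

A = reconstruct_observable(EIGENVALUES, PROJECTORS)
probs = outcome_probabilities(psi, PROJECTORS)
expectation = sum(a * p for a, p in zip(EIGENVALUES, probs))

print("A =", A)
print("probabilities =", probs)
print("expectation =", expectation)
```

Real-valued vectors keep the sketch short; a complex state would need a conjugate in the inner product.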

Quantum Circuits with Mixed States Is Polynomially Equivalent in Computational Power to the Standard Unitary Model

Quantum Circuits with Mixed States Dorit Aharonov, Alexei Kitaev, Noam Nisan February 1, 2008 (arXiv:quant-ph/9806029v1, 8 Jun 1998) Abstract Current formal models for quantum computation deal only with unitary gates operating on "pure quantum states". In these models it is difficult or impossible to deal formally with several central issues: measurements in the middle of the computation; decoherence and noise; using probabilistic subroutines; and more. It turns out that the restriction to unitary gates and pure states is unnecessary. In this paper we generalize the formal model of quantum circuits to a model in which the state can be a general quantum state, namely a mixed state, or a "density matrix", and the gates can be general quantum operations, not necessarily unitary. The new model is shown to be equivalent in computational power to the standard one, and the problems mentioned above essentially disappear. The main result in this paper is a solution for the subroutine problem. The general function that a quantum circuit outputs is a probabilistic function. However, the question of using probabilistic functions as subroutines was not previously dealt with, the reason being that in the language of pure states, this simply cannot be done. We define a natural notion of using general subroutines, and show that using general subroutines does not strengthen the model. As an example of the advantages of analyzing quantum complexity using density matrices, we prove a simple lower bound on depth of circuits that compute probabilistic functions. Finally, we deal with the question of inaccurate quantum computation with mixed states.

Pilot Quantum Error Correction for Global

Pilot Quantum Error Correction for Global-Scale Quantum Communications Laszlo Gyongyosi (1, 2), Member, IEEE, Sandor Imre (1), Member, IEEE. (1) Quantum Technologies Laboratory, Department of Telecommunications, Budapest University of Technology and Economics, 2 Magyar tudosok krt, H-1111, Budapest, Hungary. (2) Information Systems Research Group, Mathematics and Natural Sciences, Hungarian Academy of Sciences, H-1518, Budapest, Hungary. Real global-scale quantum communications and quantum key distribution systems cannot be implemented by the current fiber and free-space links. These links have high attenuation, low polarization-preserving capability or extreme sensitivity to the environment. A potential solution to the problem is the space-earth quantum channels. These channels have no absorption since the signal states are propagated in empty space; however, a small fraction of these channels is in the atmosphere, which causes a slight depolarizing effect. Furthermore, the relative motion of the ground station and the satellite causes a rotation in the polarization of the quantum states. In the current approaches to compensate for these types of polarization errors, high computational costs and extra physical apparatuses are required. Here we introduce a novel approach which breaks with the traditional views of currently developed quantum-error correction schemes. The proposed solution can be applied to fix the polarization errors which are critical in space-earth quantum communication systems. The channel coding scheme provides capacity-achieving communication over slightly depolarizing space-earth channels. I. Introduction Quantum error-correction schemes use different techniques to correct the various possible errors which occur in a quantum channel. In the first decade of the 21st century, many revolutionary properties of quantum channels were discovered [12-16], [19-22]; however, efficient error-correction in quantum systems is still a challenge.

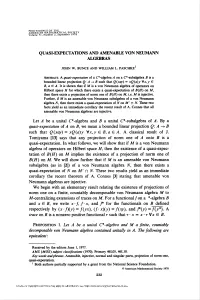

Quasi-Expectations and Amenable Von Neumann

PROCEEDINGS OF THE AMERICAN MATHEMATICAL SOCIETY Volume 71, Number 2, September 1978 QUASI-EXPECTATIONS AND AMENABLE VON NEUMANN ALGEBRAS JOHN W. BUNCE AND WILLIAM L. PASCHKE Abstract. A quasi-expectation of a C*-algebra A on a C*-subalgebra B is a bounded linear projection $Q: A \to B$ such that $Q(xay) = xQ(a)y$ for all $x, y \in B$, $a \in A$. It is shown that if M is a von Neumann algebra of operators on Hilbert space H for which there exists a quasi-expectation of B(H) on M, then there exists a projection of norm one of B(H) on M, i.e. M is injective. Further, if M is an amenable von Neumann subalgebra of a von Neumann algebra N, then there exists a quasi-expectation of N on $M' \cap N$. These two facts yield as an immediate corollary the recent result of A. Connes that all amenable von Neumann algebras are injective. Let A be a unital C*-algebra and B a unital C*-subalgebra of A. By a quasi-expectation of A on B, we mean a bounded linear projection $Q: A \to B$ such that $Q(xay) = xQ(a)y$ for all $x, y \in B$, $a \in A$. A classical result of J. Tomiyama [13] says that any projection of norm one of A onto B is a quasi-expectation. In what follows, we will show that if M is a von Neumann algebra of operators on Hilbert space H, then the existence of a quasi-expectation of B(H) on M implies the existence of a projection of norm one of B(H) on M.

Perturbative Algebraic Quantum Field Theory at Finite Temperature

Perturbative Algebraic Quantum Field Theory at Finite Temperature. Dissertation for the attainment of the doctoral degree of the Department of Physics (Fachbereich Physik) of the Universität Hamburg, submitted by Falk Lindner, from Zittau. Hamburg 2013. Reviewers of the dissertation: Prof. Dr. K. Fredenhagen, Prof. Dr. D. Bahns. Reviewers of the defence: Prof. Dr. K. Fredenhagen, Prof. Dr. J. Louis. Date of the defence: 01. 07. 2013. Chair of the examination committee: Prof. Dr. C. Hagner. Chair of the doctoral committee: Prof. Dr. P. Hauschildt. Dean of the Faculty of Mathematics, Informatics and Natural Sciences: Prof. Dr. H. Graener.

Summary (translated from the German Zusammenfassung) The algebraic approach to perturbative quantum field theory in Minkowski spacetime is presented, with an emphasis on the inherent state independence of the formalism. Furthermore, the state space of perturbative QFT is investigated in detail. The dynamics of interacting theories is constructed by a new method that systematically exploits the validity of the time-slice axiom in causal perturbation theory. This sheds light on a previously unknown connection between the statistical approach to quantum mechanics and perturbative quantum field theory. The methods developed are used for the explicit construction of KMS and vacuum states of the interacting, massive Klein-Gordon field, thereby ruling out possible infrared divergences of the theory, in particular of the interacting Wightman functions and time-ordered functions of the interacting field.

Abstract We present the algebraic approach to perturbative quantum field theory for the real scalar field in Minkowski spacetime. In this work we put a special emphasis on the inherent state-independence of the framework and provide a detailed analysis of the state space. The dynamics of the interacting system is constructed in a novel way by virtue of the time-slice axiom in causal perturbation theory.

A Mini-Introduction to Information Theory

A Mini-Introduction To Information Theory Edward Witten School of Natural Sciences, Institute for Advanced Study, Einstein Drive, Princeton, NJ 08540 USA (arXiv:1805.11965v5 [hep-th], 4 Oct 2019) Abstract This article consists of a very short introduction to classical and quantum information theory. Basic properties of the classical Shannon entropy and the quantum von Neumann entropy are described, along with related concepts such as classical and quantum relative entropy, conditional entropy, and mutual information. A few more detailed topics are considered in the quantum case.

Contents
1 Introduction
2 Classical Information Theory
2.1 Shannon Entropy
2.2 Conditional Entropy
2.3 Relative Entropy
2.4 Monotonicity of Relative Entropy
3 Quantum Information Theory: Basic Ingredients
3.1 Density Matrices
3.2 Quantum Entropy
3.3 Concavity
3.4 Conditional and Relative Quantum Entropy
3.5 Monotonicity of Relative Entropy
3.6 Generalized Measurements
3.7 Quantum Channels
3.8 Thermodynamics And Quantum Channels
4 More On Quantum Information Theory
4.1 Quantum Teleportation and Conditional Entropy
4.2 Quantum Relative Entropy And Hypothesis Testing
4.3 Encoding

Von Neumann Algebras Form a Model for the Quantum Lambda Calculus

Von Neumann Algebras form a Model for the Quantum Lambda Calculus Kenta Cho and Abraham Westerbaan Institute for Computing and Information Sciences, Radboud University, Nijmegen, the Netherlands {K.Cho,awesterb}@cs.ru.nl Abstract We present a model of Selinger and Valiron's quantum lambda calculus based on von Neumann algebras, and show that the model is adequate with respect to the operational semantics. 1998 ACM Subject Classification F.3.2 Semantics of Programming Language Keywords and phrases quantum lambda calculus, von Neumann algebras 1 Introduction In 1925, Heisenberg realised, pondering upon the problem of the spectral lines of the hydrogen atom, that a physical quantity such as the x-position of an electron orbiting a proton is best described not by a real number but by an infinite array of complex numbers [12]. Soon afterwards, Born and Jordan noted that these arrays should be multiplied as matrices are [3]. Of course, multiplying such infinite matrices may lead to mathematically dubious situations, which spurred von Neumann to replace the infinite matrices by operators on a Hilbert space [44]. He organised these into rings of operators [25], now called von Neumann algebras, and thereby set off an explosion of research (also into related structures such as Jordan algebras [13], orthomodular lattices [2], C*-algebras [34], AW*-algebras [17], order unit spaces [14], Hilbert C*-modules [28], operator spaces [31], effect algebras [8], ...), which continues even to this day. One current line of research (with old roots [6, 7, 9, 19]) is the study of von Neumann algebras from a categorical perspective (see e.g.

Chapter 6 Quantum Entropy

Chapter 6 Quantum entropy There is a notion of entropy which quantifies the amount of uncertainty contained in an ensemble of Qbits. This is the von Neumann entropy that we introduce in this chapter. In some respects it behaves just like Shannon's entropy but in some others it is very different and strange. As an illustration let us immediately say that as in the classical theory, conditioning reduces entropy; but in sharp contrast with classical theory the entropy of a quantum system can be lower than the entropy of its parts. The von Neumann entropy was first introduced in the realm of quantum statistical mechanics, but we will see in later chapters that it enters naturally in various theorems of quantum information theory.

6.1 Main properties of Shannon entropy Let X be a random variable taking values x in some alphabet with probabilities $p_x = \mathrm{Prob}(X = x)$. The Shannon entropy of X is

$$H(X) = \sum_x p_x \ln \frac{1}{p_x}$$

and quantifies the average uncertainty about X. The joint entropy of two random variables X, Y is similarly defined as

$$H(X, Y) = \sum_{x,y} p_{x,y} \ln \frac{1}{p_{x,y}}$$

and the conditional entropy

$$H(X|Y) = \sum_y p_y \sum_x p_{x|y} \ln \frac{1}{p_{x|y}}$$

where

$$p_{x|y} = \frac{p_{x,y}}{p_y}$$

The conditional entropy is the average uncertainty of X given that we observe Y = y. It is easily seen that

$$H(X|Y) = H(X, Y) - H(Y)$$

The formula is consistent with the interpretation of H(X|Y): when we observe Y the uncertainty H(X, Y) is reduced by the amount H(Y).
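The relation between conditional, joint and marginal Shannon entropy described in this excerpt can be checked numerically. The sketch below is only an illustration: the joint distribution `JOINT` and all function names are made up for the example, and natural logarithms are used as in the excerpt.

```python
import math

# Hypothetical joint distribution p_{x,y} over X in {0, 1} and Y in {0, 1}.
JOINT = {(0, 0): 0.5, (0, 1): 0.25, (1, 0): 0.125, (1, 1): 0.125}


def entropy(probabilities):
    """Shannon entropy: sum of p * ln(1/p), skipping zero-probability terms."""
    return sum(p * math.log(1.0 / p) for p in probabilities if p > 0)


def marginal_y(joint):
    """Marginal distribution p_y obtained by summing p_{x,y} over x."""
    marginal = {}
    for (_, y), p in joint.items():
        marginal[y] = marginal.get(y, 0.0) + p
    return marginal


def conditional_entropy(joint):
    """H(X|Y) computed directly as sum_y p_y sum_x p_{x|y} ln(1/p_{x|y})."""
    p_y = marginal_y(joint)
    total = 0.0
    for (_, y), p_xy in joint.items():
        if p_xy > 0:
            p_x_given_y = p_xy / p_y[y]
            total += p_y[y] * p_x_given_y * math.log(1.0 / p_x_given_y)
    return total


h_xy = entropy(JOINT.values())            # joint entropy H(X, Y)
h_y = entropy(marginal_y(JOINT).values()) # marginal entropy H(Y)
h_x_given_y = conditional_entropy(JOINT)  # conditional entropy H(X|Y)

print("H(X,Y) - H(Y) =", h_xy - h_y)
print("H(X|Y)        =", h_x_given_y)
```

The two printed values should agree up to floating-point rounding, which is the identity H(X|Y) = H(X, Y) − H(Y) for this particular distribution.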

Quantum Thermodynamics at Strong Coupling: Operator Thermodynamic Functions and Relations

Article Quantum Thermodynamics at Strong Coupling: Operator Thermodynamic Functions and Relations Jen-Tsung Hsiang 1,*,† and Bei-Lok Hu 2,† 1 Center for Field Theory and Particle Physics, Department of Physics, Fudan University, Shanghai 200433, China 2 Maryland Center for Fundamental Physics and Joint Quantum Institute, University of Maryland, College Park, MD 20742-4111, USA * Correspondence: Tel.: +86-21-3124-3754 † These authors contributed equally to this work. Received: 26 April 2018; Accepted: 30 May 2018; Published: 31 May 2018 Abstract: Identifying or constructing a fine-grained microscopic theory that will emerge under specific conditions to a known macroscopic theory is always a formidable challenge. Thermodynamics is perhaps one of the most powerful theories and best understood examples of emergence in physical sciences, which can be used for understanding the characteristics and mechanisms of emergent processes, both in terms of emergent structures and the emergent laws governing the effective or collective variables. Viewing quantum mechanics as an emergent theory requires a better understanding of all this. In this work we aim at a very modest goal, not quantum mechanics as thermodynamics, not yet, but the thermodynamics of quantum systems, or quantum thermodynamics. We will show why even with this minimal demand, there are many new issues which need be addressed and new rules formulated. The thermodynamics of small quantum many-body systems strongly coupled to a heat bath at low temperatures with non-Markovian behavior contains elements, such as quantum coherence, correlations, entanglement and fluctuations, that are not well recognized in traditional thermodynamics, built on large systems vanishingly weakly coupled to a non-dynamical reservoir.

Some Trace Inequalities for Exponential and Logarithmic Functions

Bull. Math. Sci. https://doi.org/10.1007/s13373-018-0123-3 Some trace inequalities for exponential and logarithmic functions Eric A. Carlen · Elliott H. Lieb Received: 8 October 2017 / Revised: 17 April 2018 / Accepted: 24 April 2018 © The Author(s) 2018 Abstract Consider a function $F(X, Y)$ of pairs of positive matrices with values in the positive matrices such that whenever X and Y commute $F(X, Y) = X^p Y^q$. Our first main result gives conditions on F such that $\mathrm{Tr}[X \log(F(Z, Y))] \le \mathrm{Tr}[X(p \log X + q \log Y)]$ for all X, Y, Z such that $\mathrm{Tr}\,Z = \mathrm{Tr}\,X$. (Note that Z is absent from the right side of the inequality.) We give several examples of functions F to which the theorem applies. Our theorem allows us to give simple proofs of the well known logarithmic inequalities of Hiai and Petz and several new generalizations of them which involve three variables X, Y, Z instead of just X, Y alone. The investigation of these logarithmic inequalities is closely connected with three quantum relative entropy functionals: The standard Umegaki quantum relative entropy $D(X\|Y) = \mathrm{Tr}[X(\log X - \log Y)]$, and two others, the Donald relative entropy $D_D(X\|Y)$, and the Belavkin–Stasewski relative entropy $D_{BS}(X\|Y)$. They are known to satisfy $D_D(X\|Y) \le D(X\|Y) \le D_{BS}(X\|Y)$. We prove that the Donald relative entropy provides the sharp upper bound, independent of Z, on $\mathrm{Tr}[X \log(F(Z, Y))]$ in a number of cases in which $F(Z, Y)$ is homogeneous of degree 1 in Z and −1 in Y.

Lecture Notes on Spontaneous Symmetry Breaking and the Higgs Mechanism

Lecture Notes on Spontaneous Symmetry Breaking and the Higgs mechanism Draft: June 19, 2012 N.P. Landsman Institute for Mathematics, Astrophysics, and Particle Physics, Radboud University Nijmegen, Heyendaalseweg 135, 6525 AJ NIJMEGEN, THE NETHERLANDS website: http://www.math.ru.nl/~landsman/SSB.html tel.: 024-3652874 office: HG03.740 "C'est la dissymétrie qui crée le phénomène" ("It is dissymmetry that creates the phenomenon"; Pierre Curie, 1894)

1 Introduction Spontaneous Symmetry Breaking or SSB is the phenomenon in which an equation (or system of equations) possesses a symmetry that is not shared by some 'preferred' solution. For example, $x^2 = 1$ has a symmetry $x \mapsto -x$, but both solutions $x = \pm 1$ 'break' this symmetry. However, the symmetry acts on the solution space $\{1, -1\}$ in the obvious way, mapping one asymmetric solution into another. In physics, the equations in question are typically derived from a Lagrangian L or Hamiltonian H, and instead of looking at the symmetries of the equations of motion one may look at the symmetries of L or H. Furthermore, rather than looking at the solutions, one focuses on the initial conditions, especially in the Hamiltonian formalism. These initial conditions are states. Finally, in the context of SSB one is typically interested in two kinds of 'preferred' solutions: ground states and thermal equilibrium states (both of which are time-independent by definition). Thus we may (initially) say that SSB occurs when some Hamiltonian has a symmetry that is not shared by its ground state(s) and/or thermal equilibrium states. The archetypical example of SSB in classical mechanics is the potential

$$V(q) = -\tfrac{1}{2}\omega^2 q^2 + \tfrac{1}{4}\lambda^2 q^4, \qquad (1.1)$$

often called the double-well potential (we assume that $\omega$ and $\lambda$ are real).
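The double-well potential (1.1) gives a concrete picture of the symmetry breaking described in this excerpt: V is symmetric under q ↦ −q, yet its minima sit at two nonzero points that the symmetry exchanges. The sketch below checks this numerically. The parameter values and function names are assumptions chosen for the example, and the location of the minima, q = ±ω/λ, comes from setting V'(q) = −ω²q + λ²q³ to zero, a step the excerpt does not spell out.

```python
# Assumed illustrative parameter values; any nonzero real omega and lam would do.
OMEGA = 1.5
LAM = 0.75


def potential(q, omega=OMEGA, lam=LAM):
    """Double-well potential V(q) = -(1/2) omega^2 q^2 + (1/4) lam^2 q^4."""
    return -0.5 * omega**2 * q**2 + 0.25 * lam**2 * q**4


def potential_derivative(q, omega=OMEGA, lam=LAM):
    """Derivative V'(q) = -omega^2 q + lam^2 q^3."""
    return -(omega**2) * q + lam**2 * q**3


# Stationary points: q = 0 (a local maximum) and q = +/- omega/lam (the two minima).
q_min = OMEGA / LAM

print("V(+q_min) =", potential(q_min))
print("V(-q_min) =", potential(-q_min))
print("V(0)      =", potential(0.0))
print("V'(q_min) =", potential_derivative(q_min))
```

The potential takes the same value at +q_min and −q_min, both below V(0) = 0, so neither minimum is invariant under q ↦ −q while the pair of minima is.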