Mixed Reality: a Survey

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

Use of Bioinformatics Resources and Tools by Users of Bioinformatics Centers in India

University of Nebraska - Lincoln, DigitalCommons@University of Nebraska - Lincoln. Library Philosophy and Practice (e-journal), Libraries at University of Nebraska-Lincoln, 2015. Use of Bioinformatics Resources and Tools by Users of Bioinformatics Centers in India. Meera Yadav, University of Delhi; Manlunching Tawmbing, Saha Institute of Nuclear Physics. Follow this and additional works at: http://digitalcommons.unl.edu/libphilprac Part of the Library and Information Science Commons. Yadav, Meera and Tawmbing, Manlunching, "Use of Bioinformatics Resources and Tools by Users of Bioinformatics Centers in India" (2015). Library Philosophy and Practice (e-journal). 1254. http://digitalcommons.unl.edu/libphilprac/1254 Dr Meera, Manlunching, Department of Library and Information Science, University of Delhi, India. Abstract: Information plays a vital role in Bioinformatics to achieve the existing Bioinformatics information technologies. Librarians have to identify the information needs, uses and problems faced to meet the needs and requirements of the Bioinformatics users. The paper analyzes the responses of 315 Bioinformatics users of 15 Bioinformatics centers in India. It analyzes the data with respect to the use of different Bioinformatics databases and tools by scholars and scientists, areas of major research in Bioinformatics, major research projects, thrust areas of research, and use of different resources by the users. The study identifies the various Bioinformatics services and resources used by Bioinformatics researchers. Keywords: Information services, Users, Information needs, Bioinformatics resources. 1. Introduction: 'Needs' refer to lack of self-sufficiency and also represent gaps in the present knowledge of the users.

Establishing the Basis for a CIS (Computer Information Systems) Undergraduate Program: on Seeking the Body of Knowledge

Information Systems Education Journal (ISEDJ) 13 (5) ISSN: 1545-679X September 2015. Establishing the Basis for a CIS (Computer Information Systems) Undergraduate Program: On Seeking the Body of Knowledge. Herbert E. Longenecker, Jr., University of South Alabama, Mobile, AL 36688, USA; Jeffry Babb, West Texas A&M University, Canyon, TX 79016, USA; Leslie J. Waguespack, Bentley University, Waltham, Massachusetts 02452, USA; Thomas N. Janicki, University of North Carolina Wilmington, Wilmington, NC 28403, USA; David Feinstein, University of South Alabama, Mobile, AL 36688, USA. Abstract: The evolution of computing education spans a spectrum from computer science (CS) grounded in the theory of computing, to information systems (IS), grounded in the organizational application of data processing. This paper reports on a project focusing on a particular slice of that spectrum commonly labeled as computer information systems (CIS) and reflected in undergraduate academic programs designed to prepare graduates for professions as software developers building systems in government, commercial and not-for-profit enterprises. These programs with varying titles number in the hundreds. This project is an effort to determine if a common knowledge footprint characterizes CIS. If so, an eventual goal would be to describe the proportions of those essential knowledge components and propose guidelines specifically for effective undergraduate CIS curricula. Professional computing societies (ACM, IEEE, AITP (formerly DPMA), etc.) over the past fifty years have sponsored curriculum guidelines for various slices of education that in aggregate offer a compendium of knowledge areas in computing. ©2015 EDSIG (Education Special Interest Group of the AITP), www.aitp-edsig.org / www.isedj.org

The Influence of Mixed Reality on Satisfaction and Brand Loyalty: a Brand Equity Perspective

Preprints (www.preprints.org) | NOT PEER-REVIEWED | Posted: 31 January 2020 doi:10.20944/preprints202001.0384.v1 Peer-reviewed version available at Sustainability 2020, 12, 2956; doi:10.3390/su12072956. The Influence of Mixed Reality on Satisfaction and Brand Loyalty: A Brand Equity Perspective. Sujin Bae, Ph.D. Candidate, School of Management, Kyung Hee University, Kyung Hee Dae Ro 26, Dong Dae Mun Gu, Seoul 02447, Korea, Tel: +82 2 961 9491; Timothy Hyungsoo Jung, Ph.D., Director of Creative AR & VR Hub, Faculty of Business and Law, Manchester Metropolitan University, Righton Building, Cavendish Street, Manchester M15 6BG, UK, Tel: +44 161-247-2701; Natasha Moorhouse, Ph.D., Research Associate, Faculty of Business and Law, Manchester Metropolitan University, All Saints Building, All Saints, Manchester M15 6BH, UK; Minjeong Suh, Ph.D., Adjunct Professor, Graduate School of International Tourism, Hanyang University, 222, Wangsimni-ro, Seongdong-gu, Seoul 04763, Korea, Tel: +82 (0)10 5444 4706; Ohbyung Kwon,* Ph.D., School of Management, Kyung Hee University, Kyung Hee Dae Ro 26, Dong Dae Mun Gu, Seoul 02447, Korea, Tel: +82 2 961 2148. * Corresponding author. © 2020 by the author(s). Distributed under a Creative Commons CC BY license. Abstract: Mixed reality technology is being increasingly used in cultural heritage attractions to enhance visitors' experience.

Introduction to Minitrack: Mixed, Augmented and Virtual Reality: Co-Designed Services and Applications

Proceedings of the 52nd Hawaii International Conference on System Sciences | 2019. Introduction to Minitrack: Mixed, Augmented and Virtual Reality: Co-designed Services and Applications. Petri Parvinen (Minitrack Chair), University of Helsinki; Juho Hamari, University of Tampere; Essi Pöyry, University of Helsinki. Virtual reality (VR) refers to computer technologies that use software to generate the realistic images, sounds and other sensations that represent an immersive environment and simulate a user's physical presence in this environment [10]. Mixed reality (MR) refers to combining real and virtual contents with the aid of digital devices [3]. Mixed reality is seen to consist of both augmented reality (i.e., virtual 3D objects in immersive reality), and augmented virtuality (i.e., captured features of reality in immersive virtual 3D environments) [7]. All these technologies have recently peaked in terms of media attention as they are expected to disturb existing markets like PCs and smartphones did when they were introduced to the markets. [...] safety, privacy and confidentiality and immersive experiences [1, 2, 4, 5, 8, 12]. A co-created envisioning of an immersive experience also elevates institutions of agreement, commonly coined as a feeling of win-win. From this point of view, the major challenge for both VR and AR technologies is to convince users that the added value is high enough to compete with the current systems and offerings in desktops, notebooks, tablets, smartphones and related video and game-like applications. The minitrack encouraged submissions from both cutting edge technology and practical applications. The minitrack showcases research on: · Realistic virtual humans in immersive

Virtual and Mixed Prototyping Techniques and Technologies for Consumer Product Design Within a Blended Learning Design Environment

INTERNATIONAL DESIGN CONFERENCE - DESIGN 2018 https://doi.org/10.21278/idc.2018.0428 VIRTUAL AND MIXED PROTOTYPING TECHNIQUES AND TECHNOLOGIES FOR CONSUMER PRODUCT DESIGN WITHIN A BLENDED LEARNING DESIGN ENVIRONMENT. M. Bordegoni, F. Ferrise, R. Wendrich and S. Barone. Abstract: Both physical and virtual prototyping are core elements of the design and engineering process. In this paper, we present an industrial case-study in conjunction with a collaborative agile design engineering process and "methodology." Four groups of heterogeneous Post-doc and Ph.D. students from various domains were assembled and instructed to fulfill a multi-disciplinary design task based on a real-world industry use-case. We present findings, evaluation, and results of this study. Keywords: virtual prototyping, virtual reality (VR), augmented reality (AR), engineering design, collaborative design. 1. Introduction: Virtual and mixed prototyping within a collaborative blended learning environment is considered an enabling design support technique that allows designers to gain first-hand appreciation of existing or near-future conditions through active engagement with a wide variety in prototyping techniques and prototypes. The product design process (PDP) follows a dual approach and method based on a combination of a blended learning environment (Boelens et al., 2017) and interactive affective experience prototyping (Buchenau and Suri, 2000). The virtual prototyping summer school (VRPT-SS) facilitates this combinatorial collaborative setting to immerse learners in the externalization and representation of their ideas quickly, exploring and communicating their ideas with others. During the course of the week a series of evaluations on the prototyping progressions are made. The spectrum of virtual and mixed prototyping is quite extensive and has multiple definitions and meanings according to literature (see Wang, 2002, and Zorriassatine et al., 2003).

Growth of Immersive Media - a Reality Check

Growth of Immersive Media - A Reality Check, 2019. Table of Contents: 1. Report Introduction & Methodology; 2. Executive Summary; 3. Overview & Evolution; 4. Immersive Media Ecosystem; 5. Global Market Analysis; 6. Applications & Use Cases; 7. Emerging Technology Innovations; 8. The India Story; 9. Recommendations; Profiles of Key Players; Appendix – Additional Case Studies; Acknowledgements; Glossary of Terms; About Nasscom; Disclaimer. 1. Report Introduction & Methodology. Background, Scope and Objective • This report aims to provide an assessment of the current market for Immersive Media both globally and in India. • This report provides a summary view of the following with respect to Immersive Media: Brief Introduction & its Evolution, Ecosystem Players, Market Size and Growth Estimates, Key Applications and Use Cases, Analysis of the Indian Market, Key demand drivers, Factors limiting growth, along with the key recommendations that may propel the growth of the Immersive Media market in India. Details of Research Tools and Methodology • We conducted Primary and Secondary Research for our market analysis. Secondary research formed the initial phase of our study, where we conducted data mining, referring to verified data sources such as independent studies, technical journals, trade magazines, and paid data sources. • As part of the Primary Research, we performed in-depth interviews with stakeholders from throughout the Immersive Media ecosystem to gain insights on market trends, demand & supply side drivers, regulatory scenarios, major technological trends, opportunities & challenges for future state analysis.

LightDB: a DBMS for Virtual Reality Video

LightDB: A DBMS for Virtual Reality Video. Brandon Haynes, Amrita Mazumdar, Armin Alaghi, Magdalena Balazinska, Luis Ceze, Alvin Cheung. Paul G. Allen School of Computer Science & Engineering, University of Washington, Seattle, Washington, USA. {bhaynes, amrita, armin, magda, luisceze, akcheung}@cs.washington.edu http://lightdb.uwdb.io ABSTRACT: We present the data model, architecture, and evaluation of LightDB, a database management system designed to efficiently manage virtual, augmented, and mixed reality (VAMR) video content. VAMR video differs from its two-dimensional counterpart in that it is spherical with periodic angular dimensions, is nonuniformly and continuously sampled, and applications that consume such videos often have demanding latency and throughput requirements. To address these challenges, LightDB treats VAMR video data as a logically-continuous six-dimensional light field. Furthermore, LightDB supports a rich set of operations over light fields, and automatically transforms declarative queries into executable physical plans. [...] spherical panoramic VR videos (a.k.a. 360° videos), encoding one stereoscopic frame of video can involve processing up to 18× more bytes than an ordinary 2D video [30]. AR and MR video applications, on the other hand, often mix smaller amounts of synthetic video with the world around a user. Similar to VR, however, these applications have extremely demanding latency and throughput requirements since they must react to the real world in real time. To address these challenges, various specialized VAMR systems have been introduced for preparing and serving VAMR video data (e.g., VRView [71], Facebook Surround 360 [20], YouTube VR [75], Google Poly [25], Lytro VR [41], Magic Leap Cre-

Information Systems Foundations: Theory, Representation and Reality

Information Systems Foundations: Theory, Representation and Reality. Dennis N. Hart and Shirley D. Gregor (Editors). Workshop Chair: Shirley D. Gregor (ANU). Program Chairs: Dennis N. Hart (ANU), Shirley D. Gregor (ANU). Program Committee: Bob Colomb (University of Queensland), Walter Fernandez (ANU), Steven Fraser (ANU), Sigi Goode (ANU), Peter Green (University of Queensland), Robert Johnston (University of Melbourne), Sumit Lodhia (ANU), Mike Metcalfe (University of South Australia), Graham Pervan (Curtin University of Technology), Michael Rosemann (Queensland University of Technology), Graeme Shanks (University of Melbourne), Tim Turner (Australian Defence Force Academy), Leoni Warne (Defence Science and Technology Organisation), David Wilson (University of Technology, Sydney). Published by ANU E Press, The Australian National University, Canberra ACT 0200, Australia. This title is also available online at: http://epress.anu.edu.au/info_systems02_citation.html National Library of Australia Cataloguing-in-Publication entry: Information systems foundations : theory, representation and reality. Bibliography. ISBN 9781921313134 (pbk.) ISBN 9781921313141 (online). 1. Management information systems–Congresses. 2. Information resources management–Congresses. 658.4038. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, mechanical, photocopying or otherwise, without the prior permission of the publisher.
Cover design by Brendon McKinley with logo by Michael Gregor. Authors' photographs on back cover: ANU Photography. Printed by University Printing Services, ANU. This edition © 2007 ANU E Press. Table of Contents: Preface; The Papers; Theory: Designing for Mutability in Information Systems Artifacts, Shirley Gregor and Juhani Iivari; The Effect of the Application Domain in IS Problem Solving: A Theoretical Analysis, Iris Vessey; Towards a Unified Theory of Fit: Task, Technology and Individual, Michael J.

Information System Owner

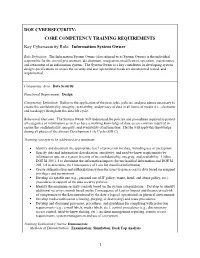

DOE CYBERSECURITY: CORE COMPETENCY TRAINING REQUIREMENTS
Key Cybersecurity Role: Information System Owner
Role Definition: The Information System Owner (also referred to as System Owner) is the individual responsible for the overall procurement, development, integration, modification, operation, maintenance, and retirement of an information system. The System Owner is a key contributor in developing system design specifications to ensure the security and user operational needs are documented, tested, and implemented.
Competency Area: Data Security
Functional Requirement: Design
Competency Definition: Refers to the application of the principles, policies, and procedures necessary to ensure the confidentiality, integrity, availability, and privacy of data in all forms of media (i.e., electronic and hardcopy) throughout the data life cycle.
Behavioral Outcome: The System Owner will understand the policies and procedures required to protect all categories of information as well as have a working knowledge of data access controls required to ensure the confidentiality, integrity, and availability of information. He/she will apply this knowledge during all phases of the System Development Life Cycle (SDLC).
Training concepts to be addressed at a minimum:
• Identify and document the appropriate level of protection for data, including use of encryption.
• Specify data and information classification, sensitivity, and need-to-know requirements by information type on a system in terms of its confidentiality, integrity, and availability. Utilize DOE M 205.1-5 to determine the information impacts for unclassified information and DOE M 205.1-4 to determine the Consequence of Loss for classified information.
• Create authentication and authorization system for users to gain access to data based on assigned privileges and permissions.

Assessing the Quantitative and Qualitative Effects of Using Mixed

23rd ICCRTS Info. Central, Pensacola, Florida, 6 - 9 November 2018. Assessing the quantitative and qualitative effects of using mixed reality for operational decision making. Mark Dennison (1), Jerald Thomas (2), Theron Trout (1, 3) and Evan Suma Rosenberg (2). (1) U.S. Army Research Laboratory West, Playa Vista, CA; (2) University of Minnesota, Minneapolis, MN; (3) Stormfish Scientific, Chevy Chase, MD. Abstract: The emergence of next generation VR and AR devices like the Oculus Rift and Microsoft HoloLens has increased interest in using mixed reality (MR) for simulated training, enhancing command and control, and augmenting operator effectiveness at the tactical edge. It is thought that virtualizing mission relevant battlefield data, such as satellite imagery or body-worn sensor information, will allow commanders and analysts to retrieve, collaborate, and make decisions about such information more effectively than traditional methods, which may have cognitive and spatial constraints. However, there is currently little evidence in the scientific literature that using modern MR equipment provides any qualitative benefits or quantitative benefits, such as increased task engagement or improved decision accuracy. [...]parate systems (physical objects, computers, paper documents, etc.) that require a significant amount of resources and effort to bring into a unified space. Human interactions require shared cognitive models where interaction with systems must support and maintain this shared representation, stored information must persist the representation at a fundamental data-level, and the underlying network must allow these data and information to flow without hindrance between human collaborators and non-human agents (1; 2). The emergence of Mixed Reality (MR) technologies has provided the potential for new methods for the Warfighter to access, consume, and interact with battlefield information. MR may serve as a unified platform for data ingestion, analysis, collaboration, and execution and also has the benefit of being cus-

Information System Development Using Augmented Reality Tools*

Information system development using augmented reality tools*. Grigorev Rostislav Aleksandrovich, Kazan Federal University, Kazan, Russia; Valijanov Bahrom Adhamdzon-ugli, Kazan Federal University, Kazan, Russia; Medvedeva Olga Anatolievna, Kazan Federal University, Kazan, Russia; Mustafina Svetlana Anatol'evna, Bashkir State University, Ufa, Russia. Abstract: This work is devoted to the issues of visualization and information processing, in particular, to improving the visualization of 3D objects using augmented reality technology. The concept of augmented reality offers a more advanced user interface for visualization due to a combination of natural ways to control the change of angle of an object and visualization in a real context. In the process of performing the work, computer graphics, algorithms, and modeling methods were used. The experimental part of the work was carried out using the Vuforia tracking toolkit and Unity development tools. Introduction: Augmented reality (AR) is an interactive experience of a real-world environment where the objects that reside in the real world are enhanced by computer-generated perceptual information, sometimes across multiple sensory modalities, including visual, auditory, haptic, somatosensory and olfactory. AR can be defined as a system that fulfills three basic features: a combination of real and virtual worlds, real-time interaction, and accurate 3D registration of virtual and real objects. The overlaid sensory information can be constructive (i.e. additive to the natural environment), or destructive (i.e. masking of the natural environment). This experience is seamlessly interwoven with the physical world such that it is perceived as an immersive aspect of the real environment.

Security and Privacy Approaches in Mixed Reality: A Literature Survey

Security and Privacy Approaches in Mixed Reality: A Literature Survey. JAYBIE A. DE GUZMAN, University of New South Wales and Data 61, CSIRO; KANCHANA THILAKARATHNA, University of Sydney and Data 61, CSIRO; ARUNA SENEVIRATNE, University of New South Wales and Data 61, CSIRO. Mixed reality (MR) technology development is now gaining momentum due to advances in computer vision, sensor fusion, and realistic display technologies. With most of the research and development focused on delivering the promise of MR, there is only barely a few working on the privacy and security implications of this technology. This survey paper aims to put in to light these risks, and to look into the latest security and privacy work on MR. Specifically, we list and review the different protection approaches that have been proposed to ensure user and data security and privacy in MR. We extend the scope to include work on related technologies such as augmented reality (AR), virtual reality (VR), and human-computer interaction (HCI) as crucial components, if not the origins, of MR, as well as numerous related work from the larger area of mobile devices, wearables, and Internet-of-Things (IoT). We highlight the lack of investigation, implementation, and evaluation of data protection approaches in MR. Further challenges and directions on MR security and privacy are also discussed. CCS Concepts: • Human-centered computing → Mixed / augmented reality; • Security and privacy → Privacy protections; Usability in security and privacy; • General and reference → Surveys and overviews. Additional Key Words and Phrases: Mixed Reality, Augmented Reality, Privacy, Security. 1 INTRODUCTION: Mixed reality (MR) was used to pertain to the various devices – specifically, displays – that encompass the reality-virtuality continuum as seen in Figure 1 (Milgram et al.