A Five-Year Plan for Automatic Chess

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

White Knight Review Chess E-Magazine January/February - 2012 Table of Contents

White Knight Review Chess E-Magazine. Interactive E-Magazine, Volume 3, Issue 1, January/February 2012. Seraphim Press. On the cover: Chess Gambits; The Immortal Game (Anderssen vs. Kieseritzky); Canada and Chess; Bill Wall's Top 10 Chess Software Programs.

Table of Contents: Editorial~ "My Move"; Feature~ Chess and Canada; Article~ Bill Wall's Top 10 Software Programs; Feature~ The Incomparable Kasparov; Article~ Chess Variants (Unorthodox Chess Variations); Feature~ Proof Games; Article~ The Immortal Game (Anderssen vs. Kieseritzky); Article~ News Around the World; Book Review~ Kasparov on Kasparov, Part One, 1973-1985; Feature~ Chess Gambits; Annotated Game~ Bareev vs. Kasparov; Commentary~ "Ask Bill".

My Move. Editorial - Jerry Wall [email protected] Well it has been over a year now since we started this publication. It is not easy putting together a 32 page magazine on chess every couple of months but it certainly has been rewarding (maybe not so much financially but then that really never was the goal).

Of Kings and Pawns

Of Kings and Pawns: Chess Strategy in the Endgame. Eric Schiller. Copyright © 2006 Eric Schiller. All rights reserved. Universal Publishers, Boca Raton, Florida, USA, 2006. ISBN: 1-58112-909-2 (paperback), ISBN: 1-58112-910-6 (ebook). Universal-Publishers.com

Preface. Endgames with just kings and pawns look simple, but they are actually among the most complicated endgames to learn. This book contains 26 endgame positions in a unique format that gives you not only the starting position, but also a critical position you should use as a target. Your workout consists of looking at the starting position and seeing if you can figure out how to reach the indicated target position. Although this hint makes solving the problems easier, there is still plenty of work for you to do. The positions have been chosen for their instructional value, and often combine many different themes. You'll find examples of the horse race, the opposition, zugzwang, stalemate and the importance of escorting the pawn with the king marching in front, among others. When you start out in chess, king and pawn endings are not very important, because there is usually a great material imbalance at the end of the game, so one side is winning easily. However, as you advance through chess you'll find that these endgame positions play a great role in determining the outcome of the game. It is critically important that you understand when a single pawn advantage or positional advantage will lead to a win and when it will merely wind up drawn with best play.

3 After the Tournament

Important Dates for 2018-19
 - early Sept.: Chess Manual & Rule Book posted online
 - November 1: Preliminary list of entries posted online
 - December 1: Official Entry due. Official Entry should be submitted online by your school's official representative. There is no entry fee, but late entries will incur a $100 late fee.
 - December 1: Updated list of entries posted online
 - December 1: List of Participants form available online. Contact your activities director for your login ID and password. Failure to fill out this form by the deadline constitutes withdrawal from the tournament.
 - January 2: Required rules video posted
 - January 16: Deadline to view online rules presentation. Deadline to submit List of Participants (final

Important Changes (TERMS & CONDITIONS)
 - V-E-3: Removes all restrictions on pairing teams at the state tournament. The result will be that teams from the same conference may be paired in any round.
 - V-E-6: Provides that sectional tournament will only be paired after registration is complete so that last-minute withdrawals can be taken into account.
 - IX-G-4: Clarifies that the use of a smartwatch by a player is illegal, with penalties similar to the use of a cell phone.
 - VII-C-1,4,5,6 and VIII-D-1,2,4: Eliminates individual awards at all levels of the tournament. Eliminates the requirement that a player stay on one board for the entire tournament. Allows players to shift up and down to a different board, while remaining in the "Strength Order" declared by the coach prior to the start of the tournament.

Chess Strategies for Beginners II Top Books for Beginners Chess Thinking

Chess Strategies for Beginners II. Stop making silly moves! Learn Chess Strategies for Beginners to play better chess. Stop losing by making dumb moves. "When you are lonely, when you feel yourself an alien in the world, play Chess. This will raise your spirits and be your counselor in war." Aristotle. Learn chess strategies first at Chess Strategies for Beginners I. After that come back here.

Chess Formation Strategy. I show you now how to start your game. Before you start to play you should know where to place your pieces: know the right chess formation strategy. Where do you place your pawns, knights and bishops, when do you castle, and what happens to the queen and the rooks? When should you attack? Or do you have to attack at all? Questions upon questions. I will give you a rough idea now. Please study the following chess strategies for beginners carefully. Read the Guidelines: Chess Formation Strategy.

Top Books For Beginners. For beginners I recommend Logical Chess - Move by Move by Chernev because it explains every move. Another good book is the Complete Idiot's Guide to Chess, which received very good reviews.

Chess Thinking. Now try to get mentally into the real game and try to understand some of the following positions. Some are difficult to master, but don't worry, just repeat them the next day to get used to chess thinking. Your brain has to adjust, that's all there is to it. Win some Positions here! - Chess Puzzles. Did you manage it all right? It is necessary that you understand the following basic chess strategies for beginners, called Endgames or Endings, using the heavy pieces (queen and rook are called heavy pieces). Check them out now! Rook and Queen Endgames - Basic Chess Strategies. How a Beginner plays Chess: Replay the games of a beginner.

Read Book Winning Chess Strategies

WINNING CHESS STRATEGIES PDF, EPUB, EBOOK. Yasser Seirawan | 272 pages | 01 May 2005 | EVERYMAN CHESS | 9781857443851 | English | London, United Kingdom. Winning Chess Strategies PDF Book. Analyse Your Chess. Beyond what you can calculate, you must rely on strategy to guide you in finding the best plans and moves in a given position. Your biggest concern when playing chess is controlling the center squares, specifically the four in the very middle. One of the world's most creative players combines his attacking talent with the traditionally solid French structure, resulting in a powerful armoury of opening weapons. Later you'll notice that on rare occasions it's best to ignore a principle of chess strategy in the opening; nothing here is carved in granite. Learn to castle. Press forward in groups. I have dozens of books, more than my local library, but they are not as precise and to the point as your lessons. If you keep these points in your head, you'll find you can easily start improvising multi-move plans to win the game: Develop multiple pieces (Rooks, Knights, Queen, Bishops) early and often. Seldom will you move the same piece twice in the chess opening. In the endgame, the fight is less complicated and the weaknesses can be exploited more easily. In the endgame, however, Bishops can quickly move across the entire, much emptier board, while Knights are still slow. Nerves of steel have assured Magnus Carlsen supremacy in the chess world. Most games on this level are not decided by tiny advantages obtained in the opening.

Proposal to Encode Heterodox Chess Symbols in the UCS Source: Garth Wallace Status: Individual Contribution Date: 2016-10-25

Title: Proposal to Encode Heterodox Chess Symbols in the UCS. Source: Garth Wallace. Status: Individual Contribution. Date: 2016-10-25

Introduction. The UCS contains symbols for the game of chess in the Miscellaneous Symbols block. These are used in figurine notation, a common variation on algebraic notation in which pieces are represented in running text using the same symbols as are found in diagrams. While the symbols already encoded in Unicode are sufficient for use in the orthodox game, they are insufficient for many chess problems and variant games, which make use of extended sets.

1. Fairy chess problems. The presentation of chess positions as puzzles to be solved predates the existence of the modern game, dating back to the mansūbāt composed for shatranj, the Muslim predecessor of chess. In modern chess problems, a position is provided along with a stipulation such as “white to move and mate in two”, and the solver is tasked with finding a move (called a “key”) that satisfies the stipulation regardless of a hypothetical opposing player’s moves in response. These solutions are given in the same notation as lines of play in over-the-board games: typically algebraic notation, using abbreviations for the names of pieces, or figurine algebraic notation. Problem composers have not limited themselves to the materials of the conventional game, but have experimented with different board sizes and geometries, altered rules, goals other than checkmate, and different pieces. Problems that diverge from the standard game comprise a genre called “fairy chess”. Thomas Rayner Dawson, known as the “father of fairy chess”, popularized the genre in the early 20th century.
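The figurine notation mentioned in this excerpt can be illustrated with a small sketch. It is not taken from the proposal: the `FIGURINES` table and the `to_figurine` helper are hypothetical names, and the choice to use the white piece symbols for every move is an assumption made for simplicity. The code points themselves (U+2654 to U+265F in the Miscellaneous Symbols block) are the orthodox chess symbols already encoded in Unicode.

```python
import unicodedata

# Orthodox chess symbols already in Unicode (Miscellaneous Symbols block).
# Assumption: the white symbols are used for all moves, as is common in print.
FIGURINES = {
    "K": "\u2654",  # WHITE CHESS KING
    "Q": "\u2655",  # WHITE CHESS QUEEN
    "R": "\u2656",  # WHITE CHESS ROOK
    "B": "\u2657",  # WHITE CHESS BISHOP
    "N": "\u2658",  # WHITE CHESS KNIGHT
}


def to_figurine(san: str) -> str:
    """Replace piece letters in an algebraic move with figurine symbols.

    Files (a-h) are lowercase and castling uses the letter O, so only the
    uppercase piece letters K, Q, R, B, N are affected.
    """
    return "".join(FIGURINES.get(ch, ch) for ch in san)


if __name__ == "__main__":
    for move in ["Nf3", "Bxe5", "O-O", "e8=Q+"]:
        print(move, "->", to_figurine(move))
    # The block runs from the white king to the black pawn.
    print(unicodedata.name("\u2654"), "...", unicodedata.name("\u265F"))
```

A fairy piece has no entry in such a table, which is the gap the proposal describes: composers currently fall back on letter abbreviations or rotated orthodox symbols.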

Braille Chess Association Annual General Meeting and Chess Congress

ChessMoves, March/April 2010. Newsletter of the English Chess Federation. £1.50

Braille Chess Association Annual General Meeting and Chess Congress. (Photo: George Phillips of Kingston-upon-Thames, winner of the Minor section.) This chess extravaganza took place at the Hallmark Hotel, Derby from the 5th to 7th March 2010. Chris Ross and George Phillips each achieved perfect five from five scores in the open and the minor events respectively in the annual BCA AGM tournament. One way of boosting attendance at an AGM! (continued on page 3)

Editorial. Today (18/03/10) CJ de Mooi, the ECF President, visited the Office at Battle for the first time (the original visit was postponed because of the horrendous weather in January). This was only my second meeting with CJ and what a really nice, approachable man he is, unlike the public persona portrayed on websites. Cynthia Gurney, Editor

ECF News. The ECF April Finance Council Meeting will be held in London on the 17th April. Full details and a map are available on the ECF website (www.englishchess.org.uk)

New ECF Manager of Women's Chess! Ljubica Lazarevic [email protected] Ljubica's interest in chess began watching games played at her secondary school's chess club. Soon after she joined what was Grays Chess Club (now Thurrock Chess Club). Over the past few years Ljubica has co-organised tournaments including the British Blitz Championships, as well as playing in congresses in the UK and abroad. Having recently completed her doctorate, Ljubica takes on the role of manager of women's chess and is keen to make the game a more approachable and enjoyable experience for women of all ages and

ECF Batsford Competition. Winner, January-February: Guy Gibson from Kew. The correct answer is 1.e5. This issue's problem: Robin C.

Emanuel Lasker 2nd Classical World Champ from 1894-1921

Emanuel Lasker, 2nd classical world champion from 1894 to 1921. Emanuel Lasker (born December 24, 1868, Berlinchen, Prussia [now Barlinek, Poland]; died January 11, 1941, New York, New York, U.S.) was a German chess player, mathematician, and philosopher who was World Chess Champion for 27 years, from 1894 to 1921, the longest reign of any officially recognised World Chess Champion in history. Lasker, the son of a Jewish cantor, first left Prussia in 1889 and only five years later won the world chess championship from Wilhelm Steinitz. He went on to a series of stunning wins in tournaments at St. Petersburg, Nürnberg, London, and Paris before concentrating on his education. In 1902 he received his doctorate in mathematics from the University of Erlangen-Nürnberg, Germany. In 1904 Lasker resumed his chess career, publishing a magazine, Lasker’s Chess Magazine, for four years and winning against the top masters. He continued to play successfully through 1925, when he retired. He was forced out of retirement, however, after Nazi Germany confiscated his property in 1933. Fleeing first to England, then to the U.S.S.R., and finally to the U.S., he returned to tournament play, where he again competed at the highest levels, a rare achievement for his age. Lasker changed the nature of chess not in its strategy but in its economic base. He became the first chess master to demand high fees and thus paved the way to strengthening the financial status of professional chess players. He invented new endgame theories and then retired for some years to study philosophy and to teach and write.

An Arbiter's Notebook

10.2 Again. By Geurt Gijssen.

Question: Dear Mr. Gijssen, I was playing in a tournament and the board next to me played this game: 1.Nf3 Nf6 2.Ng1 Ng8 3.Nf3 Nf6 4.Ng1 Ng8. When a draw was claimed, the arbiter demanded that both players replay the game. When one of them refused, the arbiter forfeited them. Does the opening position count for purposes of threefold repetition? Matthew Larson (UK)

Answer: In my opinion, the arbiter was right not to accept this game. I refer to Article 12.1 of the Laws of Chess: The players shall take no action that will bring the game of chess into disrepute. To produce a "game" as mentioned in your letter brings the game of chess into disrepute. The only element in your letter that puzzles me is the fact that the arbiter forfeited both players, although only one refused to play a new game. The question of the threefold repetition is immaterial in this case.

Question: Greetings, Mr. Gijssen. I acted as a member of the appeals committee in a rapid chess tournament (G60/sudden death). I also won that tournament, but I am not a strong player, just 2100 FIDE, with a good understanding of the rules. The incident is as follows: A player lost a game and signed the score sheet, and then he appealed the decision of the arbiter to the committee with regards to some bad rulings during the game.
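The arbiter's answer sets the repetition question aside, but the move sequence quoted in the first letter is easy to check mechanically. The following is only a sketch and not part of the column: the helper names are hypothetical, a position is identified by piece placement plus side to move, and it is assumed that the initial position counts as the first occurrence. Castling and en passant rights are ignored, which is harmless here because only knights move.

```python
from collections import Counter


def start_position():
    """Standard starting position as a dict mapping square -> piece letter."""
    back_rank = "RNBQKBNR"
    board = {}
    for i, file in enumerate("abcdefgh"):
        board[file + "1"] = back_rank[i]          # white pieces (uppercase)
        board[file + "2"] = "P"
        board[file + "7"] = "p"
        board[file + "8"] = back_rank[i].lower()  # black pieces (lowercase)
    return board


def position_key(board, white_to_move):
    """Hashable key: piece placement plus the side to move."""
    return (frozenset(board.items()), white_to_move)


def max_repetitions(moves):
    """Play (from, to) moves and return the highest occurrence count of any position."""
    board = start_position()
    white_to_move = True
    seen = Counter([position_key(board, white_to_move)])  # initial position counts once
    for src, dst in moves:
        board[dst] = board.pop(src)  # plain move; captures would simply overwrite
        white_to_move = not white_to_move
        seen[position_key(board, white_to_move)] += 1
    return max(seen.values())


# 1.Nf3 Nf6 2.Ng1 Ng8 3.Nf3 Nf6 4.Ng1 Ng8
GAME = [("g1", "f3"), ("g8", "f6"), ("f3", "g1"), ("f6", "g8")] * 2

if __name__ == "__main__":
    print(max_repetitions(GAME))  # prints 3
```

Under these assumptions the starting position occurs three times: before move 1, after 2...Ng8, and after 4...Ng8.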

The Queen's Gambit

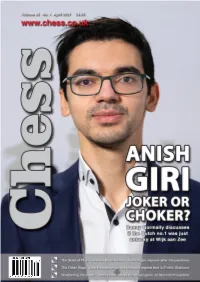

Chess. Founding Editor: B.H. Wood, OBE. M.Sc †. Executive Editor: Malcolm Pein. Editors: Richard Palliser, Matt Read. Associate Editor: John Saunders. Subscriptions Manager: Paul Harrington. Chess Magazine (ISSN 0964-6221) is published by: Chess & Bridge Ltd, 44 Baker St, London, W1U 7RT. Tel: 020 7486 7015. Email: [email protected], Website: www.chess.co.uk. Twitter: @CHESS_Magazine, @TelegraphChess (Malcolm Pein), @chessandbridge. Subscription Rates:

Contents
 - Editorial: Malcolm Pein on the latest developments in the game
 - 60 Seconds with... Geert van der Velde: We catch up with the Play Magnus Group’s VP of Content
 - A Tale of Two Players: Wesley So shone while Carlsen struggled at the Opera Euro Rapid
 - Anish Giri: Choker or Joker? Danny Gormally discusses if the Dutch no.1 was just unlucky at Wijk
 - How Good is Your Chess? Daniel King also takes a look at the play of Anish Giri
 - The Other Saga: John Henderson very much

8 Wheatfield Avenue

THE INTERNATIONAL CORRESPONDENCE CHESS FEDERATION. THEMATIC TOURNAMENT OFFICE: Leonardo Madonia, via L. Alberti 54, IT-40137 Bologna, Italia. E-mail: [email protected]

CHESS 960 EVENTS

Chess 960 changes the initial position of the pieces at the start of a game. This chess variant was created by GM Bobby Fischer and was originally announced in 1996 in Buenos Aires. Fischer's goal was to create a chess variant in which chess creativity and talent would be more important than memorization and analysis of opening moves. His approach was to create a randomized initial chess position, which would thus make memorizing sequences of opening moves far less helpful. The starting position for Chess 960 must meet the following rules:
 - White pawns are placed on their orthodox home squares.
 - All remaining white pieces are placed on the first rank.
 - The white king is placed somewhere between the two white rooks (never on “a1” or “h1”).
 - The white bishops are placed on opposite-colored squares.
 - The black pieces are placed equal-and-opposite to the white pieces. For example, if white's king is placed on b1, then black's king is placed on b8.

There are 960 possible initial positions, each equally likely. Note that one of these initial positions is the standard chess position, but it is not played in this case. Once the starting position is set up, the rules for play are the same as standard chess. In particular, pieces and pawns have their normal moves, and each player's objective is to checkmate their opponent's king. Castling may only occur under the following conditions, which are extensions of the standard rules for castling: 1.
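The placement rules above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the federation's own procedure: `is_valid`, `ALL_BACK_RANKS` and `chess960_back_rank` are hypothetical names, and the random draw shown is just one way to pick each arrangement with equal chance. The string gives White's first rank from file a to file h; Black mirrors it on the eighth rank.

```python
import random
from itertools import permutations


def is_valid(rank):
    """Check the two first-rank rules: bishops on opposite colours, king between rooks."""
    b1, b2 = [i for i, p in enumerate(rank) if p == "B"]
    r1, r2 = [i for i, p in enumerate(rank) if p == "R"]
    k = list(rank).index("K")
    # Adjacent files alternate colour, so opposite colours means one even and one odd index.
    return (b1 + b2) % 2 == 1 and r1 < k < r2


# Brute-force enumeration of every legal first-rank arrangement.
ALL_BACK_RANKS = sorted({"".join(p) for p in permutations("RNBQKBNR") if is_valid(p)})


def chess960_back_rank(rng=random):
    """Draw one arrangement with equal probability for each legal setup."""
    rank = [None] * 8
    rank[rng.choice(range(0, 8, 2))] = "B"  # one bishop on an even-indexed file
    rank[rng.choice(range(1, 8, 2))] = "B"  # the other on an odd-indexed file
    for piece in "QNN":                     # queen, then knights, on any free square
        empty = [i for i, p in enumerate(rank) if p is None]
        rank[rng.choice(empty)] = piece
    # Three squares remain; filling them rook, king, rook keeps the king between the rooks.
    empty = [i for i, p in enumerate(rank) if p is None]
    for i, piece in zip(empty, "RKR"):
        rank[i] = piece
    return "".join(rank)


if __name__ == "__main__":
    print(len(ALL_BACK_RANKS))           # prints 960
    print("RNBQKBNR" in ALL_BACK_RANKS)  # the standard setup is one of them: True
    white = chess960_back_rank()
    print("White:", white, "Black:", white.lower())
```

The count follows from the rules: 4 × 4 bishop placements, 6 squares for the queen, 10 ways to place two knights on the remaining 5 squares, and a single forced rook-king-rook ordering, giving 16 × 6 × 10 = 960. An event that excludes the standard position, as noted above, could simply redraw when `RNBQKBNR` comes up.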

John D. Rockefeller V Embraces Family Legacy with $3 Million Gift to US Chess

Included with this issue: 2021 Annual Buying Guide. John D. Rockefeller V Embraces Family Legacy with $3 Million Gift to US Chess. DECEMBER 2020 | USCHESS.ORG

The United States’ Largest Chess Specialty Retailer. 888.51.CHESS (512.4377) www.USCFSales.com

So you want to improve your chess? NEW! If you want to improve your chess the best place to start is looking how the great champs did it. Three-time U.S. Champion and well-known chess educator Joel Benjamin introduces all World Champions and shows what is important about their play and what you can learn from them. Benjamin presents the most instructive games of each champion. Magic names such as Capablanca, Alekhine, Tal, Karpov and Kasparov, they're all there, up to current World Champion Magnus Carlsen. Of course the crystal-clear style of Bobby Fischer, the 11th World Champion, makes for a very memorable chapter. Studying this book will prove an extremely rewarding experience for ambitious youngsters. A lot of trainers and coaches will find it worthwhile to include the book in their curriculum. paperback | 256 pages | $22.95. from the publishers of A Magazine. Free Ground Shipping On All Books, Software and DVDS at US Chess Sales. $25.00 Minimum. Excludes Clearance, Shopworn and Items Otherwise Marked.

CONTRIBUTORS, DECEMBER. Dan Lucas (Cover Story): Dan Lucas is the Senior Director of Strategic Communication for US Chess. He served as the Editor for Chess Life from 2006 through 2018, making him one of the longest serving editors in US Chess history. This is his first cover story for Chess Life.

{ EDITORIAL } CHESS LIFE/CLO EDITOR John Hartmann ([email protected])