Cleary University Academic Assessment Annual Report 2017

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

New Undergrad Bulletin.Qxp

The Felician Sisters conduct three colleges: Felician College Lodi and Rutherford, New Jersey 07644 Villa Maria College Buffalo, New York 14225 Madonna University Livonia, Michigan 48150 MADONNA UNIVERSITY The M, the first initial of Madonna, is a tribute to Mary, the patroness of Madonna University. The flame symbolizes the Holy Spirit, the source of all knowledge, and signifies the fact that liberal arts education is the aim of Madonna University whose motto is Sapientia Desursum (Wisdom from Above). The upward movement of the slanted M implies continuous commitment to meeting the ever growing educational needs and assurance of standards of academic quality. The box enclosing the M is symbolic of unity through ecumenism. The heavy bottom line of the box signifies the Judeo-Christian foundation of the University. (The Madonna University logo was adopted in 1980) Madonna University guarantees the right to equal education opportunity without discrimination because of race, religion, sex, age, national origin or disabilities. The crest consists of the Franciscan emblem, which is a cross and the two pierced hands of Christ and St. Francis. The Felician Sisters' emblem is the pierced Heart of Mary, with a host symbolizing the adoration of the Eucharist through the Immaculate Heart, to which the Community is dedicated. The University motto, Sapientia Desursum, is translated “Wisdom from Above”. MADONNA UNIVERSITY Undergraduate Bulletin Volume 38, 2004 - 2006 (Effective as of Term I, 2004) Madonna University 36600 Schoolcraft Livonia, Michigan 48150-1173 (734) 432-5300 (800) 852-4951 TTY (734) 432-5753 FAX (734) 432-5393 email: [email protected] Web site: http://www.madonna.edu Madonna University guarantees the right to equal educational opportunity without discrimination because of race, religion, sex, age, national origin, or disabilities. -

Bulletin 2009-2011

Madonna University Graduate Bulletin Your Success Is Our Greatest Achievement Volume 14 . 2009-2011 Madonna University Graduate Bulletin 36600 Schoolcraft Road Livonia, Michigan 48150-1176 www.madonna.edu 2009-2011 Madonna University Calendar Telephone Directory SEMESTER I — FALL 2009-2010 2010-2011 2011-2012 Faculty Conference Aug. 31 Aug. 30 Aug. 29 All phone numbers are preceded by area code 734 Final Registration Sept. 4 Sept. 3 Sept. 2 Classes Begin Sept. 8 Sept. 7 Sept. 6 Course Add Period See Tuition and Fees Section Deans and Graduate Program Directors General Information Deadline: Removal of “I” grade from Spring/Summer semester Sept. 4 Sept. 10 Sept. 9 Graduate School Office 432-5667 Central Switchboard (734) 432-5300 Filing Deadline–Application for Graduation Winter Semester, May Sept. 25 Oct. 1 Sept. 30 Dr. Edith Raleigh, Dean 432-5667 (800) 852-4951 Community Gathering Oct. 9 Oct. 8 Oct. 14 School of Business 432-5355 Orchard Lake Center (248) 683-0521 Web Registration Begins–Winter Semester Oct. 26 Oct. 25 Oct. 24 Dr. Stuart Arends, Dean 432-5366 In Person/Open Registration Begins–Winter Semester Nov. 2 Nov. 1 Oct. 31 Video Phone I.P.# 198.019.72.8 Management and Marketing Chair, Final Date, Election of S Grade Nov. 6 Nov. 5 Nov. 4 Dr. Betty Jean Hebel 432-5357 Final Filing Date/December graduation: Doctoral Capstone Experience — — Nov. 17 Management Information Systems Chair, Student Services Final Date, Withdrawal from courses Nov. 20 Nov. 19 Nov. 18 Dr. William McMillan 432-5367 **Thanksgiving Recess Nov. 26-29 Nov. 25-28 Nov. -

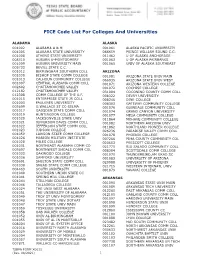

FICE Code List for Colleges and Universities (X0011)

FICE Code List For Colleges And Universities ALABAMA ALASKA 001002 ALABAMA A & M 001061 ALASKA PACIFIC UNIVERSITY 001005 ALABAMA STATE UNIVERSITY 066659 PRINCE WILLIAM SOUND C.C. 001008 ATHENS STATE UNIVERSITY 011462 U OF ALASKA ANCHORAGE 008310 AUBURN U-MONTGOMERY 001063 U OF ALASKA FAIRBANKS 001009 AUBURN UNIVERSITY MAIN 001065 UNIV OF ALASKA SOUTHEAST 005733 BEVILL STATE C.C. 001012 BIRMINGHAM SOUTHERN COLL ARIZONA 001030 BISHOP STATE COMM COLLEGE 001081 ARIZONA STATE UNIV MAIN 001013 CALHOUN COMMUNITY COLLEGE 066935 ARIZONA STATE UNIV WEST 001007 CENTRAL ALABAMA COMM COLL 001071 ARIZONA WESTERN COLLEGE 002602 CHATTAHOOCHEE VALLEY 001072 COCHISE COLLEGE 012182 CHATTAHOOCHEE VALLEY 031004 COCONINO COUNTY COMM COLL 012308 COMM COLLEGE OF THE A.F. 008322 DEVRY UNIVERSITY 001015 ENTERPRISE STATE JR COLL 008246 DINE COLLEGE 001003 FAULKNER UNIVERSITY 008303 GATEWAY COMMUNITY COLLEGE 005699 G.WALLACE ST CC-SELMA 001076 GLENDALE COMMUNITY COLL 001017 GADSDEN STATE COMM COLL 001074 GRAND CANYON UNIVERSITY 001019 HUNTINGDON COLLEGE 001077 MESA COMMUNITY COLLEGE 001020 JACKSONVILLE STATE UNIV 011864 MOHAVE COMMUNITY COLLEGE 001021 JEFFERSON DAVIS COMM COLL 001082 NORTHERN ARIZONA UNIV 001022 JEFFERSON STATE COMM COLL 011862 NORTHLAND PIONEER COLLEGE 001023 JUDSON COLLEGE 026236 PARADISE VALLEY COMM COLL 001059 LAWSON STATE COMM COLLEGE 001078 PHOENIX COLLEGE 001026 MARION MILITARY INSTITUTE 007266 PIMA COUNTY COMMUNITY COL 001028 MILES COLLEGE 020653 PRESCOTT COLLEGE 001031 NORTHEAST ALABAMA COMM CO 021775 RIO SALADO COMMUNITY COLL 005697 NORTHWEST -

Michigan Competitive Scholarship Fact Sheet for Academic Year 2020-21

Michigan Competitive Scholarship Fact Sheet for Academic Year 2020-21 Description The Michigan Competitive Scholarship (MCS) provides renewable scholarships for undergraduate students attending eligible Michigan postsecondary institutions. Awards are based on both academic merit and financial need. Application To apply for MCS, a student must file a Free Application for Federal Student Aid (FAFSA). To check the status of an application, the student can log into the MiSSG Student Portal at www.michigan.gov/missg. MCS Application Deadline The deadline for Academic Year 2020-21 is March 1, 2020. Eligibility To be eligible for award, a student must: • File a FAFSA prior to the MCS application deadline. • Achieve a qualifying score by June 30 of the academic year that the student completed high school or its recognized equivalent: The student’s high school completion year determines which test counts as qualifying: Beginning with the Class of 2017: SAT minimum score of 1200. Ending with the Class of 2016: ACT minimum composite score of 23. MI Student Aid must have the student’s Social Security number on file in order to pair with the FAFSA. • Demonstrate financial need. • Be enrolled in at least six credit hours at a participating institution. All participating institutions are listed on the last page of this Fact Sheet. • Possess a high school diploma or its recognized equivalent. • Be a Michigan resident since July 1 of the previous calendar year. If a dependent student, parent must also be a Michigan resident since July 1 of the previous calendar year. • Be a U.S. citizen, permanent resident, or approved refugee. -

Programs Already Using the Nasba Annual University Brief

PROGRAMS ALREADY USING THE NASBA ANNUAL UNIVERSITY BRIEF Coastal Carolina University Touro College Drexel University Truman State University Farleigh Dickinson University Tulane University Florida International University University of Alabama at Birmingham Fordham University University of Arkansas at Little Rock George Mason University University of California Santa Cruz Iowa State University University of Central Oklahoma James Madison University University of Guam La Salle University University of Houston Lakeland College University of Louisiana at Lafayette Lamar University University of Louisiana at Monroe Lehigh University University of Michigan Dearborn Liberty University University of Minnesota Duluth McNeese State University University of Mississippi Mercer University University of Missouri Columbia Mississippi State University University of Nebraska at Omaha New Mexico State University University of North Texas North Central College University of Pacific Northern Arizona University University of Portland Northwood University University of Rochester Oklahoma Christian University University of South Dakota Oregon State University University of South Carolina Penn State University University of Tennessee Chattanooga Providence College University of Texas Arlington Rhodes College University of Texas San Antonio Rider University University of Wisconsin Whitewater Sacred Heart University Vanderbilt University Sam Houston State University Walsh College Seattle University Wayne State University St. Ambrose University West Chester University Stockton University Western Connecticut State University Stonehill College Western Illinois University SUNY Binghamton Western Michigan University SUNY Oneonta Western New England University SUNY Oswego Widener University Syracuse University Winona State University Texas A&M University Youngstown State University The College of Saint Rose . -

Rochester University 2019 Warrior Fall Classic

Rochester University 2019 Warrior Fall Classic

MEN'S DIVISION

| Ranking | School | Game 1 | Game 2 | Game 3 | Game 4 | Baker Set 1 | Baker Set 2 | Baker Set 3 | Baker Set 4 | TOTAL |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Madonna University | 933 | 924 | 1015 | 984 | 740 | 771 | 864 | 822 | 7053 |
| 2 | Bowling Green State Univ | 939 | 929 | 828 | 898 | 757 | 729 | 788 | 756 | 6624 |
| 3 | Spring Arbor University | 871 | 797 | 897 | 910 | 701 | 691 | 725 | 695 | 6287 |
| 4 | Georgian College | 794 | 941 | 860 | 896 | 710 | 708 | 678 | 674 | 6261 |
| 5 | Olivet College | 917 | 916 | 859 | 917 | 754 | 620 | 641 | 615 | 6239 |
| 6 | Rochester University | 889 | 890 | 846 | 868 | 579 | 745 | 745 | 669 | 6231 |
| 7 | Cornerstone University | 883 | 814 | 816 | 881 | 698 | 732 | 697 | 685 | 6206 |
| 8 | U of M Dearborn | 850 | 868 | 815 | 864 | 679 | 633 | 632 | 704 | 6045 |
| 9 | Bethel University | 867 | 791 | 842 | 855 | 673 | 678 | 657 | 661 | 6024 |
| 10 | Grand Valley State Univ | 736 | 870 | 767 | 801 | 700 | 652 | 585 | 697 | 5808 |
| 11 | Cleary University | 799 | 803 | 739 | 795 | 632 | 625 | 611 | 628 | 5632 |
| 12 | Madonna University - JV | 745 | 747 | 728 | 782 | 738 | 555 | 670 | 594 | 5559 |
| 13 | Ferris State University | 764 | 772 | 769 | 789 | 610 | 633 | 616 | 495 | 5448 |

WOMEN'S DIVISION

| Ranking | School | Game 1 | Game 2 | Game 3 | Game 4 | Baker Set 1 | Baker Set 2 | Baker Set 3 | Baker Set 4 | TOTAL |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Spring Arbor University | 884 | 819 | 912 | 764 | 649 | 642 | 654 | 709 | 6033 |
| 2 | Madonna University | 813 | 783 | 861 | 814 | 637 | 620 | 644 | 704 | 5876 |
| 3 | Rochester University | 791 | 832 | 867 | 754 | 651 | 675 | 591 | 668 | 5829 |
| 4 | Spring Arbor Univ - JV | 700 | 895 | 878 | 826 | 650 | 582 | 609 | 625 | 5765 |
| 5 | U of M Dearborn | 749 | 800 | 741 | 788 | 664 | 611 | 685 | 681 | 5719 |
| 6 | Cornerstone University | 804 | 787 | 756 | 790 | 658 | 635 | 580 | 659 | 5669 |
| 7 | Bowling Green State Univ | 821 | 791 | 759 | 721 | 590 | 653 | 693 | 564 | 5592 |
| 8 | Olivet College | 755 | 747 | 776 | 771 | 593 | 616 | 656 | 539 | 5453 |

9 Madonna Univ - JV 758 776 703 -

Career Pathways in Healthcare

Colleges with Career Pathways in Healthcare Healthcare jobs require a wide range of education and training from short term (such as home health care aides) to moderate training (like pharmacy technicians). Associate’s, bachelor’s and graduate degree programs are needed for fields like dental hygiene, physical therapy, nursing and medicine. One of the largest health care shortage needs is in nursing. Lansing Community College Wayne State University Majors/Programs include: 17 majors in the School of Medicine, College of Health and Human Services Nursing, College of Pharmacy and Department Health Sciences Michigan State University Central Michigan University Majors/Programs include: Majors/Programs include: College of Human Medicine, College of Health Professionals, College of Nursing, Department College of Medicine of Kinesiology Eastern Michigan University University of Michigan Majors/Programs include: Majors/Programs include: Health and Human Nursing, Dental Hygiene, Services Public Health Sciences, School of Medicine Grand Valley State University Western Michigan University Majors/Programs include: Majors/Programs include: Nursing, Allied Health Healthcare Services and Sciences Sciences, Nursing, Pre-Dentistry, Pre-Medicine Oakland University Saginaw Valley State University Majors/Programs include: School of Health Sciences, School Majors/Programs include: of Medicine, School of Nursing Pre-Health Professions University of Detroit Mercy Northern Michigan University Majors/Programs include: Pre-Health Professions, Nursing Majors/Programs include: Nursing, Physicians Assistant Finlandia University Majors/Programs include: Art Therapy Colleges with Career Pathways in Transportation, Distribution, Logistics Planning, management, and movement of people, materials, and goods by road, pipeline, air, rail and water and related professional support services such as transportation infrastructure planning and management, logistics services, mobile equipment and facility maintenance. -

UNDERGRADUATE and GRADUATE Catalog and Student Handbook 2017—2018

UNDERGRADUATE and GRADUATE Catalog and Student Handbook 2017-2018

For more information: 1.800.686.1883 or www.cleary.edu

Cleary University is a member of and accredited by the Higher Learning Commission 30 North LaSalle Street Suite 2400 Chicago, IL 60602-2504 312.263.0456 http://www.ncahlc.org

For information on Cleary University’s accreditation or to review copies of accreditation documents, contact: Dawn Fiser Assistant Provost, Academic Services Cleary University 3750 Cleary Drive Howell, MI 48843

The contents of this catalog are subject to revision at any time. Cleary University reserves the right to change courses, policies, programs, services, and personnel as required. Version 2.1, June 2017

TABLE OF CONTENTS

CLEARY UNIVERSITY
ENROLLMENT AND STUDENT PROFILE
CLEARY UNIVERSITY FACULTY
CLEARY UNIVERSITY ACADEMIC PROGRAMS
OUR VALUE PROPOSITION
Graduation and Retention Rates -

2017-2018 Academic Catalog

NORTHWOOD UNIVERSITY Academic Catalog 2017 - 2018 www.northwood.edu

TABLE OF CONTENTS

WELCOME
ABOUT NORTHWOOD
ADMISSIONS INFORMATION
FINANCIAL INFORMATION
FINANCIAL AID INFORMATION
DEGREE PROGRAM INFORMATION
UNDERGRADUATE CURRICULUM GUIDES
UNDERGRADUATE COURSE DESCRIPTIONS
ACADEMIC POLICIES AND INFORMATION
STUDENT AFFAIRS -

5111 Tuition Incentive Program Flyer

Tuition Incentive PROGRAM

Have you ever been Medicaid eligible? If so, then you might be TIP eligible! If you qualified for Medicaid for at least two years between age nine and high school graduation, you are encouraged to contact MI Student Aid to learn more and complete your TIP application, if eligible.

How Do I Complete My Application or Check My Eligibility?

There are two ways to check eligibility or complete an application:

1. Create a MiSSG Student Portal account, log in, and complete the online application.
2. Call our Customer Care Center at 888-447-2687.

• Only eligible students may complete an application.
• Eligible students must complete the application before August 31st of their graduating year from high school. Students must graduate from high school before their 20th birthday.
• Beginning with the class of 2017, students who attend an Early/Middle College Program have until age 21 to graduate and complete the TIP application. Students who meet these criteria must contact us to complete the application.

Where is the MiSSG Student Portal?

Go to www.michigan.gov/missg and click the Student button.

What Happens After I Complete My Application?

Only the institution you list first on your Free Application for Federal Student Aid (FAFSA) will be notified of your TIP eligibility.

What is TIP?

The Tuition Incentive Program encourages students to complete high school by providing college tuition assistance after graduation.

How does TIP work?

TIP helps the student pursue a certificate or associate degree by providing tuition and mandatory fee assistance at participating Michigan institutions. After earning a certificate, associate degree, or even 56 transferable credits, the student can then be eligible to receive up to $500 per semester while pursuing a bachelor’s degree, not to exceed -

DIRECTORY (As of August 1, 2018)

DIRECTORY (As of August 1, 2018)

Officers of the University

Keith A. Pretty: President and Chief Executive Officer. B.S., Western Michigan University; J.D., Thomas M. Cooley Law School

Kristin Stehouwer: Executive Vice President / Chief Operating Officer / Chief Academic Officer. B.A., M.A., Ph.D., Northwestern University

Rhonda Anderson: Vice President of Enrollment Management. A.A., B.B.A., M.B.A., Northwood University

Justin Marshall: Vice President of Advancement and Alumni Relations. A.A., B.B.A., Northwood University

Timothy Nash: Senior Vice President of Strategic and Corporate Alliances; Robert C. McNair Endowed Chair; Director, The McNair Center for the Advancement of Free Enterprise and Entrepreneurship. A.A., B.B.A., Northwood University; M.A., Central Michigan University; Ed.D., Wayne State University

W. Karl Stephan: Vice President of Finance and Chief Financial Officer/Treasurer. B.A., Yale University; M.B.A., University of Chicago

Rachel Valdiserri -

Military Representatives

Hartland High School ~ 2020 – 2021 School Year Military Recruiters & Military Academy Representatives MILITARY RECRUITERS MILITARY ACADEMIES Air Force United States Air Force Academy Air Force Recruiting Office 2304 Cadet Drive, Suite 2400 30955 Five Mile Rd. USAF Academy, CO 80840-5002 Livonia, MI 48154 (800) 443-9266 SSgt. Jordan Akles, Enlisted Recruiter [email protected] (cell) 734-260-8030 www.usafa.edu [email protected] Army West Point United States Military Academy U.S. Army Recruiting Station CPT Kathleen Rogers 815 S. Latson Rd Great Lakes Regional Commander Howell, MI 48843 606 Thayer Road Sgt. Alesia J. Hughey, Enlisted Recruiter West Point, NY 10996-1797 (office) 517-552-1236 (cell) 517-862-5143 (845) 938-5728 [email protected] [email protected] SSG Tyler E. Teadt, Reserve Recruiter www.westpoint.edu (office) 517-552-1236 (cell) 517-862-5118 [email protected] Marines United States Merchant Marines Academy Marine Recruiting Office LCDR Tina Schoggers, USMS 204 W. Grand River Midwest Admissions Officer Howell, MI 48843 300 Steamboat Road Sgt. Ryan J. Robert, Recruiter Kings Point, NY 11024 (office) 517-546-6542 (cell) 248-631-8685 (866) 546-4778 [email protected] [email protected] www.usmma.edu Navy United States Naval Academy Naval Recruiting Station Office of Admissions 6250 S. Cedar St. 52 King George St. Lansing, MI 48911 Annapolis, MD 21402-5017 HT2 Adam Smrekar, Recruiter (410) 293-1858 (office) 517-393-0224 (cell) 517-425-1396 [email protected] [email protected] www.usna.edu Officer Recruiter 2601 Coolidge Rd #205 East Lansing, MI 48823 (office) 517-337-9390 Michigan Air National Guard United States Coast Guard Academy Selfridge Air National Guard Base LTJG John Hu 44875 N.