A Survey of Raaga Recognition Techniques and Improvements to the State-Of-The-Art

Total pages: 16

File type: PDF, Size: 1020 KB


Recommended publications


Towards Automatic Audio Segmentation of Indian Carnatic Music

Friedrich-Alexander-Universität Erlangen-Nürnberg. Master Thesis: Towards Automatic Audio Segmentation of Indian Carnatic Music, submitted by Venkatesh Kulkarni, July 29, 2014. Supervisor / Advisor: Dr. Balaji Thoshkahna, Prof. Dr. Meinard Müller. Reviewers: Prof. Dr. Meinard Müller. International Audio Laboratories Erlangen, a joint institution of the Friedrich-Alexander-Universität Erlangen-Nürnberg (FAU) and Fraunhofer Institute for Integrated Circuits IIS. Declaration: I hereby declare in lieu of an oath that I have prepared this thesis independently and without using any aids other than those stated. Data and concepts taken from other sources, directly or indirectly, are marked with a reference to the source. This thesis has not previously been submitted, in Germany or abroad, in the same or a similar form in any procedure for obtaining an academic degree. Erlangen, July 29, 2014, Venkatesh Kulkarni. Acknowledgements: I would like to express my gratitude to my supervisor, Dr. Balaji Thoshkahna, whose expertise, understanding and patience added considerably to my learning experience. I appreciate his vast knowledge and skill in many areas (e.g., signal processing, Carnatic music, ethics and interaction with participants). He provided me with direction and technical support, and became more of a friend than a supervisor. A very special thanks goes out to Prof. Dr. Meinard Müller, without whose motivation and encouragement I would not have considered a graduate career in music signal analysis research. Prof. Dr. Meinard Müller is the one professor/teacher who truly made a difference in my life. He was always there to give his valuable and inspiring ideas during my thesis, which motivated me to think like a researcher.

Syllabus for Post Graduate Programme in Music

Appendix to U.O.No.Acad/C1/13058/2020, dated 10.12.2020. KANNUR UNIVERSITY. SYLLABUS FOR POST GRADUATE PROGRAMME IN MUSIC UNDER CHOICE BASED CREDIT SEMESTER SYSTEM FROM 2020 ADMISSION. NAME OF THE DEPARTMENT: DEPARTMENT OF MUSIC. NAME OF THE PROGRAMME: MA MUSIC. DEPARTMENT OF MUSIC, KANNUR UNIVERSITY, SWAMI ANANDA THEERTHA CAMPUS, EDAT PO, PAYYANUR, PIN: 670327. ABOUT THE DEPARTMENT. The Department of Music, Kannur University, was established in 2002. The Department offers the MA Music programme and a PhD. So far 17 batches of students have graduated from this Department. It is the only institution offering a PG programme in Music in the Malabar area of Kerala. The Department functions at the Swami Ananda Theertha Campus, Kannur University, Edat, Payyanur. The Department has a well-equipped library with more than 1800 books and subscriptions to over 10 journals on music. We have a good digital collection of recordings of well-known musicians. The Department also possesses a variety of musical instruments such as Tambura, Veena, Violin, Mridangam, Keyboard, Harmonium, etc. The Department is active in research on various facets of music. So far 7 scholars have been awarded a PhD, and two PhD theses are under evaluation. The Department of Music conducts seminars, lecture programmes and music concerts. It has conducted seminars and workshops in collaboration with the Indira Gandhi National Centre for the Arts, New Delhi; All India Radio; the Zonal Cultural Centre under the Ministry of Culture, Government of India; and the Folklore Academy, Kannur.

Fusion Without Confusion Raga Basics Indian

Fusion Without Confusion: Raga Basics, Indian Rhythm Basics. Solkattu, also known as konnakol, is the art of performing percussion syllables vocally. It comes from the Carnatic music tradition of South India and is mostly used in conjunction with instrumental music and dance instruction, although it has been widely adopted throughout the world as a modern composition and performance tool. Similarly, the music of North India has its own system of rhythm vocalization based on bols: vocalized syllables that correspond to specific sounds made on the drums of North India, most notably the tabla. As in the south, the bols are used in musical training as well as in composition and performance. In addition, solkattu sounds are often referred to as bols, and the practice of reciting bols in the north is sometimes referred to as solkattu, so the distinction between the two practices is somewhat blurred. The exercises and compositions we will discuss contain bols that are found in both North and South India; however, they come from the tradition of the North Indian tabla. Furthermore, the theoretical aspect of the compositions is distinctly from the Hindustani (North Indian) tradition. Hence, for the purpose of this presentation, the term Solkattu refers to the broader, more general practice of Indian rhythmic language. South Indian Percussion: Mridangam, Dholak, Kanjira, Ghatam. North Indian Percussion: Tabla, Baya (a.k.a. Tabla), Pakhawaj. Indian Rhythm Terms: Tal (also tala, taal, or taala): the Indian system of rhythm. Tal literally means "clap".

Applying Natural Language Processing and Deep Learning Techniques for Raga Recognition in Indian Classical Music

Applying Natural Language Processing and Deep Learning Techniques for Raga Recognition in Indian Classical Music. Deepthi Peri. Dissertation submitted to the Faculty of the Virginia Polytechnic Institute and State University in partial fulfillment of the requirements for the degree of Master of Science in Computer Science and Applications. Eli Tilevich (Chair), Sang Won Lee, Eric Lyon. August 6th, 2020, Blacksburg, Virginia. Keywords: Raga Recognition, ICM, MIR, Deep Learning. Copyright 2020, Deepthi Peri. Abstract: In Indian Classical Music (ICM), the Raga is a musical piece’s melodic framework. It encompasses the characteristics of a scale, a mode, and a tune, with none of them fully describing it, rendering the Raga a unique concept in ICM. The Raga provides musicians with a melodic fabric, within which all compositions and improvisations must take place. Identifying and categorizing the Raga is challenging due to its dynamism and complex structure as well as the polyphonic nature of ICM. Hence, Raga recognition (identifying the constituent Raga in an audio file) has become an important problem in music informatics, with several known prior approaches. Advancing the state of the art in Raga recognition paves the way to improving other Music Information Retrieval tasks in ICM, including transcribing notes automatically, recommending music, and organizing large databases. This thesis presents a novel melodic pattern-based approach to recognizing Ragas by representing this task as a document classification problem, solved by applying a deep learning technique. A digital audio excerpt is hierarchically processed and split into subsequences and gamaka sequences to mimic a textual document structure, so our model can learn the resulting tonal and temporal sequence patterns using a Recurrent Neural Network.

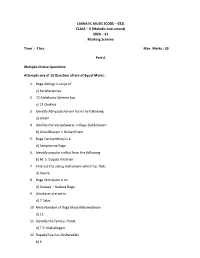

CARNATIC MUSIC (CODE – 032) CLASS – X (Melodic Instrument) 2020 – 21 Marking Scheme

CARNATIC MUSIC (CODE – 032) CLASS – X (Melodic Instrument) 2020 – 21 Marking Scheme. Time: 2 hrs. Max. Marks: 30.
Part A. Multiple Choice Questions: attempt any 15 questions; all carry equal marks.
1. Raga Abhogi is a Janya of a) Karaharapriya
2. The 72 Melakarta scheme has c) 12 Chakras
3. Identify the Abhyasa Ganam from the following d) Gitam
4. Identify the Varjya Swaras in Raga Suddha Saveri b) Gandharam – Nishadam
5. Raga Harikambhoji is a d) Sampoorna Raga
6. Identify the popular violinist from the following b) M. S. Gopalakrishnan
7. Find the string instrument which has frets d) Veena
8. Raga Mohanam is an d) Audava – Audava Raga
9. Alankaras are set to d) 7 Talas
10. Mela number of Raga Mayamalavagowla d) 15
11. Identify the famous flutist d) T. R. Mahalingam
12. Rupaka Tala has Aksharakalas b) 6
13. Identify the composer of the Navagraha kritis c) Muthuswami Dikshitar
14. Essential angas of a kriti are a) Pallavi – Anupallavi – Charanam b) Pallavi – multiple Charanams c) Pallavi – Muktayi Swaram d) Pallavi – Charanam
15. Raga SuddaDeven is a Janya of a) Sankarabharanam
16. Identify the composer of the famous Ghana Pancharatna Kritis a) Thyagaraja
17. Find the most important accompanying instrument for a vocal concert b) Mridangam
18. A musical form set to different ragas c) Ragamalika
19. Identify the dance form of music b) Tillana
20. Raga Sri Ranjani is a Janya of a) Karaharapriya
21. Find the popular Veena artist d) S. Balachander
Part B. Answer any five questions. All questions carry equal marks. 5 × 3 = 15
1. Gitam: Gitams are the simplest musical form. The term “Gita” means song; it is a melodic extension of the raga in which it is composed.
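The two answers above about the Melakarta scheme (72 melas in 12 chakras, and mela number 15 for Mayamalavagowla) can be illustrated with a small sketch. It assumes the conventional layout of six melas per chakra, with melas 1 to 36 taking the lower Madhyamam and 37 to 72 the higher one. The swara labels (R1, G3, and so on) and the function name `melakarta_swaras` are illustrative choices, not taken from the marking scheme.

```python
# Ri-Ga pairs cycle once per chakra; Dha-Ni pairs cycle within each chakra.
# The R1/G3-style labels are an assumed naming convention for illustration.
RI_GA = [("R1", "G1"), ("R1", "G2"), ("R1", "G3"),
         ("R2", "G2"), ("R2", "G3"), ("R3", "G3")]
DHA_NI = [("D1", "N1"), ("D1", "N2"), ("D1", "N3"),
          ("D2", "N2"), ("D2", "N3"), ("D3", "N3")]


def melakarta_swaras(number):
    """Return (chakra, swaras) for a mela number between 1 and 72."""
    if not 1 <= number <= 72:
        raise ValueError("mela number must be between 1 and 72")
    index = number - 1
    chakra = index // 6                  # 0..11, six melas per chakra
    ma = "M1" if index < 36 else "M2"    # first half M1, second half M2
    ri, ga = RI_GA[chakra % 6]
    dha, ni = DHA_NI[index % 6]
    return chakra + 1, ["S", ri, ga, ma, "P", dha, ni]


chakra, swaras = melakarta_swaras(15)    # Mayamalavagowla
print(chakra, " ".join(swaras))
```

Under these assumptions, mela 15 falls in the third chakra, and the 72 melas divide evenly into 12 chakras of six.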

1 ; Mahatma Gandhi University B. A. Music Programme(Vocal

MAHATMA GANDHI UNIVERSITY B. A. MUSIC PROGRAMME (VOCAL) COURSE DETAILS

Sem | Course Title | Hrs/Week | Credit | Exam Hrs. (Practical 30 mts, Theory 3 hrs.) | Total Credit
I | Common Course – 1 | 5 | 4 | 3 | 20
I | Common Course – 2 | 4 | 3 | 3 |
I | Common Course – 3 | 4 | 4 | 3 |
I | Core Course – 1 (Practical) | 7 | 4 | 30 mts |
I | 1st Complementary – 1 (Instrument) | 3 | 3 | Practical 30 mts |
I | 2nd Complementary – 1 (Theory) | 2 | 2 | 3 |
II | Common Course – 4 | 5 | 4 | 3 | 20
II | Common Course – 5 | 4 | 3 | 3 |
II | Common Course – 6 | 4 | 4 | 3 |
II | Core Course – 2 (Practical) | 7 | 4 | 30 mts |
II | 1st Complementary – 2 (Instrument) | 3 | 3 | Practical 30 mts |
II | 2nd Complementary – 2 (Theory) | 2 | 2 | 3 |
III | Common Course – 7 | 5 | 4 | 3 | 19
III | Common Course – 8 | 5 | 4 | 3 |
III | Core Course – 3 (Theory) | 3 | 4 | 3 |
III | Core Course – 4 (Practical) | 7 | 3 | 30 mts |
III | 1st Complementary – 3 (Instrument) | 3 | 2 | Practical 30 mts |
III | 2nd Complementary – 3 (Theory) | 2 | 2 | 3 |
IV | Common Course – 9 | 5 | 4 | 3 | 19
IV | Common Course – 10 | 5 | 4 | 3 |
IV | Core Course – 5 (Theory) | 3 | 4 | 3 |
IV | Core Course – 6 (Practical) | 7 | 3 | 30 mts |
IV | 1st Complementary – 4 (Instrument) | 3 | 2 | Practical 30 mts |
IV | 2nd Complementary – 4 (Theory) | 2 | 2 | 3 |
V | Core Course – 7 (Theory) | 4 | 4 | 3 | 21
V | Core Course – 8 (Practical) | 6 | 4 | 30 mts |
V | Core Course – 9 (Practical) | 5 | 4 | 30 mts |
V | Core Course – 10 (Practical) | 5 | 4 | 30 mts |
V | Open Course – 1 (Practical/Theory) | 3 | 4 | Practical 30 mts, Theory 3 hrs |
V | Course Work/Project Work – 1 | 2 | 1 | |
VI | Core Course – 11 (Theory) | 4 | 4 | 3 | 21
VI | Core Course – 12 (Practical) | 6 | 4 | 30 mts |
VI | Core Course – 13 (Practical) | 5 | 4 | 30 mts |
VI | Core Course – 14 (Practical) | 5 | 4 | 30 mts |
VI | Elective (Practical/Theory) | 3 | 4 | Practical 30 mts, Theory 3 hrs |
VI | Course Work/Project Work – 2 | 2 | 1 | |
Total | | 150 | 120 | | 120

Core & Complementary: 104 hrs, 82 credits. Common Course: 46 hrs, 38 credits. Practical examination will be conducted at the end of each semester.

Tillana Raaga: Bageshri; Taala: Aadi; Composer

Tillana Raaga: Bageshri; Taala: Aadi; Composer: Lalgudi G. Jayaraman Aarohana: Sa Ga2 Ma1 Dha2 Ni2 Sa Avarohana: Sa Ni2 Dha2 Ma1 Pa Dha2 Ga2 Ma1 Ga2 Ri2 Sa SaNiDhaMa .MaPaDha | Ga. .Ma | RiRiSa . || DhaNiSaGa .SaGaMa | Dha. MaDha| NiRi Sa . || DhaNiSaMa .GaRiSa |Ri. NiDha | NiRi Sa . || SaRiNiDha .MaPaDha |Ga . Ma . | RiNiSa . || Sa ..Ni .Dha Ma . |Sa..Ma .Ga | RiNiSa . || Sa ..Ni .Dha Ma~~ . |Sa..Ma .Ga | RiNiSa . || Pallavi tom dhru dhru dheem tadara | tadheem dheem ta na || dhim . dhira | na dhira na Dhridhru| (dhirana: DhaMaNi .. dhirana.: DhaMaGa .) tom dhru dhru dheem tadara | tadheem dheem ta na || dhim . dhira | na dhira na Dhridhru|| (dhirana: MaDha NiSa.. dhirana:DhaMa Ga..) tom dhru dhru dheem tadara | tadheem dheem ta na || (ta:DhaNi na:NiGaRi) dhim . dhira | na dhira na Dhridhru|| (dhirana:NiGaSaSaNi. Dhirana:DhaSaNiNiDha .) tom dhru dhru dheem tadara | tadheem dheem ta na || dhim . dhira | na dhira na Dhridhru|| (dhira:GaMaDhaNi na:GaGaRiSa dhira:NiDha na:Ga..) tom dhru dhru dheem tadana | tadheem dheem ta na || dhim.... Anupallavi SaMa .Ga MaNi . Dha| NiGa .Ri | NiDhaSa . || GaRi .Sa NiMa .Pa | Dha Ga..Ma | RiNi Sa . || naadhru daani tomdhru dhim | ^ta- ka-jha | Nuta dhim || … naadhru daani tomdhru dhim | (Naadru:MaGa, daani:DhaMa, tomdhru:NiDha, dhim: Sa) ^ta- ka-jha | Nuta dhim || (NiDha SaNi RiSa) taJha-Nu~ta dhim jhaNu | (tajha:SaSa Nu~ta: NiSaRiSa dhim:Ni; jha~Nu:MaDhaNi. tadhim . na | ta dhim ta || (tadhim:Dha Ga..;nata dhimta: MNiDha Sa.Sa) tanadheem .tatana dheemta tanadheem |(tanadheemta: DhaNi Ri ..Sa tanadheem: NiRiSa. .Sa tanadheem: NiDhaNi . ) .dheem dheemta | tom dhru dheem (dheem: Sa deemta:Ga.Ma tomdhrudeem:Ri..Ri Sa) .dheem dheem dheemta ton-| (dheem:Dha. -

Carnatic Music Theory Year I

CARNATIC MUSIC THEORY YEAR I. BASED ON THE SYLLABUS FOLLOWED BY GOVERNMENT MUSIC COLLEGES IN ANDHRA PRADESH AND TELANGANA FOR CERTIFICATE EXAMS HELD BY POTTI SRIRAMULU TELUGU UNIVERSITY. ANANTH PATTABIRAMAN. EDITION: 2.6. Latest edition can be downloaded from https://beautifulnote.com/theory Preface: This text covers topics on Carnatic music required to clear the first year exams in Government music colleges in Andhra Pradesh and Telangana. Also, this is the first of four modules of theory as per Certificate in Music (Carnatic) examinations conducted by Potti Sriramulu Telugu University. So, if you are a music student from one of the above mentioned colleges, or preparing to appear for the university exam as a private candidate, you’ll find this useful. Though attempts are made to keep this text up-to-date with changes in the syllabus, students are strongly advised to consult the college or university and make sure all necessary topics are covered. This might also serve as an easy-to-follow introduction to Carnatic music for those who are generally interested in the system but not appearing for any particular examination. I’m grateful to my late guru, veteran violinist Vidwan Peri Sriramamurthy, for his guidance in preparing this document. Ananth Pattabiraman. Editions: First published in 2009, editions 2–2.2 in 2017, 2.3–2.5 in 2018, 2.6 in 2019. Latest edition available at https://beautifulnote.com/theory Copyright: This work is copyrighted and is distributed under Creative Commons BY-NC-ND 4.0 license. You can make copies and share freely.

A Study on the Effects of Music Listening Based on Indian Time Theory of Ragas on Patients with Pre-Hypertension

International Journal of Scientific & Engineering Research, Volume 9, Issue 1, January-2018, ISSN 2229-5518. A STUDY ON THE EFFECTS OF MUSIC LISTENING BASED ON INDIAN TIME THEORY OF RAGAS ON PATIENTS WITH PRE-HYPERTENSION. Suguna V, Masters in Indian Music, PGD in Music Therapy. IJSER © 2018 http://www.ijser.org 1. ABSTRACT 1.1 INTRODUCTION: Pre-hypertension is more prevalent than hypertension worldwide. The pre-hypertensive state is usually managed with lifestyle modifications, including listening to relaxing music, that can help prevent the progressive rise in blood pressure and cardiovascular disorders. This study was taken up to find out the effects of listening to Indian classical music based on the time theory of Ragas (playing a Raga at the right time) on pre-hypertensive patients and how they respond to this concept. 1.2 AIM & OBJECTIVES: The study was undertaken to understand the effect of listening to Indian classical instrumental music based on the time theory of Ragas on pre-hypertensive patients. 1.3 SUBJECTS & METHODS: This is a repeated-measures, randomized-group study with a music listening experimental group and a control group receiving no music intervention. The study was conducted at the Center for Music Therapy Education and Research at Mahatma Gandhi Medical College and Research Institute, Pondicherry. Thirty pre-hypertensive outpatients from the Department of General Medicine participated in the study after giving informed consent. Systolic BP (SBP), Diastolic BP (DBP), Pulse Rate (PR) and Respiratory Rate (RR) measures were taken at baseline and every week for a month.

Rāga Recognition Based on Pitch Distribution Methods

Rāga Recognition based on Pitch Distribution Methods. Gopala K. Koduri (a), Sankalp Gulati (a), Preeti Rao (b), Xavier Serra (a). (a) Music Technology Group, Universitat Pompeu Fabra, Barcelona, Spain. (b) Department of Electrical Engg., Indian Institute of Technology Bombay, Mumbai, India. Abstract: Rāga forms the melodic framework for most of the music of the Indian subcontinent. Thus automatic rāga recognition is a fundamental step in the computational modeling of the Indian art-music traditions. In this work, we investigate the properties of rāga and the natural processes by which people identify it. We bring together and discuss the previous computational approaches to rāga recognition, correlating them with human techniques, in both Karṇāṭak (south Indian) and Hindustānī (north Indian) music traditions. The approaches which are based on first-order pitch distributions are further evaluated on a large comprehensive dataset to understand their merits and limitations. We outline the possible short and mid-term future directions in this line of work. Keywords: Indian art music, Rāga recognition, Pitch-distribution analysis. 1. Introduction: There are two prominent art-music traditions in the Indian subcontinent: Karṇāṭak in the Indian peninsula and Hindustānī in north India, Pakistan and Bangladesh. Both are orally transmitted from one generation to another, and are heterophonic in nature (Viswanathan & Allen (2004)). Rāga and tāla are their fundamental melodic and rhythmic frameworks respectively. However, there are ample differences between the two traditions in their conceptualization (see Narmada (2001) for a comparative study of rāgas). There is an abundance of musicological resources that thoroughly discuss the melodic and rhythmic concepts (Viswanathan & Allen (2004); Clayton (2000); Sambamoorthy (1998); Bagchee (1998)).
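As a rough illustration of what a first-order pitch distribution can look like, the sketch below folds a sequence of fundamental-frequency estimates into a single octave relative to the tonic and counts how often each pitch class occurs. It is a minimal sketch, not the authors' method: the function name `pitch_class_distribution`, the 12-bin resolution, and the convention that non-positive values mark unvoiced frames are all assumptions made for the example.

```python
import math


def pitch_class_distribution(f0_hz, tonic_hz, bins_per_octave=12):
    """Normalized octave-folded pitch histogram relative to the tonic."""
    counts = [0] * bins_per_octave
    bin_width = 1200.0 / bins_per_octave          # bin width in cents
    for f in f0_hz:
        if f <= 0:                                # assumed: unvoiced frame
            continue
        cents = 1200.0 * math.log2(f / tonic_hz)  # distance from the tonic
        # Round to the nearest bin centre, then wrap into one octave.
        idx = int(round(cents / bin_width)) % bins_per_octave
        counts[idx] += 1
    total = sum(counts)
    if total == 0:
        return [0.0] * bins_per_octave
    return [c / total for c in counts]


# Tonic at 220 Hz: two frames on the tonic (one an octave up), one on the fifth.
dist = pitch_class_distribution([220.0, 440.0, 330.0, 0.0], tonic_hz=220.0)
print(dist)
```

A finer resolution (more bins per octave) would retain more of the pitch inflection between nominal note positions, at the cost of a sparser histogram.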

Carnatic Music Primer

A KARNATIC MUSIC PRIMER. P. Sriram. PUBLISHED BY The Carnatic Music Association of North America, Inc. ABOUT THE AUTHOR: Dr. Parthasarathy Sriram is an aerospace engineer, with a bachelor’s degree from IIT, Madras (1982) and a Ph.D. from Georgia Institute of Technology, where he is currently a research engineer in the Dept. of Aerospace Engineering. The preface written by Dr. Sriram speaks of why he wrote this monograph. At present Dr. Sriram is looking after the affairs of the provisionally recognized South Eastern chapter of the Carnatic Music Association of North America in Atlanta, Georgia. CMANA is very privileged to publish this scientific approach to Carnatic Music written by a young student of music. © copyright by CMANA, 375 Ridgewood Ave, Paramus, New Jersey 1990. Price: $3.00. Table of Contents: Preface; Introduction; Swaras and Swarasthanas; Ragas; The Melakarta Scheme; Janya Ragas

Department of Indian Music Name of the PG Degree Program

Department of Indian Music. Name of the PG Degree Program: M.A. Indian Music

First Semester
FPAC101 Foundation Course in Performance – 1 (Practical) C 4
FPAC102 Kalpita Sangita 1 (Practical) C 4
FPAC103 Manodharma Sangita 1 (Practical) C 4
FPAC114 Historical and Theoretical Concepts of Fine Arts – 1 (Theory) C 4
FPAE101 Elective Paper 1 - Devotional Music - Regional Forms of South India (Practical) E 3
FPAE105 Elective Paper 2 - Compositions of Muttusvami Dikshitar (Practical) E 3
Soft Skills: Languages (Sanskrit and Telugu) S 2

Second Semester
FPAC105 Foundation Course in Performance – 2 (Practical) C 4
FPAC107 Manodharma Sangita 2 (Practical) C 4
FPAC115 Historical and Theoretical Concepts of Fine Arts – 2 (Theory) C 4
FPAE111 Elective Paper 3 - Opera – Nauka Caritram (Practical) E 3
FPAE102 Elective Paper 4 - Nandanar caritram (Practical) E 3
FPAE104 Elective Paper 5 – Percussion Instruments (Theory) E 3
Soft Skills: Languages (Kannada and Malayalam) S 2

Third Semester
FPAC108 Foundation Course in Performance – 3 (Practical) C 4
FPAC106 Kalpita Sangita 2 (Practical) C 4
FPAC110 Alapana, Tanam & Pallavi – 1 (Practical) C 4
FPAC116 Advanced Theory – Music (Theory) C 4
FPAE106 Elective Paper 6 - Compositions of Syama Sastri (Practical) E 3
FPAE108 Elective Paper 7 - South Indian Art Music - An appreciation (Theory) E 3
UOMS145 Source Readings-Selected Verses and Passages from Tamiz Texts (Theory) S 2
uom1001 Internship S 2

Fourth Semester
FPAC117 Research Methodology (Theory) C 4
FPAC112 Project work and Viva Voce C 8
FPAC113 Alapana,