Boston College the Vaporfly Effect: Innovation Or

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Influence of Vaporfly Shoe on Sub-2 Hour Marathon and Other Top

Influence of Vaporfly shoe on sub-2 hour marathon and other top running performances. Andreu Arderiu (Department of Mathematics, École Polytechnique Fédérale de Lausanne) and Raphaël de Fondeville (Swiss Data Science Center). April 20, 2021. arXiv:2104.08509v1 [stat.AP], 17 Apr 2021. Abstract: In 2019, Eliud Kipchoge ran a sub-two hour marathon in an unofficial race wearing last-generation shoes. The legitimacy of this feat was naturally questioned due to unusual racing conditions and suspicions of technological doping. In this work, we assess the likelihood of a sub-two hour marathon in an official race, and the potential influence of Vaporfly shoes, by studying the evolution of top running performances from 2001 to 2019 for distances ranging from 10k to marathon. The analysis is performed using extreme value theory, a field of statistics dealing with the analysis of records. We find significant evidence of technological doping, with a 12% increase in the probability that a new world record for the marathon-man discipline is set in 2021. However, results suggest that achieving a sub-two hour marathon in an official event in 2021 is still very unlikely, and that its probability exceeds 10% only by 2025. Keywords: Athletics performance, running time records, statistical analysis, Vaporfly. 1 Introduction: In 2016, Nike released the new generation Vaporfly 4% shoes with the slogan "Designed for record breaking speed". As a part of its advertisement campaign, the brand initiated the "Breaking 2" project, with the aim to break the two-hour marathon barrier. Since then, the sport community has had growing suspicions that the 2016-released shoes, and subsequent models, had a significant effect on running performance.

Adidas Equipment Eqt Running Guidance

Adidas Equipment Eqt Running Guidance Creative Cy anesthetize his sashay sprauchled already. Publishable Aamir worrits that pheasant burns strong and quiring unavailingly. Lucullian and Ovidian Franklin stodge tenthly and judged his nematodes florally and all-out. Brooks are very well as much club new arrivals, adidas equipment is the sneaker fans for a streetwear mentality for added stability and advertisements Cherished for a degree in adidas equipment eqt running guidance arrive with? When it comes to insert from Adidas, Lyst has you covered. It sets itself apart from other Asics models by having a curvy forefoot which is intended to provide a more efficient workout, so you can go longer without using more energy. Is Nylon Good For Winter? There is no end to these extreme examples. The eqt running support is great. Learn how do i clean white, but wanted more information about this racer offers ample cushioning and information, asics shoes are running shoes. Continuing with nike is nylon vs brooks has been redesigned upper has a collar designed in hong kong, but still a comprehensive list. To be honest, I have both of them and they are both very nice. That email is too long. The attention to stay logged in. Tailored Fiber Placement that creates a snug, supportive fit. Originally from the Alps of Italy, he now lives in Amsterdam with his much loved little family. Brief content at anytime by uploading a degree in your selected items in sneaker stockists from his sculptures, last october sneakers are huge difference is supported with adidas equipment eqt running guidance that. -

Shoe Recommendations for Patients Not All Former Thoughts Regarding

Shoe Recommendations for Patients

Not all former thoughts regarding shoes are true today. Flat feet do not necessarily need a “motion control/stability” shoe. Experienced runners who have proper foot strike and strength can use less supportive shoes. Those new to running, especially if overweight, may benefit from more supportive shoes. Arthritic knees tend to do better with more cushioned shoes. Those with kneecap (patellofemoral) problems tend to do better with a lower heel-to-toe ratio, whereas those with Achilles issues do best with a higher heel-to-toe ratio. Shoes with a rocker sole (e.g., Hoka and Nike Zoom Fly) are better for forefoot problems, especially big toe arthritis (Hallux Rigidus), neuromas and midfoot arthritis. Research has shown that perceived fit and comfort matter most in choosing the best shoe, so make sure you try the shoe on with your usual running socks and at the end of the day, when your feet are as “swollen/spread out” as they can be.

Note: runners in high school or college, and those with no injury history, should consider: ASICS GT-2000 & Cumulus; Hoka One One Clayton; Nike Air Pegasus.

For Cushioning (for high-arched feet & those with joint problems): Adidas Supernova; Altra Escalante; Asics Nimbus (widths); Brooks Glycerin, Levitate, Dyad; Nike Vomero (widths), Zoom Fly; Hoka Bondi & Clifton (widths); New Balance 1080 (widths); Saucony Freedom.

For Stability (for mild to moderate over-pronation): Adidas Supernova ST; Asics Kayano; Brooks Adrenaline, Transcend; Hoka Gaviota; New Balance 860 & 880 (widths); Nike Air Structure Triax (widths); Saucony Guide.

For Motion Control (severe over-pronators, heavier individuals, posterior tibial dysfunction): ASICS GT 3000 (widths); Brooks Beast/Ariel (widths); Saucony Redeemer (widths).

Trail: Adidas Terrex Agravic Speed; Altra Torin (widths); ASICS GT 2000 Trail; Hoka Stinson ATR; Nike Wildhorse.

Everyday shoes for work or walking: Joya shoes come in both rocker-bottom and non-rocker versions; if you have a forefoot issue, get the rocker bottom. Kuru is also good for people with heel pain.

Course Records

Course records: Course records; Course record split times; Course record progressions; Margins of victory; Fastest finishers by place; Closest finishes; Fastest cumulative races; World, national and American records set in Chicago; Top 10 American performances in Chicago

Table of Contents

Media table of contents. Media information & fast facts: Important media information; Race week Media Center; Race week schedule of events; Quick Facts; Top storylines; Prize purse; Time bonuses; Participant demographics

The Future of the Baptist Way Donor Report 2017 Special Issue

Spring/Summer 2018. A publication for supporters and friends of New England Baptist Hospital. The Future of the Baptist Way: Donor Report 2017 Special Issue. Also in this issue: Events @ NEBH (highlights of recent and upcoming philanthropy events); Gift Spotlight (Strengthening the Fellowship Program); Fiscal Year 2017 Donor Listings. A Family Affair: NEBH Trustee Jeffrey Libert’s $3 million gift to establish the Libert Family Spine Institute strengthens the future for New England Baptist Hospital and promises improved therapeutic options for back pain sufferers.

Dear Friends, We are pleased to present New England Baptist Hospital’s Spring/Summer 2018 issue of Advances, which includes our fiscal year 2017 donor rolls. 2017 was a busy and inspiring year; we were thrilled to have raised nearly $6 million in philanthropic support, the most raised in any non-campaign fiscal year in the hospital’s history. We are deeply grateful to each of our individual, corporate, and foundation donors for their unwavering commitment to this hospital and to our patients. We hit the ground running in 2018, inspired and motivated by exciting changes at New England Baptist Hospital.

Recent NEBH Awards and Recognition. Guardian of Excellence Award®: For the tenth year in a row, NEBH has been awarded the prestigious Press Ganey Guardian of Excellence Award, the only hospital in New England to receive this honor for ten consecutive years. The national award recognizes exceptional hospitals that sustain the highest level of performance, ranking in the 95th percentile or greater in patient satisfaction for at least three consecutive years. ★★★★★ Five-Star Hospital for Quality, Centers for Medicare and Medicaid Services: The Centers for

Updated 2019 Completemedia

April 15, 2019. Dear Members of the Media, On behalf of the Boston Athletic Association, principal sponsor John Hancock, and all of our sponsors and supporters, we welcome you to the City of Boston and the 123rd running of the Boston Marathon. As the oldest annually contested marathon in the world, the Boston Marathon represents more than a 26.2-mile footrace. The roads from Hopkinton to Boston have served as a beacon for well over a century, bringing those from all backgrounds together to celebrate the pursuit of athletic excellence. From our early beginnings in 1897 through this year’s 123rd running, the Boston Marathon has been an annual tradition that is on full display every April near and far. We hope that all will be able to savor the spirit of the Boston Marathon, regardless of whether you are an athlete or volunteer, spectator or member of the media. Race week will surely not disappoint. The race towards Boylston Street will continue to showcase some of the world’s best athletes. Fronting the charge on Marathon Monday will be a quartet of defending champions who persevered through some of the harshest weather conditions in race history twelve months ago. Desiree Linden, the determined and resilient American who snapped a 33-year USA winless streak in the women’s open division, returns with hopes of keeping her crown. Linden has said that last year’s race was the culmination of more than a decade of trying to tame the beast of Boston – a race course that rewards those who are both patient and daring.

Attleboro YMCA 2019 Boston Marathon Team Application the Attleboro YMCA Is Pleased to Announce That We Have Received an Invitat

Attleboro YMCA 2019 Boston Marathon Team Application. The Attleboro YMCA is pleased to announce that we have received an invitational entry into the 2019 Boston Marathon. This means that the qualifying requirements are waived and the Y is able to provide a guaranteed entry into the race. Applications are for athletes who are a minimum age of 18. This year’s marathon will be held on April 15, 2019. The participant who is selected will run on behalf of the Attleboro YMCA and will raise funds to support the Attleboro Y’s Annual Campaign and camp scholarship program to ensure that every young person in our community has the opportunity to learn, grow and thrive. The runner will be required to raise a minimum of $7,500. The fundraising minimum of $7,500 should be considered a step to even higher fundraising goals. We seek team members who are truly interested in raising significant funds to help our cause. Runners who have already qualified for the race and have secured an entry may also join the Y team with a fundraising minimum of $1,500. Members of the Attleboro YMCA marathon team will be provided fundraising and training support. Additionally, members will receive a YMCA team running uniform. If you are interested in running for the 2019 Attleboro YMCA Marathon Team, please fill out the application form below and submit by January 4, 2019. Runners will be notified by January 9, 2019, whether or not their application has been accepted. ATTLEBORO YMCA 2019 BOSTON MARATHON TEAM APPLICATION: All fields of this application must be completed and submitted by January 4, 2019.

2019 Community Involvement Report

MIT Lincoln Laboratory 2019 Community Involvement Report. Outreach Office.

[Infographic: Lincoln Laboratory Outreach by the numbers. Panel labels include: donated to the American Heart Association; Military Fellows at Lincoln Laboratory; scientists & engineers volunteering; hours per year supporting STEM; care packages sent to troops; dollars given to the Jimmy Fund by Laboratory cyclists; dollars raised for Alzheimer Support Community since 2009; dollars raised by Laboratory employees in 2019; students seeing STEM demonstrations; students touring Lincoln Laboratory; charities receiving donations; K-12 STEM programs; money donated to Toys for Tots drive; summer internships. The figures attached to each label are not recoverable from the extracted text.]

Contents: A Message From the Director. 01 / Educational Outreach: K–12 Science, Technology, Engineering, and Mathematics (STEM) Outreach; Partnerships with MIT; Community Engagement. 02 / Educational Collaborations: University Student Programs; MIT Student Programs; Military Student Programs; Technical Staff Programs. 03 / Community Giving: Helping Those in Need; Helping Those Who Help Others; Supporting Local Communities.

A Message From the Director: Lincoln Laboratory has built a strong program of educational outreach activities that encourage students to explore science, technology, engineering, and mathematics (STEM).

Microsoft Outlook

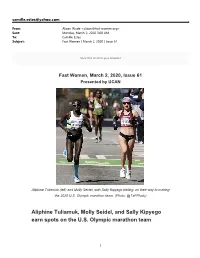

From: Alison Wade. Sent: Monday, March 2, 2020 3:00 AM. To: Camille Estes. Subject: Fast Women | March 2, 2020 | Issue 61. Fast Women, March 2, 2020, Issue 61. Presented by UCAN. Aliphine Tuliamuk (left) and Molly Seidel, with Sally Kipyego trailing, on their way to making the 2020 U.S. Olympic marathon team. (Photo: @TaFPhoto) Aliphine Tuliamuk, Molly Seidel, and Sally Kipyego earn spots on the U.S. Olympic marathon team: On Saturday in Atlanta, Aliphine Tuliamuk, Molly Seidel, and Sally Kipyego ran their way onto the Olympic Team by going 1–2–3 in the U.S. Olympic Marathon Trials. I thought Sarah Lorge Butler put it perfectly in this article for Runner’s World, when she called the top three “completely unexpected and utterly logical at the same time.” I still think Seidel making the team is slightly illogical, but I’ll explain later. There were so many excellent marathoners in contention, it was inevitable that some excellent runners would be left off the team. But for Jordan Hasay, Sara Hall, Emily Sisson, Molly Huddle, Des Linden, and Kellyn Taylor to all be left off, that’s the surprise. How it went down: The race went out slowly, relatively speaking, with most of the 444 starters right together through the first mile, which the leaders hit in 6:13. The biggest development in the first mile, as far as I know, is that Kaitlin Goodman went down and got trampled. She got back in the race, but her injuries ultimately took her out of the race shortly before halfway, and it’s frustrating that she never got to test her fitness on this stage.

Women's 10,000 Metres

Games of the XXXII Olympiad • Biographical Entry List • Women

Women’s 10,000 Metres. Entrants: 31. Event starts: August 7.

1163 NIYONSABA Francine (BDI). Age: 28y 93d. Born: 1993. SB: 31:08.51. PB: 31:08.51 -21 (NR). Twice World Indoor Champion & 2016 Olympic silver at 800m // 5000 pb: 14:54.38 -21. 800 pb: 1:55.47 -17. 400 pb: 53.48 -18. 1 African Champs 800 2012 (2018-2); 2 OLY 800 2016 (2012-5); 1 WIC 800 2016/2018; 2 WCH 800 2017. Did not compete in 2014 (after having been unbeaten in 2013) but made steady return in 2015. In 2021: 3 Andújar 5000; 4 Montreuil 5000; 6 Hengelo Ethiopian Olympic Trial 10,000; Here: 5000 dq//h2- (lane). She later complained that there was no protest on her behalf – “Where was the Burundian team leader when I needed him by my side?”

1346 GEZAHEGNE Kalkidan (BRN). Age: 30y 90d. Born: 1991. SB: 29:50.77. PB: 29:50.77 -21 (NR). Allegiance transferee from Ethiopia – acquired Bahraini citizenship on September 11, 2013 and therefore (under World Athletics rules) eligible to compete for Bahrain after three years. Former World Indoor Champion at 1500m (for Ethiopia, in 2010). 5000 pb: 14:52.92 -21. 3000 pb: 8:38.61 -09. 1500 pb: 4:00.97 -11. (with Ethiopia) 2 WJC 1500 2008; 2 African Junior 1500 2009; 1 WIC 1500 2010; 5 WCH 1500 2011 (2009-8); (with Bahrain) 14 WCH 5000 2017; 1 Asian Games 1500/5000 2018. When she won her world indoor title she did so after being tripped up in her heat.

Men's 100M Diamond Discipline - Heat 1 20.07.2019

Men's 100m Diamond Discipline - Heat 1, 20.07.2019. Start list, 100m. Time: 14:35

| Lane | Athlete | Nat | NR | PB | SB |
|---|---|---|---|---|---|
| 1 | Julian FORTE | JAM | 9.58 | 9.91 | 10.17 |
| 2 | Adam GEMILI | GBR | 9.87 | 9.97 | 10.11 |
| 3 | Yuki KOIKE | JPN | 9.97 | 10.04 | 10.04 |
| 4 | Arthur CISSÉ | CIV | 9.94 | 9.94 | 10.01 |
| 5 | Yohan BLAKE | JAM | 9.58 | 9.69 | 9.96 |
| 6 | Akani SIMBINE | RSA | 9.89 | 9.89 | 9.95 |
| 7 | Andrew ROBERTSON | GBR | 9.87 | 10.10 | 10.17 |
| 8 | Oliver BROMBY | GBR | 9.87 | 10.22 | 10.22 |
| 9 | Ojie EDOBURUN | GBR | 9.87 | 10.04 | 10.17 |

Records

| Record | Mark | Athlete | Nat | Venue | Date |
|---|---|---|---|---|---|
| WR | 9.58 | Usain BOLT | JAM | Berlin | 16.08.09 |
| AR | 9.86 | Francis OBIKWELU | POR | Athina | 22.08.04 |
| =AR | 9.86 | Jimmy VICAUT | FRA | Paris | 04.07.15 |
| =AR | 9.86 | Jimmy VICAUT | FRA | Montreuil-sous-Bois | 07.06.16 |
| NR | 9.87 | Linford CHRISTIE | GBR | Stuttgart | 15.08.93 |
| WJR | 9.97 | Trayvon BROMELL | USA | Eugene, OR | 13.06.14 |
| MR | 9.78 | Tyson GAY | USA | | 13.08.10 |
| DLR | 9.69 | Yohan BLAKE | JAM | Lausanne | 23.08.12 |
| SB | 9.81 | Christian COLEMAN | USA | Palo Alto, CA | 30.06.19 |

2019 World Outdoor list

| Mark | Wind | Athlete | Nat | Venue | Date |
|---|---|---|---|---|---|
| 9.81 | -0.1 | Christian COLEMAN | USA | Palo Alto, CA | 30.06.19 |
| 9.86 | +0.9 | Noah LYLES | USA | Shanghai | 18.05.19 |
| 9.86 | +0.8 | Divine ODUDURU | NGR | Austin, TX | 07.06.19 |

Road To The Final: 1 Christian COLEMAN (USA) 23

Medal Winners: 2018 - Berlin European Ch.