Module: Physical Layer

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Logical Link Control and Channel Scheduling for Multichannel Underwater Sensor Networks

ICST Transactions on Mobile Communications and Applications. Research Article. Logical Link Control and Channel Scheduling for Multichannel Underwater Sensor Networks. Jun Li∗, Mylène Toulgoat, Yifeng Zhou, and Louise Lamont. Communications Research Centre Canada, 3701 Carling Avenue, Ottawa, ON K2H 8S2, Canada.

Abstract: With recent developments in terrestrial wireless networks and advances in acoustic communications, multichannel technologies have been proposed to be used in underwater networks to increase data transmission rate over bandwidth-limited underwater channels. Due to high bit error rates in underwater networks, an efficient error control technique is critical in the logical link control (LLC) sublayer to establish reliable data communications over intrinsically unreliable underwater channels. In this paper, we propose a novel protocol stack architecture featuring cross-layer design of LLC sublayer and more efficient packet-to-channel scheduling for multichannel underwater sensor networks. In the proposed stack architecture, a selective-repeat automatic repeat request (SR-ARQ) based error control protocol is combined with a dynamic channel scheduling policy at the LLC sublayer. The dynamic channel scheduling policy uses the channel state information provided via cross-layer design. It is demonstrated that the proposed protocol stack architecture leads to more efficient transmission of multiple packets over parallel channels. Simulation studies are conducted to evaluate the packet delay performance of the proposed cross-layer protocol stack architecture with two different scheduling policies: the proposed dynamic channel scheduling and a static channel scheduling. Simulation results show that the dynamic channel scheduling used in the cross-layer protocol stack outperforms the static channel scheduling. It is observed that, when the dynamic channel scheduling is used, the number of parallel channels has only an insignificant impact on the average packet delay.

Physical Layer Overview

ELEC3030 (EL336) Computer Networks, S Chen

Physical Layer Overview
• The physical layer forms the basis of all networks, and we will first revisit some of the fundamental limits imposed on communication media by nature. Recall that a medium or physical channel has finite bandwidth and is noisy, and this imposes a limit on the information rate over the channel → this is a fundamental consideration when designing network speed or data rate. The type of medium determines the network technology → compare a wireless network with an optical network
[Figure: spectrum versus frequency f, showing the channel bandwidth H Hz spanning 0 to H]
• Transmission media can be guided or unguided, and we will have a brief review of a variety of transmission media
• Communication networks can be classified as switched and broadcast networks, and we will discuss a few examples
• The term “physical layer protocol” as such is not used, but we will attempt to draw some common design considerations and examine a few “physical layer standards”

Rate Limit
• A medium or channel is defined by its bandwidth H (Hz) and noise level, which is specified by the signal-to-noise ratio S/N (dB)
• The capability of a medium is determined by a physical quantity called channel capacity, defined as C = H log2(1 + S/N) bps
• Network speed is usually given as data or information rate in bps, and everyone wants a higher-speed network: for example, with a 10 Mbps network, you may ask yourself why not 10 Gbps?
• Given a data rate fd (bps), the actual transmission or baud rate fb (Hz) over the medium is often different from fd
• This is for
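The channel capacity formula quoted in this excerpt can be checked numerically. The following is a minimal Python sketch, not part of the original lecture notes; the function names and the example channel (3000 Hz bandwidth, 30 dB S/N) are assumptions chosen for illustration. Note that an S/N given in dB has to be converted to a linear power ratio before it enters the formula.

```python
import math


def db_to_linear(snr_db: float) -> float:
    """Convert a signal-to-noise ratio in dB to a linear power ratio."""
    return 10 ** (snr_db / 10)


def channel_capacity_bps(bandwidth_hz: float, snr_db: float) -> float:
    """Channel capacity C = H * log2(1 + S/N), with S/N as a linear ratio."""
    return bandwidth_hz * math.log2(1 + db_to_linear(snr_db))


if __name__ == "__main__":
    # Hypothetical example channel: H = 3000 Hz, S/N = 30 dB (linear ratio 1000).
    capacity = channel_capacity_bps(3000, 30)
    print(f"Capacity: {capacity / 1000:.1f} kbps")
```

For this assumed channel the result is roughly 29.9 kbps, which illustrates why a higher data rate requires more bandwidth, a better S/N, or both.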

Telematics Chapter 3: Physical Layer

Telematics, Chapter 3: Physical Layer. Univ.-Prof. Dr.-Ing. Jochen H. Schiller, Computer Systems and Telematics (CST), Institute of Computer Science, Freie Universität Berlin, http://cst.mi.fu-berlin.de

[Figure: a user watching a video clip and a server with video clips, each with a full stack from Application Layer to Physical Layer, connected through a node with Network, Data Link, and Physical Layers]

Contents
● Design Issues
● Theoretical Basis for Data Communication
● Analog Data and Digital Signals
● Data Encoding
● Transmission Media
● Guided Transmission Media
● Wireless Transmission (see Mobile Communications)
● The Last Mile Problem
● Multiplexing
● Integrated Services Digital Network (ISDN)
● Digital Subscriber Line (DSL)
● Mobile Telephone System

Design Issues
● Connection parameters
  ● mechanical
  ● electric and electronic
  ● functional and procedural
● More detailed
  ● Physical transmission medium (copper cable, optical fiber, radio, ...)
  ● Pin usage in network connectors
  ● Representation of raw bits (code, voltage, …)
  ● Data rate
  ● Control of bit flow:
    ● serial or parallel transmission of bits
    ● synchronous or asynchronous transmission
    ● simplex, half-duplex, or full-duplex transmission mode
[Figure: OSI Reference Model, from Application Layer down to Physical Layer]

Design Issues
[Figure: Source → Transmitter (NIC) → Transmission System → Receiver (NIC) → Destination]

Medium Access Control Layer

Telematics, Chapter 5: Medium Access Control Sublayer. Univ.-Prof. Dr.-Ing. Jochen H. Schiller, Computer Systems and Telematics (CST), Institute of Computer Science, Freie Universität Berlin, http://cst.mi.fu-berlin.de

[Figure: a user watching a video clip and a server with video clips, each with a full stack from Application Layer to Physical Layer, connected through a node with Network, Data Link, and Physical Layers]

Contents
● Design Issues
● Network Topologies
● The Channel Allocation Problem
● Multiple Access Protocols
● Ethernet
● IEEE 802.2 – Logical Link Control
● Token Bus (historical)
● Token Ring (historical)
● Fiber Distributed Data Interface
● Metropolitan Area Networks (MAN)
● Wide Area Networks (WAN)
  ● Frame Relay (historical)
  ● ATM
  ● SDH
● Network Infrastructure
  ● Virtual LANs
  ● Structured Cabling

Design Issues
● Two kinds of connections in networks
  ● Point-to-point connections
  ● Broadcast (Multi-access channel, Random access channel)
● In a network with broadcast connections
  ● Who gets the channel?
● Protocols used to determine who gets next access to the channel
  ● Medium Access Control (MAC) sublayer
[Figure: OSI Reference Model, from Application Layer down to Physical Layer]

Network Types for the Local Range
● LLC layer: uniform interface and same frame format to upper layers
● MAC layer: defines medium access ..

Physical Layer Compliance Testing for 1000BASE-T Ethernet

Physical Layer Compliance Testing for 1000BASE-T Ethernet. APPLICATION NOTE

Engineers designing or validating the 1000BASE-T Ethernet physical layer on their products need to perform a wide range of tests, quickly, reliably and efficiently. This application note describes the tests that ensure validation, the challenges faced while testing multi-level signals, and how oscilloscope-resident test software enables significant efficiency improvements with its wide range of tests, including return loss, fast validation cycles, and high reliability.

The Basics of 1000BASE-T Testing
Popularly known as Gigabit Ethernet, 1000BASE-T has been experiencing rapid growth. With only minimal changes to the legacy cable structure, it offers 100 times faster data rates than 10BASE-T Ethernet signals. Gigabit Ethernet, in combination with Fast Ethernet and switched Ethernet, offers a cost-effective alternative to slow networks. 1000BASE-T uses four signal pairs for full-duplex transmission and reception over CAT-5 balanced cabling.

1000BASE-T Physical Layer Compliance Standards
To ensure reliable information transmission over a network, industry standards specify requirements for the network’s physical layer. The IEEE 802.3 standard defines an array of compliance tests for the 1000BASE-T physical layer. These tests are performed by placing the device under test in test modes specified in the standard. While it is recommended to perform as many tests as possible, the following core tests are critical for compliance (test mode: test, IEEE 802.3 reference):
Test Mode-1: Peak, 40.6.1.2.1
Test Mode-1: Droop, 40.6.1.2.2
Test Mode-1: Template, 40.6.1.2.3
Test Mode-2: Master Jitter, 40.6.1.2.5
Test Mode-3: Slave Jitter
Distortion, 40.6.1.2.4

Structure of IEEE 802.11 Packets at Various Physical Layers

Appendix A: Structure of IEEE 802.11 Packets at Various Physical Layers

This appendix gives a detailed description of the structure of packets in IEEE 802.11 for the different physical layers.

1. Packet Format of Frequency Hopping Spread-spectrum Physical Layer (FHSS PHY)
The packet is made up of the following elements (Figure A.1):

1.1 Preamble
It depends on the physical layer and includes:
– Synch: a sequence of 80 bits alternating 0 and 1, used by the physical circuits to select the correct antenna (if more than one is in use) and to correct offsets of frequency and synchronization.
– SFD: the start frame delimiter consists of a pattern of 16 bits, 0000 1100 1011 1101, used to define the beginning of the frame.

1.2 Physical Layer Convergence Protocol Header
The Physical Layer Convergence Protocol (PLCP) header is always transmitted at 1 Mbps and carries some logical information used by the physical layer to decode the frame:
– PLCP PDU length word (PLW): represents the number of bytes in the packet, which the physical layer uses to detect the end of the packet correctly.
– PLCP signaling field (PSF): indicates the supported rate, from 1 to 4.5 Mbps in steps of 0.5 Mbps. Even though the standard gives the combinations of bits for PSF (see Table A.1) to support eight different rates, only the modulations for 1 and 2 Mbps have been defined.
– Control error field (HEC): a 16-bit (or 32-bit) CRC field for error detection. The generator polynomial used is G(x) = x^16 + x^12 + x^5 + 1.
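To make the generator polynomial concrete, here is a minimal Python sketch of a bitwise CRC using G(x) = x^16 + x^12 + x^5 + 1, which corresponds to the 16-bit value 0x1021 once the x^16 term is dropped. It is not taken from the appendix: the function name, the MSB-first bit order, and the default initial register value 0xFFFF are assumptions, and any final complement or bit ordering that a specific PHY applies to the HEC is not modeled here.

```python
def crc16_x16_x12_x5_1(data: bytes, init: int = 0xFFFF) -> int:
    """Bitwise CRC-16 with generator x^16 + x^12 + x^5 + 1 (0x1021).

    Assumptions: bytes are processed most significant bit first, the
    register starts at `init`, and no final XOR is applied.
    """
    crc = init
    for byte in data:
        crc ^= byte << 8  # bring the next byte into the top of the register
        for _ in range(8):
            if crc & 0x8000:
                # top bit set: shift out and subtract (XOR) the generator
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


if __name__ == "__main__":
    # Widely published check value for this parameter set and the
    # ASCII string "123456789".
    print(hex(crc16_x16_x12_x5_1(b"123456789")))
```

A receiver would recompute the CRC over the protected header fields and compare it with the received value; a mismatch signals a corrupted header.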

High-Level Data Link Control

ELEC3030 (EL336) Computer Networks, S Chen

High-Level Data Link Control
• This class of data link layer protocols includes High-level Data Link Control (HDLC), Link Access Procedure Balanced (LAPB) for X.25, Link Access Procedure for D-channel (LAPD) for ISDN, and Logical Link Control (LLC) for FDDI
• The frame format is (field sizes in bits): Flag (8), Address (8), Control (8), Data (variable), FCS (16), Flag (8). Note that the address and control fields can be extended to 16 bits, so that the sequence number is 7-bit
• Control field layout (bit positions 1 to 8):
  Information: 0, N(S), P/F, N(R)
  Supervisory: 1 0, S, P/F, N(R)
  Unnumbered: 1 1, M, P/F, M
  where N(S) = send sequence number, N(R) = receive sequence number, S = supervisory function bits, M = unnumbered function bits, P/F = poll/final bit
• Frame flag: 01111110, so bit stuffing is used
• Address: For multipoint operation, it is used to identify the terminal that transmits or receives the frame and, in a point-to-point link, it is used to distinguish Commands from Responses (2nd bit for C/R: 0/1). The address is now extended to 16 bits (1st bit indicates long/short, 16/8 bits)
• Checksum: FCS contains the remainder of a 16-bit CRC calculation of the frame. It may be extended to 32 bits, using a 32-bit CRC
• Control: Three types of frames: I, S and U frames. The old protocol uses a sliding window with a 3-bit sequence number, and the maximum window size is N = 7. The control field is now extended to 16 bits with a 7-bit sequence number

HDLC (continued)
• I-frames: carry user data.
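The excerpt notes that the flag 01111110 makes bit stuffing necessary. As a hedged illustration (not from the lecture notes), the sketch below applies the usual HDLC rule: the sender inserts a 0 after every run of five consecutive 1s in the frame body, and the receiver removes it, so the flag pattern can never appear inside the data. Bits are modeled as strings of '0' and '1' for readability; the function names are assumptions.

```python
def bit_stuff(bits: str) -> str:
    """Insert a '0' after every run of five consecutive '1' bits."""
    out = []
    ones = 0
    for b in bits:
        out.append(b)
        if b == "1":
            ones += 1
            if ones == 5:
                out.append("0")  # stuffed bit
                ones = 0
        else:
            ones = 0
    return "".join(out)


def bit_unstuff(bits: str) -> str:
    """Remove the '0' that follows every run of five consecutive '1' bits."""
    out = []
    ones = 0
    skip_next = False
    for b in bits:
        if skip_next:
            # this bit is the stuffed '0'; drop it and restart the count
            skip_next = False
            ones = 0
            continue
        out.append(b)
        if b == "1":
            ones += 1
            if ones == 5:
                skip_next = True
        else:
            ones = 0
    return "".join(out)


if __name__ == "__main__":
    payload = "01111110"  # data that happens to look like the flag
    stuffed = bit_stuff(payload)
    print(stuffed)
    print(bit_unstuff(stuffed) == payload)
```

The sketch assumes a well-formed stuffed stream on the receive side; a real receiver would also treat six consecutive 1s as a flag or an abort rather than as data.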

Data Link Layer Review

Data Link Layer Review. Advanced Computer Networks

Data Link Layer
• Provides a well-defined service interface to the network layer.
• Determines how the bits of the physical layer are grouped into frames (framing).
• Deals with transmission errors (CRC and ARQ).
• Regulates the flow of frames.
• Performs general link layer management.

[Figure (Leon-Garcia & Widjaja: Communication Networks): (a) packets enter the data link layers of A and B, which exchange frames over their physical layers; (b) physical layer (1), data link layer (2), and network layer (3) entities at A and B, connected by the medium]

[Figure (Leon-Garcia & Widjaja: Communication Networks): end-to-end versus hop-by-hop transfer across nodes 1 to 5, with Data and ACK/NAK exchanged at the transport layer end to end or on each hop]

Tanenbaum’s Data Link Layer Treatment
• Concerned with communication between two adjacent nodes in the subnet (node to node).
• Assumptions:
– The bits are delivered in the order sent.
– A rigid interface between the HOST and the node, so that the communications policy and the Host protocol (with OS effects) can evolve separately.
– He uses a simplified model.

Tanenbaum’s Data Link Layer Model
[Figure: Host A and Host B at Layer 4; Node 1 and Node 2 at Layer 2, exchanging a frame]
Assume the sending Host has an infinite supply of messages. A node constructs a frame from a single packet message.

An Introduction to Bluetooth Low Energy Robin Heydon, Senior Director, Technology Qualcomm Technologies International, Ltd

An Introduction to Bluetooth low energy. Robin Heydon, Senior Director, Technology, Qualcomm Technologies International, Ltd.

Agenda
• What is Bluetooth low energy
• What are the important features?
• How does it work?
• What are the next steps?
• What is it good for today?
• What else can you do with it?

What is low energy?
It is a new technology:
• blank sheet of paper design
• optimized for ultra low power
• different to Bluetooth classic

New Technology?
Yes:
• efficient discovery / connection procedures
• very short packets
• asymmetric design for peripherals
• client server architecture
No:
• reuse existing BR radio architecture
• reuse existing HCI logical and physical transports
• reuse existing L2CAP packets

Basic Concepts
Design for success:
• able to discover thousands of devices in local area
• unlimited number of slaves connected to a master
• unlimited number of masters
• state of the art encryption
• security including privacy / authentication / authorization
• class leading robustness, data integrity
• future proof

Basic Concepts
Everything has STATE:
• devices expose their state
• these are servers
Clients can use the state exposed on servers:
• read it – get current temperature
• write it – increase set point temperature for room
Servers can tell clients when state updates:
• notify it – temperature up to set point

Stack Architecture
• Apps: Applications
• Host: Generic Access Profile, Generic Attribute Profile, Attribute Protocol, Security Manager, Logical Link Control and Adaptation Protocol
• Host Controller Interface
• Controller: Link Layer, Direct Test Mode, Physical Layer

Physical

Chapter 6: Medium Access Control Layer

Chapter 6: Medium Access Control Layer

Chapter 6: Roadmap
• Overview
• Wireless MAC protocols
• Carrier Sense Multiple Access
• Multiple Access with Collision Avoidance (MACA) and MACAW
• MACA By Invitation
• IEEE 802.11
• IEEE 802.15.4 and ZigBee
• Characteristics of MAC Protocols in Sensor Networks
• Energy Efficiency
• Scalability
• Adaptability
• Low Latency and Predictability
• Reliability
• Contention-Free MAC Protocols
• Contention-Based MAC Protocols
• Hybrid MAC Protocols

Fundamentals of Wireless Sensor Networks: Theory and Practice. Waltenegus Dargie and Christian Poellabauer. © 2010 John Wiley & Sons Ltd.

Medium Access Control
• In most networks, multiple nodes share a communication medium for transmitting their data packets
• The medium access control (MAC) protocol is primarily responsible for regulating access to the shared medium
• The choice of MAC protocol has a direct bearing on the reliability and efficiency of network transmissions
• due to errors and interferences in wireless communications and to other challenges
• Energy efficiency also affects the design of the MAC protocol
• trade energy efficiency for increased latency or a reduction in throughput or fairness

Overview
• Responsibilities of MAC layer include:
• decide when a node accesses a shared medium
• resolve any potential conflicts between competing nodes
• correct communication errors occurring at the physical layer
• perform other activities such as framing, addressing, and flow control
• Second layer of the OSI reference model (data link layer) or the IEEE 802 reference model (which divides data link layer into logical link control and medium access control layer)

Ethernet Explained

Technical Note TN_157: Ethernet Explained. Version 1.0. Issue Date: 2015-03-23. Document Reference No.: FT_001105. Clearance No.: FTDI# 442

The FTDI FT900 32-bit MCU series provides for high data rate, computationally intensive data transfers. One of the interfaces used for this high-speed communication is Ethernet. This application note discusses some of the key features of an Ethernet link and how the FT900 assists in establishing the link.

Use of FTDI devices in life support and/or safety applications is entirely at the user’s risk, and the user agrees to defend, indemnify and hold FTDI harmless from any and all damages, claims, suits or expense resulting from such use.

Future Technology Devices International Limited (FTDI), Unit 1, 2 Seaward Place, Glasgow G41 1HH, United Kingdom. Tel.: +44 (0) 141 429 2777. Fax: +44 (0) 141 429 2758. Web Site: http://ftdichip.com. Copyright © 2015 Future Technology Devices International Limited

Table of Contents
1 Introduction
1.1 Scope
2 What is Ethernet?
2.1 Speeds

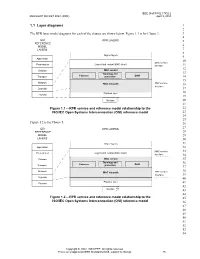

Layer Diagrams: The RPR Layer Model Diagrams for Each of the Clauses Are Shown Below

IEEE Draft P802.17/D2.2, RESILIENT PACKET RING (RPR), April 9, 2003

1.1 Layer diagrams

The RPR layer model diagrams for each of the clauses are shown below. Figure 1.1 is for Clause 1.

[Figure 1.1: RPR service and reference model relationship to the ISO/IEC Open Systems Interconnection (OSI) reference model. OSI reference model layers: Application, Presentation, Session, Transport, Network, Data link, Physical. RPR layers: Higher layers; Logical link control (MAC client); MAC control (Fairness, Topology and protection, OAM); MAC datapath; Physical layer; Medium. Interfaces: MAC service interface, PHY service interface.]

Figure 1.2 is for Clause 5.

[Figure 1.2: RPR service and reference model relationship to the ISO/IEC Open Systems Interconnection (OSI) reference model. OSI reference model layers: Application, Presentation, Session, Transport, Network, Data link, Physical. RPR layers: Higher layers; Logical link control (MAC client); MAC control (Fairness, Topology and protection, OAM); MAC datapath; Physical layer; Medium. Interfaces: MAC service interface, PHY service interface.]

Copyright © 2002, 2003 IEEE. All rights reserved. This is an unapproved IEEE Standards Draft, subject to change.

Figure 1.3 is for Clause 6.

[Figure 1.3: MAC datapath sublayer relationship to the ISO/IEC Open Systems Interconnection (OSI) reference model. OSI reference model layers: Application, Presentation, Session, Transport, Network, Data link, Physical. RPR layers: Higher layers; Logical link control (MAC client); MAC control (Fairness, Topology and protection, OAM); MAC datapath; Physical layer; Medium.]

Figure 1.4 is for Clause 7.