Integrating Web-Based Multimedia Technologies with Operator Multimedia Services Core

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Space Application Development Board

The Space Application Development Board (SADB-C6727B) enables developers to implement systems using standard and custom algorithms on processor hardware similar to what would be deployed for space in a fully radiation-hardened (rad-hard) design. The SADB-C6727B is designed to resemble a space-qualified rad-hard system built around the TI SMV320C6727B-SP DSP and high-rel memories (SRAM, SDRAM, NOR FLASH) from Cobham. The VOCAL SADB-C6727B development board does not use these high-reliability space-qualified components but instead implements various common bus widths and memory types/speeds for algorithm development and performance evaluation.

VOCAL Technologies, Ltd., www.vocal.com, 520 Lee Entrance, Suite 202, Buffalo, New York 14228. Tel: (716) 688-4675, Fax: (716) 639-0713

Audio inputs are handled by a TI ADS1278 high-speed multichannel analog-to-digital converter (ADC), which has a variant qualified for space operation. Audio outputs may be generated from digital Pulse Density Modulation (PDM) or Pulse Width Modulation (PWM) signals, avoiding the need for space-qualified digital-to-analog converter (DAC) hardware or a traditional audio codec circuit. A commercial-grade AIC3106 audio codec is provided for algorithm development and for comparing audio performance against PDM/PWM-generated audio signals. Digital microphones (PDM or I2S formats) can be sampled by the processor directly or by the AIC3106 audio codec (PDM format only). Digital audio inputs/outputs can also be connected to other processors (using the McASP signals directly). Both SRAM and SDRAM memories are supported via configuration resistors, so algorithms can be developed and benchmarked using either 16-bit or 32-bit wide busses (also selected via configuration resistors).
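The board description mentions generating audio output as a PDM bitstream rather than through a DAC. As a rough illustration of what PDM means, the sketch below uses a textbook first-order delta-sigma modulator. It is not VOCAL's implementation and is not code for the C6727B; the function names `pdm_encode` and `ones_density` and the input values are assumptions made for this example.

```python
def pdm_encode(samples):
    """Encode samples in [-1.0, 1.0] as a list of 0/1 bits.

    Textbook first-order delta-sigma modulator (illustrative only):
    the integrator accumulates the error between the input and the
    previously emitted bit, so the density of 1s tracks the input level.
    """
    bits = []
    integrator = 0.0
    feedback = 0.0  # value of the previous output bit, mapped to -1.0 / +1.0
    for x in samples:
        integrator += x - feedback
        bit = 1 if integrator >= 0.0 else 0
        feedback = 1.0 if bit else -1.0
        bits.append(bit)
    return bits


def ones_density(bits):
    """Fraction of 1s in the bitstream; a low-pass filter would recover this."""
    return sum(bits) / len(bits)


# Hypothetical constant inputs, chosen only to show the density relationship.
for level in (-0.5, 0.0, 0.5):
    stream = pdm_encode([level] * 1000)
    print(f"input {level:+.1f} -> density of ones {ones_density(stream):.3f}")
```

For a constant input x, the density of ones settles near (x + 1) / 2, which is why a simple analog low-pass filter on the output pin can stand in for a DAC.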

Concatenation of Compression Codecs – the Need for Objective Evaluations

C.J. Dalton (UKIB)

In this article the Author considers, firstly, a hypothetical broadcast network in which compression equipments have replaced several existing functions – resulting in multiple-cascading. Secondly, he describes a similar network that has been optimized for compression technology. Picture-quality assessment methods – both conventional and new, subjective and objective – are discussed with the aim of providing background information. Some proposals are put forward for objective evaluation together with initial observations when concatenating (cascading) codecs of similar and different types.

1. Introduction

Bit-rate reduction (BRR) techniques – commonly referred to as compression – are now firmly established in the latest generation of broadcast television equipments.

…fied for still-picture applications in the printing industry and was adapted to motion video applications, by encoding on a picture-by-picture basis. Various proprietary versions of JPEG, with higher compression ratios, were introduced to reduce the storage requirements, and alternative systems based on Wavelets and Fractals have been implemented. Due to the availability of integrated hardware, motion JPEG is now widely used for off- and on-line editing, disc servers, slow-motion systems, etc., but there is little standardization and few interfaces at the compressed level. The MPEG-1 compression standard was aimed at progressively-scanned moving images and has been widely adopted for CD-ROM and similar applications; it has also found limited application for broadcast video. The MPEG-2 system is specifically targeted at the complete gamut of video systems; for standard-definition television, the MP@ML codec – with compression ratios of 20:1

Original language: English. Manuscript received 15/1/97.

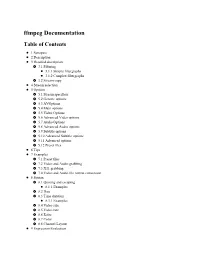

ffmpeg Documentation Table of Contents

1 Synopsis 2 Description 3 Detailed description 3.1 Filtering 3.1.1 Simple filtergraphs 3.1.2 Complex filtergraphs 3.2 Stream copy 4 Stream selection 5 Options 5.1 Stream specifiers 5.2 Generic options 5.3 AVOptions 5.4 Main options 5.5 Video Options 5.6 Advanced Video options 5.7 Audio Options 5.8 Advanced Audio options 5.9 Subtitle options 5.10 Advanced Subtitle options 5.11 Advanced options 5.12 Preset files 6 Tips 7 Examples 7.1 Preset files 7.2 Video and Audio grabbing 7.3 X11 grabbing 7.4 Video and Audio file format conversion 8 Syntax 8.1 Quoting and escaping 8.1.1 Examples 8.2 Date 8.3 Time duration 8.3.1 Examples 8.4 Video size 8.5 Video rate 8.6 Ratio 8.7 Color 8.8 Channel Layout 9 Expression Evaluation 10 OpenCL Options 11 Codec Options 12 Decoders 13 Video Decoders 13.1 rawvideo 13.1.1 Options 14 Audio Decoders 14.1 ac3 14.1.1 AC-3 Decoder Options 14.2 ffwavesynth 14.3 libcelt 14.4 libgsm 14.5 libilbc 14.5.1 Options 14.6 libopencore-amrnb 14.7 libopencore-amrwb 14.8 libopus 15 Subtitles Decoders 15.1 dvdsub 15.1.1 Options 15.2 libzvbi-teletext 15.2.1 Options 16 Encoders 17 Audio Encoders 17.1 aac 17.1.1 Options 17.2 ac3 and ac3_fixed 17.2.1 AC-3 Metadata 17.2.1.1 Metadata Control Options 17.2.1.2 Downmix Levels 17.2.1.3 Audio Production Information 17.2.1.4 Other Metadata Options 17.2.2 Extended Bitstream Information 17.2.2.1 Extended Bitstream Information - Part 1 17.2.2.2 Extended Bitstream Information - Part 2 17.2.3 Other AC-3 Encoding Options 17.2.4 Floating-Point-Only AC-3 Encoding

Surround Sound Processed by Opus Codec: a Perceptual Quality Assessment

28. Konferenz Elektronische Sprachsignalverarbeitung 2017, Saarbrücken

Franziska Trojahn, Martin Meszaros, Michael Maruschke and Oliver Jokisch, Hochschule für Telekommunikation Leipzig, Germany, [email protected]

Abstract: The article describes the first perceptual quality study of 5.1 surround sound that has been processed by the Opus codec standardised by the Internet Engineering Task Force (IETF). All listening sessions with up to five subjects took place in a slightly sound absorbing laboratory – simulating living room conditions. For the assessment we conducted a Degradation Category Rating (DCR) listening-opinion test according to ITU-T P.800 recommendation with stimuli for six channels at total bitrates between 96 kbit/s and 192 kbit/s as well as hidden references. A group of 27 naive listeners compared a total of 20 sound samples. The differences between uncompressed and degraded sound samples were rated on a five-point degradation category scale resulting in Degradation Mean Opinion Score (DMOS). The overall results show that the average quality correlates with the bitrates. The quality diverges for the individual test stimuli depending on the music characteristics. Under most circumstances, a bitrate of 128 kbit/s is sufficient to achieve acceptable quality.

1 Introduction

Nowadays, a high number of different speech and audio codecs are implemented in several kinds of multimedia applications, including audio/video entertainment, broadcasting and gaming. In recent years the demand for low delay and high quality audio applications, such as remote real-time jamming and cloud gaming, has been increasing. Therefore, current research objectives do not only include close to natural speech or audio quality, but also the requirements of low bitrates and a minimum latency.
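The abstract describes rating each degraded sample on a five-point degradation category scale and averaging the ratings into a DMOS. A minimal sketch of that arithmetic follows, assuming a plain arithmetic mean per stimulus. The scale wording follows the ITU-T P.800 DCR categories; the function name `dmos`, the stimulus labels and all rating values are hypothetical and are not data from the study.

```python
from statistics import mean

# Five-point degradation category scale used in ITU-T P.800 DCR tests.
DCR_SCALE = {
    5: "Degradation is inaudible",
    4: "Degradation is audible but not annoying",
    3: "Degradation is slightly annoying",
    2: "Degradation is annoying",
    1: "Degradation is very annoying",
}


def dmos(ratings):
    """Return the Degradation Mean Opinion Score for one stimulus."""
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r not in DCR_SCALE for r in ratings):
        raise ValueError("ratings must be integers from 1 to 5")
    return mean(ratings)


# Hypothetical ratings, invented for illustration only.
example_ratings = {
    "stimulus_a_96k": [3, 4, 3, 2, 4],
    "stimulus_a_192k": [5, 4, 5, 4, 5],
}

for name, ratings in example_ratings.items():
    print(f"{name}: DMOS = {dmos(ratings):.2f}")
```

A real evaluation would also report confidence intervals per stimulus and bitrate; the sketch only shows how individual category ratings collapse into one score.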

Influence of Speech Codecs Selection on Transcoding Steganography

Artur Janicki, Wojciech Mazurczyk, Krzysztof Szczypiorski, Warsaw University of Technology, Institute of Telecommunications, Warsaw, Poland, 00-665, Nowowiejska 15/19

Abstract. The typical approach to steganography is to compress the covert data in order to limit its size, which is reasonable in the context of a limited steganographic bandwidth. TranSteg (Transcoding Steganography) is a recently proposed IP telephony steganographic method that offers high steganographic bandwidth while retaining good voice quality. In TranSteg, compression of the overt data is used to make space for the steganogram. In this paper we focus on analyzing the influence of the selection of speech codecs on hidden transmission performance, that is, which codecs would be the most advantageous ones for TranSteg. Therefore, by considering the codecs which are currently most popular for IP telephony, we aim to find out which codecs should be chosen for transcoding to minimize the negative influence on voice quality while maximizing the obtained steganographic bandwidth.

Key words: IP telephony, network steganography, TranSteg, information hiding, speech coding

1. Introduction

Steganography is an ancient art that encompasses various information hiding techniques, whose aim is to embed a secret message (steganogram) into a carrier of this message. Steganographic methods are aimed at hiding the very existence of the communication, and therefore any third-party observers should remain unaware of the presence of the steganographic exchange. Steganographic carriers have evolved throughout the ages and are related to the evolution of the methods of communication between people. Thus, it is not surprising that currently telecommunication networks are a natural target for steganography.

TR 101 329-7 V1.1.1 (2000-11) Technical Report

ETSI TR 101 329-7 V1.1.1 (2000-11) Technical Report

TIPHON; Design Guide; Part 7: Design Guide for Elements of a TIPHON connection from an end-to-end speech transmission performance point of view

Reference: DTR/TIPHON-05011

Keywords: internet, IP, network, performance, protocol, quality, speech, voice

ETSI, 650 Route des Lucioles, F-06921 Sophia Antipolis Cedex - FRANCE. Tel.: +33 4 92 94 42 00, Fax: +33 4 93 65 47 16. Siret N° 348 623 562 00017 - NAF 742 C. Association à but non lucratif enregistrée à la Sous-Préfecture de Grasse (06) N° 7803/88

Important notice: Individual copies of the present document can be downloaded from: http://www.etsi.org The present document may be made available in more than one electronic version or in print. In any case of existing or perceived difference in contents between such versions, the reference version is the Portable Document Format (PDF). In case of dispute, the reference shall be the printing on ETSI printers of the PDF version kept on a specific network drive within ETSI Secretariat. Users of the present document should be aware that the document may be subject to revision or change of status. Information on the current status of this and other ETSI documents is available at http://www.etsi.org/tb/status/ If you find errors in the present document, send your comment to: [email protected]

Copyright Notification: No part may be reproduced except as authorized by written permission. The copyright and the foregoing restriction extend to reproduction in all media.

FFmpeg Codecs Documentation Table of Contents

1 Description 2 Codec Options 3 Decoders 4 Video Decoders 4.1 hevc 4.2 rawvideo 4.2.1 Options 5 Audio Decoders 5.1 ac3 5.1.1 AC-3 Decoder Options 5.2 flac 5.2.1 FLAC Decoder options 5.3 ffwavesynth 5.4 libcelt 5.5 libgsm 5.6 libilbc 5.6.1 Options 5.7 libopencore-amrnb 5.8 libopencore-amrwb 5.9 libopus 6 Subtitles Decoders 6.1 dvbsub 6.1.1 Options 6.2 dvdsub 6.2.1 Options 6.3 libzvbi-teletext 6.3.1 Options 7 Encoders 8 Audio Encoders 8.1 aac 8.1.1 Options 8.2 ac3 and ac3_fixed 8.2.1 AC-3 Metadata 8.2.1.1 Metadata Control Options 8.2.1.2 Downmix Levels 8.2.1.3 Audio Production Information 8.2.1.4 Other Metadata Options 8.2.2 Extended Bitstream Information 8.2.2.1 Extended Bitstream Information - Part 1 8.2.2.2 Extended Bitstream Information - Part 2 8.2.3 Other AC-3 Encoding Options 8.2.4 Floating-Point-Only AC-3 Encoding Options 8.3 flac 8.3.1 Options 8.4 opus 8.4.1 Options 8.5 libfdk_aac 8.5.1 Options 8.5.2 Examples 8.6 libmp3lame 8.6.1 Options 8.7 libopencore-amrnb 8.7.1 Options 8.8 libopus 8.8.1 Option Mapping 8.9 libshine 8.9.1 Options 8.10 libtwolame 8.10.1 Options 8.11 libvo-amrwbenc 8.11.1 Options 8.12 libvorbis 8.12.1 Options 8.13 libwavpack 8.13.1 Options 8.14 mjpeg 8.14.1 Options 8.15 wavpack 8.15.1 Options 8.15.1.1 Shared options 8.15.1.2 Private options 9 Video Encoders 9.1 Hap 9.1.1 Options 9.2 jpeg2000 9.2.1 Options 9.3 libkvazaar 9.3.1 Options 9.4 libopenh264 9.4.1 Options 9.5 libtheora 9.5.1 Options 9.5.2 Examples 9.6 libvpx 9.6.1 Options 9.7 libwebp 9.7.1 Pixel Format 9.7.2 Options 9.8 libx264, libx264rgb 9.8.1 Supported Pixel Formats 9.8.2 Options 9.9 libx265 9.9.1 Options 9.10 libxvid 9.10.1 Options 9.11 mpeg2 9.11.1 Options 9.12 png 9.12.1 Private options 9.13 ProRes 9.13.1 Private Options for prores-ks 9.13.2 Speed considerations 9.14 QSV encoders 9.15 snow 9.15.1 Options 9.16 vc2 9.16.1 Options 10 Subtitles Encoders 10.1 dvdsub 10.1.1 Options 11 See Also 12 Authors

1 Description

This document describes the codecs (decoders and encoders) provided by the libavcodec library.

TR 126 959 V15.0.0 (2018-07)

ETSI TR 126 959 V15.0.0 (2018-07) TECHNICAL REPORT

5G; Study on enhanced Voice over LTE (VoLTE) performance (3GPP TR 26.959 version 15.0.0 Release 15)

Reference: DTR/TSGS-0426959vf00

Keywords: 5G

ETSI, 650 Route des Lucioles, F-06921 Sophia Antipolis Cedex - FRANCE. Tel.: +33 4 92 94 42 00, Fax: +33 4 93 65 47 16. Siret N° 348 623 562 00017 - NAF 742 C. Association à but non lucratif enregistrée à la Sous-Préfecture de Grasse (06) N° 7803/88

Important notice: The present document can be downloaded from: http://www.etsi.org/standards-search The present document may be made available in electronic versions and/or in print. The content of any electronic and/or print versions of the present document shall not be modified without the prior written authorization of ETSI. In case of any existing or perceived difference in contents between such versions and/or in print, the only prevailing document is the print of the Portable Document Format (PDF) version kept on a specific network drive within ETSI Secretariat. Users of the present document should be aware that the document may be subject to revision or change of status. Information on the current status of this and other ETSI documents is available at https://portal.etsi.org/TB/ETSIDeliverableStatus.aspx If you find errors in the present document, please send your comment to one of the following services: https://portal.etsi.org/People/CommiteeSupportStaff.aspx

Copyright Notification: No part may be reproduced or utilized in any form or by any means, electronic or mechanical, including photocopying and microfilm except as authorized by written permission of ETSI.

Enhanced Voice Services (EVS) Codec

TECHNICAL PAPER, Fraunhofer Institute for Integrated Circuits IIS

Management of the institute: Prof. Dr.-Ing. Albert Heuberger (executive), Dr.-Ing. Bernhard Grill. Am Wolfsmantel 33, 91058 Erlangen, www.iis.fraunhofer.de. Contact: Matthias Rose, Phone +49 9131 776-6175, [email protected]. Contact USA: Fraunhofer USA, Inc., Digital Media Technologies*, Phone +1 408 573 9900, [email protected]

Until now, telephone services have generally failed to offer a high-quality audio experience due to limitations such as very low audio bandwidth and poor performance on non-speech signals. However, recent developments in speech and audio coding now promise a significant quality boost in conversational services, providing the full audio bandwidth for a more natural experience, improved speech intelligibility and listening comfort.

The recently standardized Enhanced Voice Service (EVS) codec is the first 3GPP communication codec providing super-wideband (SWB) audio bandwidth for improved speech quality already at 9.6 kbps. At the same time, the codec’s performance on other signals, like music or mixed content, is comparable to modern audio codecs. The key technology of the codec is a flexible switching scheme between specialized coding modes for speech and music signals. The codec was jointly developed by the following companies, representing operators, terminal, infrastructure and chipset vendors, as well as leading speech and audio coding experts: Ericsson, Fraunhofer IIS, Huawei Technologies Co. Ltd, NOKIA Corporation, NTT, NTT DOCOMO INC., ORANGE, Panasonic Corporation, Qualcomm Incorporated, Samsung Electronics Co. Ltd, VoiceAge and ZTE Corporation.

The objective of this paper is to provide a brief overview of the landscape of communication systems with special focus on the EVS codec.

MiVoice Border Gateway Engineering Guidelines

January, 2017, Release 9.4

NOTICE

The information contained in this document is believed to be accurate in all respects but is not warranted by Mitel Networks™ Corporation (MITEL®). The information is subject to change without notice and should not be construed in any way as a commitment by Mitel or any of its affiliates or subsidiaries. Mitel and its affiliates and subsidiaries assume no responsibility for any errors or omissions in this document. Revisions of this document or new editions of it may be issued to incorporate such changes. No part of this document can be reproduced or transmitted in any form or by any means - electronic or mechanical - for any purpose without written permission from Mitel Networks Corporation.

Trademarks

The trademarks, service marks, logos and graphics (collectively "Trademarks") appearing on Mitel's Internet sites or in its publications are registered and unregistered trademarks of Mitel Networks Corporation (MNC) or its subsidiaries (collectively "Mitel") or others. Use of the Trademarks is prohibited without the express consent from Mitel. Please contact our legal department at [email protected] for additional information. For a list of the worldwide Mitel Networks Corporation registered trademarks, please refer to the website: http://www.mitel.com/trademarks. Product names mentioned in this document may be trademarks of their respective companies and are hereby acknowledged.

®,™ Trademark of Mitel Networks

A Case for SIP in JavaScript

Copyright © IEEE, 2013. This is the author's copy of a paper that appears in IEEE Communications Magazine. Please cite as follows: K. Singh and V. Krishnaswamy, "A case for SIP in JavaScript", IEEE Communications Magazine, Vol. 51, No. 4, April 2013.

Kundan Singh, IP Communications Department, Avaya Labs, Santa Clara, USA, [email protected]; Venkatesh Krishnaswamy, IP Communications Department, Avaya Labs, Basking Ridge, USA, [email protected]

Abstract: This paper presents the challenges and compares the alternatives to interoperate between the Session Initiation Protocol (SIP)-based systems and the emerging standards for the Web Real-Time Communication (WebRTC). We argue for an end-point and web-focused architecture, and present both sides of the SIP in JavaScript approach. Until WebRTC has ubiquitous cross-browser availability, we suggest a fall back strategy for web developers: detect and use HTML5 if available, otherwise fall back to a browser plugin.

Keywords: SIP; HTML5; WebRTC; Flash Player; web-based communication

I. INTRODUCTION

In the past, web developers have resorted to browser plugins such as Flash Player to do audio and video calls in the …

… applications independent of the specific SIP extensions supported in the vendor's gateway.

We observe that decoupled development across services, tools and applications promotes interoperability. Today, the web developers can build applications independent of a particular service (Internet service provider) or vendor tool (browser, web server). Unfortunately, many existing SIP systems are closely integrated and have overlap among two or more of these categories, e.g., access network provider (service) wants to control voice calling (application), or three party calling (application) depends on your telephony provider (service). The main motivation of the endpoint approach (also known as SIP in JavaScript) is to separate the applications (various SIP extensions and telephony features) from the specific tools (browsers or proxies) or VoIP service providers.

Towards a Flexible and Scalable Media Centric WebRTC

An Oracle White Paper, May 2014

Introduction

Web Real Time Communications (WebRTC) transforms any device with a browser into a voice, video, and screen sharing endpoint, without the need to download, install, and configure special applications or browser plug-ins. With the almost ubiquitous presence of browsers, WebRTC promises to broaden the range of communicating devices to include game consoles and set-top boxes in addition to laptops, smartphones, tablets, etc. WebRTC has been described as a transformative technology bridging the worlds of telephony and web. But what does this bridging really imply, and have the offerings from vendors fully realized the possibilities that WebRTC promises? In this paper we discuss the importance of WebRTC Service Enablers that help unlock the full potential of WebRTC and the architectures that these elements make possible in this new era of democratization.

New Elements in the WebRTC Network Architecture

While web-only communications mechanisms would require nothing more than web browsers and web servers, the usefulness of such web-only networks would be limited. Not only is there a need to interwork with existing SIP and IMS based networks, there is also a need to provide value added services. There are several products in the marketplace that attempt to offer this functionality, but most of the offerings are little more than basic gateways. Such basic gateways perform simple protocol conversions where messages are translated from one domain to another. Such a simplistic gateway would convert call signaling from JSON, XMPP, or SIP over WebSockets, to SIP, and might also provide interoperability between media formats.