Alternative Strategies for Mapping ACS Estimates and Error of Estimation

Total pages: 16

File type: PDF, size: 1020Kb

Recommended publications

Evaluation of Methods for Classifying Epidemiological Data on Choropleth Maps in Series

Cynthia A. Brewer* and Linda Pickle** (*Department of Geography, The Pennsylvania State University; **National Cancer Institute). Our research goal was to determine which choropleth classification methods are most suitable for epidemiological rate maps. We compared seven methods using responses by fifty-six subjects in a two-part experiment involving nine series of U.S. mortality maps. Subjects answered a wide range of general map-reading questions that involved individual maps and comparisons among maps in a series. The questions addressed varied scales of map-reading, from individual enumeration units, to regions, to whole-map distributions. Quantiles and minimum boundary error classification methods were best suited for these general choropleth map-reading tasks. Natural breaks (Jenks) and a hybrid version of equal-intervals classing formed a second grouping in the results, both producing responses less than 70 percent as accurate as for quantiles. Using matched legends across a series of maps (when possible) increased map-comparison accuracy by approximately 28 percent. The advantages of careful optimization procedures in choropleth classification seem to offer no benefit over the simpler quantile method for the general map-reading tasks tested in the reported experiment. Key Words: choropleth, classification, epidemiology, maps.

Choropleth mapping is well suited for presentation and exploration of mortality-rate data. Of the many options available, epidemiologists customarily use quantile-based classification in their mapping (see Table 5 in Walter and Birnie 1991). [...] a range of classification methods in anticipation of increased production of mortality maps at the NCHS, the National Cancer Institute (NCI), and other health agencies through desktop geographic information systems [...]

Cartography. The Definitive Guide to Making Maps, Sample Chapter

Cartograms. Cartograms offer a way of accounting for differences in population distribution by modifying the geography. Geography can easily get in the way of making a good thematic map. The advantage of a geographic map is that it gives us the greatest recognition of shapes we’re familiar with but the disadvantage is that the geographic size of the areas has no correlation to the quantitative data shown. The intent of most thematic maps is to provide the reader with a map from which comparisons can be made and so geography is almost always inappropriate. This fact alone creates problems for perception and cognition. Accounting for these problems might be addressed in many ways such as manipulating the data itself. Alternatively, instead of changing the data and maintaining the geography, you can retain the data values but modify the geography to create a cartogram. There are four general types of cartogram. They each distort geographical space and account for the disparities caused by unequal distribution of the population among areas of different sizes.

Consider the United States map in which states with larger populations will inevitably lead to larger numbers for most population-related variables. However, the more populous states are not necessarily the largest states in area, and so a map that shows population data in the geographical sense inevitably skews our perception of the distribution of that data because the geography becomes dominant. We end up with a misleading map because densely populated states are relatively small and vice versa. Cartograms will always give the map reader the correct proportion of the mapped data variable precisely because it modifies the geography to account for the problem. The term cartogramme can be traced to the [...]

Numbers on Thematic Maps: Helpful Simplicity or Too Raw to Be Useful for Map Reading?

International Journal of Geo-Information, Article. Jolanta Korycka-Skorupa and Izabela Małgorzata Gołębiowska, Department of Geoinformatics, Cartography and Remote Sensing, Faculty of Geography and Regional Studies, University of Warsaw, Krakowskie Przedmiescie 30, 00-927 Warsaw, Poland. Received: 29 May 2020; Accepted: 26 June 2020; Published: 28 June 2020. Abstract: As the development of small-scale thematic cartography continues, there is a growing interest in simple graphic solutions, e.g., in the form of numerical values presented on maps to replace or complement well-established quantitative cartographic methods of presentation. Numbers on maps are used as an independent form of data presentation or function as a supplement to the cartographic presentation, becoming a legend placed directly on the map. Despite the frequent use of numbers on maps, this relatively simple form of presentation has not been extensively empirically evaluated. This article presents the results of an empirical study aimed at comparing the usability of numbers on maps for the presentation of quantitative information to frequently used proportional symbols, for simple map-reading tasks. The study showed that the use of numbers on single-variable and two-variable maps results in a greater number of correct answers and also often an improved response time compared to the use of proportional symbols. Interestingly, the introduction of different sizes of numbers did not significantly affect their usability. Thus, it has been proven that, for some tasks, map users accept this bare-bones version of data presentation, often demonstrating a higher level of preference for it than for proportional symbols.

Beyond Choropleth Maps: A Review of Techniques to Visualize Quantitative Areal Geodata

INFOVIS READING GROUP WS 2015/16. Alsino Skowronnek, University of Applied Sciences Potsdam, Department of Design. Abstract: Modern digital technologies and the ubiquity of spatial data around us have recently led to an increased output and visibility of geovisualizations and digital maps. Maps and »map-like« visualizations are virtually everywhere. While areal thematic geodata has traditionally often been represented as choropleth maps, a multitude of alternative techniques exist that address the shortcomings of choropleths. Advances of these alternative techniques derive both from the traditional domains of geography and cartography, as well as from more recent disciplines such as information visualization or even non-academic domains such as data journalism. This paper will review traditional and recent visualization techniques for quantitative areal geodata beyond choropleths and evaluate their potential and limitations in a comparative manner. Index Terms: Choropleth Maps, Geovisualization, Cartograms, Grid Maps, Spatial Treemaps.

1 Introduction. People have been fascinated with maps and geospatial representations of the world as long as we can think. This is not only due to maps’ inherent potential for storytelling and identification with places, but also due to humans’ excellent spatio-cognitive abilities, which allow us to easily [...] choropleths exhibit a number of shortcomings (i.e. support of specific data types and certain visual inadequacies) in different situations. This paper will briefly review the literature on choropleth maps in the following part, before contrasting the technique with three alternative approaches, their main characteristics and implications for visualizing areal geodata in more general terms.

Simple Thematic Mapping

The Stata Journal (2004) 4, Number 4, pp. 361–378. Maurizio Pisati, Department of Sociology and Social Research, Università degli Studi di Milano-Bicocca, Italy. Abstract. Thematic maps illustrate the spatial distribution of one or more variables of interest within a given geographic unit. In a sense, a thematic map is the spatial analyst’s equivalent to the scatterplot in nonspatial analysis. This paper presents the tmap package, a set of Stata programs designed to draw five kinds of thematic maps: choropleth, proportional symbol, deviation, dot, and label maps. The first three kinds of maps are intended to depict area data, the fourth is suitable for representing point data, and the fifth can be used to show data of both types. Keywords: gr0008, tmap choropleth, tmap propsymbol, tmap deviation, tmap dot, tmap label, thematic map, choropleth map, proportional symbol map, deviation map, dot map, label map.

1 Introduction. Thematic maps represent the spatial distribution of one or more variables of interest within a given geographical unit (Monmonier 1993; Kraak and Ormeling 1996). For example, a sociologist could use a choropleth map (a.k.a., shaded map) to show how the percent of families below the poverty line varies across the states or the provinces of a given country. In turn, a police officer could be interested in analyzing a dot map showing the locations of drug markets within a given city. As Bailey and Gatrell (1995) have put it, in a sense, a thematic map is the spatial analyst’s equivalent to the scatter plot in nonspatial analysis.

‘Intelligent’ Dasymetric Mapping and Its Comparison to Other Areal Interpolation Techniques

Jeremy Mennis, Department of Geography and Urban Studies, 1115 W. Berks St., 309 Gladfelter Hall, Temple University, Philadelphia, PA 19122, Phone: (215) 204-4748, Fax: (215) 204-7388. Torrin Hultgren, Department of Geography, UCB 360, University of Colorado, Boulder, CO 80309.

Abstract. In previous research we have described a dasymetric mapping technique that combines an analyst's subjective knowledge with a sampling approach to parameterize the reapportionment of data from a choropleth map to a dasymetric map. In the present research, we describe a comparison of the proposed dasymetric mapping technique with the conventional areal weighting and 'binary' dasymetric mapping techniques. The comparison is made using a case study dasymetric mapping of U.S. Census tract-level population data in the Front Range of Colorado using land cover data as ancillary data. Error is quantified using U.S. Census block-level population data. The proposed dasymetric mapping technique outperforms areal weighting and certain parameterizations of the proposed technique outperform 'binary' dasymetric mapping.

1 Introduction. A dasymetric map depicts a statistical surface as a set of enumerated zones, where the zone boundaries reflect the underlying variation in the surface and represent the steepest surface escarpments (Dent, 1999). Dasymetric mapping has its roots in the work of Russian cartographer Semenov Tian-Shansky (Bielecka, 2005) and American J.K. Wright (1936). Later significant contributions to dasymetric mapping were made by O'Cleary (1969) and, concurrent with more recent advances in GIS and environmental remote sensing, researchers in spatial analysis (Goodchild et al., 1993; Wu et al., 2005).
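The two conventional baselines named in this abstract, areal weighting and 'binary' dasymetric mapping, can be illustrated with a minimal sketch. This is not the authors' 'intelligent' technique or their code; the function names, the zone labels, and the toy numbers are all hypothetical, and real workflows operate on polygon overlays rather than a dictionary of areas.

```python
def areal_weighting(tract_pop, zone_areas):
    """Split a tract's population across its sub-zones in proportion to area."""
    total_area = sum(zone_areas.values())
    return {zone: tract_pop * area / total_area
            for zone, area in zone_areas.items()}


def binary_dasymetric(tract_pop, zone_areas, inhabited):
    """Give all population to sub-zones flagged as inhabited, by area share.

    `inhabited` maps each zone to True/False (e.g. derived from land cover).
    Falls back to plain areal weighting if no zone is flagged as inhabited.
    """
    inhabited_area = sum(a for z, a in zone_areas.items() if inhabited[z])
    if inhabited_area == 0:
        return areal_weighting(tract_pop, zone_areas)
    return {zone: (tract_pop * area / inhabited_area if inhabited[zone] else 0.0)
            for zone, area in zone_areas.items()}


# Hypothetical tract: 1000 people, three land-cover zones (areas in km^2).
areas = {"urban": 2.0, "forest": 6.0, "water": 2.0}
flags = {"urban": True, "forest": False, "water": False}

by_area = areal_weighting(1000, areas)
by_binary = binary_dasymetric(1000, areas, flags)
```

In this toy case areal weighting places most of the population in the forest zone simply because it is largest, while the binary method concentrates it in the urban zone. Both preserve the tract total.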

Dasymetric Mapping

Some geographical distributions are best mapped as ‘volumes’ that represent surfaces characterized by plateaus of relative uniformity separated from one another by relatively steep slopes or escarpments where there is a marked change in statistical value. There are two techniques for defining this stepped surface, the choroplethic and the dasymetric. The choroplethic technique requires only grouping of similar values, and detail is constrained by the boundaries of enumeration units, which rarely have to do with the variable being mapped. In choroplethic mapping, emphasis in the graphic statement is placed upon comparing relative magnitudes across the surface of the map. In contrast, the dasymetric method highlights areas of homogeneity and areas of sudden change and is produced by refining the values estimated by the choroplethic technique. Your task is to produce a dasymetric map of cropland in south central Ohio counties, using four variables to refine the enumerated data. Prepare this map and all related maps using ArcView GIS. It might be a good idea to keep a journal log while you work through this exercise, annotated with hardcopy maps if you prefer, with personal notes describing in your own words what each ArcView command you performed does, for later reference. Data for the initial distribution is given on the base map on page 4. Initial files for the assignment are in the Handouts folder in the usual Csiss folder on \\ubar\labs\. Copy the folder labeled dasy_ohio from the Handouts directory to your personal directory (for example to E:). Open the file dasy.apr in your directory with ArcView.

An Evaluation of Visualization Methods for Population Statistics Based on Choropleth Maps

Lonni Besançon, Matthew Cooper, Anders Ynnerman, and Frédéric Vernier. Fig. 1. Choropleth map (A) augmented with 3D extrusion (C), contiguous cartogram (D), and rectangular glyphs (E) at the same level of granularity and with 3D extrusion (B), Heatmap (F) and dot map (G) at a finer level of granularity. Abstract: We evaluate several augmentations to the choropleth map to convey additional information, including glyphs, 3D, cartograms, juxtaposed maps, and shading methods. While choropleth maps are a common method used to represent societal data, with multivariate data they can impede as much as improve understanding. In particular large, low population density regions often dominate the map and can mislead the viewer as to the message conveyed. Our results highlight the potential of 3D choropleth maps as well as the low accuracy of choropleth map tasks with multivariate data. We also introduce and evaluate popcharts, four techniques designed to show the density of population at a very fine scale on top of choropleth maps. All the data, results, and scripts are available from osf.io/8rxwg/ Index Terms: Choropleth maps, bivariate maps.

1 Introduction. A perennial issue with mapping statistical information onto choropleth maps is that they tend to overemphasize large, yet often sparsely populated, administrative areas because of their strong visual weight [1, 44, 64, 88, 94]. [...] said to be harder to understand [102]. It is thus still unclear which strategies should be adopted to create bivariate maps to properly convey population information in addition to the measured variable usually [...]

Mapping Crime: Understanding Hot Spots

U.S. Department of Justice, Office of Justice Programs, National Institute of Justice. Special Report, Aug. 05. www.ojp.usdoj.gov/nij. U.S. Department of Justice, Office of Justice Programs, 810 Seventh Street N.W., Washington, DC 20531. Alberto R. Gonzales, Attorney General; Regina B. Schofield, Assistant Attorney General; Sarah V. Hart, Director, National Institute of Justice. This and other publications and products of the National Institute of Justice can be found at: National Institute of Justice, www.ojp.usdoj.gov/nij; Office of Justice Programs, Partnerships for Safer Communities, www.ojp.usdoj.gov. John E. Eck, Spencer Chainey, James G. Cameron, Michael Leitner, and Ronald E. Wilson. NCJ 209393. This document is not intended to create, does not create, and may not be relied upon to create any rights, substantive or procedural, enforceable by law by any party in any matter civil or criminal. Findings and conclusions of the research reported here are those of the authors and do not necessarily reflect the official position or policies of the U.S. Department of Justice. The products, manufacturers, and organizations discussed in this document are presented for informational purposes only and do not constitute product approval or endorsement by the U.S. Department of Justice. The National Institute of Justice is a component of the Office of Justice Programs, which also includes the Bureau of Justice Assistance, the Bureau of Justice Statistics, the Office of Juvenile Justice and Delinquency Prevention, and the Office for Victims of Crime.

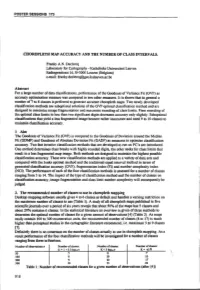

Choropleth Map Accuracy and the Number of Class Intervals

Poster Sessions. Franky A.N. Declercq, Laboratory for Cartography, Katholieke Universiteit Leuven, Redingenstraat 16, B-3000 Leuven (Belgium). Abstract: For a large number of data classifications, performance of the Goodness of Variance Fit (GVF) as accuracy optimization measure was compared to two other measures. It is shown that in general a number of 7 to 8 classes is preferred to generate accurate choropleth maps. Two newly developed classification methods are suboptimal solutions of the GVF optimal classification method and are designed to minimize image fragmentation and maximize rounding of class limits. Firm rounding of the optimal class limits to less than two significant digits decreases accuracy only slightly. Suboptimal classifications that yield a less fragmented image become rather inaccurate and need 9 to 10 classes to maintain classification accuracy.

1 Aim. The Goodness of Variance Fit (GVF) is compared to the Goodness of Deviation around the Median Fit (GDMF) and Goodness of Absolute Deviation Fit (GADF) as measures to optimize classification accuracy. Two fast iterative classification methods that are developed to run on PCs are introduced. One method determines class breaks with highly rounded digits, the other seeks class limits that result in a less fragmented map image. Both methods are designed to maintain the highest possible classification accuracy. These new classification methods are applied to a variety of data sets and compared with the Jenks optimal method and the traditional equal interval method in terms of generated classification accuracy (GVF), fragmentation index (FI) and number complexity index (NCI).
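For readers unfamiliar with the Goodness of Variance Fit, the sketch below uses the commonly cited definition, GVF = 1 − SDCM/SDAM, where SDAM is the sum of squared deviations from the overall mean and SDCM is the sum of squared deviations from each class mean. The excerpt itself does not give the formula, so this definition, the function name `gvf`, and the representation of classes as a list of upper bounds are assumptions made for illustration.

```python
def gvf(values, upper_bounds):
    """Goodness of Variance Fit for a classification of `values`.

    `upper_bounds` lists the inclusive upper limit of each class in
    ascending order; the last bound must be >= max(values).
    Returns a number in [0, 1]; 1 means no within-class variance.
    """
    overall_mean = sum(values) / len(values)
    # SDAM: squared deviations from the mean of the whole array.
    sdam = sum((v - overall_mean) ** 2 for v in values)
    if sdam == 0:
        return 1.0  # all values identical, any classification fits perfectly

    # Assign each value to the first class whose upper bound covers it.
    classes = [[] for _ in upper_bounds]
    for v in values:
        for i, bound in enumerate(upper_bounds):
            if v <= bound:
                classes[i].append(v)
                break
        else:
            raise ValueError(f"value {v} exceeds the last class bound")

    # SDCM: squared deviations from each class's own mean.
    sdcm = 0.0
    for members in classes:
        if members:
            class_mean = sum(members) / len(members)
            sdcm += sum((v - class_mean) ** 2 for v in members)
    return 1.0 - sdcm / sdam


data = [1, 2, 3, 10, 11, 12]
two_classes = gvf(data, [3, 12])   # classes {1,2,3} and {10,11,12}
one_class = gvf(data, [12])        # everything in a single class
```

With these toy values the two-class split gives a GVF close to 1, since each class is tight around its mean, while a single class gives 0. Adding classes can only keep GVF the same or raise it, which is why the abstract's question is how many classes are needed for an acceptable level of accuracy.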

Choropleth Maps on High Resolution CRTs: The Effects of Number of Classes and Hue on Communication

By: Patricia Gilmartin and Elisabeth Shelton. Gilmartin, P. and E. Shelton (1990) Choropleth Maps on High Resolution CRTs: The Effects of Number of Classes and Hue on Communication. Cartographica, v. 26(2): 40-52. Made available courtesy of University of Toronto Press: http://www.utpress.utoronto.ca/ ***Reprinted with permission. No further reproduction is authorized without written permission from University of Toronto Press. This version of the document is not the version of record. Figures and/or pictures may be missing from this format of the document.*** Abstract: The research reported here was designed to determine how quickly and accurately map readers viewing choropleth maps on a high-resolution computer monitor are able to identify to which class an areal unit on the map belongs, when the map has between four and eight classes and is produced in shades of either gray, green or magenta. As expected, accuracy rates decreased and reaction times increased as the number of classes on the map increased. Accuracy rates ranged from 91.9% for four-class maps to 68.2% for eight-class maps (averaged for all three colors used in the study). Hue also affected accuracy rates and reaction times, the best results being obtained with achromatic (gray-shaded) maps: 84.5% correct, averaged over all numbers of classes. Maps shaded with magenta proved to be the least satisfactory with an accuracy rate of 72.8%. The study provides cartographers with empirical guidelines regarding what level of map-reading accuracy might be expected for choropleth maps designed with a given number of map classes, in a specific hue, and displayed on a high-resolution graphics monitor.

Geography 281 Map Making with GIS Project Three: Viewing Data Spatially

This activity introduces three of the most common thematic maps: choropleth maps, dot density maps, and graduated symbol maps. You will learn how, when and why to create each type along with some of the pitfalls to avoid when making them. Most of the work will take place within the Symbology tab of the Layer properties form in the ArcMap Data View. In ArcMap Layout View, you will learn how to set relative paths and modify legend text. The first part of the activity guides you through the steps and decisions needed to create a series of maps illustrating the spatial relationship between city population, state population and area in the lower 48 states. The On Your Own part lets you apply what you've learned to create a new map that combines information from two previous maps. The data needed for proj3 is located in the \\Geogsrv\data\geog281\proj3 directory.

Project 3 files (description; feature type):
counties48 shapefile (base map of US counties; polygon)
us48_major_cities shapefile (Major U.S. cities; point)
us48_states shapefile (base map of lower 48 states; polygon)

Visual Variables. Maps use symbols to portray data. By selecting the right type of symbol you can create an intuitive map that is easy to read. Choosing the wrong symbol can mislead and confuse the map reader. Depending on the data type (qualitative or quantitative) and the type of feature (point, line, or polygon) you are mapping, you have many choices with regards to selecting symbol color (hue and value), size, shape, and texture.