Of (Block) Banded Toeplitz Matrices*

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Eigenvalues and Eigenvectors of Tridiagonal Matrices∗

Electronic Journal of Linear Algebra, ISSN 1081-3810. A publication of the International Linear Algebra Society. Volume 15, pp. 115-133, April 2006. http://math.technion.ac.il/iic/ela

EIGENVALUES AND EIGENVECTORS OF TRIDIAGONAL MATRICES. SAID KOUACHI

Abstract. This paper is a continuation of previous work by the present author, where explicit formulas for the eigenvalues associated with several tridiagonal matrices were given. In this paper the associated eigenvectors are calculated explicitly. As a consequence, a result obtained by Wen-Chyuan Yueh and independently by S. Kouachi, concerning the eigenvalues and in particular the corresponding eigenvectors of tridiagonal matrices, is generalized. Expressions for the eigenvectors are obtained that differ completely from those obtained by Yueh. The techniques used herein are based on the theory of recurrent sequences. The entries situated on each of the secondary diagonals are not necessarily equal, as was the case considered by Yueh.

Key words. Eigenvectors, Tridiagonal matrices. AMS subject classifications. 15A18.

1. Introduction. The subject of this paper is diagonalization of tridiagonal matrices. We generalize a result obtained in [5] concerning the eigenvalues and the corresponding eigenvectors of several tridiagonal matrices. We consider tridiagonal matrices of the form

$$A_n = \begin{pmatrix} -\alpha + b & c_1 & 0 & 0 & \cdots & 0 \\ a_1 & b & c_2 & 0 & \cdots & 0 \\ 0 & a_2 & b & \ddots & \ddots & \vdots \\ 0 & 0 & \ddots & \ddots & \ddots & 0 \\ \vdots & \ddots & \ddots & \ddots & \ddots & c_{n-1} \\ 0 & \cdots & \cdots & 0 & a_{n-1} & -\beta + b \end{pmatrix}, \tag{1}$$

where $\{a_j\}_{j=1}^{n-1}$ and $\{c_j\}_{j=1}^{n-1}$ are two finite subsequences of the sequences $\{a_j\}_{j=1}^{\infty}$ and $\{c_j\}_{j=1}^{\infty}$ of the field of complex numbers $\mathbb{C}$, respectively, and $\alpha$, $\beta$ and $b$ are complex numbers. We suppose that

$$a_j c_j = \begin{cases} d_1^2, & \text{if } j \text{ is odd}, \\ d_2^2, & \text{if } j \text{ is even}, \end{cases} \qquad j = 1, 2, \ldots, \tag{2}$$

where $d_1$ and $d_2$ are complex numbers.
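As an illustration of the matrix in (1) and the product condition in (2), the following is a minimal NumPy sketch. The function names `tridiagonal_matrix` and `satisfies_condition_2` are hypothetical, chosen only for this example, and the sketch assumes $n \ge 2$; it does not implement the paper's eigenvalue or eigenvector formulas.

```python
import numpy as np

def tridiagonal_matrix(a, c, b, alpha, beta):
    """Assemble the n x n matrix of (1) from subdiagonal a, superdiagonal c,
    constant diagonal b and the corner perturbations alpha and beta."""
    a = np.asarray(a, dtype=complex)
    c = np.asarray(c, dtype=complex)
    n = len(a) + 1
    A = np.diag(np.full(n, b, dtype=complex)) + np.diag(a, -1) + np.diag(c, 1)
    A[0, 0] -= alpha    # top-left entry becomes -alpha + b
    A[-1, -1] -= beta   # bottom-right entry becomes -beta + b
    return A

def satisfies_condition_2(a, c, d1, d2, tol=1e-12):
    """Check a_j * c_j == d1**2 for odd j and d2**2 for even j (j starts at 1)."""
    prod = np.asarray(a, dtype=complex) * np.asarray(c, dtype=complex)
    j = np.arange(1, len(prod) + 1)
    target = np.where(j % 2 == 1, d1 ** 2, d2 ** 2)
    return bool(np.allclose(prod, target, atol=tol))

# Example data (illustrative only): products a_j c_j are 4, 9, 4.
a = [1, 3, 2]
c = [4, 3, 2]
A = tridiagonal_matrix(a, c, b=5, alpha=1, beta=2)
ok = satisfies_condition_2(a, c, d1=2, d2=3)
```

Once the condition holds, `np.linalg.eigvals(A)` gives numerical eigenvalues that could be compared against any closed-form expression.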

Parametrizations of K-Nonnegative Matrices

Parametrizations of k-Nonnegative Matrices. Anna Brosowsky, Neeraja Kulkarni, Alex Mason, Joe Suk, Ewin Tang. October 2, 2017.

Abstract. Totally nonnegative (positive) matrices are matrices whose minors are all nonnegative (positive). We generalize the notion of total nonnegativity as follows. A k-nonnegative (resp. k-positive) matrix has all minors of size k or less nonnegative (resp. positive). We give a generating set for the semigroup of k-nonnegative matrices, as well as relations for certain special cases, i.e. the k = n − 1 and k = n − 2 unitriangular cases. In the above two cases, we find that the set of k-nonnegative matrices can be partitioned into cells, analogous to the Bruhat cells of totally nonnegative matrices, based on their factorizations into generators. We will show that these cells, like the Bruhat cells, are homeomorphic to open balls, and we prove some results about the topological structure of the closure of these cells, and in fact, in the latter case, the cells form a Bruhat-like CW complex. We also give a family of minimal k-positivity tests which form sub-cluster algebras of the total positivity test cluster algebra. We describe ways to jump between these tests, and give an alternate description of some tests as double wiring diagrams.

1 Introduction. A totally nonnegative (respectively totally positive) matrix is a matrix whose minors are all nonnegative (respectively positive). Total positivity and nonnegativity are well-studied phenomena and arise in areas such as planar networks, combinatorics, dynamics, statistics and probability. The study of total positivity and total nonnegativity admits many varied applications, some of which are explored in “Totally Nonnegative Matrices” by Fallat and Johnson [5].
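The definition of a k-nonnegative matrix can be checked directly by enumerating minors. The sketch below is a brute-force check, assuming a real input matrix and a small numerical tolerance. The name `is_k_nonnegative` is hypothetical. The cost grows combinatorially, so this is only practical for small matrices and is not one of the minimal tests described in the abstract.

```python
from itertools import combinations

import numpy as np

def is_k_nonnegative(M, k, tol=1e-12):
    """Return True if every minor of M of size at most k is >= 0 (up to tol)."""
    M = np.asarray(M, dtype=float)
    rows, cols = M.shape
    for size in range(1, min(k, rows, cols) + 1):
        for r in combinations(range(rows), size):
            for c in combinations(range(cols), size):
                # Minor = determinant of the submatrix on rows r, columns c.
                if np.linalg.det(M[np.ix_(r, c)]) < -tol:
                    return False
    return True

# [[1, 2], [2, 1]] has nonnegative entries but determinant -3.
M = [[1, 2], [2, 1]]
one_nonneg = is_k_nonnegative(M, 1)
two_nonneg = is_k_nonnegative(M, 2)
```

Here the matrix is 1-nonnegative but not 2-nonnegative, which shows that the property becomes more restrictive as k grows.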

New Algorithms in the Frobenius Matrix Algebra for Polynomial Root-Finding

City University of New York (CUNY), CUNY Academic Works, Computer Science Technical Reports, 2014. TR-2014006: New Algorithms in the Frobenius Matrix Algebra for Polynomial Root-Finding. Victor Y. Pan, Ai-Long Zheng. How does access to this work benefit you? Let us know! More information about this work at: https://academicworks.cuny.edu/gc_cs_tr/397 Discover additional works at: https://academicworks.cuny.edu This work is made publicly available by the City University of New York (CUNY). Contact: [email protected]

New Algorithms in the Frobenius Matrix Algebra for Polynomial Root-finding. Victor Y. Pan[1,2],[a] and Ai-Long Zheng[2],[b]. Supported by NSF Grant CCF-1116736 and PSC CUNY Award 64512–0042.

[1] Department of Mathematics and Computer Science, Lehman College of the City University of New York, Bronx, NY 10468 USA
[2] Ph.D. Programs in Mathematics and Computer Science, The Graduate Center of the City University of New York, New York, NY 10036 USA
[a] [email protected] http://comet.lehman.cuny.edu/vpan/
[b] [email protected]

Abstract. In 1996 Cardinal applied fast algorithms in Frobenius matrix algebra to complex root-finding for univariate polynomials, but he resorted to some numerically unsafe techniques of symbolic manipulation with polynomials at the final stages of his algorithms. We extend his work to complete the computations by operating with matrices at the final stage as well and also to adjust them to real polynomial root-finding. Our analysis and experiments show efficiency of the resulting algorithms. 2000 Math.

Graph Equivalence Classes for Spectral Projector-Based Graph Fourier Transforms

Graph Equivalence Classes for Spectral Projector-Based Graph Fourier Transforms. Joya A. Deri, Member, IEEE, and José M. F. Moura, Fellow, IEEE.

Abstract: We define and discuss the utility of two equivalence graph classes over which a spectral projector-based graph Fourier transform is equivalent: isomorphic equivalence classes and Jordan equivalence classes. Isomorphic equivalence classes show that the transform is equivalent up to a permutation on the node labels. Jordan equivalence classes permit identical transforms over graphs of nonidentical topologies and allow a basis-invariant characterization of total variation orderings of the spectral components. Methods to exploit these classes to reduce computation time of the transform as well as limitations are discussed.

Index Terms: Jordan decomposition, generalized eigenspaces, directed graphs, graph equivalence classes, graph isomorphism, signal processing on graphs, networks.

I. INTRODUCTION

Consider a graph $G = G(A)$ with adjacency matrix $A \in \mathbb{C}^{N \times N}$ with $k \le N$ distinct eigenvalues and Jordan decomposition $A = VJV^{-1}$. The associated Jordan subspaces of $A$ are $J_{ij}$, $i = 1, \ldots, k$, $j = 1, \ldots, g_i$, where $g_i$ is the geometric multiplicity of eigenvalue $\lambda_i$, or the dimension of the kernel of $A - \lambda_i I$. The signal space $S$ can be uniquely decomposed by the Jordan subspaces (see [13], [14] and Section II). For a graph signal $s \in S$, the graph Fourier transform (GFT) of [12] is defined as

$$F : S \to \bigoplus_{i=1}^{k} \bigoplus_{j=1}^{g_i} J_{ij}, \qquad s \mapsto \left(\hat{s}_{11}, \ldots, \hat{s}_{1 g_1}, \ldots, \hat{s}_{k1}, \ldots, \hat{s}_{k g_k}\right), \tag{1}$$

where $\hat{s}_{ij}$ is the (oblique) projection of $s$ onto the Jordan subspace $J_{ij}$ parallel to $S \setminus J_{ij}$.

Polynomial Sequences Generated by Linear Recurrences

Innocent Ndikubwayo. Polynomial Sequences Generated by Linear Recurrences: Location and Reality of Zeros. ISBN 978-91-7911-462-6. Department of Mathematics. Doctoral Thesis in Mathematics at Stockholm University, Sweden 2021.

Academic dissertation for the Degree of Doctor of Philosophy in Mathematics at Stockholm University to be publicly defended on Friday 14 May 2021 at 15.00 in sal 14 (Gradängsalen), hus 5, Kräftriket, Roslagsvägen 101 and online via Zoom, public link is available at the department website.

Abstract. In this thesis, we study the problem of location of the zeros of individual polynomials in sequences of polynomials generated by linear recurrence relations. In paper I, we establish the necessary and sufficient conditions that guarantee hyperbolicity of all the polynomials generated by a three-term recurrence of length 2, whose coefficients are arbitrary real polynomials. These zeros are dense on the real intervals of an explicitly defined real semialgebraic curve. Paper II extends Paper I to three-term recurrences of length greater than 2. We prove that there always exist non-hyperbolic polynomial(s) in the generated sequence. We further show that with at most finitely many known exceptions, all the zeros of all the polynomials generated by the recurrence lie and are dense on an explicitly defined real semialgebraic curve which consists of real intervals and non-real segments. The boundary points of this curve form a subset of zero locus of the discriminant of the characteristic polynomial of the recurrence.

Subspace-Preserving Sparsification of Matrices with Minimal Perturbation

Subspace-preserving sparsification of matrices with minimal perturbation to the near null-space. Part I: Basics. Chetan Jhurani, Tech-X Corporation, 5621 Arapahoe Ave, Boulder, Colorado 80303, U.S.A. arXiv:1304.7049v1 [math.NA] 26 Apr 2013.

Abstract. This is the first of two papers to describe a matrix sparsification algorithm that takes a general real or complex matrix as input and produces a sparse output matrix of the same size. The non-zero entries in the output are chosen to minimize changes to the singular values and singular vectors corresponding to the near null-space of the input. The output matrix is constrained to preserve left and right null-spaces exactly. The sparsity pattern of the output matrix is automatically determined or can be given as input. If the input matrix belongs to a common matrix subspace, we prove that the computed sparse matrix belongs to the same subspace. This works without imposing explicit constraints pertaining to the subspace. This property holds for the subspaces of Hermitian, complex-symmetric, Hamiltonian, circulant, centrosymmetric, and persymmetric matrices, and for each of the skew counterparts. Applications of our method include computation of reusable sparse preconditioning matrices for reliable and efficient solution of high-order finite element systems. The second paper in this series [1] describes our open-source implementation, and presents further technical details.

Keywords: Sparsification, Spectral equivalence, Matrix structure, Convex optimization, Moore-Penrose pseudoinverse. Email address: [email protected] (Chetan Jhurani). Preprint submitted to Computers and Mathematics with Applications, October 10, 2018.

1. Introduction. We present and analyze a matrix-valued optimization problem formulated to sparsify matrices while preserving the matrix null-spaces and certain special structural properties.

(Hessenberg) Eigenvalue-Eigenmatrix Relations∗

(HESSENBERG) EIGENVALUE-EIGENMATRIX RELATIONS. JENS-PETER M. ZEMKE

Abstract. Explicit relations between eigenvalues, eigenmatrix entries and matrix elements are derived. First, a general, theoretical result based on the Taylor expansion of the adjugate of $zI - A$ on the one hand and explicit knowledge of the Jordan decomposition on the other hand is proven. This result forms the basis for several, more practical and enlightening results tailored to non-derogatory, diagonalizable and normal matrices, respectively. Finally, inherent properties of (upper) Hessenberg, resp. tridiagonal matrix structure are utilized to construct computable relations between eigenvalues, eigenvector components, eigenvalues of principal submatrices and products of lower diagonal elements.

Key words. Algebraic eigenvalue problem, eigenvalue-eigenmatrix relations, Jordan normal form, adjugate, principal submatrices, Hessenberg matrices, eigenvector components. AMS subject classifications. 15A18 (primary), 15A24, 15A15, 15A57.

1. Introduction. Eigenvalues and eigenvectors are defined using the relations

$$Av = v\lambda \quad \text{and} \quad V^{-1}AV = J. \tag{1.1}$$

We speak of a partial eigenvalue problem, when for a given matrix $A \in \mathbb{C}^{n \times n}$ we seek scalar $\lambda \in \mathbb{C}$ and a corresponding nonzero vector $v \in \mathbb{C}^{n}$. The scalar $\lambda$ is called the eigenvalue and the corresponding vector $v$ is called the eigenvector. We speak of the full or algebraic eigenvalue problem, when for a given matrix $A \in \mathbb{C}^{n \times n}$ we seek its Jordan normal form $J \in \mathbb{C}^{n \times n}$ and a corresponding (not necessarily unique) eigenmatrix $V \in \mathbb{C}^{n \times n}$. Apart from these constitutional relations, for some classes of structured matrices several more intriguing relations between components of eigenvectors, matrix entries and eigenvalues are known. For example, consider the so-called Jacobi matrices.

Explicit Inverse of a Tridiagonal (P, R)–Toeplitz Matrix

Explicit inverse of a tridiagonal (p, r)-Toeplitz matrix. A.M. Encinas, M.J. Jiménez, Departament de Matemàtiques, Universitat Politècnica de Catalunya.

Abstract. Tridiagonal matrices appear in many contexts in pure and applied mathematics, so the study of the inverse of these matrices is of specific interest. In recent years the invertibility of nonsingular tridiagonal matrices has been widely investigated in different fields, not only from the theoretical point of view (either in the framework of linear algebra or in the ambit of numerical analysis), but also due to applications, for instance in the study of sound propagation problems or certain quantum oscillators. However, explicit inverses are known only in a few cases, in particular when the tridiagonal matrix has constant diagonals or the coefficients of these diagonals are subjected to some restrictions, like the tridiagonal p-Toeplitz matrices [7], whose three diagonals are formed by p-periodic sequences. The recent formulae for the inversion of tridiagonal p-Toeplitz matrices are based, more or less directly, on the solution of second order linear difference equations, although most of them use a cumbersome formulation that in fact does not take into account the periodicity of the coefficients. This contribution presents the explicit inverse of a tridiagonal (p, r)-Toeplitz matrix, whose diagonal coefficients are in a more general class of sequences than periodic ones, which we have called quasi-periodic sequences.

A tridiagonal matrix $A = (a_{ij})$ of order $n + 2$ is called (p, r)-Toeplitz if there exists $m \in \mathbb{N}_0$ such that $n + 2 = mp$ and

$$a_{i+p,\,j+p} = r\,a_{ij}, \qquad i, j = 0, \ldots, (m-1)p.$$

Equivalently, $A$ is a (p, r)-Toeplitz matrix iff

$$a_{i+kp,\,j+kp} = r^{k} a_{ij}, \qquad i, j = 0, \ldots, p; \quad k = 0, \ldots, m-1.$$

We have developed a technique that reduces any linear second order difference equation with periodic or quasi-periodic coefficients to a difference equation of the same kind but with constant coefficients [3].
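The quasi-periodicity condition in this definition can be tested numerically. The following is a minimal sketch with a hypothetical function name, `is_pr_toeplitz`. It assumes 0-based indices and compares $a_{i+p,j+p}$ with $r\,a_{ij}$ for every index pair where both entries exist. It does not check that the matrix is tridiagonal and does not compute the inverse discussed in the abstract.

```python
import numpy as np

def is_pr_toeplitz(A, p, r, tol=1e-12):
    """Check a[i+p, j+p] == r * a[i, j] wherever both entries exist."""
    A = np.asarray(A, dtype=complex)
    n = A.shape[0]
    # The order of the matrix must be a multiple of the period p.
    if A.ndim != 2 or A.shape != (n, n) or p <= 0 or n % p != 0:
        return False
    # Shifting the matrix by p along the diagonal must rescale it by r.
    return bool(np.allclose(A[p:, p:], r * A[: n - p, : n - p], atol=tol))

# Illustrative 4 x 4 tridiagonal example with p = 2 and r = 2.
diag = [1, 3, 2, 6]
sup = [1, 2, 2]
sub = [5, 1, 10]
A = np.diag(diag) + np.diag(sup, 1) + np.diag(sub, -1)
result = is_pr_toeplitz(A, p=2, r=2)
```

With `r=1` the same check reduces to a test for ordinary p-periodic diagonals, that is, the p-Toeplitz case mentioned in the abstract.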

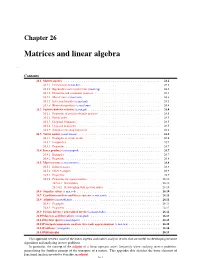

Matrices and Linear Algebra

Chapter 26. Matrices and linear algebra (ap,mat)

Contents
26.1 Matrix algebra
  26.1.1 Determinant (s,mat,det)
  26.1.2 Eigenvalues and eigenvectors (s,mat,eig)
  26.1.3 Hermitian and symmetric matrices
  26.1.4 Matrix trace (s,mat,trace)
  26.1.5 Inversion formulas (s,mat,mil)
  26.1.6 Kronecker products (s,mat,kron)
26.2 Positive-definite matrices (s,mat,pd)
  26.2.1 Properties of positive-definite matrices
  26.2.2 Partial order
  26.2.3 Diagonal dominance
  26.2.4 Diagonal majorizers
  26.2.5 Simultaneous diagonalization
26.3 Vector norms (s,mat,vnorm)
  26.3.1 Examples of vector norms
  26.3.2 Inequalities
  26.3.3 Properties
26.4 Inner products (s,mat,inprod)
  26.4.1 Examples
  26.4.2 Properties
26.5 Matrix norms (s,mat,mnorm)

Toeplitz and Toeplitz-Block-Toeplitz Matrices and Their Correlation with Syzygies of Polynomials

TOEPLITZ AND TOEPLITZ-BLOCK-TOEPLITZ MATRICES AND THEIR CORRELATION WITH SYZYGIES OF POLYNOMIALS. HOUSSAM KHALIL, BERNARD MOURRAIN, AND MICHELLE SCHATZMAN

Abstract. In this paper, we re-investigate the resolution of Toeplitz systems $T u = g$, from a new point of view, by correlating the solution of such problems with syzygies of polynomials or moving lines. We show an explicit connection between the generators of a Toeplitz matrix and the generators of the corresponding module of syzygies. We show that this module is generated by two elements of degree $n$ and the solution of $T u = g$ can be reinterpreted as the remainder of an explicit vector depending on $g$, by these two generators. This approach extends naturally to multivariate problems and we describe for Toeplitz-block-Toeplitz matrices, the structure of the corresponding generators.

Key words. Toeplitz matrix, rational interpolation, syzygie

1. Introduction. Structured matrices appear in various domains, such as scientific computing, signal processing, ... They usually express, in a linearized way, a problem which depends on fewer parameters than the number of entries of the corresponding matrix. An important area of research is devoted to the development of methods for the treatment of such matrices, which depend on the actual parameters involved in these matrices. Among well-known structured matrices, Toeplitz and Hankel structures have been intensively studied [5, 6]. Nearly optimal algorithms are known for the multiplication or the resolution of linear systems, for such structure. Namely, if $A$ is a Toeplitz matrix of size $n$, multiplying it by a vector or solving a linear system with $A$ requires $\tilde{O}(n)$ arithmetic operations (where $\tilde{O}(n) = O(n \log^{c}(n))$ for some $c > 0$) [2, 12].
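The nearly linear cost quoted for Toeplitz matrix-vector multiplication can be illustrated with the standard circulant-embedding technique. This is a swapped-in textbook method, not the syzygy-based approach of the paper. The sketch embeds the $n \times n$ Toeplitz matrix in a $2n \times 2n$ circulant matrix and multiplies with FFTs in $O(n \log n)$ operations. The function name `toeplitz_matvec` and the convention that the matrix is given by its first column `col` and first row `row` are assumptions of this example.

```python
import numpy as np

def toeplitz_matvec(col, row, x):
    """Multiply the Toeplitz matrix T (T[i, j] = col[i - j] for i >= j,
    row[j - i] for j > i) by x using a 2n x 2n circulant embedding."""
    col = np.asarray(col, dtype=complex)
    row = np.asarray(row, dtype=complex)
    x = np.asarray(x, dtype=complex)
    n = len(x)
    # First column of the circulant: col, one padding zero, then
    # row[n-1], ..., row[1] so that negative offsets wrap correctly.
    circ = np.concatenate([col, [0], row[:0:-1]])
    padded = np.concatenate([x, np.zeros(n, dtype=complex)])
    # A circulant matrix-vector product is a circular convolution.
    y = np.fft.ifft(np.fft.fft(circ) * np.fft.fft(padded))
    return y[:n]

# Small example, checked against the dense product.
col = [1, 2, 3]
row = [1, 4, 5]
x = [1, 0, -1]
fast = toeplitz_matvec(col, row, x)
dense = np.array([[1, 4, 5], [2, 1, 4], [3, 2, 1]]) @ np.array(x)
```

The result is returned as a complex array; for real inputs the imaginary parts are rounding noise and can be discarded with `.real`.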

A Logarithmic Minimization Property of the Unitary Polar Factor in The

A logarithmic minimization property of the unitary polar factor in the spectral norm and the Frobenius matrix norm. Patrizio Neff, Yuji Nakatsukasa, Andreas Fischle. October 30, 2018. arXiv:1302.3235v4 [math.CA] 2 Jan 2014.

Abstract. The unitary polar factor $Q = U_p$ in the polar decomposition of $Z = U_p H$ is the minimizer over unitary matrices $Q$ for both $\|\mathrm{Log}(Q^{*}Z)\|^{2}$ and its Hermitian part $\|\mathrm{sym}_{*}(\mathrm{Log}(Q^{*}Z))\|^{2}$ over both $\mathbb{R}$ and $\mathbb{C}$ for any given invertible matrix $Z \in \mathbb{C}^{n \times n}$ and any matrix logarithm Log, not necessarily the principal logarithm log. We prove this for the spectral matrix norm for any $n$ and for the Frobenius matrix norm in two and three dimensions. The result shows that the unitary polar factor is the nearest orthogonal matrix to $Z$ not only in the normwise sense, but also in a geodesic distance. The derivation is based on Bhatia’s generalization of Bernstein’s trace inequality for the matrix exponential and a new sum of squared logarithms inequality. Our result generalizes the fact for scalars that for any complex logarithm and for all $z \in \mathbb{C} \setminus \{0\}$

$$\min_{\vartheta \in (-\pi, \pi]} \left|\mathrm{Log}_{\mathbb{C}}(e^{-i\vartheta} z)\right|^{2} = \bigl|\log|z|\bigr|^{2}, \qquad \min_{\vartheta \in (-\pi, \pi]} \left|\mathrm{Re}\,\mathrm{Log}_{\mathbb{C}}(e^{-i\vartheta} z)\right|^{2} = \bigl|\log|z|\bigr|^{2}.$$

Keywords: unitary polar factor, matrix logarithm, matrix exponential, Hermitian part, minimization, unitarily invariant norm, polar decomposition, sum of squared logarithms inequality, optimality, matrix Lie-group, geodesic distance.

AMS 2010 subject classification: 15A16, 15A18, 15A24, 15A44, 15A45, 26Dxx

∗Corresponding author, Head of Lehrstuhl für Nichtlineare Analysis und Modellierung, Fakultät für Mathematik, Universität Duisburg-Essen, Campus Essen, Thea-Leymann Str.

A Note on Multilevel Toeplitz Matrices

Spec. Matrices 2019; 7:114–126. Research Article, Open Access. Lei Cao and Selcuk Koyuncu*. A note on multilevel Toeplitz matrices. https://doi.org/10.1515/spma-2019-0011 Received August 7, 2019; accepted September 12, 2019.

Abstract: Chien, Liu, Nakazato and Tam proved that all n × n classical Toeplitz matrices (one-level Toeplitz matrices) are unitarily similar to complex symmetric matrices via two types of unitary matrices, and the type of the unitary matrices depends only on the parity of n. In this paper we extend their result to multilevel Toeplitz matrices, showing that any multilevel Toeplitz matrix is unitarily similar to a complex symmetric matrix. We provide a method to construct the unitary matrices that uniformly turn any multilevel Toeplitz matrix into a complex symmetric matrix by taking tensor products of these two types of unitary matrices for one-level Toeplitz matrices according to the parity of each level of the multilevel Toeplitz matrices. In addition, we introduce a class of complex symmetric matrices that are unitarily similar to some p-level Toeplitz matrices.

Keywords: Multilevel Toeplitz matrix; Unitary similarity; Complex symmetric matrices

1 Introduction. Although every complex square matrix is similar to a complex symmetric matrix (see Theorem 4.4.24, [5]), it is known that not every n × n matrix is unitarily similar to a complex symmetric matrix when n ≥ 3 (see [4]). Some characterizations of matrices unitarily equivalent to a complex symmetric matrix (UECSM) were given by [1] and [3]. Very recently, a constructive proof that every Toeplitz matrix is unitarily similar to a complex symmetric matrix was given in [2], in which the unitary matrices turning all n × n Toeplitz matrices into complex symmetric matrices were given explicitly.