Signal Processing with Scilab

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Moving Average Filters

CHAPTER 15 Moving Average Filters (The Scientist and Engineer's Guide to Digital Signal Processing)

The moving average is the most common filter in DSP, mainly because it is the easiest digital filter to understand and use. In spite of its simplicity, the moving average filter is optimal for a common task: reducing random noise while retaining a sharp step response. This makes it the premier filter for time domain encoded signals. However, the moving average is the worst filter for frequency domain encoded signals, with little ability to separate one band of frequencies from another. Relatives of the moving average filter include the Gaussian, Blackman, and multiple-pass moving average. These have slightly better performance in the frequency domain, at the expense of increased computation time.

Implementation by Convolution

As the name implies, the moving average filter operates by averaging a number of points from the input signal to produce each point in the output signal. In equation form, this is written:

$$y[i] = \frac{1}{M} \sum_{j=0}^{M-1} x[i+j]$$

EQUATION 15-1. Equation of the moving average filter. In this equation, x[ ] is the input signal, y[ ] is the output signal, and M is the number of points used in the moving average. This equation only uses points on one side of the output sample being calculated.

Where x[ ] is the input signal, y[ ] is the output signal, and M is the number of points in the average. For example, in a 5 point moving average filter, point 80 in the output signal is given by:

$$y[80] = \frac{x[80] + x[81] + x[82] + x[83] + x[84]}{5}$$

As an alternative, the group of points from the input signal can be chosen symmetrically around the output point:

$$y[80] = \frac{x[78] + x[79] + x[80] + x[81] + x[82]}{5}$$

This corresponds to changing the summation in Eq. -
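The two averaging choices described in this excerpt (one-sided and symmetric) can be sketched in a few lines of Python. This is only an illustrative sketch: the function names and the ramp test signal are assumptions made for the example, not part of the excerpted chapter.

```python
def moving_average_one_sided(x, M):
    """y[i] = (1/M) * sum_{j=0}^{M-1} x[i+j], for i = 0 .. len(x) - M."""
    return [sum(x[i:i + M]) / M for i in range(len(x) - M + 1)]


def moving_average_symmetric(x, M):
    """Average of M points centred on i (M must be odd).

    Returns a dict {i: y[i]} so that output indices line up with input indices.
    """
    if M % 2 == 0:
        raise ValueError("M must be odd for a symmetric window")
    half = (M - 1) // 2
    return {i: sum(x[i - half:i + half + 1]) / M
            for i in range(half, len(x) - half)}


# Hypothetical test signal: a ramp x[n] = n, so each average is easy to check by hand.
x = [float(n) for n in range(100)]
one_sided = moving_average_one_sided(x, 5)
symmetric = moving_average_symmetric(x, 5)

print(one_sided[80])   # average of x[80] .. x[84]
print(symmetric[80])   # average of x[78] .. x[82]
```

On the ramp, the one-sided average at index 80 lands on the middle of its window (82), while the symmetric average stays at 80, which illustrates the shift between the two forms.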

Input and Output Directions and Hankel Singular Values CEE 629

Principal Input and Output Directions and Hankel Singular Values. CEE 629. System Identification, Duke University, Fall 2017

1 Continuous-time systems in the frequency domain

In the frequency domain, the input-output relationship of an LTI system (with r inputs, m outputs, and n internal states) is represented by the m-by-r rational frequency response function matrix equation

$$y(\omega) = H(\omega)\, u(\omega).$$

At a frequency $\omega$, a set of inputs with amplitudes $u(\omega)$ generates steady-state outputs with amplitudes $y(\omega)$. (These amplitude vectors are, in general, complex-valued, indicating magnitude and phase.) The singular value decomposition of the transfer function matrix is

$$H(\omega) = Y(\omega)\, \Sigma(\omega)\, U^*(\omega) \tag{1}$$

where:

- $U(\omega)$ is the r by r orthonormal matrix of input amplitude vectors, $U^* U = I$,
- $Y(\omega)$ is the m by m orthonormal matrix of output amplitude vectors, $Y^* Y = I$,
- $\Sigma(\omega)$ is the m by r diagonal matrix of singular values, $\Sigma(\omega) = \mathrm{diag}(\sigma_1(\omega), \sigma_2(\omega), \cdots, \sigma_n(\omega))$.

At any frequency $\omega$, the singular values are ordered as $\sigma_1(\omega) \ge \sigma_2(\omega) \ge \cdots \ge \sigma_n(\omega) \ge 0$.

Re-arranging the singular value decomposition of $H(\omega)$,

$$H(\omega)\, U(\omega) = Y(\omega)\, \Sigma(\omega) \quad \text{or} \quad H(\omega)\, u_i(\omega) = \sigma_i(\omega)\, y_i(\omega)$$

where $u_i(\omega)$ and $y_i(\omega)$ are the i-th columns of $U(\omega)$ and $Y(\omega)$. Since $\|u_i(\omega)\|_2 = 1$ and $\|y_i(\omega)\|_2 = 1$, the singular value $\sigma_i(\omega)$ represents the scaling from inputs with complex amplitudes $u_i(\omega)$ to outputs with amplitudes $y_i(\omega)$. -
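To make the idea of frequency-dependent singular values concrete, the sketch below evaluates them for a small example. Everything specific here is an assumption made for illustration: the 2-by-2 transfer matrix `H_of` is hypothetical, and instead of a general SVD routine the code uses the closed-form eigenvalues of the 2-by-2 Hermitian matrix $H^* H$, whose square roots are the singular values of $H$.

```python
import math


def singular_values_2x2(H):
    """Singular values (largest first) of a 2x2 complex matrix H.

    Uses the eigenvalues of G = H^* H, which for a 2x2 Hermitian matrix
    follow from its trace and determinant.
    """
    (a, b), (c, d) = H
    g11 = abs(a) ** 2 + abs(c) ** 2
    g22 = abs(b) ** 2 + abs(d) ** 2
    g12 = a.conjugate() * b + c.conjugate() * d
    trace = g11 + g22
    det = g11 * g22 - abs(g12) ** 2
    disc = math.sqrt(max(trace * trace - 4.0 * det, 0.0))
    sigma1 = math.sqrt((trace + disc) / 2.0)
    sigma2 = math.sqrt(max((trace - disc) / 2.0, 0.0))
    return sigma1, sigma2


def H_of(omega):
    """Hypothetical 2-input, 2-output frequency response, evaluated at s = j*omega."""
    s = 1j * omega
    return ((1.0 / (s + 1.0), 0.5 / (s + 2.0)),
            (0j, 2.0 / (s + 3.0)))


for omega in (0.1, 1.0, 10.0):
    s1, s2 = singular_values_2x2(H_of(omega))
    print(f"omega = {omega:5.1f}  sigma1 = {s1:.4f}  sigma2 = {s2:.4f}")
```

For larger systems a library SVD would be the natural choice; the closed form is used here only to keep the example dependency-free.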

Discrete-Time Fourier Transform 7

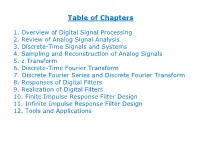

Table of Chapters

1. Overview of Digital Signal Processing
2. Review of Analog Signal Analysis
3. Discrete-Time Signals and Systems
4. Sampling and Reconstruction of Analog Signals
5. z Transform
6. Discrete-Time Fourier Transform
7. Discrete Fourier Series and Discrete Fourier Transform
8. Responses of Digital Filters
9. Realization of Digital Filters
10. Finite Impulse Response Filter Design
11. Infinite Impulse Response Filter Design
12. Tools and Applications

Chapter 1: Overview of Digital Signal Processing (H. C. So)

Chapter Intended Learning Outcomes:
(i) Understand basic terminology in digital signal processing
(ii) Differentiate digital signal processing and analog signal processing
(iii) Describe basic digital signal processing application areas

Signal: Anything that conveys information, e.g.,

- Speech
- Electrocardiogram (ECG)
- Radar pulse
- DNA sequence
- Stock price
- Code division multiple access (CDMA) signal
- Image
- Video

Fig. 1.1: Speech (vowel of "a"; horizontal axis: time (s))

Fig. 1.2: ECG (horizontal axis: time (s))

Fig. 1.3: Transmitted & received radar waveforms (panels: transmitted pulse, received pulse; horizontal axis: time)

Radar transceiver sends a 1-D sinusoidal pulse at time 0. It then receives echo reflected by an object at a range of . Reflected signal is noisy and has a time delay of which corresponds to round trip propagation time of radar pulse. Given the signal propagation speed, denoted by , is simply related to as: (1.1) As a result, the radar pulse contains the object range information. -

Lecture 3: Transfer Function and Dynamic Response Analysis

Basics of Automation and Control I. Lecture 3: Transfer function and dynamic response analysis

Paweł Malczyk, Division of Theory of Machines and Robots, Institute of Aeronautics and Applied Mechanics, Faculty of Power and Aeronautical Engineering, Warsaw University of Technology. October 17, 2019. © Paweł Malczyk.

Outline

1 Transfer function approach: SISO system; Definition; Poles and zeros; Transfer function for multivariable system; Properties
2 Dynamic response
3 Summary

SISO system

Fig. 1: Block diagram of a single input single output (SISO) system

Consider the continuous, linear time-invariant (LTI) system defined by linear constant coefficient ordinary differential equation (LCCODE):

$$a_n \frac{d^n y}{dt^n} + a_{n-1} \frac{d^{n-1} y}{dt^{n-1}} + \cdots + a_1 \dot{y} + a_0 y = b_m \frac{d^m u}{dt^m} + b_{m-1} \frac{d^{m-1} u}{dt^{m-1}} + \cdots + b_1 \dot{u} + b_0 u \tag{1}$$

with initial conditions $y(0), \dot{y}(0), \ldots, y^{(n-1)}(0)$, and $u(0), \ldots, u^{(m-1)}(0)$ given, $u(t)$ – input signal, $y(t)$ – output signal, $a_i$ – real constants for $i = 1, \cdots, n$, and $b_j$ – real constants for $j = 1, \cdots, m$.

How do I find the LCCODE (1)? . -
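As a hedged illustration of how the coefficients of an LCCODE relate to a transfer function: with zero initial conditions, the Laplace transform of such an equation gives the standard ratio $G(s) = (b_m s^m + \cdots + b_0)/(a_n s^n + \cdots + a_0)$. The sketch below evaluates that ratio for a hypothetical second-order example, $\ddot{y} + 3\dot{y} + 2y = u$; the example system and the helper names are assumptions for illustration, not taken from the lecture.

```python
import cmath


def polyval(coeffs, s):
    """Evaluate a polynomial at s (coefficients given highest power first, Horner's rule)."""
    acc = 0j
    for c in coeffs:
        acc = acc * s + c
    return acc


def transfer_function(b, a, s):
    """G(s) = B(s) / A(s) for numerator coefficients b and denominator coefficients a."""
    return polyval(b, s) / polyval(a, s)


def quadratic_roots(a2, a1, a0):
    """Roots of a2*s^2 + a1*s + a0 (the poles of a second-order system)."""
    d = cmath.sqrt(a1 * a1 - 4.0 * a2 * a0)
    return (-a1 + d) / (2.0 * a2), (-a1 - d) / (2.0 * a2)


# Hypothetical example: y'' + 3 y' + 2 y = u, i.e. G(s) = 1 / (s^2 + 3 s + 2)
b = [1.0]
a = [1.0, 3.0, 2.0]

poles = quadratic_roots(*a)
dc_gain = transfer_function(b, a, 0.0)
g_at_j1 = transfer_function(b, a, 1j)

print("poles:", poles)
print("DC gain:", dc_gain.real)
print("G(j1):", g_at_j1)
```

Both poles of this example lie in the left half-plane, so the step response settles to the DC gain $b_0 / a_0$.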

An Approximate Transfer Function Model for a Double-Pipe Counter-Flow Heat Exchanger

energies

Article

An Approximate Transfer Function Model for a Double-Pipe Counter-Flow Heat Exchanger

Krzysztof Bartecki

Division of Control Science and Engineering, Opole University of Technology, ul. Prószkowska 76, 45-758 Opole, Poland; [email protected]

Abstract: The transfer functions $G(s)$ for different types of heat exchangers obtained from their partial differential equations usually contain some irrational components which reflect quite well their spatio-temporal dynamic properties. However, such a relatively complex mathematical representation is often not suitable for various practical applications, and some kind of approximation of the original model would be more preferable. In this paper we discuss approximate rational transfer functions $\hat{G}(s)$ for a typical thick-walled double-pipe heat exchanger operating in the counter-flow mode. Using the semi-analytical method of lines, we transform the original partial differential equations into a set of ordinary differential equations representing $N$ spatial sections of the exchanger, where each $n$th section can be described by a simple rational transfer function matrix $G_n(s)$, $n = 1, 2, \ldots, N$. Their proper interconnection results in the overall approximation model expressed by a rational transfer function matrix $\hat{G}(s)$ of high order. As compared to the previously analyzed approximation model for the double-pipe parallel-flow heat exchanger which took the form of a simple, cascade interconnection of the sections, here we obtain a different connection structure which requires the use of the so-called linear fractional transformation with the Redheffer star product. Based on the resulting rational transfer function matrix $\hat{G}(s)$, the frequency and the steady-state responses of the approximate model are compared here with those obtained from the original irrational transfer

Citation: Bartecki, K. -

Finite Impulse Response (FIR) Digital Filters (II) Ideal Impulse Response Design Examples Yogananda Isukapalli

Finite Impulse Response (FIR) Digital Filters (II): Ideal Impulse Response Design Examples. Yogananda Isukapalli

FIR Filter Design Problem

Given $H(z)$ or $H(e^{j\omega})$, find filter coefficients $\{b_0, b_1, b_2, \ldots, b_{N-1}\}$, which are equal to $\{h_0, h_1, h_2, \ldots, h_{N-1}\}$ in the case of FIR filters.

[Figure: tapped-delay-line FIR structure with input $x[n]$, unit delays $z^{-1}$, tap weights $h_0, h_1, \ldots, h_{N-1}$, and output $y[n]$]

Consider a general (infinite impulse response) definition:

$$H(z) = \sum_{n=-\infty}^{\infty} h[n]\, z^{-n}$$

From complex variable theory, the inverse transform is:

$$h[n] = \frac{1}{2\pi j} \oint_C H(z)\, z^{n-1}\, dz$$

where $C$ is a counterclockwise closed contour in the region of convergence of $H(z)$ and encircling the origin of the z-plane.

Evaluating $H(z)$ on the unit circle ($z = e^{j\omega}$):

$$H(e^{j\omega}) = \sum_{n=-\infty}^{\infty} h[n]\, e^{-jn\omega}$$

$$h[n] = \frac{1}{2\pi} \int_{-\pi}^{\pi} H(e^{j\omega})\, e^{jn\omega}\, d\omega, \quad \text{where } dz = j e^{j\omega}\, d\omega$$

Design of an ideal low pass FIR digital filter

[Figure: ideal low-pass response $H(e^{j\omega})$ of height $K$ between $-\omega_c$ and $\omega_c$, plotted over $-2\pi \le \omega \le 2\pi$]

Find ideal low pass impulse response $\{h[n]\}$:

$$h_{LP}[n] = \frac{1}{2\pi} \int_{-\pi}^{\pi} H(e^{j\omega})\, e^{jn\omega}\, d\omega = \frac{1}{2\pi} \int_{-\omega_c}^{\omega_c} K e^{jn\omega}\, d\omega$$

Hence

$$h_{LP}[n] = \frac{K}{n\pi} \sin(n\omega_c), \quad n = 0, \pm 1, \pm 2, \ldots, \pm\infty$$

Let $K = 1$, $\omega_c = \pi/4$, $n = 0, \pm 1, \ldots, \pm 10$. The impulse response coefficients are

| n | h[n] |
|---|---|
| 0 | 0.25 |
| ±1 | 0.225 |
| ±2 | 0.159 |
| ±3 | 0.075 |
| ±4 | 0 |
| ±5 | -0.045 |
| ±6 | -0.053 |
| ±7 | -0.032 |
| ±8 | 0 |
| ±9 | 0.025 |
| ±10 | 0.032 |

Non Causal FIR Impulse Response

We can make it causal if we shift $h_{LP}[n]$ by 10 units to the right:

$$h_{LP}[n] = \frac{K}{(n-10)\pi} \sin((n-10)\omega_c)$$

n = 0, 1, 2, …. -
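The coefficient formula in this excerpt can be checked numerically with a short sketch. The function name `ideal_lowpass` is an assumption for illustration; the value at $n = 0$ uses the limit $K\omega_c/\pi$ of $K \sin(n\omega_c)/(n\pi)$, and the causal version is obtained by the 10-sample shift described in the excerpt.

```python
import math


def ideal_lowpass(n, wc, K=1.0):
    """h_LP[n] = K * sin(n * wc) / (n * pi), with the n = 0 limit K * wc / pi."""
    if n == 0:
        return K * wc / math.pi
    return K * math.sin(n * wc) / (n * math.pi)


wc = math.pi / 4                     # cutoff frequency used in the excerpt
h = {n: ideal_lowpass(n, wc) for n in range(-10, 11)}

# Shift right by 10 samples so the truncated response starts at n = 0 (causal).
h_causal = [h[n - 10] for n in range(21)]

for n in range(0, 11):
    print(f"n = +/-{n:2d}  h[n] = {h[n]: .3f}")
```

Because $h_{LP}[-n] = h_{LP}[n]$, the shifted 21-tap response is symmetric about its centre tap, which is the property that gives such a filter linear phase.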

Signals and Systems Lecture 8: Finite Impulse Response Filters

Signals and Systems Lecture 8: Finite Impulse Response Filters

Dr. Guillaume Ducard, Fall 2018, based on materials from: Prof. Dr. Raffaello D'Andrea, Institute for Dynamic Systems and Control, ETH Zurich, Switzerland

Outline

1 Finite Impulse Response Filters: Definition; General Properties
2 Moving Average (MA) Filter: MA filter as a simple low-pass filter; Fast MA filter implementation; Weighted Moving Average Filter
3 Non-Causal Moving Average Filter: Non-Causal Moving Average Filter; Non-Causal Weighted Moving Average Filter
4 Important Considerations: Phase is Important; Differentiation using FIR Filters; Frequency-domain observations; Higher derivatives

FIR filters: definition

The class of causal, LTI finite impulse response (FIR) filters can be captured by the difference equation

$$y[n] = \sum_{k=0}^{M-1} b_k\, u[n-k],$$

where

1 $M$ is the number of filter coefficients (also known as filter length),
2 $M - 1$ is often referred to as the filter order,
3 and $b_k \in \mathbb{R}$ are the filter coefficients that describe the dependence on current and previous inputs. -
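A direct, unoptimised reading of this difference equation is sketched below. The function name and the treatment of samples before $n = 0$ as zero are assumptions made for the example. Feeding in a unit impulse returns the coefficients $b_k$ themselves, followed by zeros, which is why the impulse response is "finite".

```python
def fir_filter(b, u):
    """Causal FIR filter: y[n] = sum_{k=0}^{M-1} b[k] * u[n-k].

    Input samples before n = 0 are assumed to be zero.
    """
    M = len(b)
    y = []
    for n in range(len(u)):
        acc = 0.0
        for k in range(M):
            if n - k >= 0:          # skip terms that would need u[n-k] with n-k < 0
                acc += b[k] * u[n - k]
        y.append(acc)
    return y


# A 4-tap moving average as an example set of coefficients (M = 4, order 3).
b = [0.25, 0.25, 0.25, 0.25]

impulse = [1.0] + [0.0] * 7
step = [1.0] * 8

impulse_response = fir_filter(b, impulse)
step_response = fir_filter(b, step)

print(impulse_response)
print(step_response)
```

The step response ramps up over the first $M$ samples and then stays at the sum of the coefficients.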

A Passive Synthesis for Time-Invariant Transfer Functions

IEEE TRANSACTIONS ON CIRCUIT THEORY, VOL. CT-17, NO. 3, AUGUST 1970, p. 333

A Passive Synthesis for Time-Invariant Transfer Functions

PATRICK DEWILDE, STUDENT MEMBER, IEEE, LEONARD M. SILVERMAN, MEMBER, IEEE, AND R. W. NEWCOMB, MEMBER, IEEE

Abstract: A passive transfer-function synthesis based upon state-space techniques is presented. The method rests upon the formation of a coupling admittance that, when synthesized by resistors and gyrators, is to be loaded by capacitors whose voltages form the state. By the use of a Lyapunov transformation, the coupling admittance is made positive real, while further transformations allow internal dissipation to be moved to the source or the load. A general class of configurations applicable to integrated circuits and using only grounded gyrators, resistors, and a minimal number of capacitors is obtained. The minimum number of resistors for the structure is also obtained. The technique illustrates how state-variable theory can be used to obtain results not yet available through other methods.

I. INTRODUCTION

In [1], a procedure for time-variable minimum-reactance passive synthesis of a "stable" impulse response matrix was given based on a new-state-equation technique for imbedding the impulse-response matrix in a passive driving-point impulse-response matrix.

[6], p. 307), in which a minimal-reactance scalar transfer-function synthesis can be obtained; it provides a circuit consideration of such concepts as stability, controllability, and observability. Background and the general theory and position of state-space techniques in network theory can be found in [7].

II. PRELIMINARIES

We first recall several facts pertinent to the intended synthesis.

Given a transfer function $n \times m$ matrix $T(p)$ that is rational with real coefficients (called real-rational) and that has $T(\infty) = D$, a finite constant matrix, there exist real constant matrices $A$, $B$, $C$, such that ($1_k$ is the $k \times k$ identity, $\mathcal{L}[\,\cdot\,]$ is the Laplace transform)

$$T(p) = D + C\,[p 1_k - A]^{-1} B, \qquad \mathcal{L}[y] = T(p)\, \mathcal{L}[u] \tag{1a}$$

Windowing Techniques, the Welch Method for Improvement of Power Spectrum Estimation

Computers, Materials & Continua, Tech Science Press. DOI:10.32604/cmc.2021.014752

Article

Windowing Techniques, the Welch Method for Improvement of Power Spectrum Estimation

Dah-Jing Jwo 1,*, Wei-Yeh Chang 1 and I-Hua Wu 2

1 Department of Communications, Navigation and Control Engineering, National Taiwan Ocean University, Keelung, 202-24, Taiwan
2 Innovative Navigation Technology Ltd., Kaohsiung, 801, Taiwan
* Corresponding Author: Dah-Jing Jwo. Email: [email protected]

Received: 01 October 2020; Accepted: 08 November 2020

Abstract: This paper revisits the characteristics of windowing techniques with various window functions involved, and successively investigates spectral leakage mitigation utilizing the Welch method. The discrete Fourier transform (DFT) is ubiquitous in digital signal processing (DSP) for the spectrum analysis and can be efficiently realized by the fast Fourier transform (FFT). The sampling signal will result in distortion and thus may cause unpredictable spectral leakage in discrete spectrum when the DFT is employed. Windowing is implemented by multiplying the input signal with a window function and windowing amplitude modulates the input signal so that the spectral leakage is evened out. Therefore, windowing processing reduces the amplitude of the samples at the beginning and end of the window. In addition to selecting appropriate window functions, a pretreatment method, such as the Welch method, is effective to mitigate the spectral leakage. Due to the noise caused by imperfect, finite data, the noise reduction from Welch's method is a desired treatment. The nonparametric Welch method is an improvement on the periodogram spectrum estimation method where the signal-to-noise ratio (SNR) is high and mitigates noise in the estimated power spectra in exchange for frequency resolution reduction. -
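The idea behind the Welch method described in this abstract (window each segment, compute its periodogram, then average over overlapping segments) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the Hann window, the 50% overlap, the segment length, the naive DFT, and the test signal (a sinusoid plus seeded Gaussian noise) are all choices made here for the example.

```python
import cmath
import math
import random


def hann(L):
    """Symmetric Hann window of length L."""
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * n / (L - 1)) for n in range(L)]


def dft(x):
    """Naive O(N^2) discrete Fourier transform (an FFT would be used in practice)."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * math.pi * k * n / N) for n in range(N))
            for k in range(N)]


def welch_psd(x, seg_len, overlap):
    """Average the windowed periodograms of overlapping segments of x."""
    w = hann(seg_len)
    U = sum(v * v for v in w)            # window energy, used for normalisation
    step = seg_len - overlap
    psd = [0.0] * seg_len
    count = 0
    for start in range(0, len(x) - seg_len + 1, step):
        segment = [x[start + n] * w[n] for n in range(seg_len)]
        X = dft(segment)
        for k in range(seg_len):
            psd[k] += abs(X[k]) ** 2 / U
        count += 1
    return [p / count for p in psd]


# Hypothetical test signal: a sinusoid centred on DFT bin 8 plus white noise.
rng = random.Random(0)
N, L = 256, 64
x = [math.sin(2.0 * math.pi * 8 * n / L) + 0.5 * rng.gauss(0.0, 1.0)
     for n in range(N)]

psd = welch_psd(x, seg_len=L, overlap=L // 2)
peak_bin = max(range(L // 2 + 1), key=lambda k: psd[k])
print("peak at bin", peak_bin)
```

Shorter segments give more averages and a smoother estimate, but each bin then covers a wider band of frequencies, which is the resolution trade-off the abstract mentions.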

Transformations for FIR and IIR Filters' Design

Symmetry

Article

Transformations for FIR and IIR Filters' Design

V. N. Stavrou 1,*, I. G. Tsoulos 2 and Nikos E. Mastorakis 1,3

1 Hellenic Naval Academy, Department of Computer Science, Military Institutions of University Education, 18539 Piraeus, Greece
2 Department of Informatics and Telecommunications, University of Ioannina, 47150 Kostaki Artas, Greece; [email protected]
3 Department of Industrial Engineering, Technical University of Sofia, Bulevard Sveti Kliment Ohridski 8, 1000 Sofia, Bulgaria; mastor@tu-sofia.bg
* Correspondence: [email protected]

Abstract: In this paper, the transfer functions related to one-dimensional (1-D) and two-dimensional (2-D) filters have been theoretically and numerically investigated. The finite impulse response (FIR), as well as the infinite impulse response (IIR) are the main 2-D filters which have been investigated. More specifically, methods like the Windows method, the bilinear transformation method, the design of 2-D filters from appropriate 1-D functions and the design of 2-D filters using optimization techniques have been presented.

Keywords: FIR filters; IIR filters; recursive filters; non-recursive filters; digital filters; constrained optimization; transfer functions

Citation: Stavrou, V.N.; Tsoulos, I.G.; Mastorakis, N.E. Transformations for FIR and IIR Filters' Design. Symmetry 2021, 13, 533. https://doi.org/10.3390/sym13040533

1. Introduction

There are two types of digital filters: the Finite Impulse Response (FIR) filters or Non-Recursive filters and the Infinite Impulse Response (IIR) filters or Recursive filters [1–5]. In the non-recursive filter structures the output depends only on the input, and in the recursive filter structures the output depends both on the input and on the previous outputs. -
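The distinction between non-recursive and recursive structures can be illustrated with a single 1-D difference-equation routine. The sign convention below, $y[n] = \sum_k b_k x[n-k] - \sum_{k \ge 1} a_k y[n-k]$ with $a_0 = 1$, is a common one but is an assumption here, as are the function name and the example coefficients.

```python
def difference_equation(b, a, x):
    """y[n] = sum_k b[k] x[n-k] - sum_{k>=1} a[k] y[n-k], with a[0] assumed to be 1.

    With a = [1.0] there is no feedback, so the filter is non-recursive (FIR);
    any further a[k] feeds previous outputs back in, making it recursive (IIR).
    Samples before n = 0 are taken as zero.
    """
    y = []
    for n in range(len(x)):
        acc = sum(b[k] * x[n - k] for k in range(len(b)) if n - k >= 0)
        acc -= sum(a[k] * y[n - k] for k in range(1, len(a)) if n - k >= 0)
        y.append(acc)
    return y


impulse = [1.0] + [0.0] * 5

# Non-recursive: y[n] = x[n] + 0.5 x[n-1]
fir_response = difference_equation([1.0, 0.5], [1.0], impulse)

# Recursive: y[n] = x[n] + 0.5 y[n-1]
iir_response = difference_equation([1.0], [1.0, -0.5], impulse)

print(fir_response)   # becomes exactly zero after the last coefficient
print(iir_response)   # decays geometrically and never reaches exactly zero
```

The feedback term is what lets a single stored coefficient produce an impulse response of unlimited length.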

System Identification and Power Spectrum Estimation

IEEE TRANSACTIONS ON ACOUSTICS, SPEECH, AND SIGNAL PROCESSING, VOL. ASSP-27, NO. 2, APRIL 1979, p. 182

Short-Time Fourier Analysis Techniques for FIR System Identification and Power Spectrum Estimation

LAWRENCE R. RABINER, FELLOW, IEEE, AND JONT B. ALLEN, MEMBER, IEEE

Abstract: A wide variety of methods have been proposed for system modeling and identification. To date, the most successful of these methods have been time domain procedures such as least squares analysis, or linear prediction (ARMA models). Although spectral techniques have been proposed for spectral estimation and system identification, the resulting spectral and system estimates have always been strongly affected by the analysis window (biased estimates), thereby reducing the potential applications of this class of techniques. In this paper we propose a novel short-time Fourier transform analysis technique in which the influences of the window on a spectral estimate can essentially be removed entirely (an unbiased estimator) by linearly combining biased estimates. As a result, section (FFT) lengths for analysis can be made as small as possible, thereby increasing the speed of the algorithm without sacrificing accuracy. The proposed algorithm has the important property that as the number of samples used in the estimate increases, the solution quickly approaches the least squares (theoretically optimum) solution.

List of symbols:

- $L$ = length of $w(n)$
- $N$ = length of DFT $F[\,\cdot\,]$
- $N'$ = number of data points
- $N_1$ = lower limit on sum
- $N_2$ = upper limit on sum
- $M$ = length of $h$
- $\hat{M}$ = estimate of $M$
- $h(n)$ = system's impulse response
- $\hat{h}(n)$ = estimate of $h(n)$
- $F[\,\cdot\,]$ = Fourier transform operator
- $F^{-1}[\,\cdot\,]$ = inverse Fourier transform
- $q_1$ = number of 0 diagonals below main -

State Space Models

MUS420: Introduction to Linear State Space Models

Julius O. Smith III ([email protected]), Center for Computer Research in Music and Acoustics (CCRMA), Department of Music, Stanford University, Stanford, California 94305. February 5, 2019

Outline

• State Space Models
• Linear State Space Formulation
• Markov Parameters (Impulse Response)
• Transfer Function
• Difference Equations to State Space Models
• Similarity Transformations
• Modal Representation (Diagonalization)
• Matlab Examples

State Space Models

Equations of motion for any physical system may be conveniently formulated in terms of its state $x(t)$:

[Figure: block diagram in which the input forces $u(t)$ drive the model $f_t$, whose output $\dot{x}(t)$ is integrated to give the state $x(t)$]

$$\dot{x}(t) = f_t[x(t), u(t)]$$

where

$x(t)$ = state of the system at time $t$
$u(t)$ = vector of external inputs (typically driving forces)
$f_t$ = general function mapping the current state $x(t)$ and inputs $u(t)$ to the state time-derivative $\dot{x}(t)$

• The function $f_t$ may be time-varying, in general
• This potentially nonlinear time-varying model is extremely general (but causal)
• Even the human brain can be modeled in this form

State-Space History

1. Classic phase-space in physics (Gibbs 1901). System state = point in position-momentum space
2. Digital computer (1950s)
3. Finite State Machines (Mealy and Moore, 1960s)
4. Finite Automata
5.

Key Property of State Vector

The key property of the state vector $x(t)$ in the state space formulation is that it completely determines the system at time $t$

• Future states depend only on the current state $x(t)$ and on any inputs $u(t)$ at time $t$ and beyond
• All past states and the entire input history are
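The key property of the state vector can be demonstrated with a small simulation. The sketch below integrates $\dot{x}(t) = f_t[x(t), u(t)]$ with a forward-Euler step, which is a simple numerical scheme chosen here for illustration rather than anything prescribed by the slides; the mass-spring-damper model, its parameter values, and the function names are likewise assumptions.

```python
def euler_simulate(f, x0, u, dt, n_start, n_stop):
    """Advance x' = f(t, x, u(t)) from step n_start to n_stop with forward Euler."""
    x = list(x0)
    for n in range(n_start, n_stop):
        t = n * dt
        dx = f(t, x, u(t))
        x = [xi + dt * dxi for xi, dxi in zip(x, dx)]
    return x


def mass_spring_damper(t, x, force, m=1.0, k=4.0, c=0.5):
    """Hypothetical model: state = [position, velocity], input = driving force."""
    position, velocity = x
    return [velocity, (force - c * velocity - k * position) / m]


def unit_step(t):
    """Constant unit driving force for t >= 0."""
    return 1.0 if t >= 0.0 else 0.0


dt = 0.01
full_run = euler_simulate(mass_spring_damper, [0.0, 0.0], unit_step, dt, 0, 1000)

# Stop part-way, keep only the state, and resume from it.
midpoint = euler_simulate(mass_spring_damper, [0.0, 0.0], unit_step, dt, 0, 400)
resumed = euler_simulate(mass_spring_damper, midpoint, unit_step, dt, 400, 1000)

print(full_run)
print(resumed)
```

The resumed run reproduces the uninterrupted one because the state at step 400, together with the later inputs, is all the information the update needs; nothing about the earlier trajectory has to be stored.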