Sprite Tracking in Two-Dimensional Video Games
Elizabeth M. Shen

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Fully Optimized: The (Post)human Art of Speedrunning

Item Type: Article
Authors: Hay, Jonathan
Citation: Hay, J. (2020). Fully Optimized: The (Post)human Art of Speedrunning. Journal of Posthuman Studies: Philosophy, Technology, Media, 4(1), 5-24.
Publisher: Penn State University Press
Journal: Journal of Posthuman Studies
Download date: 01/10/2021 15:57:06
Item License: https://creativecommons.org/licenses/by-nc-nd/4.0/
Link to Item: http://hdl.handle.net/10034/623585

Like their cognate forms of new media, the everyday ubiquity of video games in contemporary Western cultures is symptomatic of the always-already “(post)human” (Hayles 1999, 246) character of the mundane lifeworlds of those members of our species who live in such technologically saturated societies. This article therefore takes as its theoretical basis N. Katherine Hayles’ proposal that our species presently inhabits an intermediary stage between being human and posthuman; that we are currently (post)human, engaged in a process of constantly becoming posthuman. In the space of an entirely unremarkable hour, we might very conceivably interface with our mobile phone in order to access and interpret GPS data, stream a newly released album of music, phone a family member who is physically separated from us by many miles, pass time playing a clicker game, and then absentmindedly catch up on breaking news from across the globe. In this context, video games are merely one cultural practice through which we regularly interface with technology, and hence, are merely one constituent aspect of the consummate inundation of technologies into the everyday lives of (post)humans.

Ready Player One by Ernest Cline

Ready Player One by Ernest Cline Chapter 1 Everyone my age remembers where they were and what they were doing when they first heard about the contest. I was sitting in my hideout watching cartoons when the news bulletin broke in on my video feed, announcing that James Halliday had died during the night. I’d heard of Halliday, of course. Everyone had. He was the videogame designer responsible for creating the OASIS, a massively multiplayer online game that had gradually evolved into the globally networked virtual reality most of humanity now used on a daily basis. The unprecedented success of the OASIS had made Halliday one of the wealthiest people in the world. At first, I couldn’t understand why the media was making such a big deal of the billionaire’s death. After all, the people of Planet Earth had other concerns. The ongoing energy crisis. Catastrophic climate change. Widespread famine, poverty, and disease. Half a dozen wars. You know: “dogs and cats living together … mass hysteria!” Normally, the newsfeeds didn’t interrupt everyone’s interactive sitcoms and soap operas unless something really major had happened. Like the outbreak of some new killer virus, or another major city vanishing in a mushroom cloud. Big stuff like that. As famous as he was, Halliday’s death should have warranted only a brief segment on the evening news, so the unwashed masses could shake their heads in envy when the newscasters announced the obscenely large amount of money that would be doled out to the rich man’s heirs. 2 But that was the rub. -

Weigel Colostate 0053N 16148.Pdf (853.6Kb)

THESIS

ONLINE SPACES: TECHNOLOGICAL, INSTITUTIONAL, AND SOCIAL PRACTICES THAT FOSTER CONNECTIONS THROUGH INSTAGRAM AND TWITCH

Submitted by Taylor Weigel, Department of Communication Studies. In partial fulfillment of the requirements for the Degree of Master of Arts, Colorado State University, Fort Collins, Colorado, Summer 2020. Master’s Committee: Advisor: Evan Elkins; Greg Dickinson; Rosa Martey. Copyright by Taylor Laureen Weigel. All Rights Reserved.

ABSTRACT

We are living in an increasingly digital world.1 In the past, critical scholars have focused on the inequality of access and unequal relationships between the elite, who controlled the media, and the masses, whose limited agency only allowed for alternate meanings of dominant discourse and media.2 With the rise of social networking services (SNSs) and user-generated content (UGC), critical work has shifted from relationships between the elite and the masses to questions of infrastructure, online governance, technological affordances, and cultural values and practices instilled in computer mediated communication (CMC).3 This thesis focuses specifically on technological and institutional practices of Instagram and Twitch and the social practices of users in these online spaces, using two case studies to explore the production of connection-oriented spaces through Instagram Stories and Twitch streams, which I argue are phenomenologically live media texts. In the following chapters, I answer two research questions. First, I explore the question, “Are Instagram Stories and Twitch streams fostering connections between users through institutional and technological practices of phenomenologically live texts?” and second, “If they

1. “We” in this case refers to privileged individuals from successful post-industrial societies.

SEUM: Speedrunners from Hell Think Arcade

Warp Reference: SEUM: Speedrunners From Hell
Think Arcade
8/2/18

----- Context -----

SEUM: Speedrunners from Hell is about a man named Marty who has his beer stolen by Satan. He proceeds to head to hell to get it back. That’s about it really. The game focuses more on gameplay, only incorporating story to give some context as to why the player is running through hell.

----- What Worked Well -----

Short, Simple Levels: Levels were, for the most part, very lightweight and designed to be completed in 20 seconds or less. This allowed the game to have 80+ levels in it, and it doesn’t feel exhausting to players to complete them all. In addition, levels that introduced a new power up or mechanic were extremely simple and focused solely on that new element, leaving it to other levels to show how it mixed with other mechanics. This created a sort of modular design, where each level served a specific purpose. Also, levels were cleverly named to describe what the player was supposed to do, learn, or overcome, which at times served as a hint.

Figure 1: A tutorial level
Figure 2: A slightly more complex level
Figure 3: More difficult level still confined to a single tower

Instant Restart: Levels load incredibly fast in SEUM, and with the press of the ‘R’ key the player instantly restarts the level back to its initial state. There’s no lengthy death sequence, and as soon as the player knows they messed up they can immediately restart and be back in the action in well under a second.

Transformative Gameplay Practices: Speedrunning through Hyrule

Copyright by Kaitlin Elizabeth Hilburn 2017 The Report Committee for Kaitlin Elizabeth Hilburn Certifies that this is the approved version of the following report: Transformative Gameplay Practices: Speedrunning through Hyrule APPROVED BY SUPERVISING COMMITTEE: Supervisor: Suzanne Scott Kathy Fuller-Seeley Transformative Gameplay Practices: Speedrunning through Hyrule by Kaitlin Elizabeth Hilburn, B.S. Comm Report Presented to the Faculty of the Graduate School of The University of Texas at Austin in Partial Fulfillment of the Requirements for the Degree of Master of Arts The University of Texas at Austin May 2017 Dedication Dedicated to my father, Ben Hilburn, the first gamer I ever watched. Abstract Transformative Gameplay Practices: Speedrunning Through Hyrule Kaitlin Elizabeth Hilburn, M.A. The University of Texas at Austin, 2017 Supervisor: Suzanne Scott The term “transformative” gets used in both fan studies and video game studies and gestures toward a creative productivity that goes beyond simply consuming a text. However, despite this shared term, game studies and fan studies remain fairly separate in their respective examination of fans and gamers, in part due to media differences between video games and more traditional media, like television. Bridging the gap between these two fields not only helps to better explain transformative gameplay, but also offers additional insights in how fans consume texts, often looking for new ways to experience the source text. This report examines the transformative gameplay practices found within video game fan communities and provides an overview of their development and spread. It looks at three facets of transformative gameplay, performance, mastery, and education, using the transformative gameplay practices around The Legend of Zelda: Ocarina of Time (1998) as a primary case study. -

Analysing Constraint Play in Digital Games 自製工坊:分析應用於數碼遊戲中的限制性玩法

CITY UNIVERSITY OF HONG KONG 香港城市大學

Workshops of Our Own: Analysing Constraint Play in Digital Games 自製工坊:分析應用於數碼遊戲中的限制性玩法

Submitted to School of Creative Media 創意媒體學院 In Partial Fulfilment of the Requirements for the Degree of Doctor of Philosophy 哲學博士學位 by Johnathan HARRINGTON, September 2020 二零二零年九月

ABSTRACT

Players are at the heart of games: games are only fully realised when players play them. Contemporary games research has acknowledged players’ importance when discussing games. Player-based research in game studies has been largely oriented either towards specific types of play, or towards analysing players as parts of games. While such approaches have their merits, they background creative traditions shared across different play. Games share players, and there is knowledge to be gleaned from analysing the methods players adopt across different games, especially when these methods are loaded with intent to make something new. In this thesis, I will argue that players design, record, and share their own play methods with other players. Through further research into the Oulipo’s potential contributions to games research, as well as a thorough analysis of current game studies texts on play as method, I will argue that the Oulipo’s concept of constraints can help us better discuss player-based design. I will argue for constraints by analysing various types of player-created play methods. I will outline a descriptive model that discusses these play methods through shared language and analyses them as a single practice with shared commonalities. By the end of this thesis, I will have shown that players’ play methods are often measured and creative.

Time Invaders: Conceptualizing Performative Game Time

Time invaders: conceptualizing performative game time
Darshana Jayemanne

Jayemanne, D. 2017. Performativity in art, literature and videogames. In: Performativity in art, literature and videogames. Cham: Palgrave Macmillan. Reproduced with permission of Palgrave Macmillan. This extract is taken from the author's original manuscript and has not been edited. The definitive, published version of record is available here: https://www.palgrave.com/gb/book/9783319544502 and https://link.springer.com/chapter/10.1007%2F978-3-319-54451-9_10

Time Invaders – Conceptualizing performance game time

The ultimate phase of a MMORPG such as World of Warcraft or the more recent hybrid RPG-FPS Destiny, where the most committed players tend to find themselves before long, is often referred to as the ‘endgame’. This is a point where the levelling system tops out and the narrative has concluded. The endgame is thus the result of considerable experience with the game and its systems, a very large ensemble of both successful and infelicitous performances. This final stage still involves considerable activity: challenging the most difficult enemies in search of the rarest loot, competing with other players and so on. It is a twilight state of both accomplishment and anticipation. As an ‘end’, it is very different from the threatening Game Over screen that is always just moments away in many arcade or action games. The differences between these two end states suggest that the notion of how games end, and the way this impacts on the experience of play, could be illuminating with regard to the problem of characterizing performances, because to end the game typically involves a summation or adjudication on the felicity of a particular and actualized performative multiplicity.

Naxxramas Speedrun Regulations

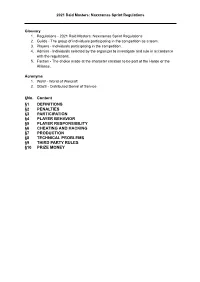

2021 Raid Masters: Naxxramas Sprint Regulations

Glossary
1. Regulations - 2021 Raid Masters: Naxxramas Sprint Regulations
2. Guilds - The group of individuals participating in the competition as a team.
3. Players - Individuals participating in the competition.
4. Admins - Individuals selected by the organizer to investigate and rule in accordance with the regulations.
5. Faction - The choice made at character creation to be part of the Horde or the Alliance.

Acronyms
1. WoW - World of Warcraft
2. DDoS - Distributed Denial of Service

§No. Content
§1 DEFINITIONS
§2 PENALTIES
§3 PARTICIPATION
§4 PLAYER BEHAVIOR
§5 PLAYER RESPONSIBILITY
§6 CHEATING AND HACKING
§7 PRODUCTION
§8 TECHNICAL PROBLEMS
§9 THIRD PARTY RULES
§10 PRIZE MONEY

§1 DEFINITIONS
§1.1 Winner and placements
The guild that has the quickest time after the last time slot for participation has concluded is considered the winner. Every other guild's placement is determined by the number of guilds with a quicker time: each quicker guild raises its placement number by one (1). Placements start at one (1), and a lower placement is considered better.

§2 PENALTIES
§2.1 Penalty applications
Admins have the right to disqualify from the race any guild that is in violation of paragraphs three (§3), four (§4), or five (§5), in accordance with the regulations.
§2.2 Bans, disqualifications and suspensions
A suspension prevents a specific player or guild from participating in any competition for a specified amount of time. If a guild continues to use said player, the guild will automatically be disqualified. A ban prevents a specific player or guild from participating indefinitely.

On Becoming “Like Esports”: Twitch As a Platform for the Speedrunning Community

On Becoming “Like eSports”: Twitch as a Platform for the Speedrunning Community Rainforest Scully-Blaker Concordia University Montreal, QC, Canada [email protected] EXTENDED ABSTRACT Since its foundation in 2011 and subsequent purchase by Amazon in 2014, Twitch.tv has promoted and shared the growth of many online gaming communities. By affording an unprecedented level of interaction between broadcasters and their audiences through site features such as a live chat window and subscriber incentives, Twitch has reshaped how gameplay footage is shared online, and not just for Let’s Players. In his article, “The socio-technical architecture of digital labor: Converting play into YouTube money” (2014), Hector Postigo discusses what he calls ‘YouTube-worthy’ gameplay – that play which the site’s gaming content creators strive for when accumulating footage for their videos. The concept of ‘YouTube-worthy’ does well to encapsulate not only the effort involved in the content creator practice, but also offers insight into some of the platform-specific limitations and affordances of YouTube. It is in this spirit that this paper will pose the question of what ‘Twitch-worthy’ gameplay might look like. For indeed, how are Twitch content creators to guarantee the same quality of YouTube ‘highlight reels’ when their gameplay is shared live and uncut with their audience within seconds of it taking place? To answer this question, this paper focuses on the speedrunning community, a growing community of players devoted to completing games as quickly as possible without the use of cheats or cheat devices, and how members of this community relate to Twitch as a platform. -

Fair Use, Fair Play: Video Game Performances and "Let's Plays" As Transformative Use Dan Hagen

Washington Journal of Law, Technology & Arts
Volume 13 | Issue 3, Article 3
4-1-2018

Fair Use, Fair Play: Video Game Performances and "Let's Plays" as Transformative Use
Dan Hagen

Follow this and additional works at: https://digitalcommons.law.uw.edu/wjlta
Part of the Intellectual Property Law Commons

Recommended Citation: Dan Hagen, Fair Use, Fair Play: Video Game Performances and "Let's Plays" as Transformative Use, 13 Wash. J. L. Tech. & Arts 245 (2018). Available at: https://digitalcommons.law.uw.edu/wjlta/vol13/iss3/3

This Article is brought to you for free and open access by the Law Reviews and Journals at UW Law Digital Commons. It has been accepted for inclusion in Washington Journal of Law, Technology & Arts by an authorized editor of UW Law Digital Commons. For more information, please contact [email protected].

© Dan Hagen
Cite as: 13 Wash. J.L. Tech. & Arts 245 (2018)
http://digital.law.washington.edu/dspace-law/handle/1773.1/1816

ABSTRACT

With the advent of social video upload sites like YouTube, what constitutes fair use has become a hotly debated and often litigated subject. Major content rights holders in the movie and music industry assert ownership rights of content on video upload platforms, and the application of the fair use doctrine to such content is largely unclear.

Esports - Professional Cheating in Computer Games

eSports - Professional Cheating in Computer Games
Marc Ruef, Research Department, scip AG
[email protected]
https://www.scip.ch

Abstract: Computer game cheating has been around as long as competitive gaming itself. Manipulation is frequently found in the speedrunning scene. Professional eSports and their commercial trappings are making these tricks ever more lucrative. There are various options for gaining an edge in online games. Technical measures can make cheating more difficult or at least detectable after the fact.

Keywords: Artificial Intelligence, Denial of Service, Detect, Drugs, Excel, Exploit, Fraud, Machine Learning, Magazin, Policy

1. Preface

This paper was written in 2018 as part of a research project at scip AG, Switzerland. It was initially published online at https://www.scip.ch/en/?labs.20180906 and is available in English and German. Providing our clients with innovative research for the information technology of the future is an essential part of our company culture.

2. Introduction

Computer games are big business. Videos, streaming and competitive leagues have driven the commercialization of gaming.

[...] recordings of their own game sessions. Through their contacts, Rogers and Mitchell managed to avoid this validation and chalked up records that in all likelihood were never real.

Todd Rogers held a record of 5.51 seconds for the Atari 2600 game Dragster. But when user Apollo Legend reverse engineered the game, it became apparent that the purported time was not even possible. The developer of the game confirmed this [1] years later. Twin Galaxies annulled the record and banned Rogers [2].

Billy Mitchell’s downfall came when it came to light that

Community Embeddings Reveal Large-Scale Cultural Organization Of

Community embeddings reveal large-scale cultural organization of online platforms Isaac Waller* and Ashton Anderson Department of Computer Science, University of Toronto {walleris,ashton}@cs.toronto.edu * Corresponding author September 2020 Abstract Optimism about the Internet’s potential to bring the world together has been tempered by concerns about its role in inflaming the “culture wars”. Via mass selection into like-minded groups, online society may be becoming more fragmented and polarized, particularly with respect to partisan differences. However, our ability to measure the cultural makeup of online communities, and in turn understand the cultural structure of online platforms, is limited by the pseudonymous, unstructured, and large-scale nature of digital discussion. Here we develop a neural embedding methodology to quantify the positioning of online communities along cultural dimensions by leveraging large-scale patterns of aggregate behaviour. Applying our methodology to 4.8B Reddit comments made in 10K communities over 14 years, we find that the macro-scale community structure is organized along cultural lines, and that relationships between online cultural concepts are more complex than simply reflecting their offline analogues. Examining political content, we show Reddit underwent a significant polarization event around the 2016 U.S. presidential election, and remained highly polarized for years afterward. Contrary to conventional wisdom, however, instances of individual users becoming more polarized over time are rare; the majority of platform-level polarization is driven by the arrival of new and newly political users. Our methodology is broadly applicable to the study of online culture, and our findings have implications for the design of online platforms, understanding the cultural contexts of online content, and quantifying cultural shifts in online behaviour.