KLEE: Unassisted and Automatic Generation of High-Coverage Tests for Complex Systems Programs

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

At—At, Batch—Execute Commands at a Later Time

at—at, batch—execute commands at a later time at [–csm] [–f script] [–qqueue] time [date] [+ increment] at –l [ job...] at –r job... batch at and batch read commands from standard input to be executed at a later time. at allows you to specify when the commands should be executed, while jobs queued with batch will execute when system load level permits. Executes commands read from stdin or a file at some later time. Unless redirected, the output is mailed to the user. Example A.1 1 at 6:30am Dec 12 < program 2 at noon tomorrow < program 3 at 1945 pm August 9 < program 4 at now + 3 hours < program 5 at 8:30am Jan 4 < program 6 at -r 83883555320.a EXPLANATION 1. At 6:30 in the morning on December 12th, start the job. 2. At noon tomorrow start the job. 3. At 7:45 in the evening on August 9th, start the job. 4. In three hours start the job. 5. At 8:30 in the morning of January 4th, start the job. 6. Removes previously scheduled job 83883555320.a. awk—pattern scanning and processing language awk [ –fprogram–file ] [ –Fc ] [ prog ] [ parameters ] [ filename...] awk scans each input filename for lines that match any of a set of patterns specified in prog. Example A.2 1 awk '{print $1, $2}' file 2 awk '/John/{print $3, $4}' file 3 awk -F: '{print $3}' /etc/passwd 4 date | awk '{print $6}' EXPLANATION 1. Prints the first two fields of file where fields are separated by whitespace. 2. Prints fields 3 and 4 if the pattern John is found. -

GPU-Based Password Cracking on the Security of Password Hashing Schemes Regarding Advances in Graphics Processing Units

Radboud University Nijmegen Faculty of Science Kerckhoffs Institute Master of Science Thesis GPU-based Password Cracking On the Security of Password Hashing Schemes regarding Advances in Graphics Processing Units by Martijn Sprengers [email protected] Supervisors: Dr. L. Batina (Radboud University Nijmegen) Ir. S. Hegt (KPMG IT Advisory) Ir. P. Ceelen (KPMG IT Advisory) Thesis number: 646 Final Version Abstract Since users rely on passwords to authenticate themselves to computer systems, ad- versaries attempt to recover those passwords. To prevent such a recovery, various password hashing schemes can be used to store passwords securely. However, recent advances in the graphics processing unit (GPU) hardware challenge the way we have to look at secure password storage. GPU's have proven to be suitable for crypto- graphic operations and provide a significant speedup in performance compared to traditional central processing units (CPU's). This research focuses on the security requirements and properties of prevalent pass- word hashing schemes. Moreover, we present a proof of concept that launches an exhaustive search attack on the MD5-crypt password hashing scheme using modern GPU's. We show that it is possible to achieve a performance of 880 000 hashes per second, using different optimization techniques. Therefore our implementation, executed on a typical GPU, is more than 30 times faster than equally priced CPU hardware. With this performance increase, `complex' passwords with a length of 8 characters are now becoming feasible to crack. In addition, we show that between 50% and 80% of the passwords in a leaked database could be recovered within 2 months of computation time on one Nvidia GeForce 295 GTX. -

Administering Unidata on UNIX Platforms

C:\Program Files\Adobe\FrameMaker8\UniData 7.2\7.2rebranded\ADMINUNIX\ADMINUNIXTITLE.fm March 5, 2010 1:34 pm Beta Beta Beta Beta Beta Beta Beta Beta Beta Beta Beta Beta Beta Beta Beta Beta UniData Administering UniData on UNIX Platforms UDT-720-ADMU-1 C:\Program Files\Adobe\FrameMaker8\UniData 7.2\7.2rebranded\ADMINUNIX\ADMINUNIXTITLE.fm March 5, 2010 1:34 pm Beta Beta Beta Beta Beta Beta Beta Beta Beta Beta Beta Beta Beta Notices Edition Publication date: July, 2008 Book number: UDT-720-ADMU-1 Product version: UniData 7.2 Copyright © Rocket Software, Inc. 1988-2010. All Rights Reserved. Trademarks The following trademarks appear in this publication: Trademark Trademark Owner Rocket Software™ Rocket Software, Inc. Dynamic Connect® Rocket Software, Inc. RedBack® Rocket Software, Inc. SystemBuilder™ Rocket Software, Inc. UniData® Rocket Software, Inc. UniVerse™ Rocket Software, Inc. U2™ Rocket Software, Inc. U2.NET™ Rocket Software, Inc. U2 Web Development Environment™ Rocket Software, Inc. wIntegrate® Rocket Software, Inc. Microsoft® .NET Microsoft Corporation Microsoft® Office Excel®, Outlook®, Word Microsoft Corporation Windows® Microsoft Corporation Windows® 7 Microsoft Corporation Windows Vista® Microsoft Corporation Java™ and all Java-based trademarks and logos Sun Microsystems, Inc. UNIX® X/Open Company Limited ii SB/XA Getting Started The above trademarks are property of the specified companies in the United States, other countries, or both. All other products or services mentioned in this document may be covered by the trademarks, service marks, or product names as designated by the companies who own or market them. License agreement This software and the associated documentation are proprietary and confidential to Rocket Software, Inc., are furnished under license, and may be used and copied only in accordance with the terms of such license and with the inclusion of the copyright notice. -

LATEX for Beginners

LATEX for Beginners Workbook Edition 5, March 2014 Document Reference: 3722-2014 Preface This is an absolute beginners guide to writing documents in LATEX using TeXworks. It assumes no prior knowledge of LATEX, or any other computing language. This workbook is designed to be used at the `LATEX for Beginners' student iSkills seminar, and also for self-paced study. Its aim is to introduce an absolute beginner to LATEX and teach the basic commands, so that they can create a simple document and find out whether LATEX will be useful to them. If you require this document in an alternative format, such as large print, please email [email protected]. Copyright c IS 2014 Permission is granted to any individual or institution to use, copy or redis- tribute this document whole or in part, so long as it is not sold for profit and provided that the above copyright notice and this permission notice appear in all copies. Where any part of this document is included in another document, due ac- knowledgement is required. i ii Contents 1 Introduction 1 1.1 What is LATEX?..........................1 1.2 Before You Start . .2 2 Document Structure 3 2.1 Essentials . .3 2.2 Troubleshooting . .5 2.3 Creating a Title . .5 2.4 Sections . .6 2.5 Labelling . .7 2.6 Table of Contents . .8 3 Typesetting Text 11 3.1 Font Effects . 11 3.2 Coloured Text . 11 3.3 Font Sizes . 12 3.4 Lists . 13 3.5 Comments & Spacing . 14 3.6 Special Characters . 15 4 Tables 17 4.1 Practical . -

Course Outline & Schedule

Course Outline & Schedule Call US 408-759-5074 or UK +44 20 7620 0033 Linux Advanced Shell Programming Tools Curriculum The Linux Foundation Course Code LASP Duration 3 Day Course Price $1,830 Course Description The Linux Advanced Shell Programming Tools course is designed to give delegates practical experience using a range of Linux tools to manipulate text and incorporate them into Linux shell scripts. The delegate will practise: Using the shell command line editor Backing up and restoring files Scheduling background jobs using cron and at Using regular expressions Editing text files with sed Using file comparison utilities Using the head and tail utilities Using the cut and paste utilities Using split and csplit Identifying and translating characters Sorting files Translating characters in a file Selecting text from files with the grep family of commands Creating programs with awk Course Modules Review of Shell Fundamentals (1 topic) ◾ Review of UNIX Commands Using Unix Shells (6 topics) ◾ Command line history and editing ◾ The Korn and POSIX shells Perpetual Solutions - Page 1 of 5 Course Outline & Schedule Call US 408-759-5074 or UK +44 20 7620 0033 ◾ The Bash shell ◾ Command aliasing ◾ The shell startup file ◾ Shell environment variables Redirection, Pipes and Filters (7 topics) ◾ Standard I/O and redirection ◾ Pipes ◾ Command separation ◾ Conditional execution ◾ Grouping Commands ◾ UNIX filters ◾ The tee command Backup and Restore Utilities (6 topics) ◾ Archive devices ◾ The cpio command ◾ The tar command ◾ The dd command ◾ Exercise: -

HEP Computing Part I Intro to UNIX/LINUX Adrian Bevan

HEP Computing Part I Intro to UNIX/LINUX Adrian Bevan Lectures 1,2,3 [email protected] 1 Lecture 1 • Files and directories. • Introduce a number of simple UNIX commands for manipulation of files and directories. • communicating with remote machines [email protected] 2 What is LINUX • LINUX is the operating system (OS) kernel. • Sitting on top of the LINUX OS are a lot of utilities that help you do stuff. • You get a ‘LINUX distribution’ installed on your desktop/laptop. This is a sloppy way of saying you get the OS bundled with lots of useful utilities/applications. • Use LINUX to mean anything from the OS to the distribution we are using. • UNIX is an operating system that is very similar to LINUX (same command names, sometimes slightly different functionalities of commands etc). – There are usually enough subtle differences between LINUX and UNIX versions to keep you on your toes (e.g. Solaris and LINUX) when running applications on multiple platforms …be mindful of this if you use other UNIX flavours. – Mac OS X is based on a UNIX distribution. [email protected] 3 Accessing a machine • You need a user account you should all have one by now • can then log in at the terminal (i.e. sit in front of a machine and type in your user name and password to log in to it). • you can also log in remotely to a machine somewhere else RAL SLAC CERN London FNAL in2p3 [email protected] 4 The command line • A user interfaces with Linux by typing commands into a shell. -

Factor — Factor Analysis

Title stata.com factor — Factor analysis Description Quick start Menu Syntax Options for factor and factormat Options unique to factormat Remarks and examples Stored results Methods and formulas References Also see Description factor and factormat perform a factor analysis of a correlation matrix. The commands produce principal factor, iterated principal factor, principal-component factor, and maximum-likelihood factor analyses. factor and factormat display the eigenvalues of the correlation matrix, the factor loadings, and the uniqueness of the variables. factor expects data in the form of variables, allows weights, and can be run for subgroups. factormat is for use with a correlation or covariance matrix. Quick start Principal-factor analysis using variables v1 to v5 factor v1 v2 v3 v4 v5 As above, but retain at most 3 factors factor v1-v5, factors(3) Principal-component factor analysis using variables v1 to v5 factor v1-v5, pcf Maximum-likelihood factor analysis factor v1-v5, ml As above, but perform 50 maximizations with different starting values factor v1-v5, ml protect(50) As above, but set the seed for reproducibility factor v1-v5, ml protect(50) seed(349285) Principal-factor analysis based on a correlation matrix cmat with a sample size of 800 factormat cmat, n(800) As above, retain only factors with eigenvalues greater than or equal to 1 factormat cmat, n(800) mineigen(1) Menu factor Statistics > Multivariate analysis > Factor and principal component analysis > Factor analysis factormat Statistics > Multivariate analysis > Factor and principal component analysis > Factor analysis of a correlation matrix 1 2 factor — Factor analysis Syntax Factor analysis of data factor varlist if in weight , method options Factor analysis of a correlation matrix factormat matname, n(#) method options factormat options matname is a square Stata matrix or a vector containing the rowwise upper or lower triangle of the correlation or covariance matrix. -

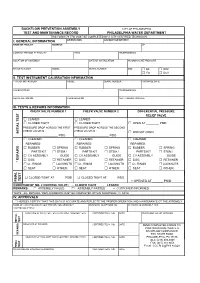

BFP Test Form

BACKFLOW PREVENTION ASSEMBLY CITY OF PHILADELPHIA TEST AND MAINTENANCE RECORD PHILADELPHIA WATER DEPARTMENT THIS FORM (79-770) MUST BE COMPLETED BY A CITY CERTIFIED TECHNICIAN I. GENERAL INFORMATION ORIENTATION ACCOUNT OR METER # NAME OF FACILITY ADDRESS ZIP CONTACT PERSON AT FACILITY TITLE TELEPHONE NO. LOCATION OF ASSEMBLY DATE OF INSTALLATION INCOMING LINE PRESSURE MANUFACTURER MODEL SERIAL NUMBER SIZE □ DS □ RPZ □ FS □ DCV II. TEST INSTRUMENT CALIBRATION INFORMATION TYPE OF INSTRUMENT MODEL SERIAL NUMBER PURCHASE DATE CALIBRATED BY TELEPHONE NO. REGISTRATION NO. CALIBRATED ON NEXT CALIBRATION DUE III. TESTS & REPAIRS INFORMATION CHECK VALVE NUMBER 1 CHECK VALVE NUMBER 2 DIFFERENTIAL PRESSURE RELIEF VALVE □ LEAKED □ LEAKED □ CLOSED TIGHT □ CLOSED TIGHT □ OPEN AT ________ PSID PRESSURE DROP ACROSS THE FIRST PRESSURE DROP ACROSS THE SECOND CHECK VALVE IS : CHECK VALVE IS : INITIAL TEST INITIAL □ DID NOT OPEN ______________________ PSID ______________________ PSID □ CLEANED □ CLEANED □ CLEANED REPAIRED: REPAIRED: REPAIRED: □ RUBBER □ SPRING □ RUBBER □ SPRING □ RUBBER □ SPRING PARTS KIT □ STEM / PARTS KIT □ STEM / PARTS KIT □ STEM / □ CV ASSEMBLY GUIDE □ CV ASSEMBLY GUIDE □ CV ASSEMBLY GUIDE □ DISC □ RETAINER □ DISC □ RETAINER □ DISC □ RETAINER * REPAIRS □ O - RINGS □ LOCKNUTS □ O - RINGS □ LOCKNUTS □ O - RINGS □ LOCKNUTS □ SEAT □ OTHER: □ SEAT □ OTHER: □ SEAT □ OTHER: □ CLOSED TIGHT AT ______ PSID □ CLOSED TIGHT AT ______ PSID TEST FINAL FINAL □ OPENED AT ______ PSID CONDITION OF NO. 2 CONTROL VALVE : □ CLOSED TIGHT □ LEAKED REMARKS : □ ASSEMBLY FAILED □ ASSEMBLY PASSED □ CUSTOMER INFORMED *NOTE : ALL REPAIRS / REPLACEMENTS MUST BE COMPLETED WITHIN FOURTEEN (14) DAYS IV. APPROVALS * I HEREBY CERTIFY THAT THIS DATA IS ACCURATE AND REFLECTS THE PROPER OPERATION AND MAINTENANCE OT THE ASSEMBLY NAME OF CERTIFIED BACKFLOW PREVENTION ASSEMBLY BUSINESS TELEPHONE NO. -

Version 7.8-Systemd

Linux From Scratch Version 7.8-systemd Created by Gerard Beekmans Edited by Douglas R. Reno Linux From Scratch: Version 7.8-systemd by Created by Gerard Beekmans and Edited by Douglas R. Reno Copyright © 1999-2015 Gerard Beekmans Copyright © 1999-2015, Gerard Beekmans All rights reserved. This book is licensed under a Creative Commons License. Computer instructions may be extracted from the book under the MIT License. Linux® is a registered trademark of Linus Torvalds. Linux From Scratch - Version 7.8-systemd Table of Contents Preface .......................................................................................................................................................................... vii i. Foreword ............................................................................................................................................................. vii ii. Audience ............................................................................................................................................................ vii iii. LFS Target Architectures ................................................................................................................................ viii iv. LFS and Standards ............................................................................................................................................ ix v. Rationale for Packages in the Book .................................................................................................................... x vi. Prerequisites -

File Permissions Do Not Restrict Root

Filesystem Security 1 General Principles • Files and folders are managed • A file handle provides an by the operating system opaque identifier for a • Applications, including shells, file/folder access files through an API • File operations • Access control entry (ACE) – Open file: returns file handle – Allow/deny a certain type of – Read/write/execute file access to a file/folder by – Close file: invalidates file user/group handle • Access control list (ACL) • Hierarchical file organization – Collection of ACEs for a – Tree (Windows) file/folder – DAG (Linux) 2 Discretionary Access Control (DAC) • Users can protect what they own – The owner may grant access to others – The owner may define the type of access (read/write/execute) given to others • DAC is the standard model used in operating systems • Mandatory Access Control (MAC) – Alternative model not covered in this lecture – Multiple levels of security for users and documents – Read down and write up principles 3 Closed vs. Open Policy Closed policy Open Policy – Also called “default secure” • Deny Tom read access to “foo” • Give Tom read access to “foo” • Deny Bob r/w access to “bar” • Give Bob r/w access to “bar • Tom: I would like to read “foo” • Tom: I would like to read “foo” – Access denied – Access allowed • Tom: I would like to read “bar” • Tom: I would like to read “bar” – Access allowed – Access denied 4 Closed Policy with Negative Authorizations and Deny Priority • Give Tom r/w access to “bar” • Deny Tom write access to “bar” • Tom: I would like to read “bar” – Access -

Student Number: Surname: Given Name

Computer Science 2211a Midterm Examination Sample Solutions 9 November 20XX 1 hour 40 minutes Student Number: Surname: Given name: Instructions/Notes: The examination has 35 questions on 9 pages, and a total of 110 marks. Put all answers on the question paper. This is a closed book exam. NO ELECTRONIC DEVICES OF ANY KIND ARE ALLOWED. 1. [4 marks] Which of the following Unix commands/utilities are filters? Correct answers are in blue. mkdir cd nl passwd grep cat chmod scriptfix mv 2. [1 mark] The Unix command echo HOME will print the contents of the environment variable whose name is HOME. True False 3. [1 mark] In C, the null character is another name for the null pointer. True False 4. [3 marks] The protection code for the file abc.dat is currently –rwxr--r-- . The command chmod a=x abc.dat is equivalent to the command: a. chmod 755 abc.dat b. chmod 711 abc.dat c. chmod 155 abc.dat d. chmod 111 abc.dat e. none of the above 5. [3 marks] The protection code for the file abc.dat is currently –rwxr--r-- . The command chmod ug+w abc.dat is equivalent to the command: a. chmod 766 abc.dat b. chmod 764 abc.dat c. chmod 754 abc.dat d. chmod 222 abc.dat e. none of the above 2 6. [3 marks] The protection code for def.dat is currently dr-xr--r-- , and the protection code for def.dat/ghi.dat is currently -r-xr--r-- . Give one or more chmod commands that will set the protections properly so that the owner of the two files will be able to delete ghi.dat using the command rm def.dat/ghi.dat chmod u+w def.dat or chmod –r u+w def.dat 7. -

Cuda Compiler Driver Nvcc

CUDA COMPILER DRIVER NVCC TRM-06721-001_v10.1 | August 2019 Reference Guide CHANGES FROM PREVIOUS VERSION ‣ Major update to the document to reflect recent nvcc changes. www.nvidia.com CUDA Compiler Driver NVCC TRM-06721-001_v10.1 | ii TABLE OF CONTENTS Chapter 1. Introduction.........................................................................................1 1.1. Overview................................................................................................... 1 1.1.1. CUDA Programming Model......................................................................... 1 1.1.2. CUDA Sources........................................................................................ 1 1.1.3. Purpose of NVCC.................................................................................... 2 1.2. Supported Host Compilers...............................................................................2 Chapter 2. Compilation Phases................................................................................3 2.1. NVCC Identification Macro.............................................................................. 3 2.2. NVCC Phases............................................................................................... 3 2.3. Supported Input File Suffixes...........................................................................4 2.4. Supported Phases......................................................................................... 4 Chapter 3. The CUDA Compilation Trajectory............................................................