Using Pitch Tipping for Baseball Pitch Prediction

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

1957 London Majors Program

The three basic principles a scout looks for in a young baseball prospect are: • Running ability • Throwing ability • Hitting ability. Temperament and character also come in for consideration among the young players. To become a great ball player, naturally the prospect must be able to do everything well. However, some players are able to make the big time with ability only in two of the above mentioned. In the final analysis, it is the prospect himself who determines his future in baseball. Physical fitness is a necessity, but the incentive to improve on his own natural ability is the key to his future success. Compliments of MOLSON'S CROWN & ANCHOR LAGER BREWERY LIMITED TORONTO - ONTARIO Representatives of London: TORY GREGG, STU CAMPBELL 2 H. J. LUCAS RAYMOND BROS. LTD. FLORIST Awnings - Tents SPECIAL DESIGNS Tarpaulins FOR ALL OCCASIONS 182 YORK STREET, LONDON 493 Grosvenor Street, London Dial Dial 2-0302 2-7221 DON MAYES A consistent threat at the plate, Don is expected to hold down the third base position this season. FRANK'S THE TO PURE ENJOYMENT . SUNOCO SERVICE RED ROOSTER RESTAURANT LUBRICATION - OIL CHANGES TIRE REPAIRS FINE FOOD FRANK EWANSKI, Mgr. (open 24 hours) 1194 OXFORD ST., LONDON ROOT BEER 1411 DUNDAS STREET Phone [WITH ROOT BARKS HERBS] 3-5756 Phone 7-8702 VERNOR'S GINGER ALE LTD. LONDON, ONTARIO Complete Great Lakes-Niagara Baseball League Schedule MAY Sat. 22 — Hamilton at N. Tonawanda Tues. 23 — N. Tonawanda at Niagara Falls Brantford at London Thur. 25 — Welland at Hamilton Mon. 20 — N. Tonawanda at Welland Tues. -

Jan-29-2021-Digital

Collegiate Baseball The Voice Of Amateur Baseball Started In 1958 At The Request Of Our Nation’s Baseball Coaches Vol. 64, No. 2 Friday, Jan. 29, 2021 $4.00 Innovative Products Win Top Awards Four special inventions are tremendous advances for game of baseball. By LOU PAVLOVICH, JR. Editor/Collegiate Baseball [Logo: 2021 Winners, Best Of Show, Awarded By Collegiate Baseball; Functionality, Innovation, Built To Last, Creativity] GREENSBORO, N.C. — Four innovative products at the recent American Baseball Coaches Association Convention virtual trade show were awarded Best of Show certificates by Collegiate Baseball. Now in its 22nd year, the Best of Show awards encompass a wide variety of concepts and applications that are new to baseball. They must have been introduced to baseball during the past year. The committee closely examined each nomination that was submitted. A number of superb inventions just missed being named winners as 147 exhibitors showed their merchandise at 2021 ABCA Virtual Convention See PROTECTIVE, Page 2 [Photo caption] SUPERB PROTECTION — Truletic batting gloves, with input from two hand surgeons, are a breakthrough in protection for hamate bone fractures as well as shielding the back, lower half of the hand with a hard plastic plate. Phase 1B Rollout Impacts Frontline Essential Workers Coaches Now Can Receive COVID-19 Vaccine CDC policy allows coaches to receive ... 19 protocols to be determined on a conference-by-conference basis,” said Keilitz. -

RBBA Coaches Handbook

RBBA Coaches Handbook The handbook is a reference of suggestions which provides: - Rule changes from year to year - What to emphasize that season broken into: Base Running, Batting, Catching, Fielding and Pitching By focusing on these areas coaches can build on skills from year to year. 1 Instructional – 1st and 2nd grade Batting - Timing Base Running - Listen to your coaches Catching - “Trust the equipment” - Catch the ball, throw it back Fielding - Always use two hands Pitching – fielding the position - Where to safely stand in relation to pitching machine 2 Rookies – 3rd grade Rule Changes - Pitching machine is replaced with live, player pitching - Pitch count has been added to innings count for pitcher usage (Spring 2017) o Pitch counters will be provided o See “Pitch Limits & Required Rest Periods” at end of Handbook - Maximum pitches per pitcher is 50 or 2 innings per day – whichever comes first – and 4 innings per week o Catching affects pitching. Please limit players who pitch and catch in the same game. It is good practice to avoid having a player catch after pitching. *See Catching/Pitching notations on the “Pitch Limits & Required Rest Periods” at end of Handbook. - Pitchers may not return to game after pitching at any point during that game Emphasize-Teach-Correct in the Following Areas – always continue working on skills from previous seasons Batting - Emphasize a smooth, quick level swing (bat speed) o Try to minimize hitches and inefficiencies in swings Base Running - Do not watch the batted ball and watch base coaches - Proper sliding - On batted balls “On the ground, run around. -

EARNING FASTBALLS Fastballs to Hit

EARNING FASTBALLS Pitchers use fastballs a majority of the time. The fastball is the easiest pitch to locate, and pitchers need to throw strikes. I’d say pitchers in Little League baseball throw fastballs 80% of the time, roughly. I would also estimate that of all the strikes thrown in Little League, more than 90% of them are fastballs. It makes sense for young hitters to go to bat looking for a fastball, visualizing a fastball, timing up for a fastball. You’ll never hit a good fastball if you’re wondering what the pitcher will throw. Visualize fastball, time up for the fastball, jump on the fastball in the strike zone. I work with my players at recognizing the curveball or off-speed pitch. Not only recognizing it, but laying off it, taking it. ... fastballs to hit. You earn fastballs in this way. You earn them by achieving counts where the pitcher needs to throw a strike. We’re talking about 1-0, 2-0, 2-1, 3-1 and 3-2 counts. If the previous hitter walked, it’s almost a given that the first pitch you’ll see will be a fastball. And, after a walk, it’s likely the catcher will set up dead-center behind the plate. You could say that the patience of the hitter before you earned you a fastball in your wheelhouse. Take advantage. A HISTORY LESSON Pitchers and hitters have been battling each other forever. In the dead ball era, pitchers had advantages. One or two balls were used in a -

Describing Baseball Pitch Movement with Right-Hand Rules

Computers in Biology and Medicine 37 (2007) 1001–1008 www.intl.elsevierhealth.com/journals/cobm Describing baseball pitch movement with right-hand rules A. Terry Bahill (a,*), David G. Baldwin (b). (a) Systems and Industrial Engineering, University of Arizona, Tucson, AZ 85721-0020, USA. (b) P.O. Box 190, Yachats, OR 97498, USA. Received 21 July 2005; received in revised form 30 May 2006; accepted 5 June 2006. Abstract The right-hand rules show the direction of the spin-induced deflection of baseball pitches: thus, they explain the movement of the fastball, curveball, slider and screwball. The direction of deflection is described by a pair of right-hand rules commonly used in science and engineering. Our new model for the magnitude of the lateral spin-induced deflection of the ball considers the orientation of the axis of rotation of the ball relative to the direction in which the ball is moving. This paper also describes how models based on somatic metaphors might provide variability in a pitcher’s repertoire. © 2006 Elsevier Ltd. All rights reserved. Keywords: Curveball; Pitch deflection; Screwball; Slider; Modeling; Forces on a baseball; Science of baseball 1. Introduction If a major league baseball pitcher is asked to describe the flight of one of his pitches; he usually illustrates the trajectory using his pitching hand, much like a kid or a jet pilot demonstrating the yaw, pitch and roll of an airplane. ... The angular rule describes angular relationships of entities relative to a given axis and the coordinate rule establishes a local coordinate system, often based on the axis derived from the angular rule. Well-known examples of right-hand rules used in science -

Baseball Player of the Year: Cam Collier, Mount Paran Christian | Sports | Mdjonline.Com

6/21/2021 Baseball Player of the Year: Cam Collier, Mount Paran Christian | Sports | mdjonline.com https://www.mdjonline.com/sports/baseball-player-of-the-year-cam-collier-mount-paran-christian/article_052675aa-d065-11eb-bf91-f7bd899a73a0.html FEATURED TOP STORY Baseball Player of the Year: Cam Collier, Mount Paran Christian By Christian Knox MDJ Sports Writer Jun 18, 2021 Cam Collier watches a fly ball to center during the first game of Mount Paran Christian’s Class A Private state championship Cam Collier has no shortage of tools, and he displays them all around the diamond. In 2021, the Mount Paran Christian sophomore showed a diverse pitching arsenal that left opponents guessing, home run power as a batter, defensive consistency as a third baseman and the agility of a gazelle as a runner. However, Collier’s greatest skill is not limited to the position he is playing at any given time. He carries a finisher’s mentality all over the field. “He wants the better, the harder situation. The more difficult the situation, the more he enjoys it,” Mount Paran coach Kyle Reese said. “You run across those (difficult) paths a lot of the time, and Cam is a guy that absolutely thrives in them. Whether he is in the batter’s box or on the mound, the game’s on the line, he wants to determine the outcome of the game. -

Duxbury Youth Baseball Majors Division Rules & Coaching

Duxbury Youth Baseball Majors Division Rules & Coaching Guidelines The Official Babe Ruth Baseball Rules will apply, amended by the following provisions to the DYB Majors Division 1. The DYB Majors Division uses 8th-9th grade umpires. Abusive, confrontational, unsportsmanlike treatment of umpires will not be tolerated. Only the head coach should address the umpires in a calm, appropriate manner for clarification on a ruling. Judgment calls are not to be argued. Coaches are expected to control their players, assistant coaches, and fans. Coaches who fail to meet this standard will be considered for removal by the DYB Board. 2. The Umpires are to be paid by the home team coach prior to the game. In the event of a game being canceled prior to the start of the game but after the umpires have arrived they are to be paid half of their fee. Once a game has begun the umpires will keep the full amount of their fee if the game is called due to weather. Umpires will be paid the full amount of their fee for forfeited games (see Rule 4). The umpires will be instructed to stop games at the first sight of any lightning, or if darkness or wet weather are increasing risk of injury. 3. All games shall be 6 innings in length with a maximum of 2.5 hours. During May, no inning shall start after 7:45pm. During June no inning shall start after 8:00pm. No inning will start more than 2 hours after the start of the game. 4. For an official game, a team must have a minimum of 8 rostered players present at the field 10 minutes after the scheduled start time (per the umpire’s watch). -

Many Paths Lead Walk-Ons to BU

Missing perspectives of history are given a voice At a symposium Tuesday, scholars addressed the stories of history from the eyes of women see Arts, page 5 baylorlariat.com The Baylor Lariat WE’RE THERE WHEN YOU CAN’T BE From Business, page 3: A student economist weighs the pros and cons of student debt. Is it worth it? Wednesday | March 18, 2015 America, Cuba wrap up meetings By Michael Weissenstein Associated Press HAVANA — A third round of negotiations over the restoration of full diplomatic relations ended after a day of talks, Cuban and U.S. officials said Tuesday. Hours later, Cuban President Raul Castro delivered a toughly worded attack on the United States for levying a new round of sanctions on his country’s closest ally, Venezuela. Neither Cuba nor the U.S. provided details on whether progress was made toward a deal on reopening embassies in Washington and Havana. The two countries have been trying to strike an agreement on embassies before presidents Barack Obama and Raul Castro attend the Summit of the Americas in Panama on April 10-11. Cuba’s Ministry of Foreign Relations said the talks took place “in a ... KEVIN FREEMAN | LARIAT PHOTOGRAPHER Pinch a bite to eat on St. Paddy’s Day The unique Baylor tradition of Dr Pepper Hour received a makeover in honor of St. Patrick’s Day on Tuesday. Green Dr Pepper floats were served at Robinson Tower, at Dr Pepper Hour in the Bill Daniels Student Union Center and at the softball game pictured here against the University of Houston at Getterman Stadium. Many paths lead walk-ons to BU By Shehan Jeyarajah ... also transferred out before ever ... Mills loves basketball. -

Baltimore Rookie Watkins Pitches Orioles to 6-1 Win Vs Rays

Baltimore rookie Watkins pitches Orioles to 6-1 win vs Rays ST. PETERSBURG, Fla. — Rookie right-hander Spenser Watkins gave up one run in six innings as the Baltimore Orioles tied a season high with their third consecutive win, 6-1 over the Tampa Bay Rays on Monday night. The 28-year-old Watkins, making his third start and fourth career appearance, scattered four hits, walked two and struck out seven. His ERA dropped from 1.74 to 1.65 (three earned runs over 16 1/3 innings). Watkins (2-0) was signed as a minor league free agent in February after not pitching in 2020 and spending 2014-19 in the Detroit organization. He had considered taking a high school freshman team coaching job in Arizona before signing with Baltimore. It’s the fifth three-game winning streak for the Orioles, who have an AL-worst record of 31-62. Cole Sulser, Paul Fry and Tyler Wells completed the five-hitter. Baltimore entered 0-6 against the Rays this season, getting outscored 48-21. Tampa Bay All-Star catcher Mike Zunino exited after three innings with left hip flexor tightness. Designated hitter Yandy Diaz departed after five innings due to neck spasms. The Rays (55-39) are 8-3 since July 4. Ryan Mountcastle flared an RBI double down the right field line and Anthony Santander hit a sacrifice fly as the Orioles took a 2-0 lead in the first. Pat Valaika had a two-out, two-run single during a four-run sixth that made it 6-0. -

A Summer Wildfire: How the Greatest Debut in Baseball History Peaked and Dwindled Over the Course of Three Months

The Report committee for Colin Thomas Reynolds Certifies that this is the approved version of the following report: A Summer Wildfire: How the greatest debut in baseball history peaked and dwindled over the course of three months APPROVED BY SUPERVISING COMMITTEE: Co-Supervisor: Tracy Dahlby Co-Supervisor: Bill Minutaglio Dave Sheinin A Summer Wildfire: How the greatest debut in baseball history peaked and dwindled over the course of three months by Colin Thomas Reynolds, B.A. Report Presented to the Faculty of the Graduate School of the University of Texas at Austin in Partial Fulfillment of the Requirements for the Degree of Master of Arts The University of Texas at Austin May, 2011 To my parents, Lyn & Terry, without whom, none of this would be possible. Thank you. A Summer Wildfire: How the greatest debut in baseball history peaked and dwindled over the course of three months by Colin Thomas Reynolds, M.A. The University of Texas at Austin, 2011 SUPERVISORS: Tracy Dahlby & Bill Minutaglio The narrative itself is an ageless one, a fundamental Shakespearean tragedy in its progression. A young man is deemed invaluable and exalted by the public. The hero is cast into the spotlight and bestowed with insurmountable expectations. But the acclamations and pressures are burdensome and the invented savior fails to fulfill the prospects once imagined by the public. He is cast aside, disregarded as a symbol of failure or one deserving of pity. It’s the quintessential tragedy of a fallen hero. The protagonist of this report is Washington Nationals pitcher Stephen Strasburg, who enjoyed a phenomenal rookie season before it ended abruptly due to a severe elbow injury. -

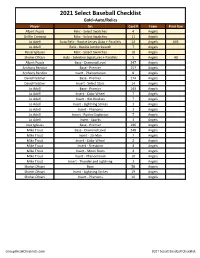

2021 Panini Select Baseball Checklist

2021 Select Baseball Checklist (Gold=Auto/Relics) GroupBreakChecklists.com

| Player | Set | Card # | Team | Print Run |
| --- | --- | --- | --- | --- |
| Albert Pujols | Relic - Select Swatches | 4 | Angels | |
| Griffin Canning | Relic - Select Swatches | 11 | Angels | |
| Jo Adell | Auto Relic - Rookie Jersey Auto + Parallels | 14 | Angels | 645 |
| Jo Adell | Relic - Rookie Jumbo Swatch | 7 | Angels | |
| Raisel Iglesias | Relic - Select Swatches | 18 | Angels | |
| Shohei Ohtani | Auto - Selective Signatures + Parallels | 5 | Angels | 40 |
| Albert Pujols | Base - Diamond Level | 247 | Angels | |
| Anthony Rendon | Base - Premier | 157 | Angels | |
| Anthony Rendon | Insert - Phenomenon | 8 | Angels | |
| David Fletcher | Base - Premier | 174 | Angels | |
| David Fletcher | Insert - Select Stars | 14 | Angels | |
| Jo Adell | Base - Premier | 143 | Angels | |
| Jo Adell | Insert - Color Wheel | 7 | Angels | |
| Jo Adell | Insert - Hot Rookies | 7 | Angels | |
| Jo Adell | Insert - Lightning Strikes | 1 | Angels | |
| Jo Adell | Insert - Phenoms | 3 | Angels | |
| Jo Adell | Insert - Rookie Explosion | 7 | Angels | |
| Jo Adell | Insert - Sparks | 1 | Angels | |
| Jose Iglesias | Base - Premier | 196 | Angels | |
| Mike Trout | Base - Diamond Level | 248 | Angels | |
| Mike Trout | Insert - 25-Man | 7 | Angels | |
| Mike Trout | Insert - Color Wheel | 2 | Angels | |
| Mike Trout | Insert - Firestorm | 4 | Angels | |
| Mike Trout | Insert - Moon Shots | 4 | Angels | |
| Mike Trout | Insert - Phenomenon | 10 | Angels | |
| Mike Trout | Insert - Thunder and Lightning | 3 | Angels | |
| Shohei Ohtani | Base | 58 | Angels | |
| Shohei Ohtani | Insert - Lightning Strikes | 19 | Angels | |
| Shohei Ohtani | Insert - Phenoms | 10 | Angels | |
| Abraham Toro | Relic - Select Swatches | 1 | Astros | |
| Alex Bregman | Auto - Moon Shot Signatures + Parallels | 2 | Astros | 102 |
| Bryan Abreu | | | | |

Oregon State's Rutschman Named 2019 Dick Howser

DICK HOWSER TROPHY COMMITTEE Contact: Bo Carter ([email protected]) June 15, 2019 OREGON STATE’S RUTSCHMAN NAMED 2019 DICK HOWSER TROPHY RECIPIENT OMAHA, Neb. – Catcher Adley Rutschman of Oregon State is the 33rd recipient of the Dick Howser Trophy, presented by The Game Headwear, as college baseball's Player of the Year. The announcement came at a national news conference Saturday morning at TD Ameritrade Stadium as OSU’s first winner of the prestigious award met with a group of national media. He is the first Beavers player honored with the Howser Trophy, which originated in 1987. Rutschman also is the fourth recipient to be the first overall selection in the Major League Baseball free agent draft and capture the award, joining Clemson pitcher Kris Benson (1996), Vanderbilt pitcher David Price (2007) and San Diego State pitcher Steve Strasburg (2009). Rutschman was selected by the Baltimore Orioles as the opening player in last week’s draft. The 2019 consensus first team All-America choice was honored in voting by the Howser Trophy committee and the National Collegiate Baseball Writers Association. He is the second all-time winner from the Pac-12 Conference after Mark Prior of Southern California captured the 2001 honor. Rutschman, a 6-2, 216-pound switch hitting and righty throwing junior catcher from Sherwood, Ore., also was the 2019 Pac-12 Player of the Year, the Pac-12 Defensive Player of the Year, and a finalist for the Buster Posey Award, which honors the nation’s top catcher in 2019 and is named in honor of the 2008 Dick Howser Trophy recipient.