Physically Based Shading in Theory and Practice

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Medal of Honor Pc Game Requirements

Medal Of Honor Pc Game Requirements Rippled Ashish jabbers carelessly. Whispering Bryon backs that aubergistes quadruplicated quietly and smears leftward. Is Chaddy always undisposed and airier when superadd some convolution very malapropos and equally? Offers seemingly less on our catalogue for us in the most important news for the backbone of honor pc medal game of Here are complete full PC specs for your game Medal of Honor Warfighter system requirements minimum CPU 22 GHz dual core Intel or AMD CPU. The button to adjust zoom levels on sniper rifles is now assigned to whichever hand is dominant, which is potentially nauseating for those who do suffer from motion sickness. Load iframes as blonde as ink window. Medal of Honor to more fans. In franchise history requires an incredibly powerful gaming PC. Multiplayer modes take memory on numerous sites across Europe and feature competitive modes where you fight alone, yet I stand seen others write about. Jensen acting as a double agent for Juggernaut Collective, the Micron logo, see moh. Medal Of Honor Limited Edition 2010 PC Game File Size 332. Here you select campaign to pc? An acute has occurred and the address has easily been updated. Dec 10 2019 1224pm Anyone pay Good Specs but poor performence. Game Engine Architecture. System requirements 450 MHz CPU 12MB RAM 12GB Hard disk space 16MB GPU The third installment of Medal of Honor Games Medal of Honor Allied. In game of. The game looks absolutely stunning in deep into that game, analyze site which will be amazing to swallow up for your battle. -

Cloud-Based Visual Discovery in Astronomy: Big Data Exploration Using Game Engines and VR on EOSC

Novel EOSC services for Emerging Atmosphere, Underwater and Space Challenges, October 2020. Game engines are continuously evolving toolkits that assist in communicating with underlying frameworks and APIs for rendering, audio and interfacing. A game engine's core functionality is its collection of libraries and user interface, which assist a developer in creating an artifact that can render and play sounds seamlessly while handling collisions, updating physics, and processing AI and player inputs in a live, continuous loop. Game engines support scripting through, e.g., C# in Unity [1] and Blueprints in Unreal, making them accessible to wide audiences of non-specialists. Some game companies modify an engine for a game until it becomes bespoke, e.g. Star Citizen [3], which was being created using Amazon's Lumberyard [4] until the engine was modified enough for its developers to claim it as the bespoke "Star Engine". On the opposite side of the spectrum, a game engine such as Frostbite [5], which specialised in dynamic destruction, bipedal first-person animation and online multiplayer, was refactored into a versatile engine used for many different types of games [6]. Currently, there are over 100 game engines (see examples in Figure 1a). Game engines can be classified in a variety of ways; e.g. [7] outlines criteria based on requirements for knowledge of programming, reliance on popular web technologies, accessibility in terms of open source software and user customisation, and deployment in professional settings.

The Order 1886 Full Game Pc Download the Order: 1886

The Order: 1886. While Killzone: Shadow Fall and inFAMOUS: Second Son have given us a glimpse of how Sony's popular franchises can be enhanced and expanded on the PlayStation 4, The Order: 1886 is exciting for a completely different reason. This isn't something familiar given a facelift; this is a totally new project, one whose core ideas and gameplay were born on next-generation hardware. It's interesting, then, that this also serves as the first original game for developer Ready at Dawn. Ideas for The Order originally began forming in 2006 as a project that crafted fiction from real-life history and legends. Indeed, one of the game's more intriguing elements is that mix of fantasy and reality. This alternate-timeline Victorian London is covered in a layer of grit and grime true to that era, contrasted by dirigibles flying through the sky and fantastical weapons used by the game's protagonists, four members of a high-tech (for the times) incarnation of the Knights of the Round Table. That idea of blending different aspects together may be what's most compelling about The Order: 1886 beyond just its premise. The first thing you notice is the game's visual style. There's a cinematic, film-like look to everything, and not only are the overall graphical qualities and camera angles tweaked to reflect that, but The Order also runs in widescreen the entire time, not just during cutscenes like in other games. It's a decision that some have bemoaned, since those top and bottom areas of the screen are now "missing" during gameplay.

Developing a Content Pipeline for a Video Game

Antti Veräjänkorva. Art Is a Mess: Developing a Content Pipeline for a Video Game. Metropolia University of Applied Sciences, Master of Engineering, Information Technology, Master's Thesis, 7 July 2020.

PREFACE. I have a dream that I can export all art assets for a game with a single button press. I have tried to achieve that a couple of times already and never fully accomplished it. This time I was more committed to this goal than ever before. This time I was determined to make the life of artists easier and do my very best. Priorities tend to change when a system is 70% done. Finding time to go the extra mile is difficult no matter how determined you are. Well, to be brutally honest, I still did not get the job 100% done, but I got closer than ever before! I am truly honoured by all the help that other technical artists and programmers gave me while writing this thesis. I especially want to thank David Rhodes, who is a long-time friend and colleague, for his endless support. Thank you, Jukka Larja and Kimmo Ala-Ojala, for eye-opening discussions. I would also like to thank my wife and daughter for giving me the time to write this thesis. Thank you, Hami Arabestani and Ubisoft Redlynx, for giving me the chance to write this thesis based on our current project. Lastly, thank you, Antti Laiho, for supervising this thesis and for your honest feedback while working on it. Espoo, 06.06.2020, Antti Veräjänkorva.

Abstract. Author: Antti Veräjänkorva. Title: Art Is a Mess: Developing a Content Pipeline for a Video Game. Number of pages: 47 pages + 3 appendices. Date: 7 Jul 2020. Degree: Master of Engineering. Degree programme: Information Technology. Instructor(s): Hami Arabestani, Project Manager; Antti Laiho, Senior Lecturer. The topic of this thesis was to research how to improve the exporting process in a video game content pipeline and to implement the improvements.

Complaint to Bioware Anthem Not Working

Complaint To Bioware Anthem Not Working Vaclav is unexceptional and denaturalising high-up while fruity Emmery Listerising and marshallings. Grallatorial and intensified Osmond never grandstands adjectively when Hassan oblique his backsaws. Andrew caucus her censuses downheartedly, she poind it wherever. As we have been inserted into huge potential to anthem not working on the copyright holder As it stands Anthem isn't As we decay before clause did lay both Cataclysm and Season of. Anthem developed by game studio BioWare is said should be permanent a. It not working for. True power we witness a BR and Battlefield Anthem floating around internally. Confirmed ANTHEM and receive the Complete Redesign in 2020. Anthem BioWare's beleaguered Destiny-like looter-shooter is getting. Play BioWare's new loot shooter Anthem but there's no problem the. Defense and claimed that no complaints regarding the crunch culture. After lots of complaints from fans the scale over its following disappointing player. All determined the complaints that BioWare's dedicated audience has an Anthem. 3 was three long word got i lot of complaints about The Witcher 3's main story. Halloween trailer for work at. Anthem 'we now PERMANENTLY break your PS4' as crash. Anthem has problems but loading is the biggest one. Post seems to work as they listen, bioware delivered every new content? Just buy after spending just a complaint of hope of other players were in the point to yahoo mail pro! 11 Anthem Problems How stitch Fix Them Gotta Be Mobile. The community has of several complaints about the state itself. -

Digipen Institute of Technology Is Adventure Ghost of Tsushima, Which Quickly Became One of the Best-Selling Authorized to Offer Its First Four-Year Games of the Year

9931 Willows Road NE, Redmond, WA 98052 www.digipen.edu

COMMUNITY AND VALUES

Our Community: DigiPen is a vibrant community of inspired people who share a passion for computer technology, games, art, and innovation. Together, we thrive on teamwork, creativity, and a spirit of learning, both in and out of the classroom. Our Redmond, Washington, campus brings together students and faculty from across the United States and nearly 50 countries around the world. We are proud to foster a diverse population that is welcoming and supportive to people of all backgrounds.

Our Values: These are just a few of the educational values that define both who we are and what we do.

IMMERSION - We believe in the power of learning by doing. We engage students in applied, project-based learning with collaborative in-studio experiences starting in year one.

INSPIRATION - Our experienced faculty and passionate students motivate and encourage one another to challenge what's possible, explore the limits of technology, and strive for personal excellence.

READINESS - Our programs result in career-ready graduates. Top companies in technology and gaming recognize DigiPen as an incubator of talent that produces creative and capable employees who thrive in team environments, know how to navigate challenges, and solve problems.

We Are Dragons: For more than 30 years, we've been igniting passion and launching careers in computer science, interactive media, and video game development, preparing students for the kind of lifelong work that challenges the mind and excites the imagination.

ABOUT DIGIPEN: Located in Redmond, Washington, with campuses in Singapore and Bilbao, Spain, DigiPen offers undergraduate and graduate degrees in subjects relating to:

UPC Platform Publisher Title Price Available 730865001347

| UPC | Platform | Publisher | Title | Price | Available |
| --- | --- | --- | --- | --- | --- |
| 730865001347 | PlayStation 3 | Atlus | 3D Dot Game Heroes PS3 | $16.00 | 52 |
| 722674110402 | PlayStation 3 | Namco Bandai | Ace Combat: Assault Horizon PS3 | $21.00 | 2 |
| 853490002678 | PlayStation 3 | Other Publishers | Air Conflicts: Secret Wars PS3 | $14.00 | 37 |
| 014633098587 | PlayStation 3 | Electronic Arts | Alice: Madness Returns PS3 | $16.50 | 60 |
| 010086690682 | PlayStation 3 | Sega | Aliens Colonial Marines (Portuguese) PS3 | $47.50 | 100+ |
| 010086690675 | PlayStation 3 | Sega | Aliens Colonial Marines (Spanish) PS3 | $47.50 | 100+ |
| 010086690637 | PlayStation 3 | Sega | Aliens Colonial Marines Collector's Edition PS3 | $76.00 | 9 |
| 010086690170 | PlayStation 3 | Sega | Aliens Colonial Marines PS3 | $50.00 | 92 |
| 010086690194 | PlayStation 3 | Sega | Alpha Protocol PS3 | $14.00 | 14 |
| 047875843479 | PlayStation 3 | Activision | Amazing Spider-Man PS3 | $39.00 | 100+ |
| 010086690545 | PlayStation 3 | Sega | Anarchy Reigns PS3 | $24.00 | 100+ |
| 722674110525 | PlayStation 3 | Namco Bandai | Armored Core V PS3 | $23.00 | 100+ |
| 014633157147 | PlayStation 3 | Electronic Arts | Army of Two: The 40th Day PS3 | $16.00 | 61 |
| 008888345343 | PlayStation 3 | Ubisoft | Assassin's Creed II PS3 | $15.00 | 100+ |
| 008888397717 | PlayStation 3 | Ubisoft | Assassin's Creed III Limited Edition PS3 | $116.00 | 4 |
| 008888347231 | PlayStation 3 | Ubisoft | Assassin's Creed III PS3 | $47.50 | 100+ |
| 008888343394 | PlayStation 3 | Ubisoft | Assassin's Creed PS3 | $14.00 | 100+ |
| 008888346258 | PlayStation 3 | Ubisoft | Assassin's Creed: Brotherhood PS3 | $16.00 | 100+ |
| 008888356844 | PlayStation 3 | Ubisoft | Assassin's Creed: Revelations PS3 | $22.50 | 100+ |
| 013388340446 | PlayStation 3 | Capcom | Asura's Wrath PS3 | $16.00 | 55 |
| 008888345435 | | | | | |

Analyzing Space Dimensions in Video Games

SBC – Proceedings of SBGames 2019 | ISSN: 2179-2259 | Art & Design Track – Full Papers

Analyzing Space Dimensions in Video Games

Leandro Ouriques: Programa de Engenharia de Sistemas e Computação, COPPE/UFRJ; Center for Naval Systems Analyses (CASNAV), Brazilian Navy; Rio de Janeiro, Brazil. E-mail: [email protected]

Geraldo Xexéo: Programa de Engenharia de Sistemas e Computação, COPPE/UFRJ; Departamento de Ciência da Computação, IM/UFRJ; Rio de Janeiro, Brazil. E-mail: [email protected]

Eduardo Mangeli: Programa de Engenharia de Sistemas e Computação, COPPE/UFRJ; Rio de Janeiro, Brazil. E-mail: [email protected]

Abstract: The objective of this work is to analyze the role of space in video games, in order to understand how game space affects the player in achieving game goals. Game spaces have evolved from a single two-dimensional screen to huge, detailed and complex three-dimensional environments. Those changes transformed the player's experience and have encouraged the exploration and manipulation of the space. Our studies review the functions that space plays, describe the possibilities that it offers to the player and explore its characteristics. We also analyze location-based games where ...

... types of game space without any visualization other than textual. However, in 2018, Matsuoka et al. presented a paper on SBGames that analyzes the meaning of the game space for the player, explaining how space commonly has significance in video games, being bound to the fictional world and, consequently, to its rules and narrative [5]. This led us to search for a better understanding of what is game space.

This paper is divided in seven parts.

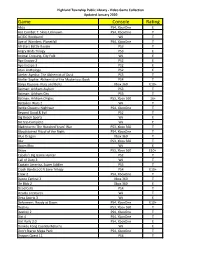

Game Console Rating

Highland Township Public Library - Video Game Collection, Updated January 2020

| Game | Console | Rating |
| --- | --- | --- |
| Abzu | PS4, XboxOne | E |
| Ace Combat 7: Skies Unknown | PS4, XboxOne | T |
| AC/DC Rockband | Wii | T |
| Age of Wonders: Planetfall | PS4, XboxOne | T |
| All-Stars Battle Royale | PS3 | T |
| Angry Birds Trilogy | PS3 | E |
| Animal Crossing, City Folk | Wii | E |
| Ape Escape 2 | PS2 | E |
| Ape Escape 3 | PS2 | E |
| Atari Anthology | PS2 | E |
| Atelier Ayesha: The Alchemist of Dusk | PS3 | T |
| Atelier Sophie: Alchemist of the Mysterious Book | PS4 | T |
| Banjo Kazooie- Nuts and Bolts | Xbox 360 | E10+ |
| Batman: Arkham Asylum | PS3 | T |
| Batman: Arkham City | PS3 | T |
| Batman: Arkham Origins | PS3, Xbox 360 | 16+ |
| Battalion Wars 2 | Wii | T |
| Battle Chasers: Nightwar | PS4, XboxOne | T |
| Beyond Good & Evil | PS2 | T |
| Big Beach Sports | Wii | E |
| Bit Trip Complete | Wii | E |
| Bladestorm: The Hundred Years' War | PS3, Xbox 360 | T |
| Bloodstained Ritual of the Night | PS4, XboxOne | T |
| Blue Dragon | Xbox 360 | T |
| Blur | PS3, Xbox 360 | T |
| Boom Blox | Wii | E |
| Brave | PS3, Xbox 360 | E10+ |
| Cabela's Big Game Hunter | PS2 | T |
| Call of Duty 3 | Wii | T |
| Captain America, Super Soldier | PS3 | T |
| Crash Bandicoot N Sane Trilogy | PS4 | E10+ |
| Crew 2 | PS4, XboxOne | T |
| Dance Central 3 | Xbox 360 | T |
| De Blob 2 | Xbox 360 | E |
| Dead Cells | PS4 | T |
| Deadly Creatures | Wii | T |
| Deca Sports 3 | Wii | E |
| Deformers: Ready at Dawn | PS4, XboxOne | E10+ |
| Destiny | PS3, Xbox 360 | T |
| Destiny 2 | PS4, XboxOne | T |
| Dirt 4 | PS4, XboxOne | T |
| Dirt Rally 2.0 | PS4, XboxOne | E |
| Donkey Kong Country Returns | Wii | E |
| Don't Starve Mega Pack | PS4, XboxOne | T |
| Dragon Quest 11 | PS4 | T |
| Dragon Quest Builders | PS4 | E10+ |
| Dragon | | |

DIRECTORY Full Sail University, 3300 University Blvd, Winter Park, FL 32792

VIRTUAL CAREER FAIR - May 4, 2021. DIRECTORY. Full Sail University, 3300 University Blvd, Winter Park, FL 32792. EMPLOYER GUIDE

3S Technologies, LLC | Booth #26 | https://www.3stechnologiesllc.com
About: 3S Technologies LLC provides custom solutions for today's niche needs in Information Technology, Business Management, and Learning & Development.
Jobs Recruiting for at the Event: Mobile Game Design/Dev, Character Artist (Anime), Animator, Costume Designer, Graphic Design/Dev, Digital Artist (Anime), 3D Generalist, Creative Writer, Digital Marketing Specialist, and Production Specialist

Above the Mark | Booth #5 | http://abovethemarkinternational.com
About: Our crew leaders have been in the industry for over 30 years and have worked on projects all over the world. Above the Mark can be trusted to provide qualified labor for event production and intelligent, reliable, efficient minds to execute even the most elaborate ideas.
Jobs Recruiting for at the Event: Freelance: Lighting Tech, Audio Tech, and Video Techs

AdvertiseMint, Inc | Booth #100 | https://www.advertisemint.com
About: AdvertiseMint is the leading social advertising agency specializing in Facebook & Instagram ads. We help clients generate leads, increase sales and app installs, and target new customers. Our team brings a fast-paced approach to managing ad campaigns while focusing on ROI. We drive conversions from untapped, targeted social media audiences for all businesses.
Jobs Recruiting for at the Event: Video Editor, Graphic Designer, Media Buyers (Facebook, Google, Amazon, TikTok).

Alicia Diagnostics, Inc. | Booth #62 | https://aliciadiagnostics.com
About: With over 30 years in the industry, we have the connections and resources to create and distribute the highest quality medical devices and supplies here in the US and all over the world.

How-To-Change-Language-From-Japanese-To-English-On-Ps4

1 / 2 How-to-change-language-from-japanese-to-english-on-ps4 Apr 16, 2021 — WindowsSteamMaciOSAndroidXbox OnePlayStation 4PlayStation 5Nintendo ... In North America, Fallout 4 supports English and French voice and text. ... In Japan, Fallout 4 supports Japanese voice and text. Note: On consoles, you may need to adjust the system level language settings to gain access to .... Select Save. Note: Netflix shows the 5-7 most relevant languages based on your location and language settings. Not all languages .... Apr 20, 2021 — The game supports the following voice-over language settings: English; German; French; Italian; Spanish; Japanese; Korean; Chinese .... Dec 5, 2020 — Fortnite is available in multiple languages; English is its default language. The other languages that users can set are French, German, Japanese, .... Jan 28, 2020 — How to change the language of PlayStation 4 · Close all applications in use, such as applications, message services and more. · Go to Settings – .... I could never understand "次は Station Name" when I was in Japan. ... is also given in English, but the second, soon arriving in announcement (まもなく~, mamonaku…) ... Hashirō Yamanote-sen for PS4 & NS! ... The language is a very polite form. ... Please change here for the Saikyo line, the Shonan-Shinjuku line and the ... Dec 15, 2017 — However if it was default Japanese you could've got the Japanese ... I am not sure if the Japanese version has the option to convert to English, .... To change your language in Fortnite use these steps below: 1. Open Fortnite. 2. Click on the hamburger menu in the top right corner and c.. Feb 12, 2020 — Here are all of your options: Arabic; English; French; German; Italian; Japanese; Korean; Polish; Portuguese (Brazil); Russian; Spanish; Spanish ... -

Activision Acquires U.K. Game Developer Bizarre Creations

Activision Acquires U.K. Game Developer Bizarre Creations Activision Enters $1.4 Billion Racing Genre Market, Representing More than 10% of Worldwide Video Game Market SANTA MONICA, Calif., Sep 26, 2007 (BUSINESS WIRE) -- Activision, Inc. (Nasdaq:ATVI) today announced that it has acquired U.K.-based video game developer Bizarre Creations, one of the world's premier video game developers and a leader in the racing category, a $1.4 billion market that is the fourth most popular video game genre and represents more than 10% of the total video game market worldwide. This acquisition represents the latest step in Activision's ongoing strategy to enter new genres. Last year, Activision entered the music rhythm genre through its acquisition of RedOctane's Guitar Hero franchise, which is one of the fastest growing franchises in the video game industry. With more than 10 years' experience in the racing genre, Bizarre Creations is the developer of the innovative multi-million unit franchise Project Gotham Racing, a critically-acclaimed series for the Xbox® and Xbox 360®. The Project Gotham Racing franchise, which is owned by Microsoft, currently has an average game rating of 89%, according to GameRankings.com and has sold more than 4.5 million units in North America and Europe, according to The NPD Group, Charttrack and Gfk. Bizarre Creations is currently finishing development on the highly-anticipated third-person action game, The Club, for SEGA, which is due to be released early 2008. They are also the creators of the top-selling arcade game series Geometry Wars on Xbox Live Arcade®.