A Novel Logistic Regression Model Combining Semi-Supervised

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Supplemental Materials Supplemental Table 1

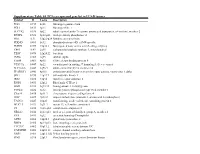

Electronic Supplementary Material (ESI) for RSC Advances. This journal is © The Royal Society of Chemistry 2016 Supplemental Materials Supplemental Table 1. The differentially expressed proteins from rat pancreas identified by proteomics (SAP vs. SO) No. Protein name Gene name ratio P value 1 Metallothionein Mt1m 3.35 6.34E-07 2 Neutrophil antibiotic peptide NP-2 Defa 3.3 8.39E-07 3 Ilf2 protein Ilf2 3.18 1.75E-06 4 Numb isoform o/o rCG 3.12 2.73E-06 5 Lysozyme Lyz2 3.01 5.63E-06 6 Glucagon Gcg 2.89 1.17E-05 7 Serine protease HTRA1 Htra1 2.75 2.97E-05 8 Alpha 2 macroglobulin cardiac isoform (Fragment) 2.75 2.97E-05 9 Myosin IF (Predicted) Myo1f 2.65 5.53E-05 10 Neuroendocrine secretory protein 55 Gnas 2.61 7.60E-05 11 Matrix metallopeptidase 8 Mmp8 2.57 9.47E-05 12 Protein Tnks1bp1 Tnks1bp1 2.53 1.22E-04 13 Alpha-parvin Parva 2.47 1.78E-04 14 C4b-binding protein alpha chain C4bpa 2.42 2.53E-04 15 Protein KTI12 homolog Kti12 2.41 2.74E-04 16 Protein Rab11fip5 Rab11fip5 2.41 2.84E-04 17 Protein Mcpt1l3 Mcpt1l3 2.33 4.43E-04 18 Phospholipase B-like 1 Plbd1 2.33 4.76E-04 Aldehyde dehydrogenase (NAD), cytosolic 19 2.32 4.93E-04 (Fragments) 20 Protein Dpy19l2 Dpy19l2 2.3 5.68E-04 21 Regenerating islet-derived 3 alpha, isoform CRA_a Reg3a 2.27 6.74E-04 22 60S acidic ribosomal protein P1 Rplp1 2.26 7.22E-04 23 Serum albumin Alb 2.25 7.98E-04 24 Ribonuclease 4 Rnase4 2.24 8.25E-04 25 Cct-5 protein (Fragment) Cct5 2.24 8.52E-04 26 Protein S100-A9 S100a9 2.22 9.71E-04 27 Creatine kinase M-type Ckm 2.21 1.00E-03 28 Protein Larp4b Larp4b 2.18 1.25E-03 -

Supplementary Table S4. FGA Co-Expressed Gene List in LUAD

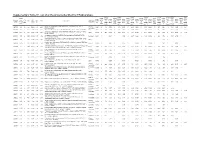

Supplementary Table S4. FGA co-expressed gene list in LUAD tumors Symbol R Locus Description FGG 0.919 4q28 fibrinogen gamma chain FGL1 0.635 8p22 fibrinogen-like 1 SLC7A2 0.536 8p22 solute carrier family 7 (cationic amino acid transporter, y+ system), member 2 DUSP4 0.521 8p12-p11 dual specificity phosphatase 4 HAL 0.51 12q22-q24.1histidine ammonia-lyase PDE4D 0.499 5q12 phosphodiesterase 4D, cAMP-specific FURIN 0.497 15q26.1 furin (paired basic amino acid cleaving enzyme) CPS1 0.49 2q35 carbamoyl-phosphate synthase 1, mitochondrial TESC 0.478 12q24.22 tescalcin INHA 0.465 2q35 inhibin, alpha S100P 0.461 4p16 S100 calcium binding protein P VPS37A 0.447 8p22 vacuolar protein sorting 37 homolog A (S. cerevisiae) SLC16A14 0.447 2q36.3 solute carrier family 16, member 14 PPARGC1A 0.443 4p15.1 peroxisome proliferator-activated receptor gamma, coactivator 1 alpha SIK1 0.435 21q22.3 salt-inducible kinase 1 IRS2 0.434 13q34 insulin receptor substrate 2 RND1 0.433 12q12 Rho family GTPase 1 HGD 0.433 3q13.33 homogentisate 1,2-dioxygenase PTP4A1 0.432 6q12 protein tyrosine phosphatase type IVA, member 1 C8orf4 0.428 8p11.2 chromosome 8 open reading frame 4 DDC 0.427 7p12.2 dopa decarboxylase (aromatic L-amino acid decarboxylase) TACC2 0.427 10q26 transforming, acidic coiled-coil containing protein 2 MUC13 0.422 3q21.2 mucin 13, cell surface associated C5 0.412 9q33-q34 complement component 5 NR4A2 0.412 2q22-q23 nuclear receptor subfamily 4, group A, member 2 EYS 0.411 6q12 eyes shut homolog (Drosophila) GPX2 0.406 14q24.1 glutathione peroxidase -

Open-Angle Glaucoma: from Epidemiology to Molecular Aspects and Anatomical Features

Open-angle glaucoma: from epidemiology to molecular aspects and anatomical features Hendrika Springelkamp Acknowledgements: The research described in this thesis was supported by the NWO Graduate Programme 2010 BOO (022.002.023). The Rotterdam Study and ERF were supported by the Netherlands Organisation of Scientific Research (NWO; 91111025); Erasmus Medical Center and Erasmus University, Rotterdam, The Netherlands; Netherlands Organization for Health Research and Development (ZonMw); UitZicht; the Research Institute for Diseases in the Elderly; the Ministry of Education, Culture and Science; the Ministry for Health, Welfare and Sports; the European Commission (DG XII); the municipality of Rotterdam; the Netherlands Genomics Initiative/NWO; Center for Medical Systems Biology of NGI; Stichting Lijf en Leven; Stichting Oogfonds Nederland; Landelijke Stichting voor Blinden en Slechtzienden; Prof. Dr. Henkes Stichting; Algemene Nederlandse Vereniging ter Voorkoming van Blind- heid; MD Fonds; Glaucoomfonds; Medical Workshop; Heidelberg Engineering; Topcon Europe BV. The generation and management of GWAS genotype data for the Rotterdam Study is supported by the Netherlands Organisation of Scientific Research NWO Investments (nr. 175.010.2005.011, 911-03-012). This study is funded by the Research Institute for Diseases in the Elderly (014-93-015; RIDE2), the Netherlands Genomics Initiative (NGI)/Netherlands Organisation for Scientific Research (NWO) project nr. 050-060-810. The publication of this thesis was financially supported by: Glaucoomfonds, Prof.Dr. Henkes Stichting, Landelijke Stichting voor Blinden en Slecht- zienden, Erasmus MC (Department of Epidemiology), Allergan BV, Bayer B.V., Théa Pharma Cover & lay-out: Jessy Wijgerde Printing: Ridderprint BV ISBN: 9789491462252 © 2016 H. Springelkamp All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means without permission of the author, or, when appro- priate, of the publisher of the publications. -

Modeling and Analysis of Acute Leukemia Using Human Hematopoietic Stem and Progenitor Cells

Modeling and analysis of acute leukemia using human hematopoietic stem and progenitor cells A dissertation submitted to the Graduate School of the University of Cincinnati in partial fulfillment of the requirement for the degree of Doctor of Philosophy in the Molecular &Developmental Biology Graduate Program of the College of Medicine by Shan Lin BS, Tsinghua University, 2009 Dissertation Committee: James C. Mulloy, PhD (Chair) Geraldine Guasch, PhD Ashish R. Kumar, MD, PhD Daniel T. Starczynowski, PhD Yi Zheng, PhD Abstract For decades, elegant mouse models have yielded important insights into the complex biology of acute leukemia development. However, species differences between human and mouse could have significant influences on biological and translational applications. Therefore, human primary hematopoietic cells and xenograft mouse models have become important research tools in the field. In this report, we briefly review the methodologies that use human primary hematopoietic cells to model acute leukemia and examine the effects of leukemic oncogenes. The advantages and limitations of the human model system compared to syngeneic mouse models are discussed. The species-related complexity in human disease modeling is highlighted in the study establishing a faithful model of proB-ALL caused by MLL-AF4, the fusion product of the t(4;11). MLL-AF4 proB-ALL has poor prognosis, the lack of an accurate model hampers the study of disease pathobiology and therapeutic testing. We find human AF4 cDNA inhibits retroviral production and efficient transduction, this limitation can be overcome by fusing MLL with murine Af4, highly conserved with human AF4. Whereas MLL-Af4-transduced murine cells induce only AML, transduced human CD34+ cells produce proB-ALL faithful to t(4;11) disease, fully recapitulating the immunophenotypic and molecular aspects of the disease. -

A Meta-Analysis of the Effects of High-LET Ionizing Radiations in Human Gene Expression

Supplementary Materials A Meta-Analysis of the Effects of High-LET Ionizing Radiations in Human Gene Expression Table S1. Statistically significant DEGs (Adj. p-value < 0.01) derived from meta-analysis for samples irradiated with high doses of HZE particles, collected 6-24 h post-IR not common with any other meta- analysis group. This meta-analysis group consists of 3 DEG lists obtained from DGEA, using a total of 11 control and 11 irradiated samples [Data Series: E-MTAB-5761 and E-MTAB-5754]. Ensembl ID Gene Symbol Gene Description Up-Regulated Genes ↑ (2425) ENSG00000000938 FGR FGR proto-oncogene, Src family tyrosine kinase ENSG00000001036 FUCA2 alpha-L-fucosidase 2 ENSG00000001084 GCLC glutamate-cysteine ligase catalytic subunit ENSG00000001631 KRIT1 KRIT1 ankyrin repeat containing ENSG00000002079 MYH16 myosin heavy chain 16 pseudogene ENSG00000002587 HS3ST1 heparan sulfate-glucosamine 3-sulfotransferase 1 ENSG00000003056 M6PR mannose-6-phosphate receptor, cation dependent ENSG00000004059 ARF5 ADP ribosylation factor 5 ENSG00000004777 ARHGAP33 Rho GTPase activating protein 33 ENSG00000004799 PDK4 pyruvate dehydrogenase kinase 4 ENSG00000004848 ARX aristaless related homeobox ENSG00000005022 SLC25A5 solute carrier family 25 member 5 ENSG00000005108 THSD7A thrombospondin type 1 domain containing 7A ENSG00000005194 CIAPIN1 cytokine induced apoptosis inhibitor 1 ENSG00000005381 MPO myeloperoxidase ENSG00000005486 RHBDD2 rhomboid domain containing 2 ENSG00000005884 ITGA3 integrin subunit alpha 3 ENSG00000006016 CRLF1 cytokine receptor like -

Dynamic Palmitoylation Events Following T-Cell Receptor Signaling

bioRxiv preprint doi: https://doi.org/10.1101/831388; this version posted November 5, 2019. The copyright holder for this preprint (which was not certified by peer review) is the author/funder. All rights reserved. No reuse allowed without permission. Dynamic Palmitoylation Events Following T-Cell Receptor Signaling Eliot Morrison1, Tatjana Wegner1, Andres Ernesto Zucchetti2, Miguel Álvaro-Benito1, Ashley Zheng1, 3 4 5 2 1* Stefanie Kliche , Eberhard Krause , Britta Brügger , Claire Hivroz & Christian Freund 1Freie Universität Berlin, Institute for Chemistry & Biochemistry, Laboratory of Protein Biochemistry, Thielallee 63, 14195 Berlin 2Institut Curie, PSL Research University, INSERM U932, Integrative analysis of T cell activation team, 26 rue d'Ulm, 75248, Paris Cedex 05, France 3Otto-von-Guericke-University, Institute of Molecular and Clinical Immunology, Health Campus Immunology, Infectiology and Inflammation, Leipziger Strasse 44, 39120 Magdeburg, Germany 4Leibniz Institute for Molecular Pharmacology, Mass Spectrometry Unit, Robert-Rössle-Str 10, 13125 Berlin 5Heidelberg University Biochemistry Center (BZH), Im Neuenheimer Feld 328, 69120, Heidelberg, Germany. *Corresponding address: [email protected] Abstract Palmitoylation is the reversible addition of palmitate to cysteine via a thioester linkage. Following stimulation of the T-cell receptor we find a number of proteins are newly palmitoylated, including those involved in vesicle- mediated transport and Ras signal transduction. Among these stimulation-dependent palmitoylation targets are the v-SNARE VAMP7, important for docking of vesicular LAT during TCR signaling, and the largely undescribed palmitoyl acyltransferase DHHC18 that is expressed in two isoforms in T cells. Using our newly developed On- Plate Palmitoylation Assay (OPPA), we show DHHC18 is capable of palmitoylating VAMP7 at Cys183. -

UNIVERSITY of CALIFORNIA RIVERSIDE Quantitative Proteomic

UNIVERSITY OF CALIFORNIA RIVERSIDE Quantitative Proteomic Analysis for Assessing the Mechanisms of Action of Anti-Cancer Drugs and Arsenite A Dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Chemistry by Fan Zhang December 2013 Dissertation Committee: Dr. Yinsheng Wang, Chairperson Dr. Cynthia Larive Dr. Pingyun Feng Copyright by Fan Zhang 2013 The Dissertation of Fan Zhang is approved: Committee Chairperson University of California, Riverside ACKNOWLEDGEMENTS This dissertation can never be completed without the help and support from many people. I owe my appreciation to all those who have made this dissertation possible and because of whom my graduate experience has been one that I will cherish forever. First and foremost, I would like to give my deepest gratitude to my research adviser, Professor Yinsheng Wang, for his valuable guidance and consistent encouragement on all my research projects during my PhD study at UCR. Professor Wang encouraged me not only to grow as a solid chemist and experimentalist, but also as an independent thinker. His diligent and serious research attitude has impressive impact on the maturity of my personality. His patience and support helped me to overcome the obstacles and desperate situations during these past five years. I would say that I could never finish the PhD study without Professor Wang‟s great mentorship. For everything you have done for me, Professor Wang, thank you. I would like to give my appreciation to my committees: Professor Cynthia Larive and Professor Pingyun Feng, for reading my dissertation and providing me their helpful comments; Professor Quan (Jason) Cheng, for teaching me the knowledge in his class; and Professor Jeff Bachant, for some wise advice he gave me on my research. -

Supplementary Table S1. List of All the Proteins Identified by Itraq Analysis

Supplementary Table S1. List of all the proteins identified by iTRAQ analysis. OVSA OVISE OVMA RMG- JHOS4 OVCA OVSA SKOV Seque OVISE OVMA RMG- JHOS4 OVCA OVSA HO/H SKOV # OVISE /HOSE OVMA NA/HO RMG- I/HOS JHOS4 /HOSE OVCA R3/HO HO/H SKOV 3/HOS Accession nce MW calc. Subcellular /HOSE NA/HO I/HOS /HOSE R3/HO HO/H OSE2 3/HOS Pept # AAs Score Protein name /HOSE 2C NA/HO SE2C I/HOS E2C /HOSE 2C R3/HO SE2C OSE2 3/HOS E2C number covera [kDa] pI localization 2C SE2C E2C 2C SE2C OSE2 C E2C ides 2C Variabi SE2C Variabi E2C Variabi 2C Variabi SE2C Variabi C E2C Variabi ge(%) Count Count Count Count Count C Variabi Count lity [%] lity [%] lity [%] lity [%] lity [%] Count lity [%] lity [%] Extended synaptotagmin-2 OS=Homo sapiens GN=ESYT2 PE=1 SV=1 - Plasma A0FGR8 13.9 10 921 102.29 9.26 421.7 1.043 12 19.8 0.758 9 15.3 0.969 11 43.5 1.080 11 27.3 0.800 10 34.7 3.281 12 74.1 1.430 9 38.6 [ESYT2_HUMAN] membrane A0MZ66 3.65 2 631 71.60 5.33 49.5 Shootin-1 OS=Homo sapiens GN=KIAA1598 PE=1 SV=4 - [SHOT1_HUMAN] Other 2.515 3 33.6 1.495 3 13.1 1.985 2 56.9 1.684 3 5.4 1.561 3 31.4 1.881 3 22.2 2.783 3 32.1 Acetolactate synthase-like protein OS=Homo sapiens GN=ILVBL PE=1 SV=2 - A1L0T0 10.13 5 632 67.82 8.15 231.2 Other 0.820 4 20.3 0.675 4 37.0 0.833 4 37.6 0.880 4 44.3 0.789 4 37.8 3.677 4 78.5 0.929 4 13.0 [ILVBL_HUMAN] Uncharacterized protein KIAA0564 OS=Homo sapiens GN=KIAA0564 PE=1 A3KMH1 1.15 2 1905 214.69 7.40 30.9 Secreted 0.934 1 0.661 1 1.650 2 28.7 1.928 2 18.0 0.949 2 38.8 1.748 2 10.7 2.450 1 SV=2 - [K0564_HUMAN] WD repeat-containing protein -

Computational and Experimental Approaches for Evaluating the Genetic Basis of Mitochondrial Disorders

Computational and Experimental Approaches For Evaluating the Genetic Basis of Mitochondrial Disorders The Harvard community has made this article openly available. Please share how this access benefits you. Your story matters. Lieber, Daniel Solomon. 2013. Computational and Experimental Citation Approaches For Evaluating the Genetic Basis of Mitochondrial Disorders. Doctoral dissertation, Harvard University. Accessed April 17, 2018 4:10:16 PM EDT Citable Link http://nrs.harvard.edu/urn-3:HUL.InstRepos:11158264 This article was downloaded from Harvard University's DASH Terms of Use repository, and is made available under the terms and conditions applicable to Other Posted Material, as set forth at http://nrs.harvard.edu/urn-3:HUL.InstRepos:dash.current.terms-of- use#LAA (Article begins on next page) Computational and Experimental Approaches For Evaluating the Genetic Basis of Mitochondrial Disorders A dissertation presented by Daniel Solomon Lieber to The Committee on Higher Degrees in Systems Biology in partial fulfillment of the requirements for the degree of Doctor of Philosophy in the subject of Systems Biology Harvard University Cambridge, Massachusetts April 2013 © 2013 - Daniel Solomon Lieber All rights reserved. Dissertation Adviser: Professor Vamsi K. Mootha Daniel Solomon Lieber Computational and Experimental Approaches For Evaluating the Genetic Basis of Mitochondrial Disorders Abstract Mitochondria are responsible for some of the cell’s most fundamental biological pathways and metabolic processes, including aerobic ATP production by the mitochondrial respiratory chain. In humans, mitochondrial dysfunction can lead to severe disorders of energy metabolism, which are collectively referred to as mitochondrial disorders and affect approximately 1:5,000 individuals. These disorders are clinically heterogeneous and can affect multiple organ systems, often within a single individual. -

Affymetrix Probe ID Gene Symbol 1007 S at DDR1 1494 F At

Affymetrix Probe ID Gene Symbol 1007_s_at DDR1 1494_f_at CYP2A6 1552312_a_at MFAP3 1552368_at CTCFL 1552396_at SPINLW1 /// WFDC6 1552474_a_at GAMT 1552486_s_at LACTB 1552586_at TRPV3 1552619_a_at ANLN 1552628_a_at HERPUD2 1552680_a_at CASC5 1552928_s_at MAP3K7IP3 1552978_a_at SCAMP1 1553099_at TIGD1 1553106_at C5orf24 1553530_a_at ITGB1 1553997_a_at ASPHD1 1554127_s_at MSRB3 1554152_a_at OGDH 1554168_a_at SH3KBP1 1554217_a_at CCDC132 1554279_a_at TRMT2B 1554334_a_at DNAJA4 1554480_a_at ARMC10 1554510_s_at GHITM 1554524_a_at OLFM3 1554600_s_at LMNA 1555021_a_at SCARF1 1555058_a_at LPGAT1 1555197_a_at C21orf58 1555282_a_at PPARGC1B 1555460_a_at SLC39A6 1555559_s_at USP25 1555564_a_at CFI 1555594_a_at MBNL1 1555729_a_at CD209 1555733_s_at AP1S3 1555906_s_at C3orf23 1555945_s_at FAM120A 1555947_at FAM120A 1555950_a_at CD55 1557137_at TMEM17 1557910_at HSP90AB1 1558027_s_at PRKAB2 1558680_s_at PDE1A 1559136_s_at FLJ44451 /// IDS 1559490_at LRCH3 1562378_s_at PROM2 1562443_at RLBP1L2 1563522_at DDX10 /// LOC401533 1563834_a_at C1orf62 1566509_s_at FBXO9 1567214_a_at PNN 1568678_s_at FGFR1OP 1569629_x_atLOC389906 /// LOC441528 /// LOC728687 /// LOC729162 1598_g_at GAS6 /// LOC100133684 200064_at HSP90AB1 200596_s_at EIF3A 200597_at EIF3A 200604_s_at PRKAR1A 200621_at CSRP1 200638_s_at YWHAZ 200640_at YWHAZ 200641_s_at YWHAZ 200702_s_at DDX24 200742_s_at TPP1 200747_s_at NUMA1 200762_at DPYSL2 200872_at S100A10 200878_at EPAS1 200931_s_at VCL 200965_s_at ABLIM1 200998_s_at CKAP4 201019_s_at EIF1AP1 /// EIF1AX 201028_s_at CD99 201036_s_at HADH -

Genomic Determinants of Protein Abundance Variation in Colorectal

bioRxiv preprint doi: https://doi.org/10.1101/092767; this version posted December 9, 2016. The copyright holder for this preprint (which was not certified by peer review) is the author/funder. All rights reserved. No reuse allowed without permission. 1 Genomic determinants of protein abundance 2 variation in colorectal cancer cells 3 Theodoros I. Roumeliotis1,*,#, Steven Paul Williams1,*, Emanuel Gonçalves2, 4 Fatemeh Zamanzad Ghavidel2, Nanne Aben4, Magali Michaut4, Michael Schubert2, 5 James C. Wright1, Mi Yang3, Clara Alsinet1, Rodrigo Dienstmann6,7, Justin Guinney6, 6 Pedro Beltrao2, Alvis Brazma2, Oliver Stegle2, David J. Adams1, Lodewyk 7 Wessels4,5,†, Julio Saez-Rodriguez2,3, †, Ultan McDermott1,†, Jyoti S. Choudhary1,†,# 8 1Wellcome Trust Sanger Institute, Wellcome Trust Genome Campus, Hinxton, Cambridgeshire CB10 1SA, UK. 9 2European Molecular Biology Laboratory, European Bioinformatics Institute, Wellcome Trust Genome Campus, Cambridge 10 CB10 1SA, UK. 11 3RWTH Aachen University, Faculty of Medicine, Joint Research Center for Computational Biomedicine, Aachen, Germany. 12 4Computational Cancer Biology, The Netherlands Cancer Institute, Amsterdam, The Netherlands. 13 5Faculty of EEMCS, Delft University of Technology, Delft, The Netherlands. 14 6Computational Oncology, Sage Bionetworks, Fred Hutchinson Cancer Research Center, Seattle, USA. 15 7Oncology Data Science, Vall d'Hebron Institute of Oncology, Barcelona, Spain. 16 17 *Co-first author 18 †Co-senior author 19 #Corresponding author 20 [email protected] (J.S.C.), 21 [email protected] (T.I.R.) (lead contact) 1 bioRxiv preprint doi: https://doi.org/10.1101/092767; this version posted December 9, 2016. The copyright holder for this preprint (which was not certified by peer review) is the author/funder. -

An in Silico Argument for Mitochondrial Microrna As a Determinant Of

www.nature.com/scientificreports OPEN An in silico argument for mitochondrial microRNA as a determinant of primary non Received: 31 August 2017 Accepted: 29 January 2018 function in liver transplantation Published: xx xx xxxx Shirin Elizabeth Khorsandi , Siamak Salehi, Miriam Cortes, Hector Vilca-Melendez, Krishna Menon, Parthi Srinivasan, Andreas Prachalias, Wayel Jassem & Nigel Heaton Mitochondria have their own genomic, transcriptomic and proteomic machinery but are unable to be autonomous, needing both nuclear and mitochondrial genomes. The aim of this work was to use computational biology to explore the involvement of Mitochondrial microRNAs (MitomiRs) and their interactions with the mitochondrial proteome in a clinical model of primary non function (PNF) of the donor after cardiac death (DCD) liver. Archival array data on the diferential expression of miRNA in DCD PNF was re-analyzed using a number of publically available computational algorithms. 10 MitomiRs were identifed of importance in DCD PNF, 7 with predicted interaction of their seed sequence with the mitochondrial transcriptome that included both coding, and non coding areas of the hypervariability region 1 (HVR1) and control region. Considering miRNA regulation of the nuclear encoded mitochondrial proteome, 7 hypothetical small proteins were identifed with homolog function that ranged from co-factor for formation of ATP Synthase, REDOX balance and an importin/exportin protein. In silico, unconventional seed interactions, both non canonical and alternative seed sites, appear to be of greater importance in MitomiR regulation of the mitochondrial genome. Additionally, a number of novel small proteins of relevance in transplantation have been identifed which need further characterization. Cold preservation has been the mainstay of donor liver maintenance in transplantation for the past 50 years.